Convolutional neural network IP core based on FPGA

Applied to the design of convolutional neural network IP cores, this convolutional neural network and convolutional-layer technology addresses problems such as long convolution computation latency, limited peak computing performance, and few pipelines.

Embodiment Construction

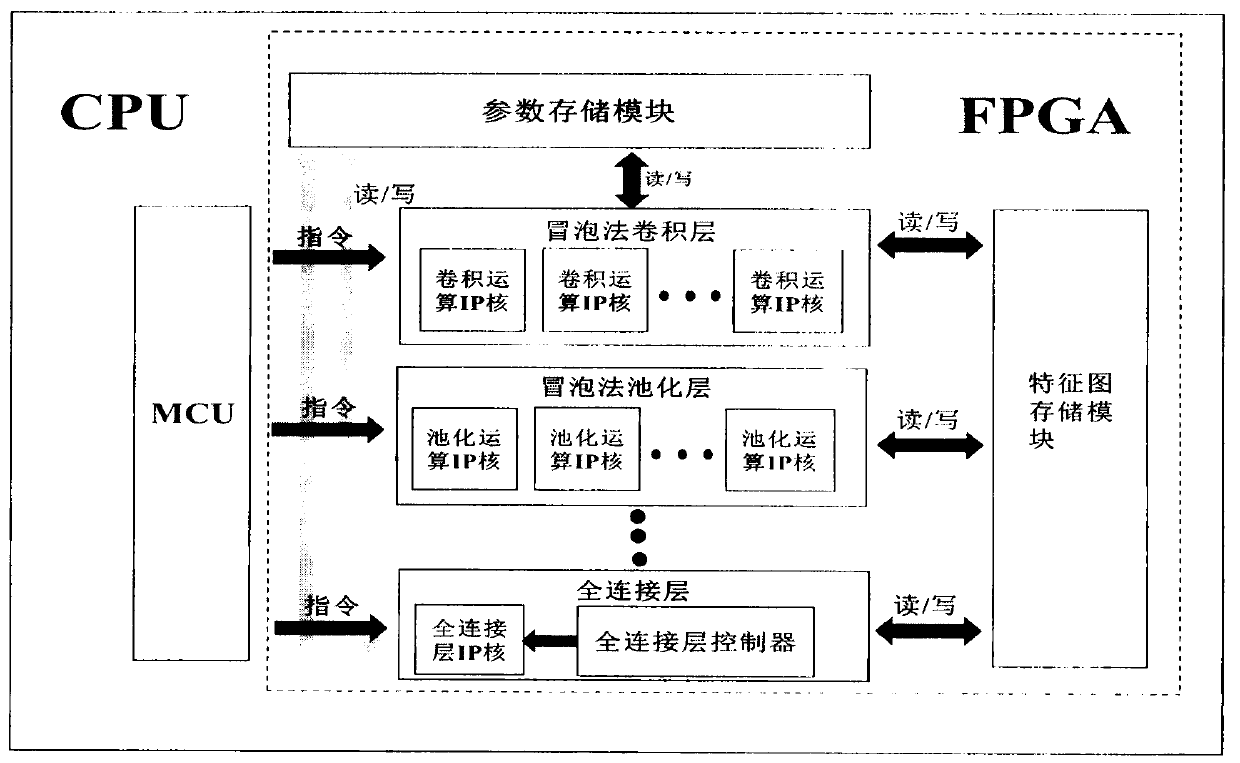

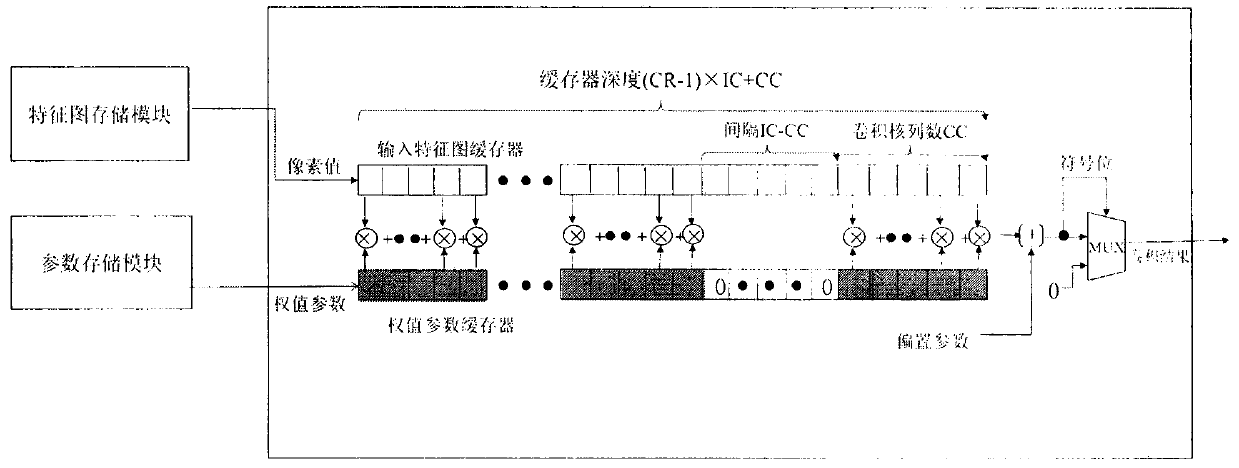

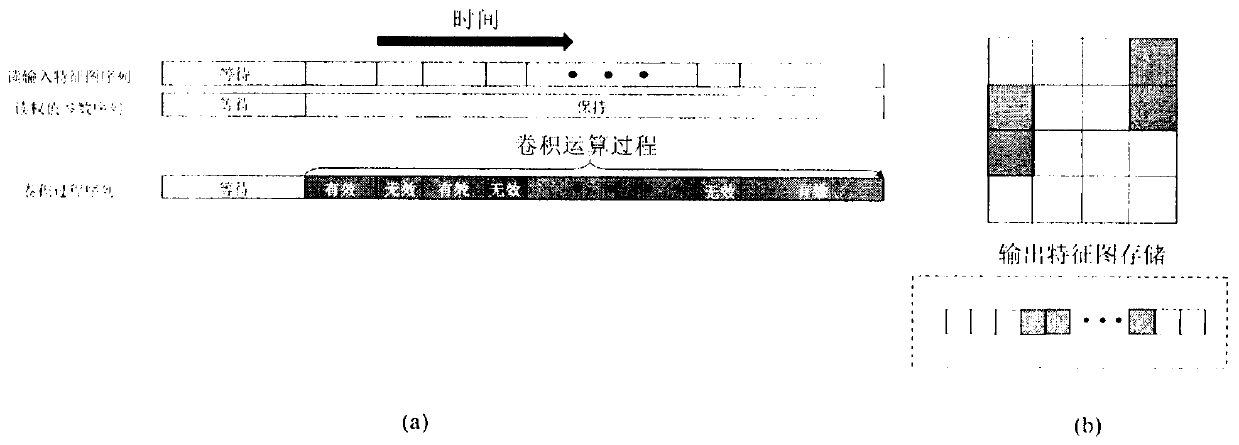

[0050] The present invention analyzes the basic characteristics of convolutional neural networks, surveys the current state of research at home and abroad together with its advantages and disadvantages, and exploits the high parallelism, high energy efficiency, and reconfigurability of the FPGA. Targeting the three computation-intensive parts of a convolutional neural network (convolution, pooling, and full connection), it designs an FPGA-based convolutional neural network IP core to accelerate the network's feed-forward propagation. Written in Verilog HDL, the IP core builds the required hardware structure with minimal logic resources and is easy to port to different types of FPGA.
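As a point of reference for the three stages named above, the following is a minimal software sketch of feed-forward propagation through one convolution, one pooling, and one fully connected step. It is not the patent's hardware design: the function names, the use of max pooling with a 2x2 window, stride-1 unpadded convolution, integer arithmetic, and the omission of activation functions are all assumptions made for illustration.

```python
# Software reference model only; all names and parameters are hypothetical.

def conv2d(image, kernel):
    """Stride-1, unpadded 2D convolution (cross-correlation form used in CNNs)."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(ow)
        ]
        for r in range(oh)
    ]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling over size x size windows (assumed pooling type)."""
    oh, ow = len(fmap) // size, len(fmap[0]) // size
    return [
        [
            max(fmap[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(ow)
        ]
        for r in range(oh)
    ]


def fully_connected(inputs, weights, biases):
    """One output per weight row: dot(inputs, row) + bias."""
    return [
        sum(x * w for x, w in zip(inputs, row)) + b
        for row, b in zip(weights, biases)
    ]


def feed_forward(image, kernel, fc_weights, fc_biases):
    """Chain the three stages: convolution, then pooling, then full connection."""
    fmap = conv2d(image, kernel)
    pooled = max_pool2d(fmap)
    flat = [v for row in pooled for v in row]  # flatten row by row
    return fully_connected(flat, fc_weights, fc_biases)


if __name__ == "__main__":
    image = [[r * 5 + c for c in range(5)] for r in range(5)]  # 5x5 test input
    kernel = [[1, 0], [0, 1]]                                  # 2x2 test kernel
    print(feed_forward(image, kernel, [[1, 1, 1, 1]], [0]))
```

Each inner `sum` or `max` here runs sequentially, whereas a hardware implementation could evaluate many of these multiply-accumulate and compare operations in parallel, which is the kind of parallelism the paragraph above attributes to the FPGA.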

[0051] First, the following basic unit definitions are given for use in the subsequent implementation details and mathematical formulas:

[0052] Table 1: Basic CNN unit definitions of the present invention

[0053...
