Visual SLAM loop-closure detection method based on a feature extraction and dimensionality reduction neural network

A technique combining feature extraction and a neural network, applied in the fields of robot vision and mobile robotics, that addresses the problems caused by dynamic changes in the environment and by changes in lighting.


Embodiment

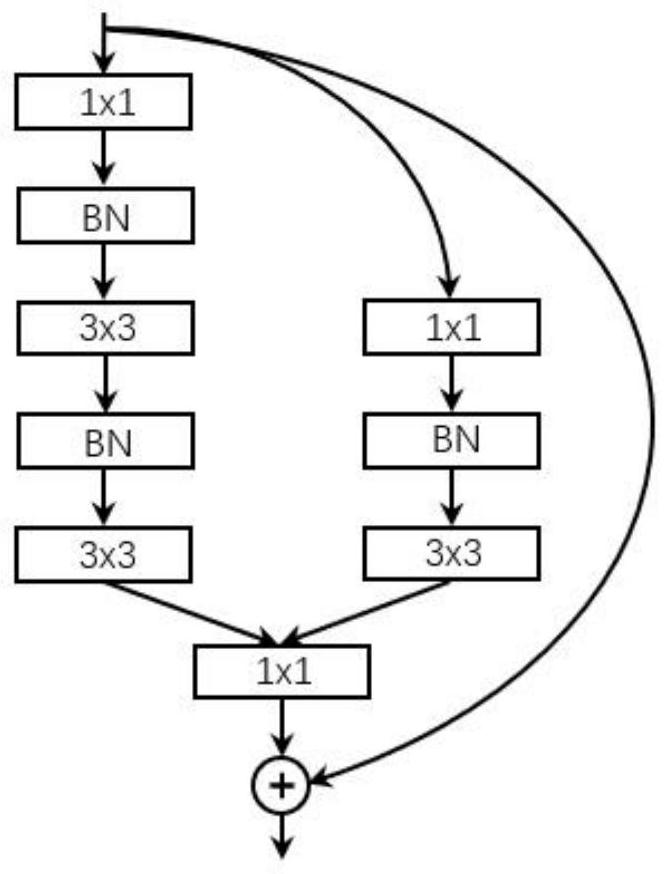

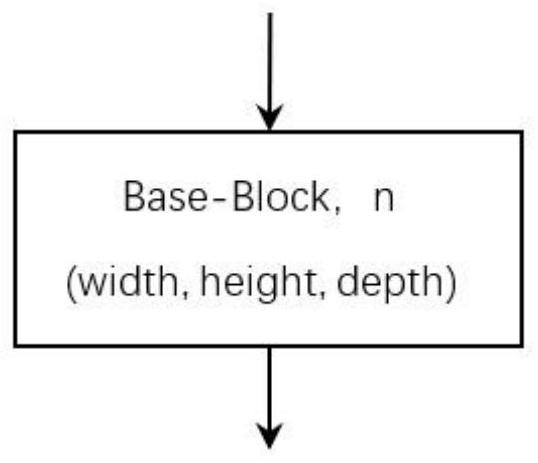

[0060] The first step is to build the network model. Using the Base-Block unit shown in Figure 1, a pooling layer, and a softmax classification layer, construct a convolutional neural network for classification; the resulting classification network is shown in Figure 4. The implementation is written with the open-source deep learning framework TensorFlow.

[0061] The second step is to train the classification network built in the first step. The network is trained on the Places205 scene classification dataset, which contains 205 scene categories. The loss function of the network is as follows:

[0062]
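The loss formula itself is not reproduced in this text. As an illustration only, and as an assumption rather than the patent's stated formula, a softmax classifier over the 205 Places205 categories is commonly trained with categorical cross-entropy. The sketch below shows that loss for a single sample in plain Python; the names `softmax`, `cross_entropy`, and `NUM_CLASSES` are hypothetical.

```python
import math

NUM_CLASSES = 205  # number of scene categories in Places205 (from the text)


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Assumed loss: negative log-probability of the true class."""
    probs = softmax(logits)
    return -math.log(probs[label])
```

With identical scores for every class, the predicted distribution is uniform and the loss equals log(205), which is the value an untrained classifier would be expected to start near under this assumed loss.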

[0063] The network weights are updated with the Adam algorithm:

[0064] $g_t = \nabla_\theta \, \mathrm{loss}_t(\theta_{t-1})$

[0065] $m_t = \beta_1 m_{t-1} + (1 - \beta_1) g_t$

[0066]

[0067]

[0068]

[0069]

[0070] The parameters are set to $\beta_1 = 0.9$, $\beta_2 = 0.999$, $\epsilon = 10^{-8}$. In the initial iteration, set $t = 0$, $m_0 = 0$, $v_0 = 0$, the initi...
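The Adam update can be sketched in a few lines of plain Python. The gradient and first-moment steps follow paragraphs [0064] and [0065]; the second-moment, bias-correction, and parameter-update steps follow the standard published form of Adam and are an assumption here, since those equations are not reproduced in this text. The learning rate `lr`, the function names, and the quadratic toy loss are likewise hypothetical; the source's own learning-rate setting is truncated.

```python
import math


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a list of scalar parameters.

    Returns the updated (theta, m, v, t). lr is an assumed value.
    """
    t += 1
    new_theta, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(theta, grad, m, v):
        m_i = beta1 * m_i + (1 - beta1) * g      # first moment, as in [0065]
        v_i = beta2 * v_i + (1 - beta2) * g * g  # second moment (standard Adam)
        m_hat = m_i / (1 - beta1 ** t)           # bias-corrected first moment
        v_hat = v_i / (1 - beta2 ** t)           # bias-corrected second moment
        new_theta.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_theta, new_m, new_v, t


def minimize_quadratic(steps=2000, lr=0.01):
    """Toy usage: minimise sum((theta_i - target_i)^2) with Adam."""
    target = [3.0, -2.0]
    theta = [0.0, 0.0]
    m, v, t = [0.0, 0.0], [0.0, 0.0], 0  # m_0 = 0, v_0 = 0, t = 0 as in [0070]
    for _ in range(steps):
        grad = [2 * (p - c) for p, c in zip(theta, target)]  # g_t as in [0064]
        theta, m, v, t = adam_step(theta, grad, m, v, t, lr=lr)
    return theta
```

Because of the bias correction, the first update moves each parameter by almost exactly `lr` in the direction opposite to its gradient, regardless of the gradient's magnitude. In a TensorFlow implementation the same rule would normally come from the framework's built-in Adam optimizer rather than hand-written code.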
