Neural network processing method and device, storage medium, and electronic apparatus

A neural network processing method and device, a storage medium, and an electronic apparatus, applied in the field of neural network processing. The technology addresses the problems of slow convergence and low precision, for which no solution had previously been found, and achieves the effect of reducing errors and resolving slow convergence.


Examples

Embodiment 1

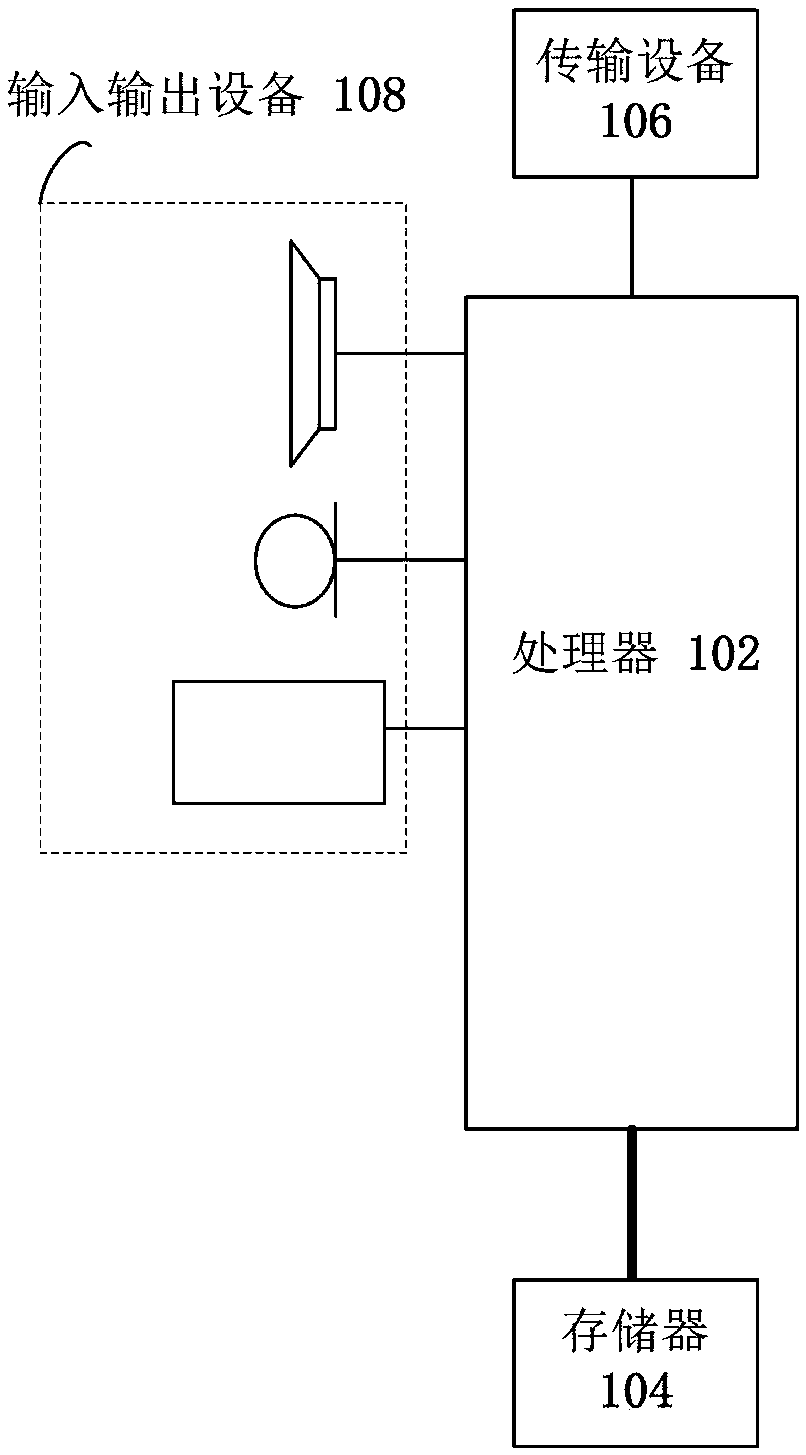

[0030] The method embodiment provided in Embodiment 1 of the present application may be executed in a server, a network terminal, a computer terminal, or a similar computing device. Taking execution on a network terminal as an example, Figure 2 is a hardware structural block diagram of a network terminal for a neural network training method according to an embodiment of the present invention. As shown in Figure 2, the network terminal 10 may include one or more processors 102 (only one is shown in Figure 2; the processor 102 may include, but is not limited to, a processing device such as a microprocessor (MCU) or a programmable logic device (FPGA)) and a memory 104 for storing data. Optionally, the network terminal may also include a transmission device 106 for communication functions and input/output devices 108. Those of ordinary skill in the art will understand that the structure shown in Figure 2 is for illustration only and does not limit...

Embodiment 2

[0060] This embodiment also provides a neural network training device, which is used to implement the above embodiments and preferred implementation modes; what has already been described will not be repeated. As used below, the term "module" may refer to a combination of software and/or hardware that realizes a predetermined function. Although the devices described in the following embodiments are preferably implemented in software, implementations in hardware, or in a combination of software and hardware, are also possible and contemplated.

[0061] Figure 4 is a structural block diagram of the neural network training device according to an embodiment of the present invention. As shown in Figure 4, the device includes:

[0062] The determination module 40, which is configured to determine the objective function in the convolutional neural network, wherein each layer of the objective function corresponds to an initial network weight, and wherein the convolutional neural network is ...

Embodiment 3

[0071] An embodiment of the present invention also provides a storage medium in which a computer program is stored, wherein the computer program is configured to execute, when run, the steps of any one of the above method embodiments.

[0072] Optionally, in this embodiment, the above-mentioned storage medium may be configured to store a computer program for performing the following steps:

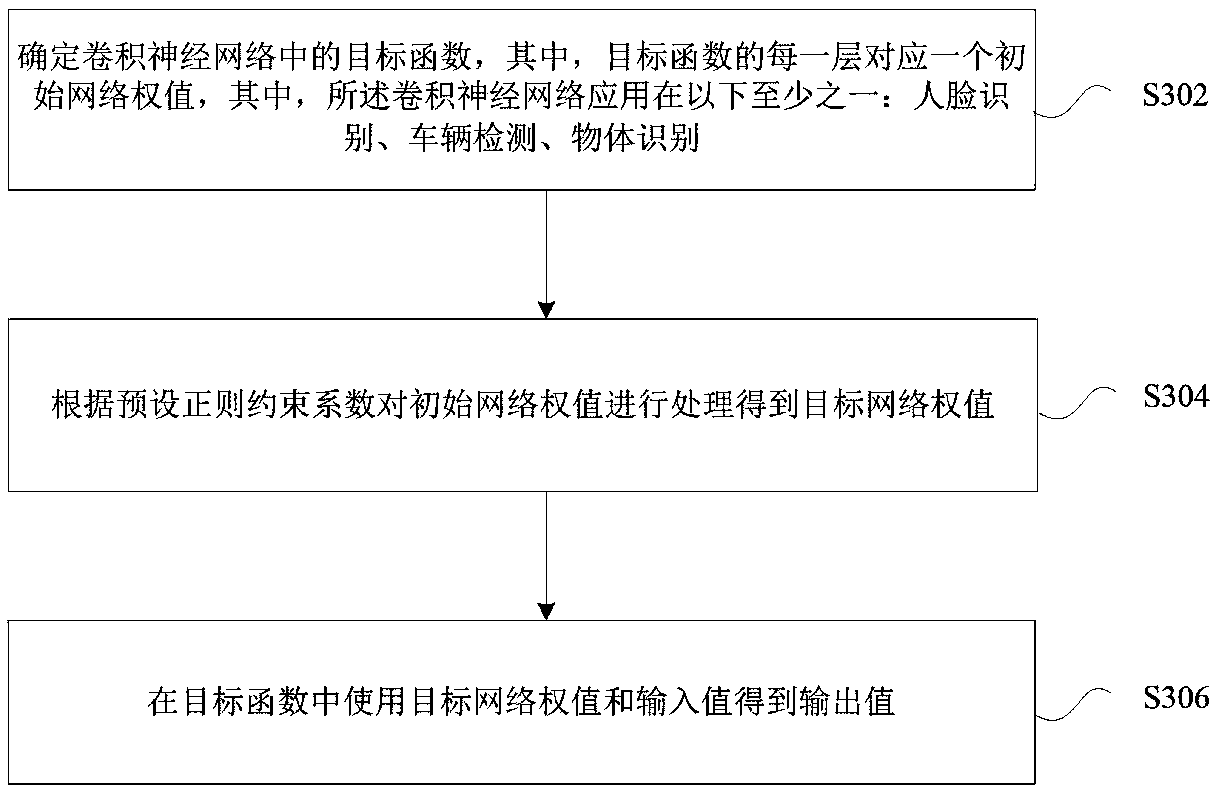

[0073] S1, determining the objective function in the convolutional neural network, wherein each layer of the objective function corresponds to an initial network weight, and wherein the convolutional neural network is applied to at least one of the following: face recognition, vehicle detection, object identification;

[0074] S2, processing the initial network weights according to preset regularization constraint coefficients to obtain the target network weights;

[0075] S3, using the target network weights and the input values in the objective function to obtain an output value.
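The flow of steps S1 to S3 can be illustrated with a minimal Python sketch. The excerpt does not state how the constraint coefficient is applied to the weights, so the shrinkage rule used here (multiplying each weight by `1 - coeff`) is purely an assumption, as are the function names `determine_objective`, `constrain_weights`, and `forward`, the constant weight initialization, the use of fully connected layers in place of convolutions, and the ReLU activation.

```python
from typing import List

Layer = List[List[float]]  # one weight matrix: rows are output units


def determine_objective(layer_sizes: List[int], init_value: float = 0.5) -> List[Layer]:
    """S1 (sketch): create one initial weight matrix per layer.

    The constant initialization is a hypothetical choice for illustration.
    """
    return [
        [[init_value] * n_in for _ in range(n_out)]
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    ]


def constrain_weights(weights: List[Layer], coeff: float) -> List[Layer]:
    """S2 (sketch): derive target weights from the initial weights.

    Assumed rule: shrink every weight by the factor (1 - coeff).
    The source does not specify the actual processing.
    """
    return [[[w * (1.0 - coeff) for w in row] for row in layer] for layer in weights]


def forward(weights: List[Layer], inputs: List[float]) -> List[float]:
    """S3 (sketch): combine the target weights with the input values.

    Each layer is a weighted sum followed by ReLU (an assumed activation).
    """
    values = inputs
    for layer in weights:
        values = [max(0.0, sum(w * v for w, v in zip(row, values))) for row in layer]
    return values


initial = determine_objective([2, 2, 1])            # S1
target = constrain_weights(initial, coeff=0.5)      # S2
output = forward(target, [1.0, 2.0])                # S3
print(output)  # [0.375]
```

With these toy values every initial weight of 0.5 becomes 0.25 after the assumed shrinkage, so the two hidden units each produce 0.75 and the single output unit produces 0.375.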

[0076] Optionally, ...

