A Cache Realization Method Based on Interleaved Storage

An implementation method based on interleaved (cross) storage technology, applied in the field of integrated circuit design. It addresses the problems of large area overhead, high power consumption, and a large number of small memory blocks, and achieves correct indication, reduced read power consumption, and a small area.

Embodiment Construction

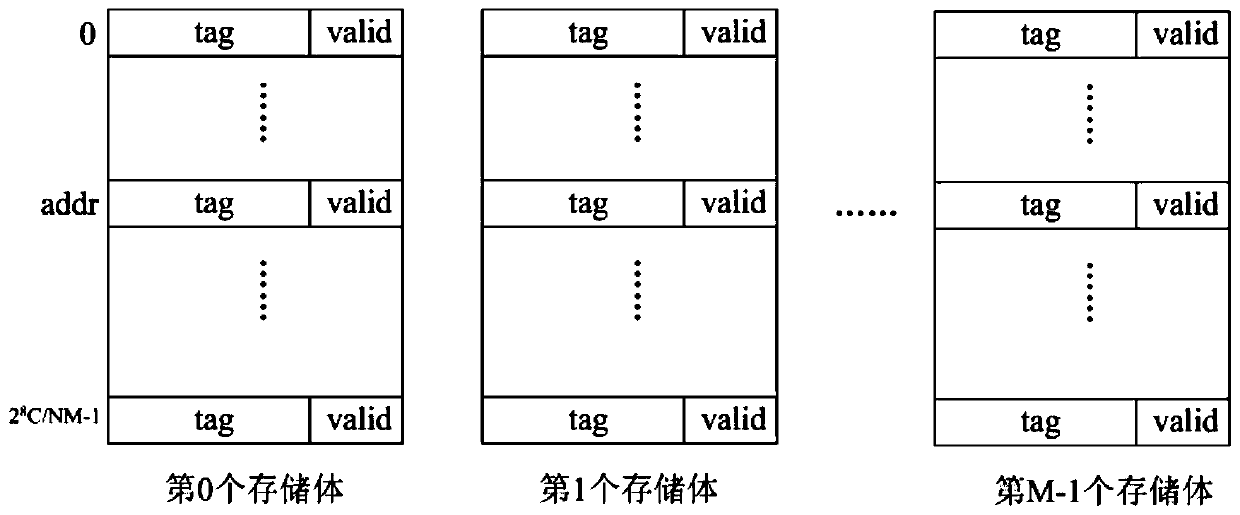

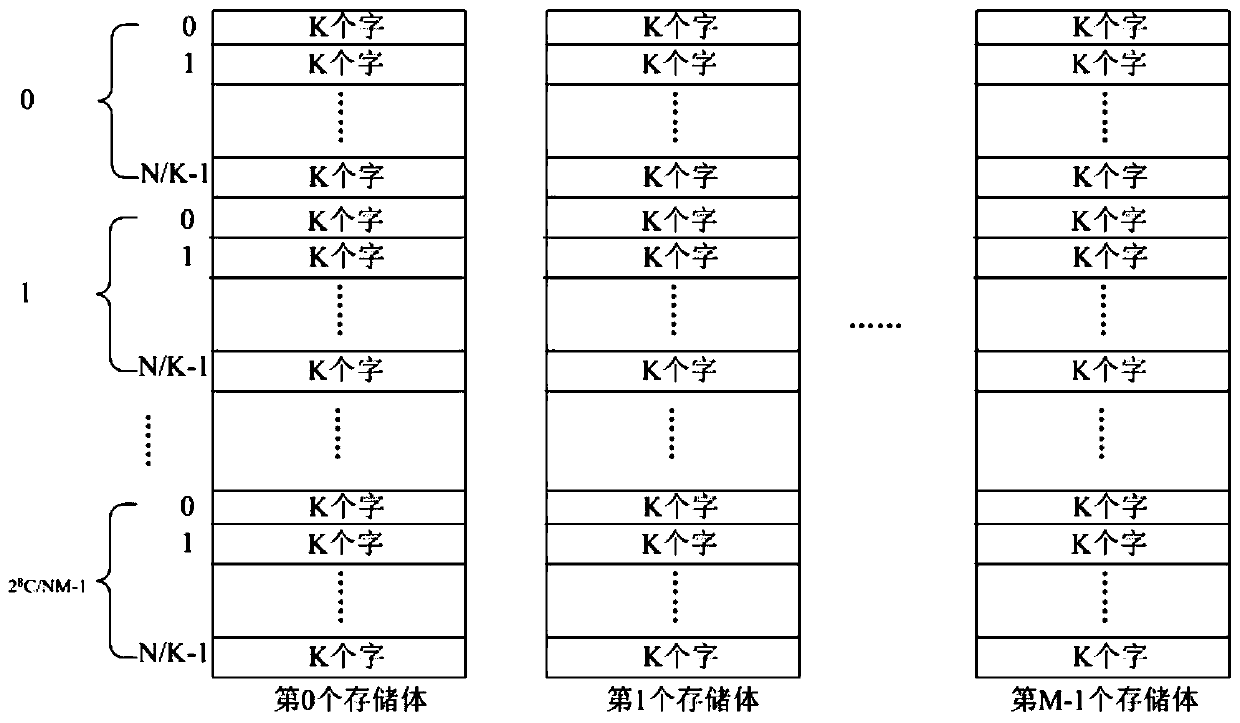

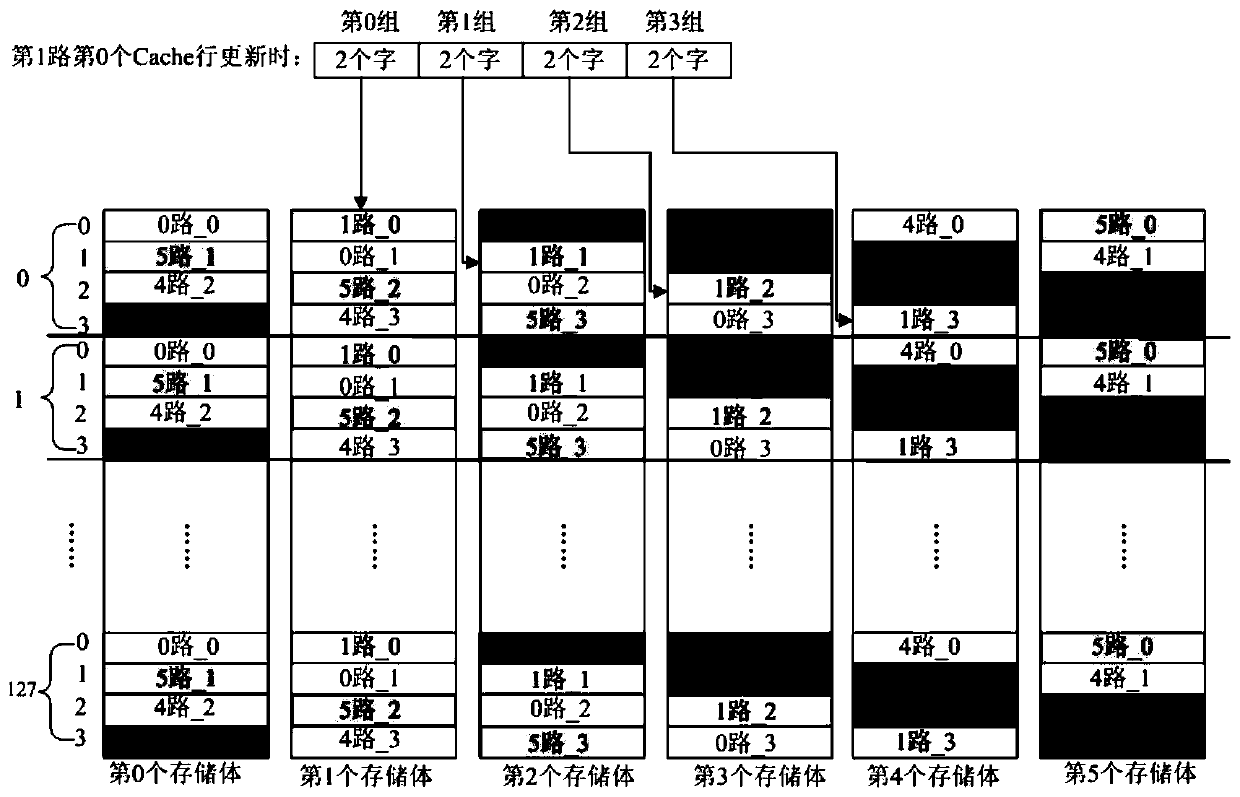

[0033] The present invention provides a Cache implementation method based on interleaved memory. When the required condition on N, K, and M is satisfied (where N is the size of a Cache line in words, K is the data width in words between the pipeline and the Cache, N is an integer multiple of K, and M is the number of Cache ways), all N words of a Cache line can be filled in one cycle. In the hit-judgment cycle, a single address can be used to read the K words of each of the M ways at the same time. This meets the pipeline's timing requirements for Cache access.
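The text does not spell out the bank mapping, and the exact condition on N, K, and M is missing from this copy. As a hedged illustration only, the sketch below models one interleaving that has both properties described: it assumes the DATA memory is split into G = N/K banks, each K words wide, and that M divides G (for example N = M·K). The K-word group g of way w in set s is placed in bank (g + w) mod G at row s·M + (g mod M). A line fill then writes every bank exactly once, and a read of group g from all M ways uses one common row address. `InterleavedLayout` and its methods are hypothetical names, not taken from the patent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InterleavedLayout:
    """Hypothetical interleaved DATA-memory layout (illustration only).

    ways = M, line_words = N, port_words = K, sets = number of Cache sets.
    Assumes G = N // K banks, each K words wide and sets * M rows deep,
    with N % K == 0 and M dividing G.
    """
    ways: int
    line_words: int
    port_words: int
    sets: int

    def __post_init__(self) -> None:
        if self.line_words % self.port_words:
            raise ValueError("N must be an integer multiple of K")
        if self.groups % self.ways:
            raise ValueError("this sketch assumes M divides N // K")

    @property
    def groups(self) -> int:
        # K-word groups per Cache line; also the number of banks G.
        return self.line_words // self.port_words

    def locate(self, set_idx: int, way: int, group: int) -> tuple[int, int]:
        """Return (bank, row) holding K-word group `group` of `way` in `set_idx`."""
        bank = (group + way) % self.groups               # rotate each way by one bank
        row = set_idx * self.ways + group % self.ways    # same row for all ways of a group
        return bank, row

    def fill_access(self, set_idx: int, way: int) -> dict[int, int]:
        """Bank -> row written when a whole line of `way` is filled in one cycle."""
        return dict(self.locate(set_idx, way, g) for g in range(self.groups))

    def read_access(self, set_idx: int, group: int) -> dict[int, int]:
        """Bank -> row read to fetch `group` from all M ways in one cycle."""
        return dict(self.locate(set_idx, w, group) for w in range(self.ways))
```

For instance, `InterleavedLayout(4, 16, 2, 64).read_access(5, 3)` returns `{3: 23, 4: 23, 5: 23, 6: 23}`: four different banks, all addressed at row 23. Rotating each way by one bank is what lets the M ways share a row address; without the rotation, the same group of every way would collide in a single bank.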

[0034] The Cache implementation method based on interleaved storage of the present invention comprises the following steps:

[0035] S1. Determine the organizational structure of the DATA memory and the TAG memory from the number of Cache ways fixed in the design, the size of each Cache line, the data width between the pipeline and the Cache, and the Cache capacity;
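Step S1 is largely arithmetic on M, N, K, and the capacity. The sketch below shows one way to derive the geometry. It rests on illustrative assumptions, not on the organization the patent states: byte addressing with 32-bit addresses, 4-byte words, a DATA memory split into N/K banks that are each K words wide, and a TAG memory that stores all M tags of a set side by side in one row so that a single read serves the hit judgment. Valid and other status bits are left out. `plan_organization` and `CacheOrganization` are hypothetical names.

```python
from dataclasses import dataclass


def log2_exact(value: int) -> int:
    """Return log2(value); the value must be a positive power of two."""
    if value <= 0 or value & (value - 1):
        raise ValueError(f"{value} is not a power of two")
    return value.bit_length() - 1


@dataclass(frozen=True)
class CacheOrganization:
    sets: int             # number of sets = rows of the TAG memory
    data_banks: int       # assumed N // K DATA banks
    bank_width_bits: int  # each bank row holds K words
    bank_depth: int       # rows per DATA bank (works out to sets * M)
    tag_bits: int         # width of one tag (status bits omitted)
    tag_row_bits: int     # M tags side by side in one TAG memory row


def plan_organization(ways: int, line_words: int, port_words: int,
                      capacity_bytes: int, word_bytes: int = 4,
                      addr_bits: int = 32) -> CacheOrganization:
    """Derive DATA/TAG geometry from M, N, K and capacity (byte addressing assumed)."""
    if line_words % port_words:
        raise ValueError("N must be an integer multiple of K")
    line_bytes = line_words * word_bytes
    if capacity_bytes % (ways * line_bytes):
        raise ValueError("capacity must hold a whole number of sets")
    sets = capacity_bytes // (ways * line_bytes)
    index_bits = log2_exact(sets)           # selects the set
    offset_bits = log2_exact(line_bytes)    # selects the byte within a line
    tag_bits = addr_bits - index_bits - offset_bits
    banks = line_words // port_words
    bank_width_bits = port_words * word_bytes * 8
    # Spread the total DATA capacity evenly over the banks.
    bank_depth = capacity_bytes * 8 // (banks * bank_width_bits)
    return CacheOrganization(sets, banks, bank_width_bits, bank_depth,
                             tag_bits, ways * tag_bits)
```

For a 16 KiB, 4-way Cache with 8-word lines and a 2-word port, `plan_organization(4, 8, 2, 16 * 1024)` gives 128 sets, four 64-bit-wide DATA banks of 512 rows each, and 20-bit tags (80 bits per TAG row).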

[0036] For example, the Cac...

© 2025 PatSnap. All rights reserved.