Multi-computing-unit coarse-grained reconfigurable system and method for recurrent neural network

A recurrent neural network and computing unit technology, applied in the field of embedded reconfigurable systems, that addresses problems such as the increased workload of managing parallel program processes, the high power consumption of GPU computing, and complex program code.



Embodiment Construction

[0024] The present invention is further illustrated below with reference to specific embodiments. It should be understood that these embodiments serve only to illustrate the present invention and are not intended to limit its scope. After reading the present invention, those skilled in the art will understand that modifications of the present invention in various equivalent forms all fall within the scope defined by the appended claims of the present application.

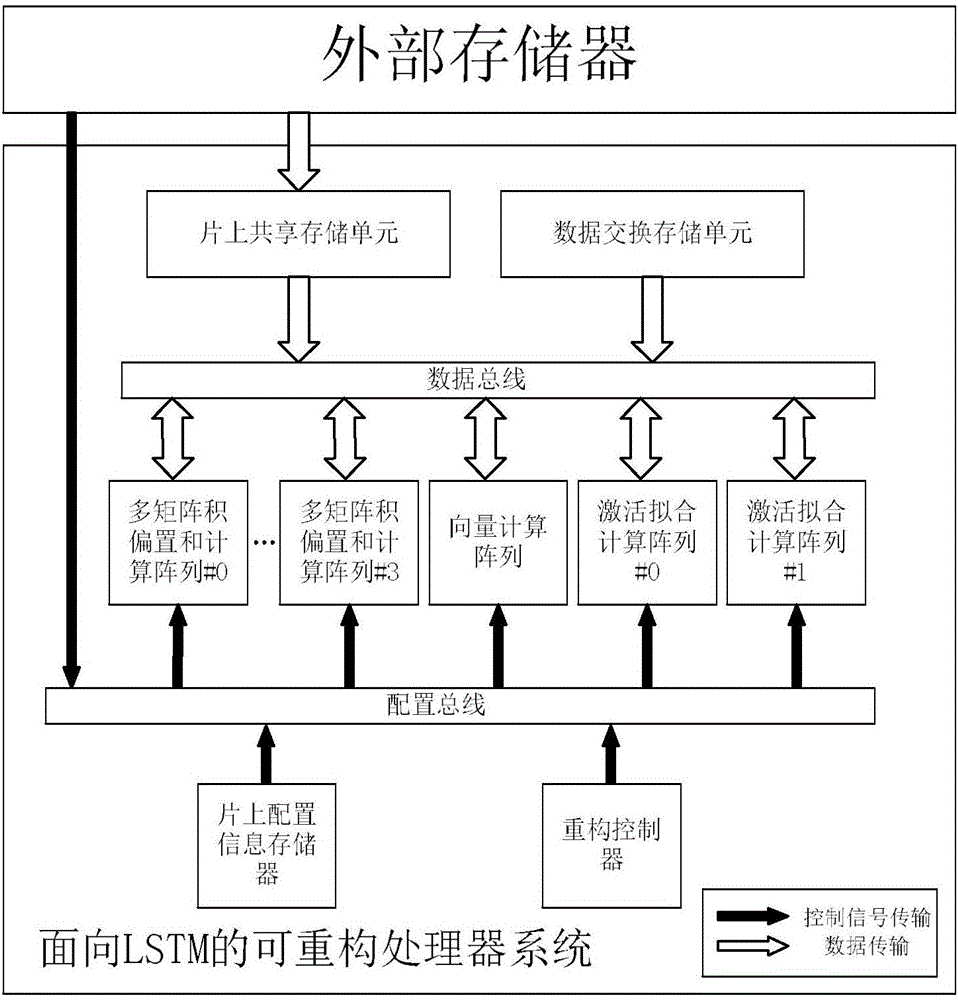

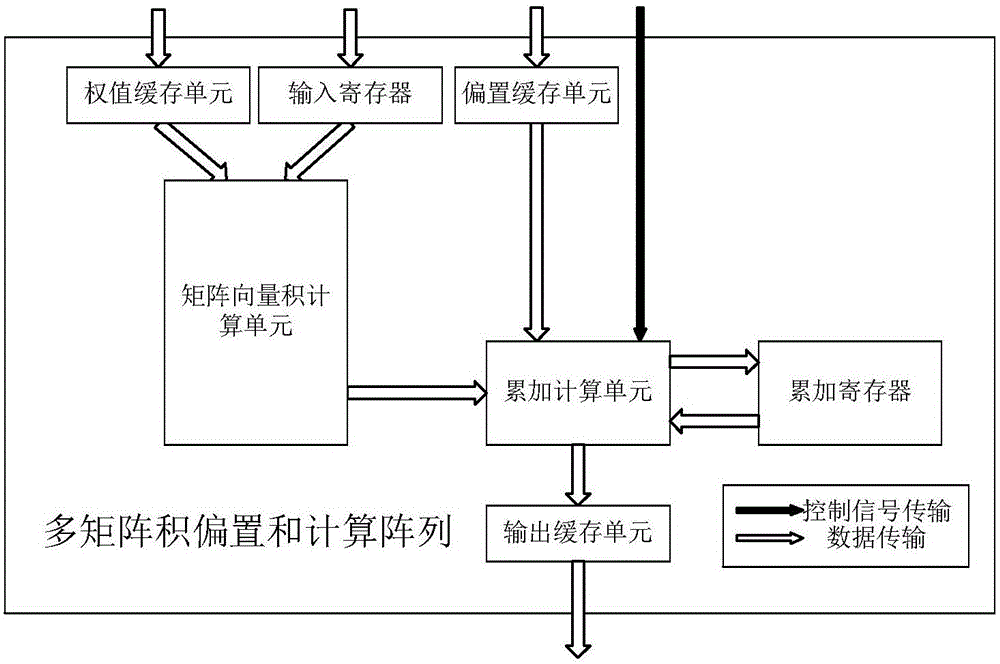

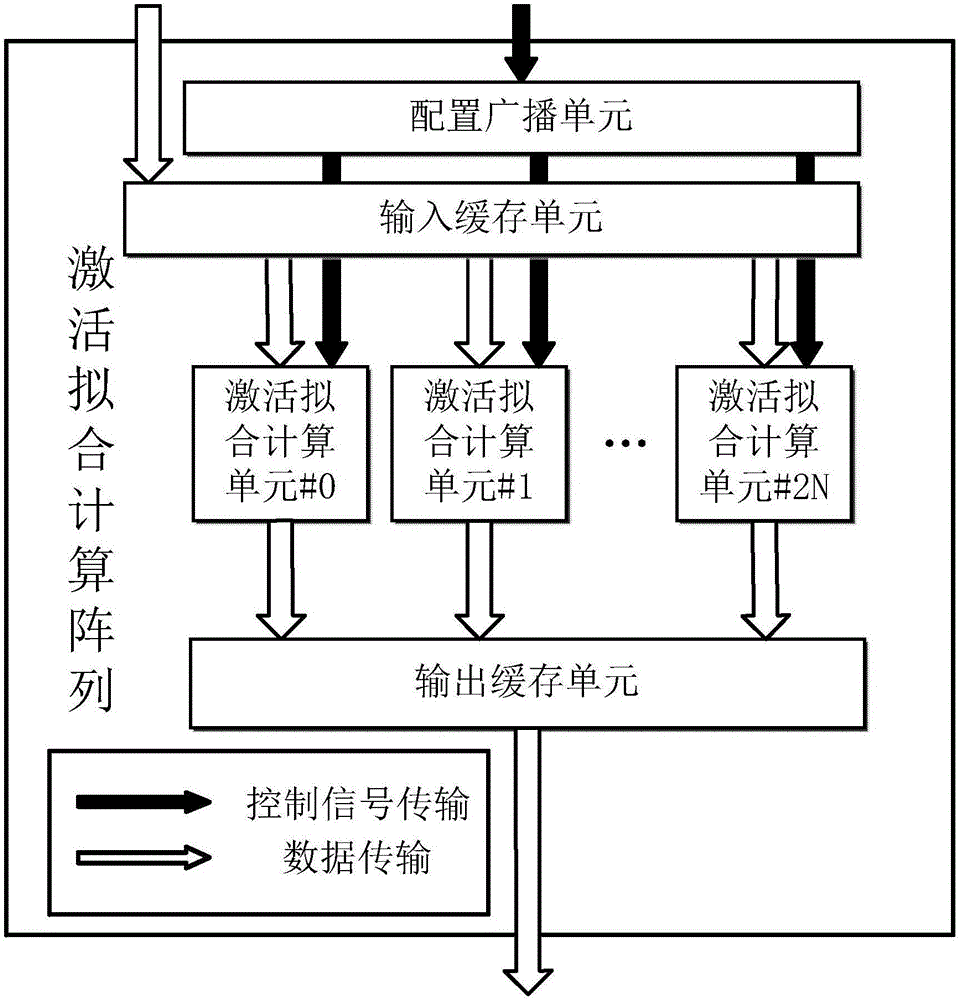

[0025] As shown in Figure 1, the multi-computing-unit coarse-grained reconfigurable system for the recurrent neural network LSTM obtains data from external memory through the on-chip shared storage unit. The on-chip configuration information storage and reconfiguration controller controls the on-chip computing arrays through the configuration bus, and the computing arrays can exchange data with one another through the data exchange storage unit. The system includes the on-chip shared storage unit, data ...
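The excerpt does not say how the LSTM workload is partitioned across the computing arrays. As a rough illustration only, the sketch below computes one step of a standard LSTM cell (no peephole connections) and assumes, purely hypothetically, that each of the four gates is handled by its own computing array, with a dictionary standing in for the data exchange storage unit. The names `gate_array`, `lstm_step`, and `exchange` are illustrative and do not come from the patent; the arrays are simulated sequentially rather than in parallel.

```python
import math
from typing import Callable, Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Dense matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def gate_array(w: Matrix, u: Matrix, b: Vector, x: Vector, h: Vector,
               act: Callable[[float], float]) -> Vector:
    """Hypothetical computing-array task: one LSTM gate, act(W x + U h + b)."""
    wx = matvec(w, x)
    uh = matvec(u, h)
    return [act(a + c + d) for a, c, d in zip(wx, uh, b)]


def lstm_step(params: Dict[str, Tuple[Matrix, Matrix, Vector]],
              x: Vector, h: Vector, c: Vector) -> Tuple[Vector, Vector]:
    """One LSTM time step; each gate is assigned to a separate simulated array."""
    acts = {"i": sigmoid, "f": sigmoid, "g": math.tanh, "o": sigmoid}
    # Stand-in for the data exchange storage unit: each array writes its gate output here.
    exchange: Dict[str, Vector] = {}
    for name, act in acts.items():
        w, u, b = params[name]
        exchange[name] = gate_array(w, u, b, x, h, act)
    i, f, g, o = (exchange[k] for k in "ifgo")
    # Cell state: c' = f * c + i * g; hidden state: h' = o * tanh(c').
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new
```

With all weights and biases set to zero, every sigmoid gate outputs 0.5 and the candidate `g` is `tanh(0) = 0`, so a cell state of `[1.0]` decays to `[0.5]` and the hidden state becomes `0.5 * tanh(0.5)`.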

