Method and system for accelerating deep learning algorithm on field programmable gate array platform

A technology combining deep learning with field programmable gate arrays, applied in neural network learning methods, physical implementations of neural networks, biological neural network models, and related areas. It addresses the high power consumption of GPUs, achieving low power consumption while accelerating deep learning algorithms.

Embodiment

[0062] The field programmable gate array platform in the embodiments of the present invention refers to a computing system that integrates both a general purpose processor (General Purpose Processor, "GPP") and a field programmable gate array (Field Programmable Gate Array, "FPGA") chip. The data path between the FPGA and the GPP may use the PCI-E bus protocol, the AXI bus protocol, or the like. In the drawings of the embodiments, the data path is illustrated using the AXI bus protocol as an example, but the present invention is not limited thereto.
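To make the GPP/FPGA division of labor concrete, the following is a minimal Python sketch, not the patent's implementation. It assumes a host-staged, memory-mapped exchange: the GPP writes operands into a shared buffer, the accelerator computes, and the GPP reads the results back. `BusProtocol`, `SimulatedDataPath`, `fpga_kernel`, and `gpp_offload` are hypothetical names; the bytearray only simulates the AXI or PCI-E link, and the matrix-vector product is an illustrative stand-in for whatever deep learning computation the method actually offloads.

```python
import struct
from dataclasses import dataclass, field
from enum import Enum


class BusProtocol(Enum):
    """GPP<->FPGA data path protocols named in the embodiment."""
    AXI = "AXI"
    PCIE = "PCI-E"


@dataclass
class SimulatedDataPath:
    """Hypothetical stand-in for the GPP<->FPGA link.

    A plain bytearray models the shared, memory-mapped address space;
    `protocol` is only a label, no real bus traffic is generated.
    """
    protocol: BusProtocol
    size: int = 4096
    memory: bytearray = field(init=False)

    def __post_init__(self) -> None:
        self.memory = bytearray(self.size)

    def write_floats(self, offset: int, values: list[float]) -> None:
        data = struct.pack(f"<{len(values)}f", *values)  # little-endian float32
        if offset < 0 or offset + len(data) > self.size:
            raise ValueError("write outside simulated address space")
        self.memory[offset:offset + len(data)] = data

    def read_floats(self, offset: int, count: int) -> list[float]:
        # unpack_from raises struct.error if the read runs past the buffer
        return list(struct.unpack_from(f"<{count}f", self.memory, offset))


def fpga_kernel(path: SimulatedDataPath, w_off: int, x_off: int,
                y_off: int, rows: int, cols: int) -> None:
    """Software stand-in for an FPGA compute unit: y = W @ x (row-major W)."""
    w = path.read_floats(w_off, rows * cols)
    x = path.read_floats(x_off, cols)
    y = [sum(w[r * cols + c] * x[c] for c in range(cols)) for r in range(rows)]
    path.write_floats(y_off, y)


def gpp_offload(weights: list[list[float]], x: list[float],
                protocol: BusProtocol = BusProtocol.AXI) -> list[float]:
    """GPP side: stage inputs, trigger the simulated FPGA, read back results."""
    rows, cols = len(weights), len(x)
    path = SimulatedDataPath(protocol)
    w_off = 0
    x_off = w_off + rows * cols * 4  # 4 bytes per float32
    y_off = x_off + cols * 4
    path.write_floats(w_off, [v for row in weights for v in row])
    path.write_floats(x_off, x)
    fpga_kernel(path, w_off, x_off, y_off, rows, cols)
    return path.read_floats(y_off, rows)
```

For example, `gpp_offload([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0])` returns `[3.0, 7.0]`. Defaulting `protocol` to AXI mirrors the drawings, while keeping it a parameter reflects the statement that the invention is not limited to one bus.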

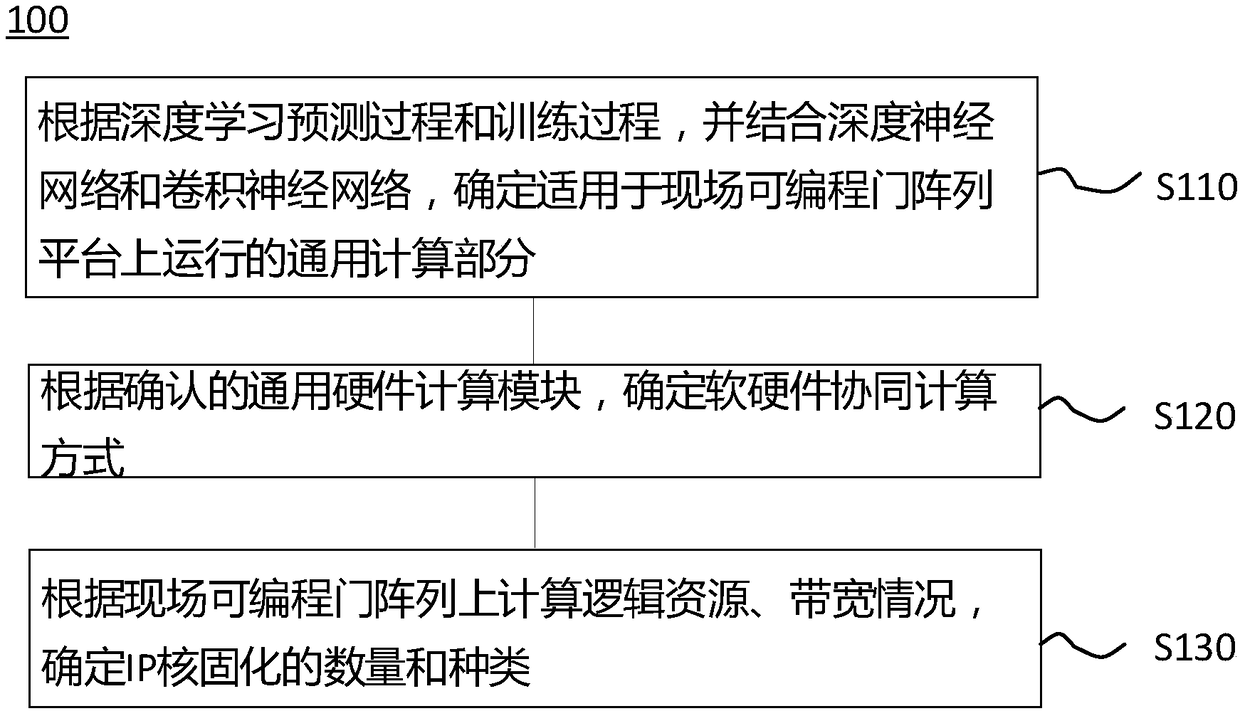

[0063] Figure 1 is a flowchart of a method 100 for accelerating a deep learning algorithm on a field programmable gate array platform according to an embodiment of the present invention. The method 100 includes:

[0064] S110, according to the deep learning prediction process and training process, wherein the training process includes a local pre-training process and a gl...
