Deep learning oriented sparse self-adaptive neural network, algorithm and implementation device

A neural network algorithm and deep learning technology, applied in biological neural network models, physical implementation, and similar areas. It addresses problems such as loss of accuracy and reduces storage requirements.

Examples

Embodiment 1

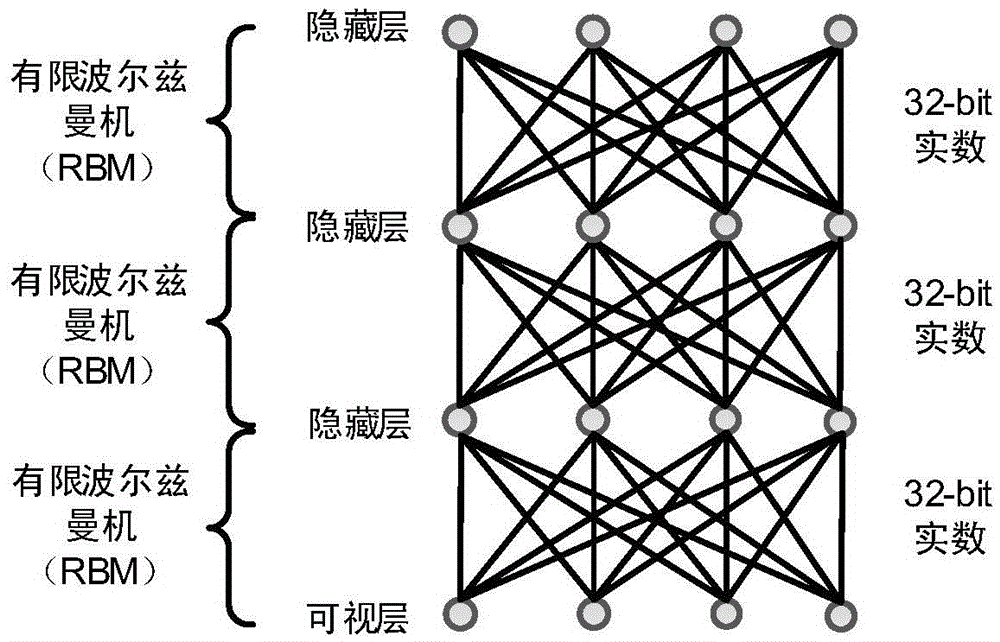

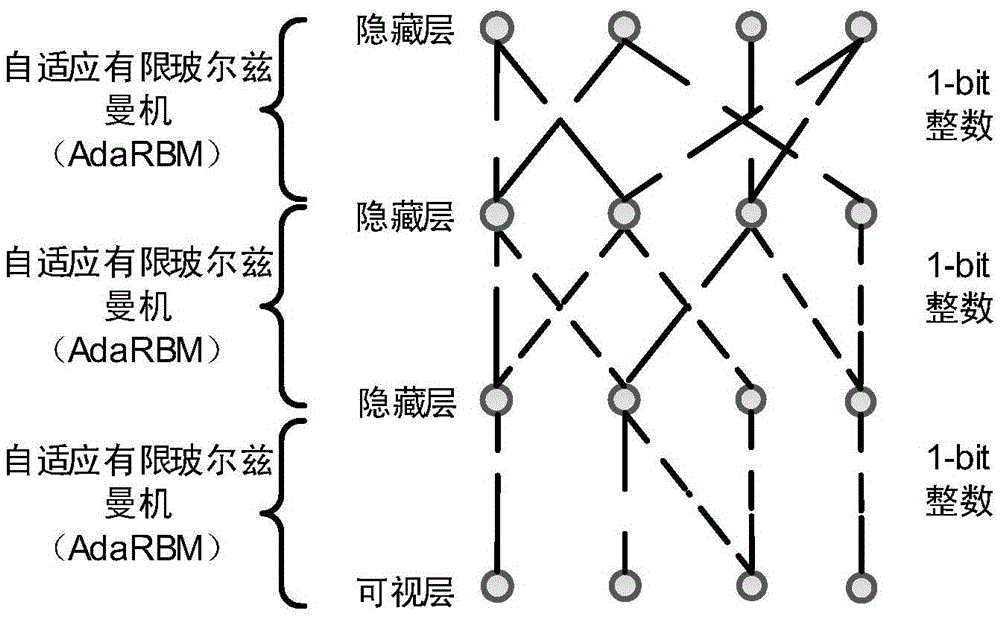

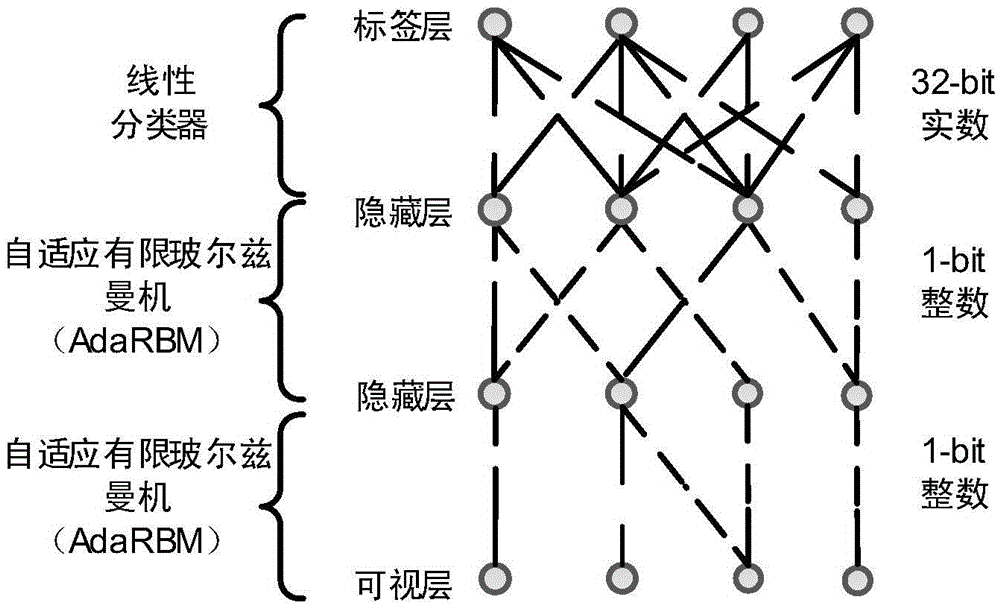

[0038] Generally, a traditional artificial neural network includes a visible layer with a certain number of input nodes and a hidden layer with a certain number of output nodes. Some designs place a label layer at the top of the network; this layer is optional and not required by the present invention. The nodes of a hidden layer are connected to the input nodes of the visible layer through weights. When there are two or more hidden layers, each hidden layer is connected to the next hidden layer. Once the hidden layer of a lower-level network has been trained, it serves as the visible layer of the higher-level network.
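The layer-wise stacking described above, where a trained hidden layer becomes the visible layer of the next network, can be sketched as an upward pass. This is a minimal illustration, not the patented method. It assumes sigmoid units (common in RBM/DBN models), omits the optional label layer, and uses the hypothetical helper names `hidden_activation` and `stack_forward`. Training is not shown. The weights are assumed to be already trained.

```python
import numpy as np


def sigmoid(x):
    """Logistic activation, assumed here for all hidden units."""
    return 1.0 / (1.0 + np.exp(-x))


def hidden_activation(v, W, b):
    """Hidden-unit probabilities for visible vector v.

    W has shape (n_visible, n_hidden); b has shape (n_hidden,).
    """
    return sigmoid(v @ W + b)


def stack_forward(v, layers):
    """Propagate v upward through trained (W, b) pairs.

    The output of each hidden layer is used as the visible input
    of the next, higher-level layer.
    """
    activations = [v]
    for W, b in layers:
        v = hidden_activation(v, W, b)
        activations.append(v)
    return activations


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical layer sizes 6 -> 4 -> 3 with random stand-in weights.
    layers = [(rng.normal(size=(6, 4)), np.zeros(4)),
              (rng.normal(size=(4, 3)), np.zeros(3))]
    for a in stack_forward(np.ones(6), layers):
        print(a.shape)
```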

[0039] Figure 1 is a schematic diagram of the classic DBN model. In a DBN, the parameters describing the connections are dense real numbers. Computing each layer amounts to a matrix multiplication between the connection weights of the interconnected units and their excitations. A large number of floating-point data multip...
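The cost of a dense layer follows directly from this matrix formulation. Every visible unit is connected to every hidden unit, so one input vector requires `n_visible * n_hidden` multiplications, and the layer needs the same number of stored weights. The sketch below assumes 32-bit floating-point weights by default. The helper name `dense_layer_cost` and the example layer sizes are hypothetical.

```python
def dense_layer_cost(n_visible, n_hidden, bytes_per_weight=4):
    """Return (multiplications, weight storage in bytes) for one fully
    connected layer processing a single input vector.

    bytes_per_weight=4 assumes float32 weights.
    """
    multiplications = n_visible * n_hidden  # one multiply per connection
    storage_bytes = n_visible * n_hidden * bytes_per_weight
    return multiplications, storage_bytes
```

For example, a hypothetical 784-to-500 layer gives `dense_layer_cost(784, 500) == (392000, 1568000)`. This means nearly 0.4 million floating-point multiplies and about 1.5 MB of weights for a single layer, which illustrates why dense connectivity is costly to implement in hardware.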

Embodiment 2

[0077] Early sparse DBN research focused only on extracting sparse features, not on using sparse connections to produce efficient network architectures for hardware models. Recent neuromorphic hardware models for deep learning place an increasing number of neurons on a chip, but integrating a million neurons and a billion synapses on a single chip remains a significant challenge. Figure 4 shows a deep learning-oriented sparse adaptive neural network optimization and implementation device. Its MAP table and TABLE table are obtained by the DAN sparse algorithm described in the present invention.
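The source does not specify the layout of the MAP and TABLE tables beyond the workflow below, which reads a start address and a length from MAP for each input axon. The sketch below therefore makes an assumption. It treats MAP as one `(start, length)` entry per input axon and TABLE as a contiguous list of `(target_neuron, weight)` entries for the nonzero connections, which is essentially a compressed sparse row (CSR) layout. The DAN sparse algorithm itself is not reproduced here. The function takes an already sparsified weight matrix, and `build_map_table` is a hypothetical name.

```python
from typing import List, Tuple

MapEntry = Tuple[int, int]        # (start address in TABLE, length)
TableEntry = Tuple[int, float]    # (target neuron index, weight), assumed


def build_map_table(W: List[List[float]]) -> Tuple[List[MapEntry], List[TableEntry]]:
    """Compress a sparse weight matrix into MAP and TABLE lists.

    W[i][j] is the weight from input axon i to neuron j; 0.0 means
    no synapse. Only nonzero weights are stored in TABLE.
    """
    map_entries: List[MapEntry] = []
    table: List[TableEntry] = []
    for row in W:
        start = len(table)  # first TABLE address used by this axon
        for j, w in enumerate(row):
            if w != 0.0:
                table.append((j, w))
        map_entries.append((start, len(table) - start))
    return map_entries, table
```

Axons with no outgoing synapses receive a zero-length MAP entry, so no TABLE space is used for them. This is where storage is saved compared with the dense matrix.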

[0078] The specific workflow is as follows:

[0079] 1) Check whether the input bit axon[i] is 1. If it is 1 (that is, a synaptic event has arrived), access the corresponding entry in the MAP table indexed by i; if it is 0, check the next input bit.

[0080] 2) Read the corresponding start address and length from the MAP table; if the length is not 0, ...
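Steps 1) and 2) can be sketched as an event-driven loop that touches only the synapses of active axons. The source text is cut off after "if the length is not 0". The accumulation of TABLE weights into per-neuron sums shown here is therefore an assumption, not the documented continuation. The sketch also assumes the MAP/TABLE layout `(start, length)` and `(target_neuron, weight)`. The name `process_events` is hypothetical.

```python
from typing import List, Tuple


def process_events(axon: List[int],
                   map_entries: List[Tuple[int, int]],
                   table: List[Tuple[int, float]],
                   n_neurons: int) -> List[float]:
    """Accumulate weighted input for each neuron from active axons only."""
    potentials = [0.0] * n_neurons
    for i, bit in enumerate(axon):
        if bit != 1:
            continue  # step 1: no synaptic event, check the next input bit
        start, length = map_entries[i]  # step 2: read start address and length
        if length == 0:
            continue  # this axon has no stored synapses
        # Assumed continuation: walk the TABLE entries for this axon.
        for addr in range(start, start + length):
            j, w = table[addr]
            potentials[j] += w
    return potentials


if __name__ == "__main__":
    MAP = [(0, 1), (1, 0), (1, 2)]
    TABLE = [(1, 0.5), (0, 1.0), (2, -2.0)]
    print(process_events([1, 0, 1], MAP, TABLE, 3))  # [1.0, 0.5, -2.0]
```

The result matches the dense product of the input bits with the original weight matrix. However, the loop does no work for inactive axons or missing synapses, and its only arithmetic is addition, because each input bit is binary.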