Efficient storage and management method for massive numbers of small records on a single machine

A storage management technique for records, applicable to memory systems, electrical digital data processing, and memory addressing/allocation/relocation. It addresses the failure of existing approaches to meet the storage and management needs of massive numbers of small records. It strengthens the handling of complex data types, reduces hardware performance requirements, and makes backup easier.

Examples

Embodiment 1

[0048] This embodiment describes the conventions, such as data structures and parameters, used in the present invention.

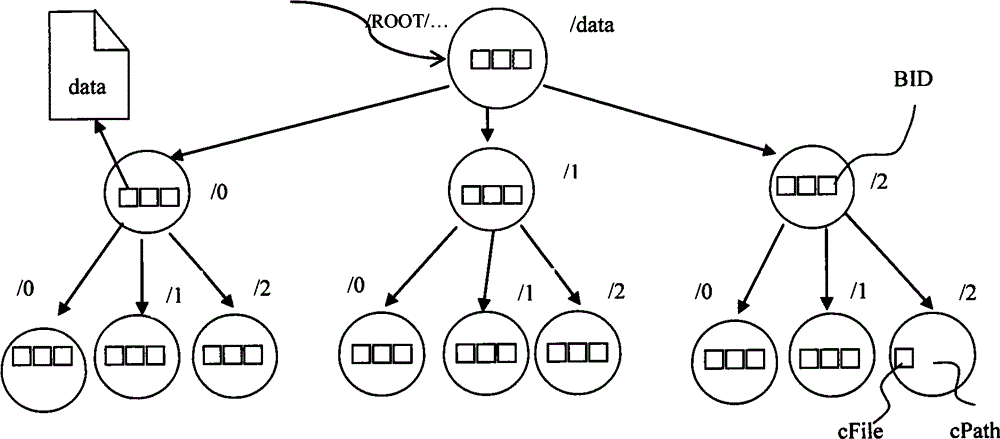

[0049] Without loss of generality, Figure 1 is a schematic diagram of the B-tree storage structure. A log storage directory /data is created under some directory /Root/... on the device's disk. The /data folder corresponds to the root node of the B-tree. cFile is the block file currently being written, and the directory containing cFile is the current working directory cPath.

[0050] The invention adopts the idea of automatic blocking: multiple small records are concatenated into a block file that is stored on disk. A block file, as used in the present invention, is a file saved in disk storage. The present invention defines the order of the B-tree as M; that is, a single folder (node) may contain at most M subfolders and at most M files. The maximum size of a single block file is defined as fSize. R...
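The paragraph above fixes only the bounds (at most M subfolders and M block files per folder, at most fSize bytes per block file); the rule for creating new folders is cut off. The following is a minimal sketch under an assumed rule: block files are numbered sequentially and placed in leaf folders at a fixed depth below /data, so no folder ever holds more than M children. The names `block_path` and `needs_new_block`, the `depth` parameter, and the `block_<i>.dat` file naming are hypothetical, not taken from the invention.

```python
import os


def block_path(root: str, k: int, M: int, depth: int) -> str:
    """Map the k-th block file (0-based) to a path in an M-ary folder tree.

    Assumption (not from the source): every block file lives in a leaf folder
    exactly `depth` levels below `root`, each folder has at most M subfolders,
    and each leaf folder holds at most M block files.
    """
    capacity = M ** (depth + 1)  # total block files the tree can hold
    if not 0 <= k < capacity:
        raise ValueError(f"block index {k} outside tree capacity {capacity}")
    file_idx = k % M  # position of the file inside its leaf folder
    k //= M
    folders = []
    for _ in range(depth):  # peel off one base-M digit per folder level
        folders.append(str(k % M))
        k //= M
    folders.reverse()  # most significant digit names the top-level folder
    return os.path.join(root, *folders, f"block_{file_idx}.dat")


def needs_new_block(current_size: int, record_size: int, f_size: int) -> bool:
    """Roll over to the next block file if appending would exceed fSize."""
    return current_size + record_size > f_size
```

For example, with M = 4 and depth 2, `block_path("/data", 5, 4, 2)` yields `/data/0/1/block_1.dat`, and `os.path.dirname` of that path plays the role of cPath. Under this assumed layout the tree holds M^(depth+1) = 64 block files before another level is needed.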

Embodiment 2

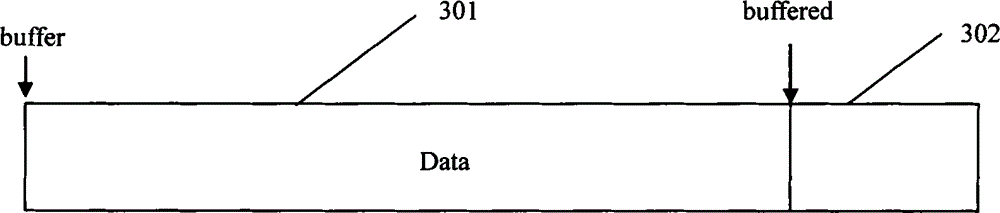

[0058] This embodiment implements the data caching mechanism, as shown in Figure 3. Records arrive very quickly, and each record is relatively small. If a disk write were performed immediately for every received record, disk I/O would be too frequent, seek time would dominate, and storage efficiency would be low. To avoid frequent disk writes, the present invention introduces a data cache mechanism: newly received records are not written directly to the store but are first held in a cache area, and a write operation is performed only when the cache is full. This minimizes the number of disk writes and improves storage efficiency.
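To make the saving concrete, the following sketch counts write operations with and without a cache. It is an illustrative model, not the invention's code: it assumes one write operation per flush, a final flush of any leftover data, and ignores seek-time effects. The function name `count_disk_writes` is hypothetical.

```python
def count_disk_writes(record_sizes, buffer_size=None):
    """Count write operations needed to persist a stream of records.

    With buffer_size=None every record is written immediately (one write per
    record). Otherwise records accumulate in a cache, a write is issued only
    when the next record would overflow it, and leftovers are flushed at the end.
    """
    if buffer_size is None:
        return len(record_sizes)
    writes = 0
    used = 0
    for size in record_sizes:
        if size > buffer_size:
            raise ValueError("record larger than the cache")
        if used + size > buffer_size:
            writes += 1  # cache full: flush it to disk
            used = 0
        used += size
    if used:
        writes += 1  # final flush of the partially filled cache
    return writes
```

With 10,000 records of 100 bytes each, writing directly costs 10,000 writes, while a 64 KiB (65,536-byte) cache holds 655 records per flush and needs only 16 writes.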

[0059] In the data cache mechanism, the present invention allocates a region of memory in advance as the data cache area, and the size of this cache area is configurable. For example, ...
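A pre-allocated cache area of configurable size can be modeled as a fixed-length byte array that is allocated once and reused after every flush. This is a minimal sketch; the class name `RecordBuffer` and its methods are illustrative assumptions, not the invention's interface.

```python
class RecordBuffer:
    """Fixed-capacity cache area allocated once, up front.

    `capacity` is the configurable cache size. The memory is never resized;
    clearing only resets the end offset so the same region is reused.
    """

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._data = bytearray(capacity)  # allocated once, never resized
        self._used = 0  # end offset of the buffered data

    @property
    def capacity(self) -> int:
        return len(self._data)

    @property
    def used(self) -> int:
        return self._used

    def fits(self, record: bytes) -> bool:
        return self._used + len(record) <= len(self._data)

    def append(self, record: bytes) -> None:
        if not self.fits(record):
            raise BufferError("cache area full")
        end = self._used + len(record)
        self._data[self._used:end] = record  # equal-length copy keeps size fixed
        self._used = end

    def contents(self) -> bytes:
        return bytes(self._data[: self._used])

    def clear(self) -> None:
        self._used = 0  # reuse the same memory; no reallocation
```

Allocating once avoids repeated memory allocation on the hot path; the trade-off is that the chosen capacity is reserved even when few records are buffered.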

Embodiment 3

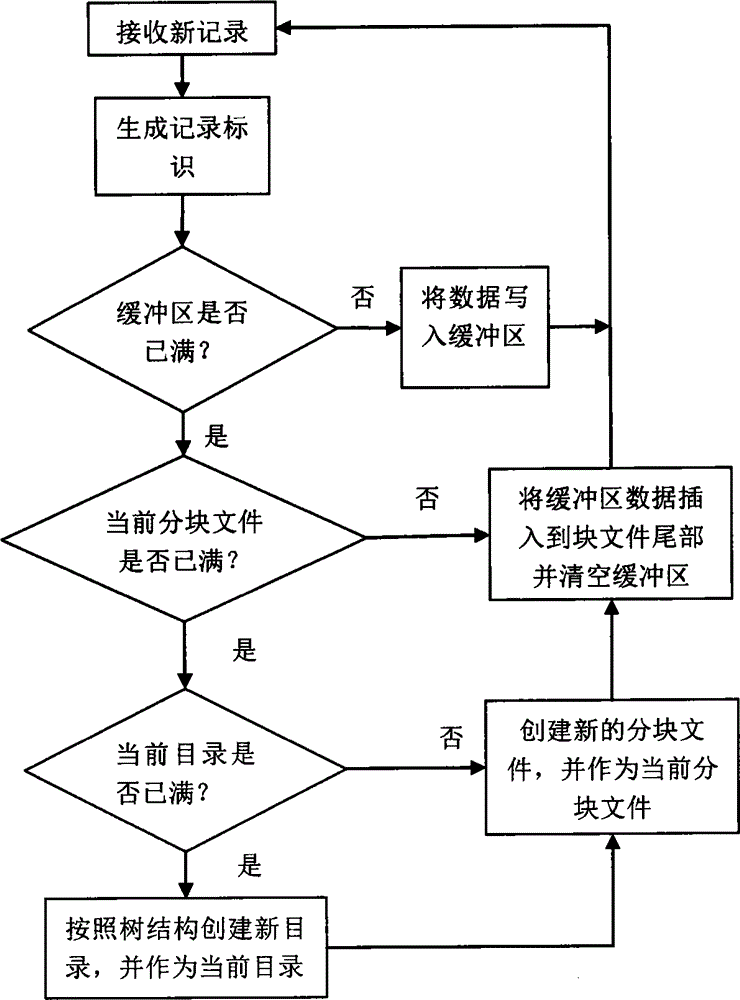

[0062] This embodiment describes the process of the data caching stage, shown in Figure 4, which includes the following steps:

[0063] Step 401: Receive a new record;

[0064] Step 402: Generate a record identifier for the new record;

[0065] Step 403: Determine whether the memory buffer is full, that is, whether its used size plus the size of the new record exceeds the configured maximum size of the memory buffer. If not, go to step 405; otherwise, go to step 404;

[0066] Step 404: Obtain the current block file cFile, write the data in the memory buffer to cFile, clear the memory buffer, set the pointer buffered to null, and then proceed to step 405;

[0067] Step 405: Temporarily store the record in the memory buffer, and update the pointer buffered to point to the end address of the buffered data.
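Steps 401 to 405 can be sketched as a single `receive` call. This is a minimal illustration under stated assumptions: record identifiers are sequential integers, records are raw bytes, the current block file cFile is one path supplied by the caller, and the pointer buffered is modeled as an end offset that is `None` when the buffer is empty. The class `CacheStage` and the file name in the demo are hypothetical.

```python
import itertools
import os
import tempfile


class CacheStage:
    """Sketch of steps 401-405: buffer small records, flush to cFile when full."""

    def __init__(self, c_file: str, max_buffer: int) -> None:
        self.c_file = c_file  # current block file cFile
        self.max_buffer = max_buffer  # configured maximum buffer size
        self.buffer = bytearray()
        self.buffered = None  # end offset of buffered data; None means "null"
        self._ids = itertools.count()

    def receive(self, record: bytes) -> int:
        # Step 401: a new record arrives (the call itself).
        if len(record) > self.max_buffer:
            raise ValueError("record larger than the memory buffer")
        record_id = next(self._ids)  # Step 402: generate a record identifier
        used = self.buffered or 0
        # Step 403: would used size + record size exceed the maximum?
        if used + len(record) > self.max_buffer:
            self._flush()  # Step 404
        # Step 405: stage the record and move `buffered` to the data's end.
        self.buffer += record
        self.buffered = len(self.buffer)
        return record_id

    def _flush(self) -> None:
        # Step 404: append the buffer to cFile, clear it, reset the pointer.
        if self.buffer:
            with open(self.c_file, "ab") as f:
                f.write(self.buffer)
        self.buffer.clear()
        self.buffered = None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        c_file = os.path.join(tmp, "block_0.dat")  # hypothetical cFile name
        stage = CacheStage(c_file, max_buffer=8)
        for rec in (b"abcd", b"efgh", b"ij"):
            stage.receive(rec)
        with open(c_file, "rb") as f:
            print(f.read())  # b'abcdefgh': the third record is still buffered
```

For brevity this sketch grows a `bytearray` instead of using the fixed, pre-allocated cache area described in Embodiment 2, and it does not roll cFile over when the block file reaches fSize.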
