Method of signalling motion information for efficient scalable video compression

A technology in the fields of motion information and video compression, applied to color television with bandwidth reduction, television systems, instruments, etc. It can address the problems of ineffective video browsing solutions, compression bit rates that are limited to below the capacity of the relevant network connections, and still-disappointing video quality and duration.


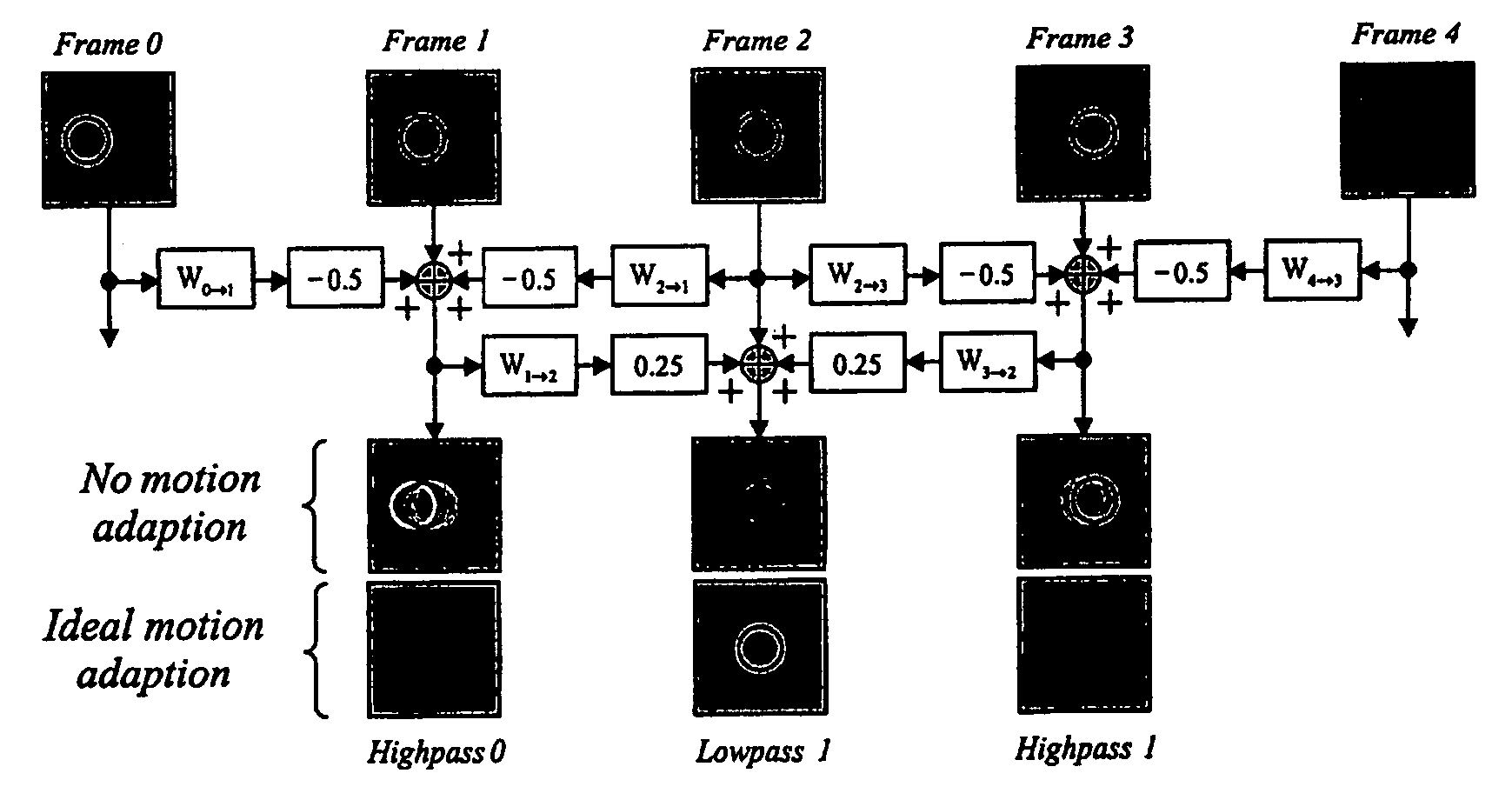

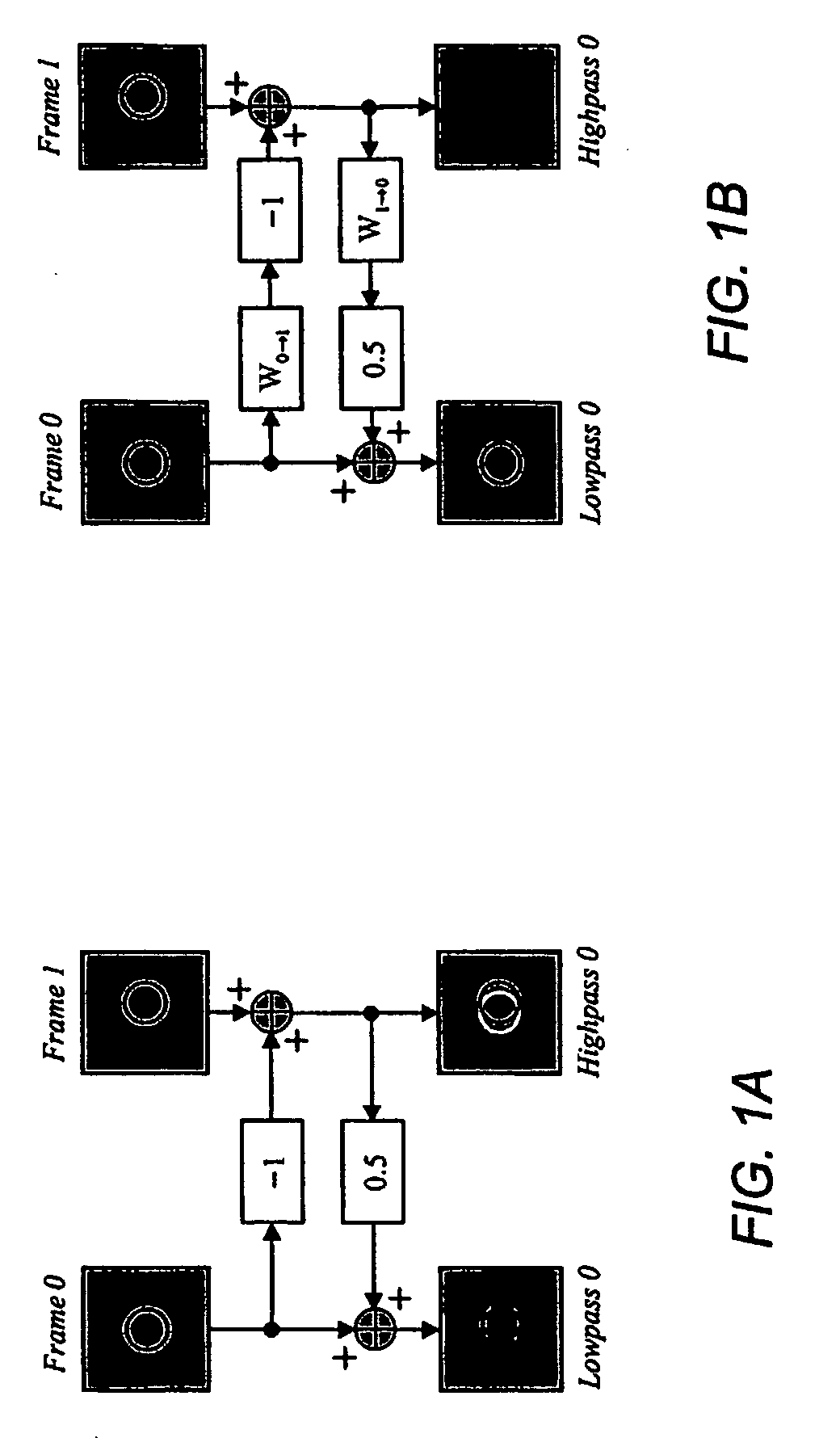

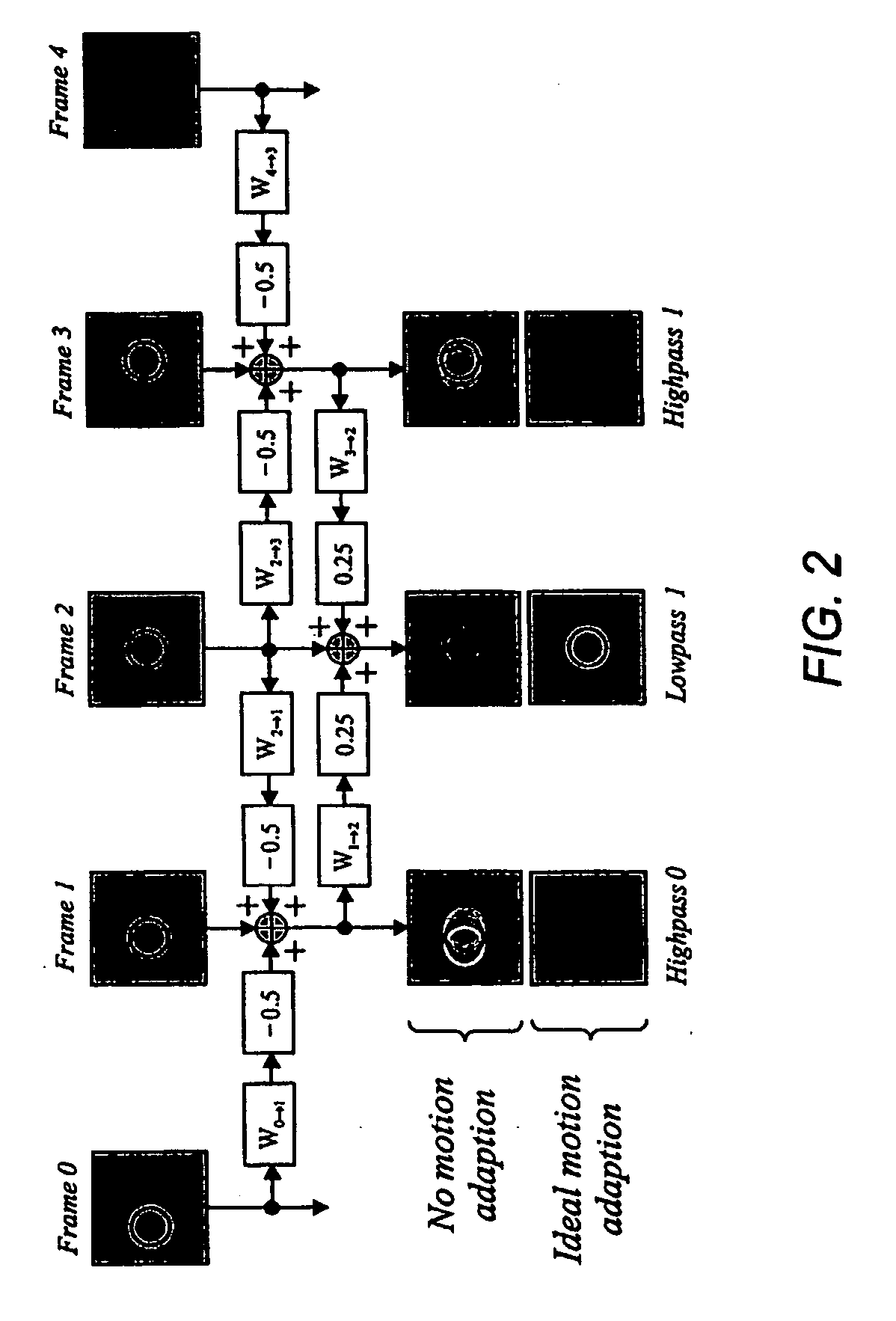

Embodiment Construction

1st Aspect: Reciprocal Motion Fields

[0059] A natural strategy for estimating the reciprocal motion fields, $W_{2k\to 2k+1}$ and $W_{2k+1\to 2k}$, would be to determine the parameters of $W_{2k\to 2k+1}$ that minimise some measure (e.g., energy) of the mapping residual $x_{2k+1}-W_{2k\to 2k+1}(x_{2k})$, and separately to determine the parameters of $W_{2k+1\to 2k}$ that minimise some measure of its residual signal, $x_{2k}-W_{2k+1\to 2k}(x_{2k+1})$. In general, such a procedure yields parameters for $W_{2k\to 2k+1}$ that cannot be deduced from those for $W_{2k+1\to 2k}$, and vice versa, so both sets of parameters must be sent to the decoder.
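The independent estimation strategy described in [0059] can be sketched as follows. This is a minimal illustration under assumptions not taken from the source: frames are 1-D lists of samples, the motion model is a single global integer shift with edge replication, the measure is residual energy (sum of squares), and the function names (`warp_shift`, `residual_energy`, `estimate_shift`, `estimate_independently`) are hypothetical. Each direction is searched on its own, so the sketch returns two separate parameter sets.

```python
from typing import List, Sequence, Tuple


def warp_shift(frame: Sequence[float], d: int) -> List[float]:
    """Hypothetical motion model W: translate a 1-D frame by d samples,
    replicating edge samples where the shifted index falls outside the frame."""
    n = len(frame)
    return [frame[min(max(i - d, 0), n - 1)] for i in range(n)]


def residual_energy(target: Sequence[float], prediction: Sequence[float]) -> float:
    """Energy (sum of squares) of the mapping residual target - prediction."""
    return sum((t - p) ** 2 for t, p in zip(target, prediction))


def estimate_shift(src: Sequence[float], dst: Sequence[float], search: int = 4) -> int:
    """Exhaustively search d in [-search, search] for the shift minimising
    the residual energy of dst - W_d(src); ties go to the smallest d."""
    return min(
        range(-search, search + 1),
        key=lambda d: residual_energy(dst, warp_shift(src, d)),
    )


def estimate_independently(
    x_even: Sequence[float], x_odd: Sequence[float], search: int = 4
) -> Tuple[int, int]:
    """Estimate W_{2k->2k+1} and W_{2k+1->2k} separately.

    Nothing ties the two results together, so in general both
    parameters would have to be sent to the decoder."""
    forward = estimate_shift(x_even, x_odd, search)   # parameters of W_{2k->2k+1}
    backward = estimate_shift(x_odd, x_even, search)  # parameters of W_{2k+1->2k}
    return forward, backward


if __name__ == "__main__":
    x_even = [0, 0, 1, 5, 9, 5, 1, 0, 0, 0]
    x_odd = warp_shift(x_even, 2)  # synthetic next frame: content moved right by 2
    print(estimate_independently(x_even, x_odd))  # prints (2, -2)
```

On this noise-free synthetic pair the two estimates happen to be exact negatives of each other, but with noise, occlusions, or border effects the two searches can settle on parameters that are not related in any simple way, which is the situation [0059] describes.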

[0060] It turns out that only one of the two motion fields needs to be estimated directly. The other can then be deduced by “inverting” the motion field that was actually estimated. Both the compressor and the decompressor may perform this inversion, so only one motion field needs to be transmitted.
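The inversion idea in [0060] can be illustrated with a small sketch. The source does not say how the inversion is performed; the fixed-point iteration below is a commonly used technique for inverting smooth dense displacement fields, swapped in here as an assumption. The sketch also assumes 1-D frames, a forward field `u` sampled on the grid of frame 2k (a sample at position p moves to p + u[p] in frame 2k+1), linear interpolation with edge clamping, and hypothetical function names (`interp`, `invert_motion_field`). It converges only when the field is smooth enough for the iteration to be a contraction, and it makes no attempt to handle occlusions.

```python
from typing import List, Sequence


def interp(field: Sequence[float], pos: float) -> float:
    """Linearly interpolate a 1-D sampled field (at least 2 samples) at a real
    position, clamping the position to the field's extent."""
    n = len(field)
    pos = min(max(pos, 0.0), n - 1.0)
    i = min(int(pos), n - 2)
    t = pos - i
    return (1.0 - t) * field[i] + t * field[i + 1]


def invert_motion_field(u: Sequence[float], iterations: int = 30) -> List[float]:
    """Approximate the inverse field v on the grid of frame 2k+1, i.e. the v
    satisfying v[q] = -u(q + v[q]), by fixed-point iteration (an assumed method)."""
    v = [-float(x) for x in u]  # initial guess: negate the forward field
    for _ in range(iterations):
        v = [-interp(u, q + v[q]) for q in range(len(u))]
    return v


if __name__ == "__main__":
    # Hypothetical smooth forward field (a mild linear "zoom"): u(p) = 0.1 p.
    u = [0.1 * p for p in range(10)]
    v = invert_motion_field(u)
    # Round-trip check: following v back to frame 2k, then u forward again,
    # should land (nearly) on the starting position q.
    for q in range(len(u)):
        p = q + v[q]
        print(q, round(p + interp(u, p), 6))
```

Because the compressor and decompressor can run the same deterministic routine on the transmitted field `u`, the decoder can rebuild `v` without receiving it. For this linear field the exact inverse is v(q) = -q/11, and the iteration reaches it to within floating-point precision.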

[0061] True scene motion fields cannot generally be inverted, due to the presence of occlusions and ...
