Data allocation in a distributed storage system

A distributed storage system and data allocation technology, applied in the field of data storage, addressing the problem of ensuring that the failure of a device has only a minimal effect on the performance of the distributed storage system.


Embodiment Construction

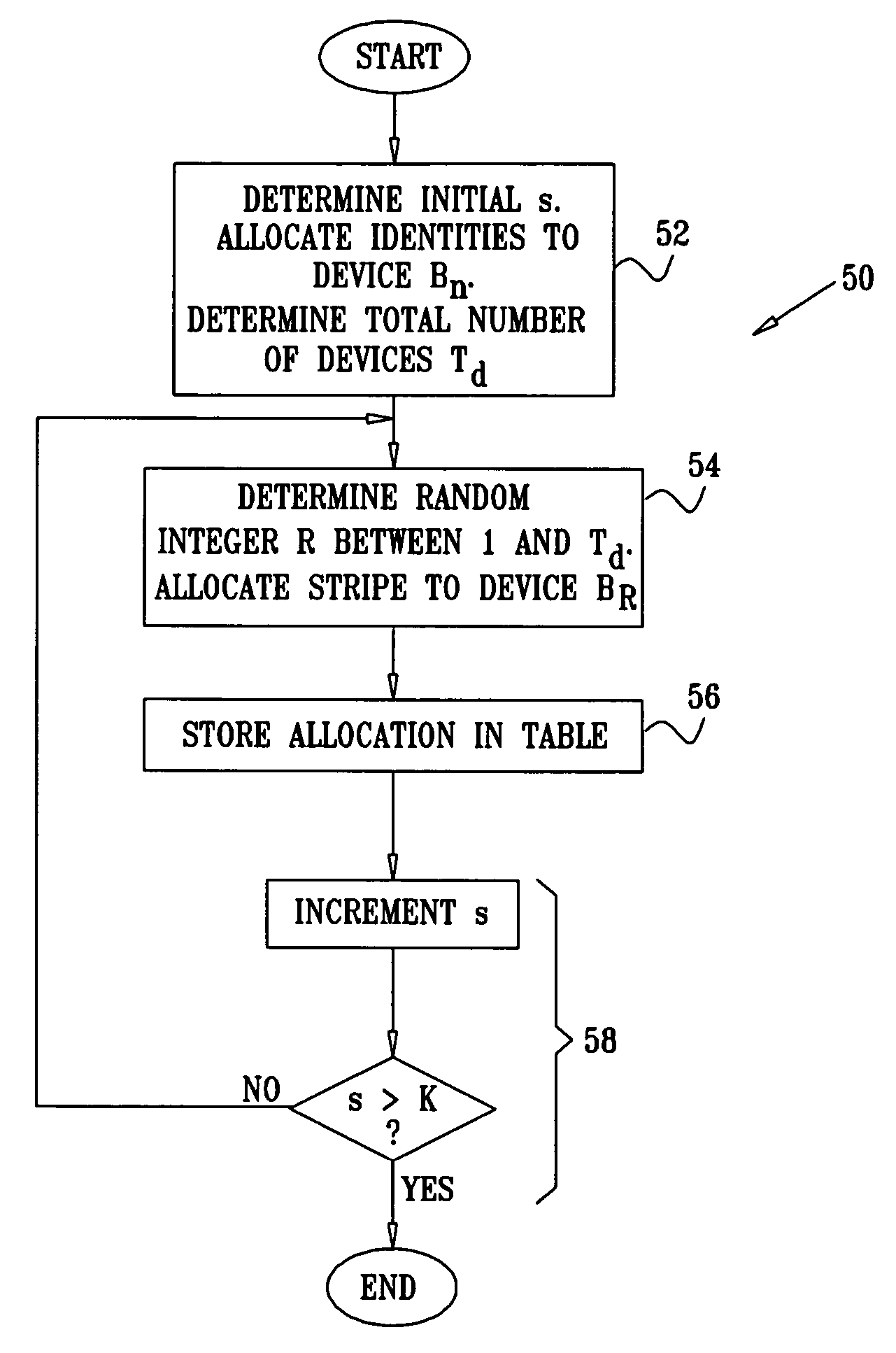

[0077] Reference is now made to FIG. 1, which illustrates the distribution of data addresses among data storage devices, according to a preferred embodiment of the present invention. A storage system 12 comprises a plurality of separate storage devices 14, 16, 18, 20, and 22, also referred to herein respectively as storage devices B1, B2, B3, B4, and B5, and collectively as devices Bn. It will be understood that system 12 may comprise substantially any number of physically separate devices, and that the five devices Bn used herein are by way of example. Devices Bn comprise any components wherein data 34, also termed herein data D, may be stored, processed, and/or serviced. Examples of devices Bn include random access memory (RAM), which has a fast access time and is typically used as a cache; disks, which typically have a slow access time; or any combination of such components. A host 24 communicates with system 12 in order to read data from, or write data to, the system. A central...
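The paragraph above introduces devices B1 to B5, and the summary states the goal that a device failure should have only a minimal effect on the system. The excerpt is truncated before the patent's own allocation scheme is described, so the sketch below does not reproduce it. It instead uses consistent hashing, a well-known technique swapped in here purely for illustration, to show one way data addresses might be spread over devices Bn so that removing one device reassigns only the addresses that device held. The class name `ConsistentHashRing`, the `points_per_device` parameter, and the choice of SHA-256 are all hypothetical.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Map a string to a 64-bit integer position on the hash ring."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


class ConsistentHashRing:
    """Hypothetical allocator assigning data addresses to devices Bn.

    Illustrative consistent-hashing sketch only; it is NOT the scheme
    claimed in the patent, which the excerpt does not describe.
    """

    def __init__(self, devices, points_per_device=100):
        self.points_per_device = points_per_device
        self._positions = []  # sorted ring positions
        self._owners = {}     # ring position -> device name
        for device in devices:
            self.add_device(device)

    def add_device(self, device):
        # Place several "virtual" points per device to even out the load.
        for i in range(self.points_per_device):
            pos = _hash(f"{device}#{i}")
            self._owners[pos] = device
            bisect.insort(self._positions, pos)

    def remove_device(self, device):
        # Drop only the failed device's points; all other points stay put,
        # so addresses owned by surviving devices keep their owner.
        self._positions = [p for p in self._positions if self._owners[p] != device]
        self._owners = {p: d for p, d in self._owners.items() if d != device}

    def device_for(self, address: int) -> str:
        # An address belongs to the first device point clockwise from its hash.
        pos = _hash(str(address))
        idx = bisect.bisect_right(self._positions, pos) % len(self._positions)
        return self._owners[self._positions[idx]]
```

In use, one would build the ring over `["B1", "B2", "B3", "B4", "B5"]`, record `device_for(a)` for a range of addresses, call `remove_device("B3")`, and observe that only the addresses previously on B3 change owner; the other four devices keep all of their data, which is the kind of limited disruption the summary describes.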
