Asynchronous processing method and device based on a neural network accelerator

A neural network and asynchronous processing technology, applied in the field of deep learning, that addresses the problems of complex deep learning network design, bloated callback code, and poor flexibility. It speeds up asynchronous operation, reduces CPU bandwidth usage, and improves utilization.

Examples

Embodiment 1

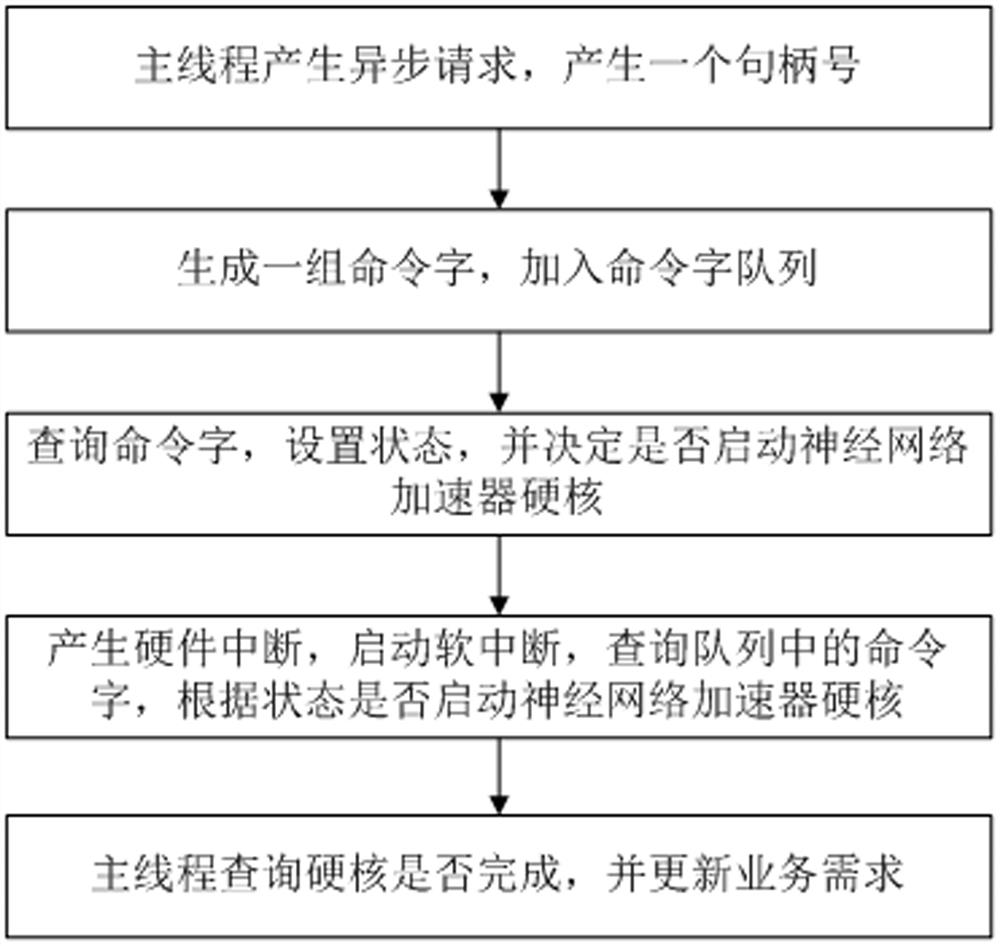

[0057] An asynchronous method based on a neural network accelerator, provided by an embodiment of the present application, is applied to a face capture machine in the security field. The method includes the following steps:

[0058] Test and evaluate the performance of the hard core and the soft core, then choose one of the following solutions, relying on the flexibility of the present invention:

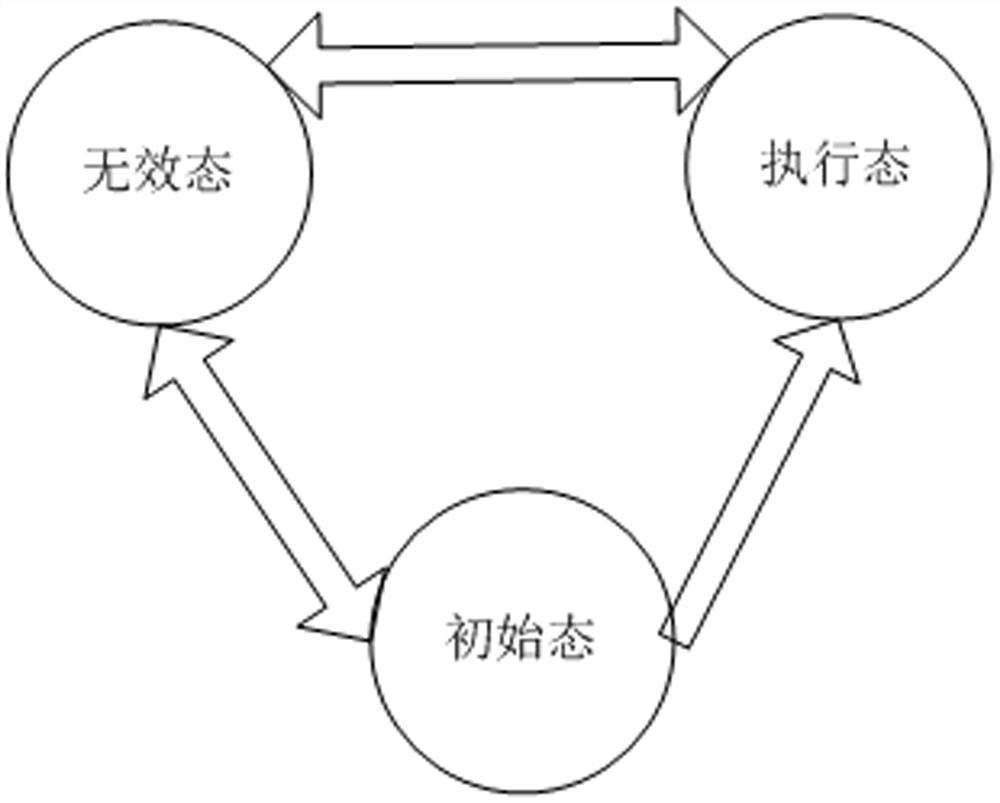

[0059] The first solution includes: for the facial feature network, when the hard-core execution performance of the neural network accelerator is close to the soft-core CPU performance for post-processing and feature matching, the main thread can issue an asynchronous request, perform post-processing and feature matching, and then query the hard core for completion;

[0060] The second solution includes: for the facial feature network, when the hard-core execution performance of the neural network accelerator is compared with the soft-core CPU performance for post-processing and feature matching, the CPU execution time is...
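The first solution can be pictured with a minimal Python sketch. Everything in it is an illustrative assumption rather than the patent's implementation: `run_on_hard_core` is a hypothetical stand-in for accelerator inference, `match_features` is a hypothetical nearest-neighbor matcher, and a single-worker thread pool plays the role of the hard core. The sketch also assumes that the feature matching done while the hard core is busy belongs to the previous frame.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def run_on_hard_core(frame):
    """Hypothetical stand-in for accelerator inference; returns a fake feature vector."""
    time.sleep(0.01)  # pretend the accelerator is busy
    return [float(frame), float(frame) + 1.0]


def match_features(features, gallery):
    """Soft-core post-processing: index of the nearest gallery entry (squared L2)."""
    def dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(gallery)), key=lambda i: dist(features, gallery[i]))


def process_stream(frames, gallery):
    matches = []
    previous_features = None
    # One worker thread models the single hard core (an assumption of this sketch).
    with ThreadPoolExecutor(max_workers=1) as hard_core:
        for frame in frames:
            # 1. Asynchronous request: returns immediately.
            request = hard_core.submit(run_on_hard_core, frame)
            # 2. While the hard core runs, match the previous frame on the soft core.
            if previous_features is not None:
                matches.append(match_features(previous_features, gallery))
            # 3. Query the hard core until it reports completion.
            while not request.done():
                time.sleep(0.001)
            previous_features = request.result()
        # Match the last frame, which has no successor to overlap with.
        if previous_features is not None:
            matches.append(match_features(previous_features, gallery))
    return matches
```

When the two stages take similar time, as the first solution assumes, the polling loop in step 3 should exit almost immediately, so neither core spends long waiting on the other.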

Embodiment 2

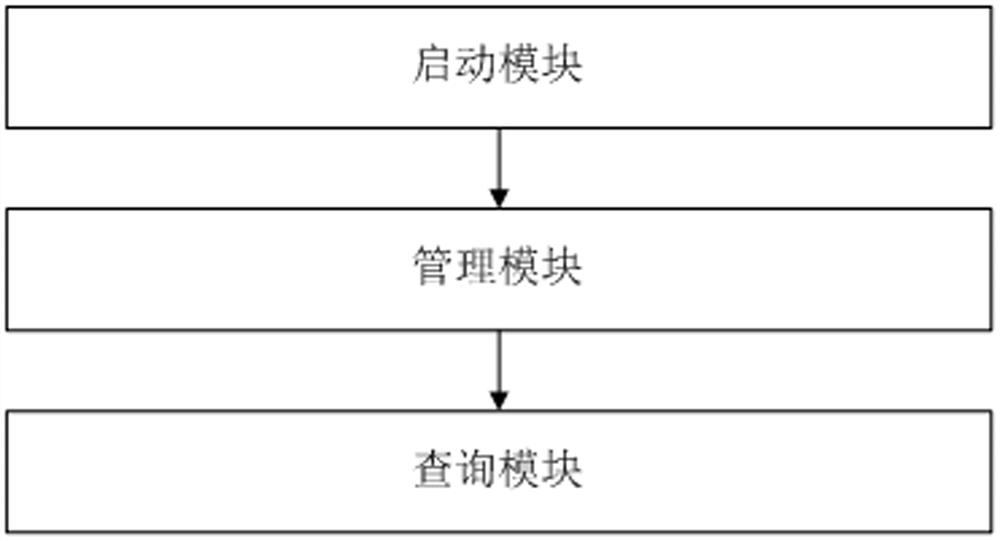

[0069] An asynchronous method based on a neural network accelerator, provided by an embodiment of this application, is implemented as Embodiment 2 of the present invention in cooperation with the hardware architecture shown in Figure 5. The hard core refers to the NPU (network processing unit) in Figure 5, and the soft core refers to the CPU (central processing unit);

[0070] The shared queue resides in the DDR controller, so that both the soft core and the hard core can access it through the bus;

[0071] The main thread runs on the CPU soft core, sends asynchronous requests, and starts the hard core. While the hard core executes, the soft core processes other services concurrently, achieving a parallel effect.
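The interaction in paragraphs [0070] and [0071] can be modeled in software as a rough sketch. The names here are hypothetical and nothing below comes from the patent's own code: a Python thread stands in for the NPU hard core, `queue.Queue` objects stand in for the shared queue in DDR, and `sum` is a placeholder for network inference. Splitting the shared queue into separate request and completion queues is an assumption made only to keep the sketch simple.

```python
import queue
import threading


def hard_core_worker(requests, completions):
    """Stand-in for the NPU: serve requests until a None sentinel arrives."""
    while True:
        job = requests.get()
        if job is None:
            break
        job_id, data = job
        completions.put((job_id, sum(data)))  # placeholder for network inference


def main_thread(jobs):
    requests = queue.Queue()     # stand-in for the shared queue (request side)
    completions = queue.Queue()  # assumed completion side of the shared queue
    npu = threading.Thread(target=hard_core_worker, args=(requests, completions))
    npu.start()  # start the hard core

    # Send asynchronous requests; put() returns without waiting for execution.
    for job_id, data in enumerate(jobs):
        requests.put((job_id, data))

    # While the hard core executes, the soft core handles other services.
    other_work = [len(data) for data in jobs]

    requests.put(None)  # tell the hard core no more requests are coming
    npu.join()

    # Collect completions; safe to drain because the worker has finished.
    results = {}
    while not completions.empty():
        job_id, value = completions.get()
        results[job_id] = value
    return other_work, results
```

For example, `main_thread([[1, 2, 3], [4, 5]])` returns the soft core's own results alongside a dictionary of hard-core results keyed by request number.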

[0072] In particular, the processes described above with reference to the flowcharts may be implemented as computer software programs in accordance with the disclosed embodiments of the present invention. For example, embodiments ...
