Dynamic resource scheduling method based on analysis technology and data flow information on heterogeneous many-cores

A dynamic resource scheduling method, applied in resource allocation, electrical digital data processing, program control design, and related fields. It addresses communication delays and low utilization of heterogeneous core resources, with the effect of reducing communication delay, improving parallelism and computational efficiency, and balancing load.

Embodiment Construction

[0031] The present invention is based on the MGPUSim heterogeneous platform. A resource scheduling method is added to the platform to illustrate the purpose, advantages, and key technical features of the invention.

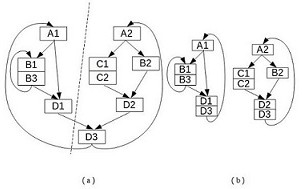

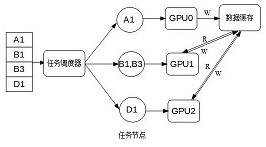

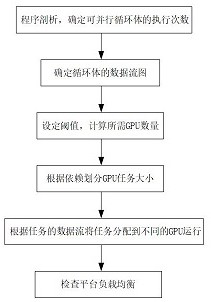

[0032] MGPUSim is a highly configurable heterogeneous platform. Users can freely choose a unified multi-GPU model or a discrete multi-GPU model and configure the number of GPUs. However, leaving the GPU count to the user can waste GPU resources, and tasks with data dependencies cannot be manually assigned to particular GPUs, so GPU utilization may be low and the load unbalanced. The steps of the resource scheduling method based on profiling technology and data flow information on the heterogeneous simulator MGPUSim are therefore as follows:

[0033] Step 1: Analyze the program to determine the number of executions of the parallel loop body.

[0034] Using offline analysis technology, select the loop ...
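As a rough illustration of the idea in Step 1, the following Python sketch counts how many times each loop body runs during a single offline profiling run. It is not the patented procedure, whose details are not given in the text available here. The names `profile_loop_counts`, `tick`, and `sample_program`, as well as the loop labels and iteration counts, are hypothetical and chosen only for the example.

```python
from collections import Counter
from typing import Callable

# Type of the hypothetical instrumentation hook: called once per loop-body execution.
Tick = Callable[[str], None]


def profile_loop_counts(program: Callable[[Tick], None]) -> Counter:
    """Run an instrumented program once (offline) and count loop-body executions.

    The program receives a `tick(label)` hook and calls it at the top of each
    loop body it wants profiled. This is an assumed instrumentation scheme.
    """
    counts: Counter = Counter()

    def tick(label: str) -> None:
        counts[label] += 1

    program(tick)
    return counts


def sample_program(tick: Tick) -> None:
    # Hypothetical workload: an outer loop of 4 iterations,
    # each running an inner (parallelizable) loop of 8 iterations.
    for _ in range(4):
        tick("outer")
        for _ in range(8):
            tick("inner")


counts = profile_loop_counts(sample_program)
for label, n in sorted(counts.items()):
    print(f"{label}: {n} executions")
```

Counts gathered this way could then serve as input to a later scheduling decision, for example when estimating how much work each parallel loop contributes.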
