Feature extraction method and device based on voice signal time domain and frequency domain, and echo cancellation method and device

A feature extraction and speech signal technology, applied in speech analysis, instruments, and related fields, that addresses the problems of insufficient feature information and poor echo cancellation performance by extracting more comprehensive feature information and thereby improving echo cancellation.

Examples

Embodiment 1

[0058] This embodiment of the present invention provides a feature extraction method based on the time domain and frequency domain of a speech signal, including:

[0059] S1: Calculate a time weight vector from the intermediate mapping feature, and expand the time weight vector to the same dimensions as the intermediate mapping feature, wherein the intermediate mapping feature is obtained by transforming the time-frequency feature of the speech signal through a multi-layer convolutional neural network, and the time weight vector contains the important time-frame information in the speech features;

[0060] S2: Compute the Hadamard (element-wise) product of the intermediate mapping feature and the expanded time weight vector to obtain a time-domain weighted mapping feature;

[0061] S3: Calculate a frequency weight vector from the time-domain weighted mapping feature, and expand the frequency weight vector to the same dimensions as the time-domain weighted mapping feature, wherein the frequency weight vector ...
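Steps S1 and S2 can be illustrated with a minimal sketch. The excerpt does not state how the time weight vector is computed, so the pooling used here (a mean over channels and frequency bins followed by a sigmoid) is purely an assumption for illustration, as are the function names `time_weights`, `expand_time`, and `hadamard` and the `[channel][time][frequency]` layout. Only the expand-then-multiply structure comes from the text.

```python
import math

def time_weights(feat):
    """One weight per time frame of a [C][T][F] feature.

    Assumption: mean over channels and frequency bins, then a sigmoid.
    The source does not specify the actual weighting network.
    """
    C, T, F = len(feat), len(feat[0]), len(feat[0][0])
    weights = []
    for t in range(T):
        mean = sum(feat[c][t][f] for c in range(C) for f in range(F)) / (C * F)
        weights.append(1.0 / (1.0 + math.exp(-mean)))
    return weights

def expand_time(weights, C, F):
    """Repeat the length-T weight vector to the feature's [C][T][F] shape (S1)."""
    return [[[w] * F for w in weights] for _ in range(C)]

def hadamard(a, b):
    """Element-wise product of two equally shaped [C][T][F] nested lists (S2)."""
    return [[[x * y for x, y in zip(ra, rb)]
             for ra, rb in zip(pa, pb)]
            for pa, pb in zip(a, b)]

# Toy intermediate mapping feature: C=1 channel, T=3 frames, F=2 bins.
feat = [[[1.0, 2.0], [0.0, 0.0], [-1.0, -2.0]]]
w = time_weights(feat)
weighted = hadamard(feat, expand_time(w, C=1, F=2))
```

Under the same assumptions, the frequency weighting of S3 would follow the same pattern with the roles of the time and frequency axes swapped, applied to `weighted` rather than to `feat`.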

Embodiment 2

[0075] Based on the same inventive concept, this embodiment provides a feature extraction device based on the time domain and frequency domain of speech signals. The device is an attention module, including:

[0076] A time-domain attention module, configured to calculate a time weight vector from the intermediate mapping feature and expand the time weight vector to the same dimensions as the intermediate mapping feature, wherein the intermediate mapping feature is obtained by transforming the time-frequency feature of the speech signal through a multi-layer convolutional neural network, and the time weight vector contains the important time-frame information in the speech features;

[0077] A time-domain weighting module, configured to perform a Hadamard product on the intermediate mapping feature and the time weight vector to obtain a time-domain weighted mapping feature;

[0078] A frequency-domain attention module, configured to calculate a frequency weight vector according to ...

Embodiment 3

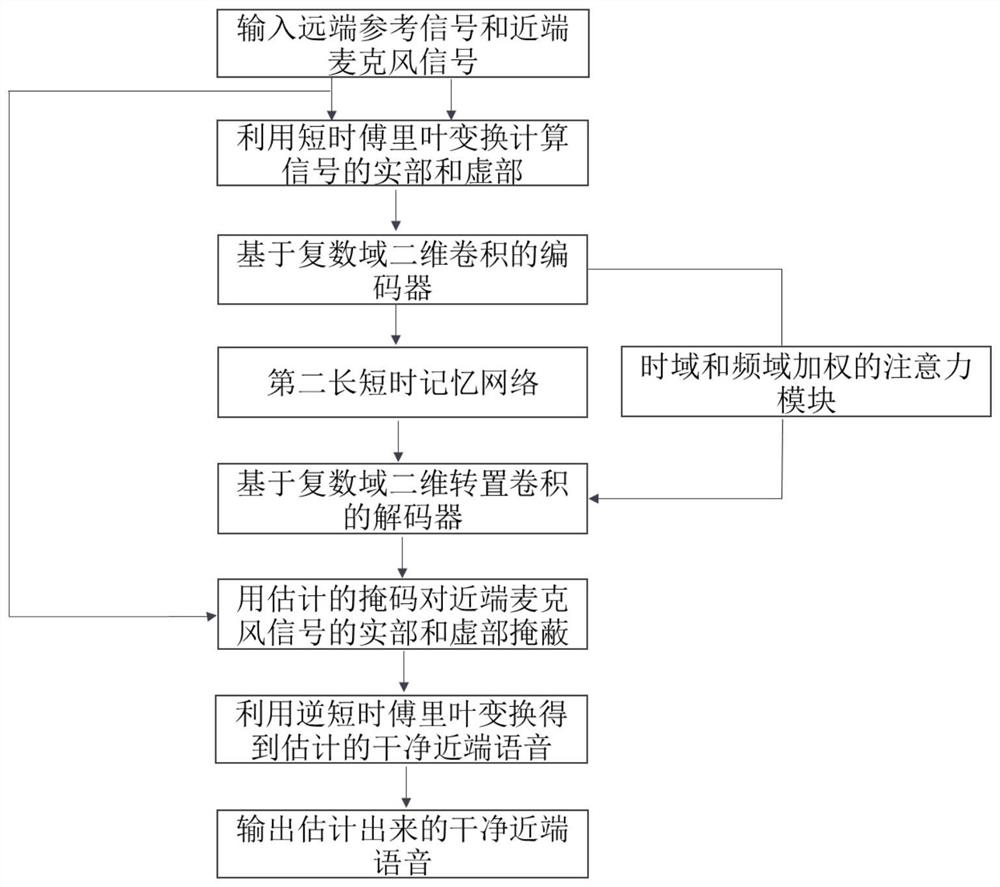

[0084] Based on the same inventive concept, this embodiment provides an echo cancellation method, including:

[0085] S101: Use the short-time Fourier transform to calculate the real and imaginary parts of the far-end reference signal and the near-end microphone signal, and stack these real and imaginary parts along the channel dimension to form initial acoustic features with four input channels;

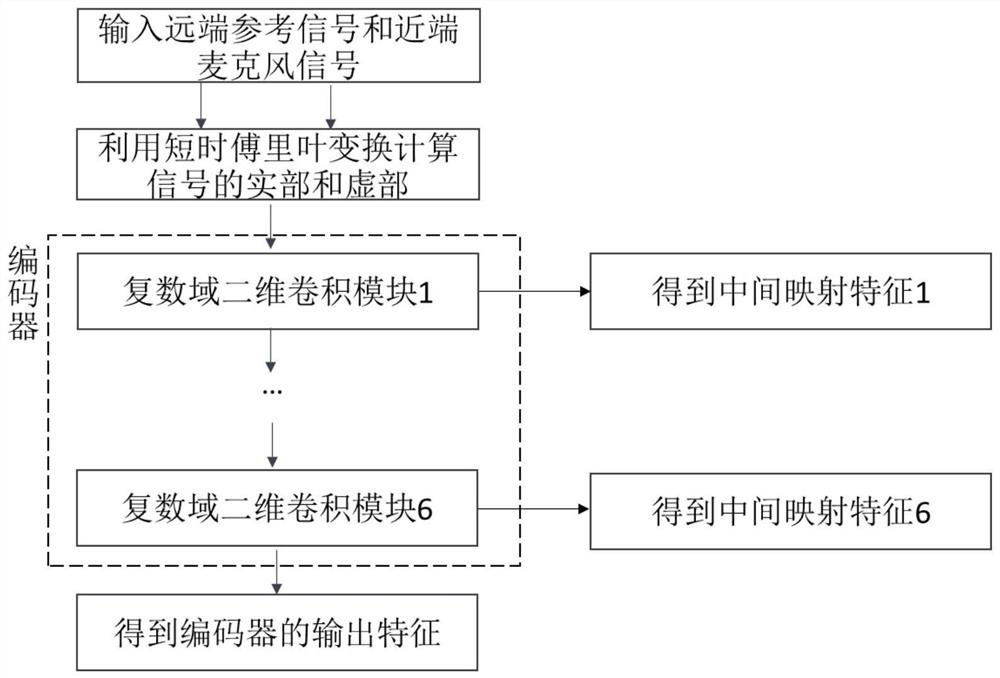

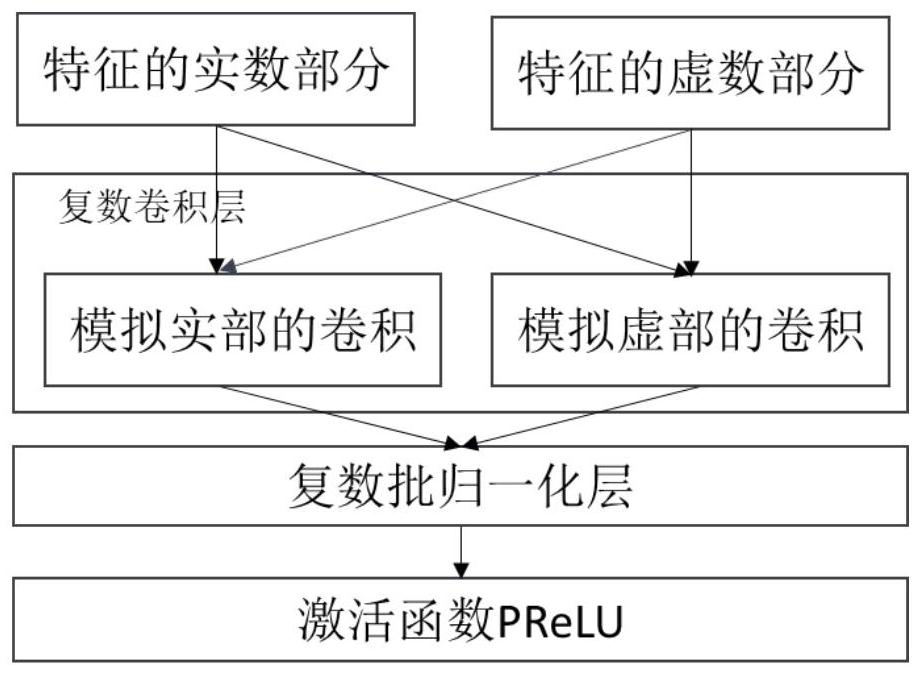

[0086] S102: Apply complex-domain two-dimensional convolution to the initial acoustic features to obtain intermediate mapping features;

[0087] S103: Perform feature extraction on the intermediate mapping features to obtain mapping features weighted in the time domain and frequency domain;

[0088] S104: Perform temporal feature learning on the intermediate mapping features to obtain time-modeled features;

[0089] S105: Obtain a complex number domain ratio mask according to th...
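The input preparation in step S101 can be sketched as follows. This is a minimal illustration, not the patent's implementation: the frame length, hop size, Hann window, channel order, and the names `stft` and `stack_real_imag` are all assumptions, and a naive DFT is used so the example needs only the standard library.

```python
import cmath
import math

def stft(signal, frame_len=8, hop=4):
    """Naive STFT: Hann-windowed frames with a one-sided DFT.

    Returns a [T][F] list of complex values, F = frame_len // 2 + 1.
    Frame length, hop, and window are illustrative assumptions.
    """
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len)
              for n in range(frame_len)]
    n_bins = frame_len // 2 + 1
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        seg = [signal[start + n] * window[n] for n in range(frame_len)]
        frames.append([
            sum(seg[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                for n in range(frame_len))
            for k in range(n_bins)
        ])
    return frames

def stack_real_imag(far_end, mic):
    """Stack real and imaginary parts of both spectra into 4 channels.

    Assumed channel order: far real, far imag, mic real, mic imag.
    Output layout is [channel][time][frequency].
    """
    far_spec, mic_spec = stft(far_end), stft(mic)
    return [
        [[z.real for z in frame] for frame in far_spec],
        [[z.imag for z in frame] for frame in far_spec],
        [[z.real for z in frame] for frame in mic_spec],
        [[z.imag for z in frame] for frame in mic_spec],
    ]

# Toy signals: a constant far-end reference and a silent microphone.
far_end = [1.0] * 16
mic = [0.0] * 16
features = stack_real_imag(far_end, mic)
```

The four-channel result is what a complex-domain convolution such as the one in S102 would consume, with the real and imaginary channels of each signal treated as one complex-valued input.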
