A training method for horizontally federated XGBoost decision trees

A training method and decision tree technology, applied in machine learning, digital transmission systems, instruments, etc. It addresses the problems of excessive leakage of data owners' original information, a high number of communication rounds, and ineffective data protection. Its effects are stronger privacy protection, high data protection strength, and high performance and practicality.


Embodiment Construction

[0047] Exemplary embodiments of the present invention will be described in more detail below with reference to the accompanying drawings. Although exemplary embodiments of the present invention are shown in the drawings, it should be understood that the invention may be embodied in various forms and should not be limited to the embodiments set forth herein. Rather, these embodiments are provided for a more thorough understanding of the present invention and to fully convey the scope of the present invention to those skilled in the art.

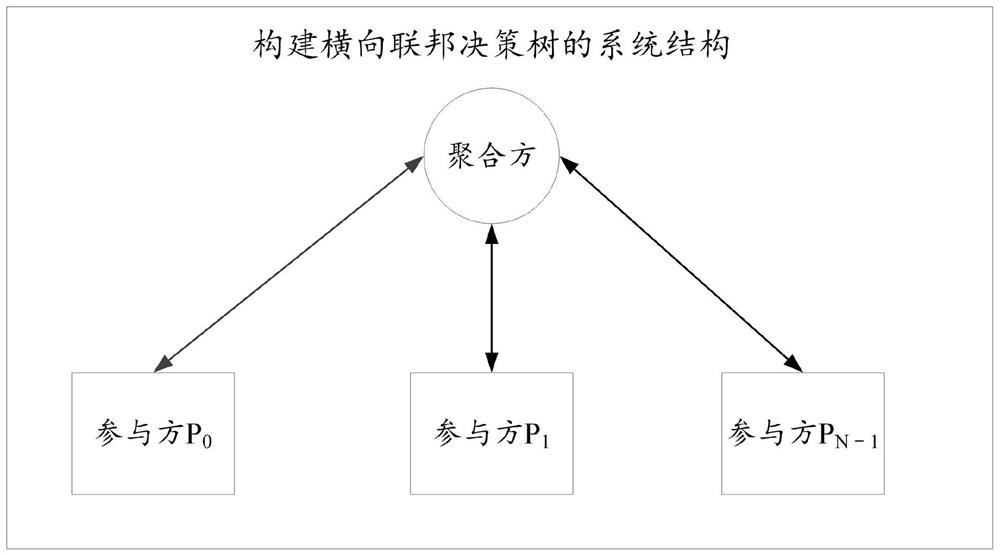

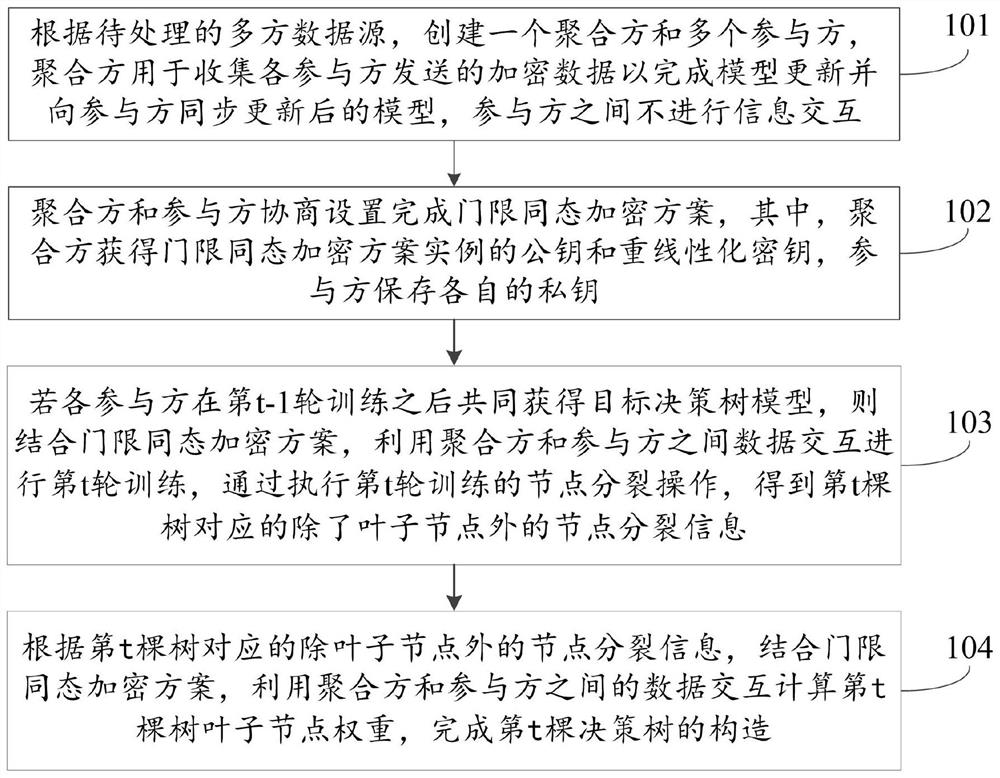

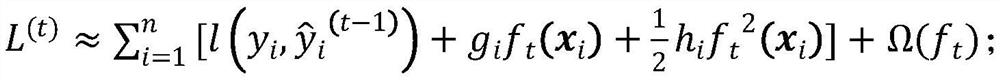

[0048] The horizontal federated decision tree constructed in the embodiment of the present invention means that multiple data owners jointly train the decision tree model while keeping each data owner's original data private. Each data owner holds a data set with the same feature types and the same label type. Assuming that the samples in the data sets do not overlap, the goal of the horizontal federated decision tr...
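The horizontal setting described in [0048] can be illustrated with a minimal sketch. It only shows how data is laid out across owners (identical feature and label schema, non-overlapping samples). It is not the patented training method. The feature names, the toy rows, and the helper `horizontal_partition` are hypothetical and chosen purely for illustration.

```python
import random


def horizontal_partition(sample_ids, num_owners, seed=0):
    """Split sample IDs into disjoint shards, one shard per data owner.

    Hypothetical helper: it only simulates the data layout of the
    horizontal setting, not any federated training step.
    """
    rng = random.Random(seed)
    ids = list(sample_ids)
    rng.shuffle(ids)
    # Striding over the shuffled list assigns each ID to exactly one owner.
    return [ids[i::num_owners] for i in range(num_owners)]


# Toy data set (assumed): every row has the same features and a label.
rows = {i: {"age": 20 + i, "income": 1000 * i, "label": i % 2} for i in range(10)}

shards = horizontal_partition(rows.keys(), num_owners=3)
owners = [{i: rows[i] for i in shard} for shard in shards]

# Property 1: every owner holds the same feature and label schema.
schemas = [{tuple(sorted(row)) for row in owner.values()} for owner in owners]
same_schema = all(len(s) == 1 and s == schemas[0] for s in schemas)

# Property 2: no sample appears at more than one owner.
all_ids = [i for owner in owners for i in owner]
disjoint = len(all_ids) == len(set(all_ids))

print("same schema:", same_schema)
print("disjoint samples:", disjoint)
```

In this layout each owner could in principle compute statistics on its own rows without revealing them; how those statistics are protected and combined is specified by the patent text, not by this sketch.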
