Distributed multi-level graph network training method and system

A multi-level network training technology, applied in neural learning methods, biological neural network models, instruments, and similar fields. It addresses high parameter-server load and the high algorithm complexity and low efficiency of decentralized training methods, and it reduces the complexity of reaching consensus, shortens training time, and improves training efficiency.

Examples

Embodiment 1

[0040] A distributed multi-level graph network training method, comprising:

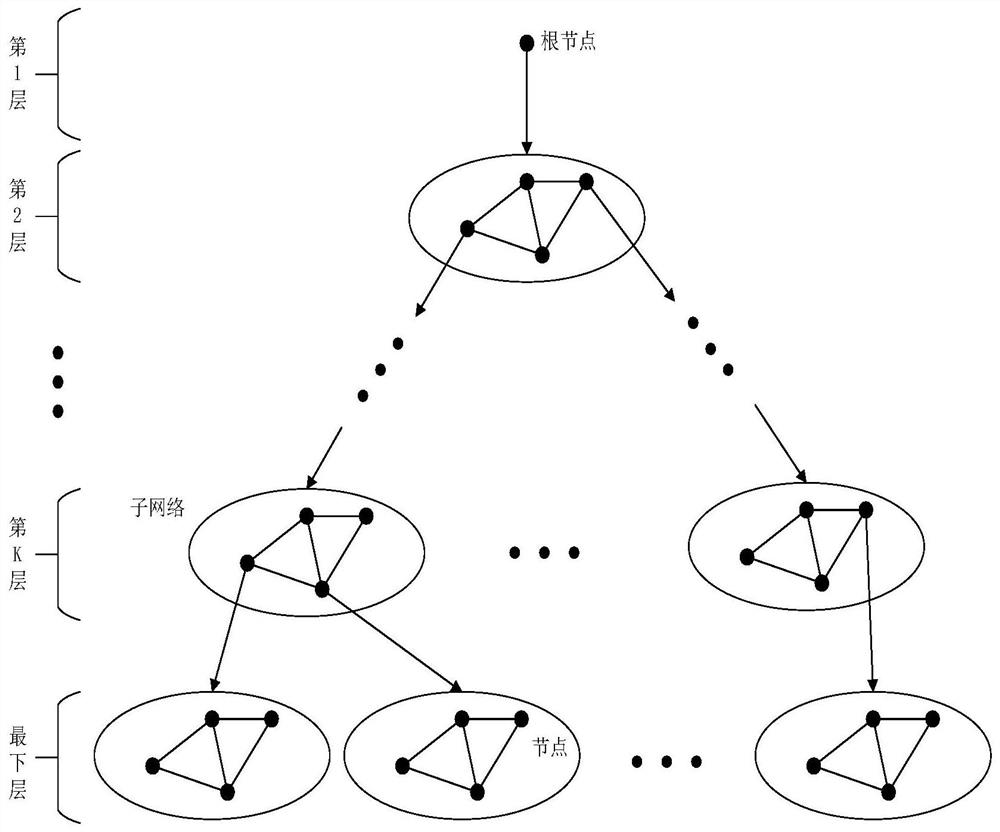

[0041] Step 1), using a graph segmentation algorithm, dividing the nodes of the graph into a multi-level network according to the strength of their connection relationships, where each upper-level network manages the lower-level network beneath it;

[0042] Step 2), performing parallel graph network calculation on the lowest-level network of the multi-level network obtained in step 1), and passing the result matrices to the upper-level management network;

[0043] Step 3), running an improved Paxos consensus algorithm on the upper-level management network from step 2) to share node data;

[0044] Step 4), performing information fusion on the shared result matrices within each sub-network of the layer that contains the upper-level management network from step 3), so that each sub-network of this layer obtains its own fused result matrix;

[0045] Step 5), uploading each fused result matrix in step 4) to ...
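Steps 1), 2) and 4) can be illustrated with a small two-level sketch. Everything in it is an assumption made for illustration, since the excerpt names neither the graph segmentation algorithm, the graph network calculation, nor the fusion rule: `partition_by_strength` groups nodes by thresholding edge weights, `local_result` runs one row-normalised propagation step and mean-pools it to a single row, and `fuse` takes a weighted average. Step 3) is replaced by a plain exchange in which every manager sees all peer results; no consensus protocol is run here.

```python
def partition_by_strength(adj, threshold):
    """Step 1 stand-in: connected components over edges with weight >= threshold."""
    n = len(adj)
    label = [None] * n
    groups = []
    for start in range(n):
        if label[start] is not None:
            continue
        label[start] = len(groups)
        stack, members = [start], []
        while stack:
            u = stack.pop()
            members.append(u)
            for v in range(n):
                if label[v] is None and adj[u][v] >= threshold:
                    label[v] = len(groups)
                    stack.append(v)
        groups.append(sorted(members))
    return groups


def local_result(adj, feats, nodes):
    """Step 2 stand-in: one row-normalised (A + I) X step inside a sub-network,
    mean-pooled to one row so that results from different sub-networks align."""
    dim = len(feats[0])
    pooled = [0.0] * dim
    for u in nodes:
        # Only edges inside the sub-network are used, plus a self-loop.
        weights = {v: adj[u][v] + (1.0 if v == u else 0.0) for v in nodes}
        total = sum(weights.values())
        for k in range(dim):
            pooled[k] += sum(w * feats[v][k] for v, w in weights.items()) / total
    return [x / len(nodes) for x in pooled]


def fuse(own, peers, alpha=0.5):
    """Step 4 stand-in: blend a manager's own result with the mean of its peers'."""
    if not peers:
        return list(own)
    mean_peer = [sum(col) / len(peers) for col in zip(*peers)]
    return [alpha * o + (1 - alpha) * p for o, p in zip(own, mean_peer)]


def run_two_level(adj, feats, threshold):
    groups = partition_by_strength(adj, threshold)
    results = [local_result(adj, feats, g) for g in groups]
    # Step 3 placeholder: every manager is simply handed all peer results.
    fused = [
        fuse(own, [r for j, r in enumerate(results) if j != i])
        for i, own in enumerate(results)
    ]
    return groups, fused


# Hypothetical 4-node graph: strong edges 0-1 and 2-3, weak edge 1-2.
adj = [
    [0.0, 0.9, 0.0, 0.0],
    [0.9, 0.0, 0.1, 0.0],
    [0.0, 0.1, 0.0, 0.8],
    [0.0, 0.0, 0.8, 0.0],
]
feats = [[1.0, 0.0], [3.0, 0.0], [0.0, 2.0], [0.0, 4.0]]
groups, fused = run_two_level(adj, feats, threshold=0.5)
```

With the threshold at 0.5 the weak edge between nodes 1 and 2 is cut, so the bottom layer splits into two sub-networks, each of which ends up with its own fused result.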

Embodiment 2

[0080] This embodiment provides a distributed multi-level graph network training system, including: a graph network training module and a graph network model generation module; wherein,

[0081] The graph network training module uses a graph segmentation algorithm to divide the graph data into a multi-level network, based on the strength of the connection relationships between the nodes of the graph data, in which the bottom layer is the original data; each layer includes multiple sub-networks, and the management node of each sub-network is located on the layer immediately above that sub-network; graph network calculation is performed on the bottom layer of the multi-level network, and the result matrix of each sub-network at the bottom layer is uploaded to the management node of that sub-network; each management node that receives a result matrix runs the improved Paxos consensus algorithm, so that the relevant nodes in the sub-network to which the ...
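For orientation, the sketch below shows classic single-decree Paxos, the baseline that management nodes could use to agree on one shared value such as a result-matrix identifier. It is not the improved variant referred to above, whose modifications the excerpt does not describe. The `Acceptor` class and `propose` function are hypothetical names, and the sketch runs in memory with no networking, message loss, or node failure.

```python
from dataclasses import dataclass
from typing import Any, List, Optional, Tuple


@dataclass
class Acceptor:
    promised: int = -1      # highest proposal number promised so far
    accepted_n: int = -1    # number of the accepted proposal, -1 if none
    accepted_v: Any = None  # value of the accepted proposal, if any

    def prepare(self, n: int) -> Optional[Tuple[int, Any]]:
        """Phase 1b: promise to ignore proposals numbered below n."""
        if n > self.promised:
            self.promised = n
            return (self.accepted_n, self.accepted_v)
        return None

    def accept(self, n: int, value: Any) -> bool:
        """Phase 2b: accept unless a higher-numbered promise was already made."""
        if n >= self.promised:
            self.promised = n
            self.accepted_n = n
            self.accepted_v = value
            return True
        return False


def propose(acceptors: List[Acceptor], n: int, value: Any) -> Optional[Any]:
    """Run one proposal round; return the chosen value, or None without a majority."""
    majority = len(acceptors) // 2 + 1
    promises = [p for p in (a.prepare(n) for a in acceptors) if p is not None]
    if len(promises) < majority:
        return None
    # A proposer must adopt the value of the highest-numbered accepted proposal.
    prev_n, prev_v = max(promises, key=lambda p: p[0])
    if prev_n >= 0:
        value = prev_v
    acks = sum(a.accept(n, value) for a in acceptors)
    return value if acks >= majority else None


# Three hypothetical management nodes acting as acceptors.
managers = [Acceptor() for _ in range(3)]
first = propose(managers, 1, "matrix-A")   # chosen
second = propose(managers, 2, "matrix-B")  # must adopt the already chosen value
stale = propose(managers, 1, "matrix-C")   # stale proposal number, rejected
```

Once a value is chosen, later proposals with higher numbers can only re-confirm it, which is the property that lets every management node end up with the same shared data.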
