Target recognition system and method based on time slice convolutional neural network

Convolutional neural network and time-slicing technology, applied to biological neural network models, neural architectures, character and pattern recognition, etc., to improve recognition efficiency and accuracy.



Examples

Embodiment 1

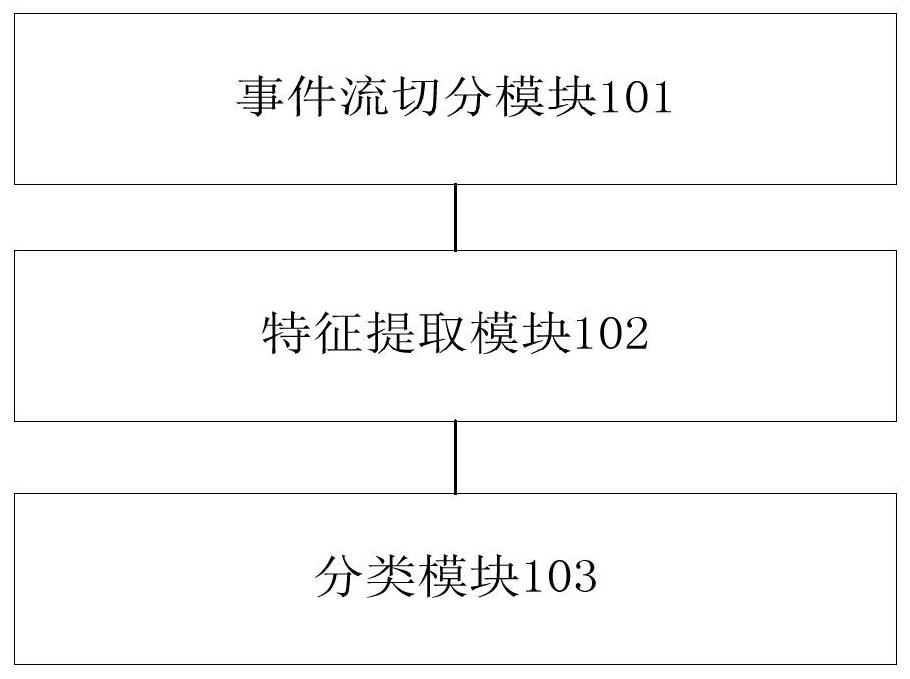

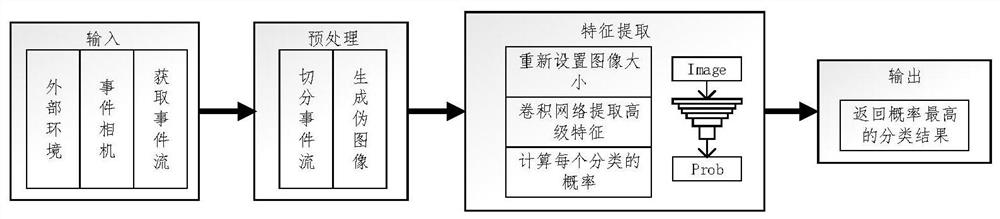

[0050] A target recognition system based on a time-sliced convolutional neural network, as shown in Figure 1, includes:

[0051] The event stream segmentation module 101 segments the event stream samples to form event sets, represents the event sets as pseudo images through an event representation method, stitches the pseudo images into a first feature map according to the input channels of the time-sliced convolutional neural network, and then re-assigns a different weight to each channel to obtain a second feature map;
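The segmentation and stitching performed by module 101 can be illustrated with a minimal sketch. The source does not name the event representation method, so the sketch assumes a simple event-count image; the event layout `(timestamp, x, y, polarity)`, the equal-duration slicing, and the function names `slice_events`, `to_pseudo_image`, and `first_feature_map` are all hypothetical choices made for illustration, not the patented implementation.

```python
from typing import List, Tuple

# Assumed event layout (not given in the source): (timestamp, x, y, polarity)
Event = Tuple[float, int, int, int]


def slice_events(events: List[Event], num_slices: int) -> List[List[Event]]:
    """Split a non-empty, time-ordered event stream into equal-duration slices."""
    t0, t1 = events[0][0], events[-1][0]
    span = (t1 - t0) or 1.0  # guard against a stream with a single timestamp
    slices: List[List[Event]] = [[] for _ in range(num_slices)]
    for ev in events:
        # Map the timestamp to a slice index; the last timestamp goes in the last slice.
        idx = min(int((ev[0] - t0) / span * num_slices), num_slices - 1)
        slices[idx].append(ev)
    return slices


def to_pseudo_image(events: List[Event], height: int, width: int) -> List[List[int]]:
    """Assumed representation: each pixel counts the events that fired at it."""
    img = [[0] * width for _ in range(height)]
    for _, x, y, _ in events:
        img[y][x] += 1
    return img


def first_feature_map(events: List[Event], num_slices: int,
                      height: int, width: int) -> List[List[List[int]]]:
    """Stack one pseudo image per time slice along the channel axis: [C][H][W]."""
    return [to_pseudo_image(s, height, width)
            for s in slice_events(events, num_slices)]
```

Here the number of slices plays the role of the network's input channel count, so a network with `C` input channels would receive `C` pseudo images stacked in time order.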

[0052] The feature extraction module 102 inputs the second feature map into the time-sliced convolutional neural network for feature extraction, producing a feature map of a preset specification;

[0053] The classification module 103 converts the feature map of the preset specification into a vector and takes the category with the highest probability as the target recognition result.
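The classification performed by module 103 can be sketched as follows. The sketch assumes that the feature map of the preset specification holds exactly one value per class (for example, shape `[num_classes][1][1]`), so that flattening yields one score per category, and that probabilities are obtained with a softmax; neither detail is stated in the source, and the names `flatten`, `softmax`, and `classify` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def flatten(feature_map: List[List[List[float]]]) -> List[float]:
    """Convert a [C][H][W] feature map into a flat vector."""
    return [v for channel in feature_map for row in channel for v in row]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(feature_map: List[List[List[float]]],
             class_names: Sequence[str]) -> Tuple[str, float]:
    """Return the category with the highest probability and that probability."""
    probs = softmax(flatten(feature_map))
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]
```

If the preset specification has more elements than there are categories, a learned fully connected layer would normally sit between the flattening and the softmax; that layer is omitted here.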

[0054] Speci...

Embodiment 2

[0073] A target recognition system based on a time-sliced convolutional neural network, again as shown in Figure 1, includes: an event stream segmentation module 101, which segments the event stream samples to form event sets, represents the event sets as pseudo images through an event representation method, splices the pseudo images into a first feature map according to the input channels of the time-sliced convolutional neural network, and then re-assigns a different weight to each channel to obtain a second feature map; a feature extraction module 102, which inputs the second feature map into the time-sliced convolutional neural network for feature extraction, producing a feature map of a preset specification; and a classification module 103, which converts the feature map of the preset specification into a vector and takes the category with the highest probability as the target recognition result.

[0074] Preferably, during execution,...

Embodiment 3

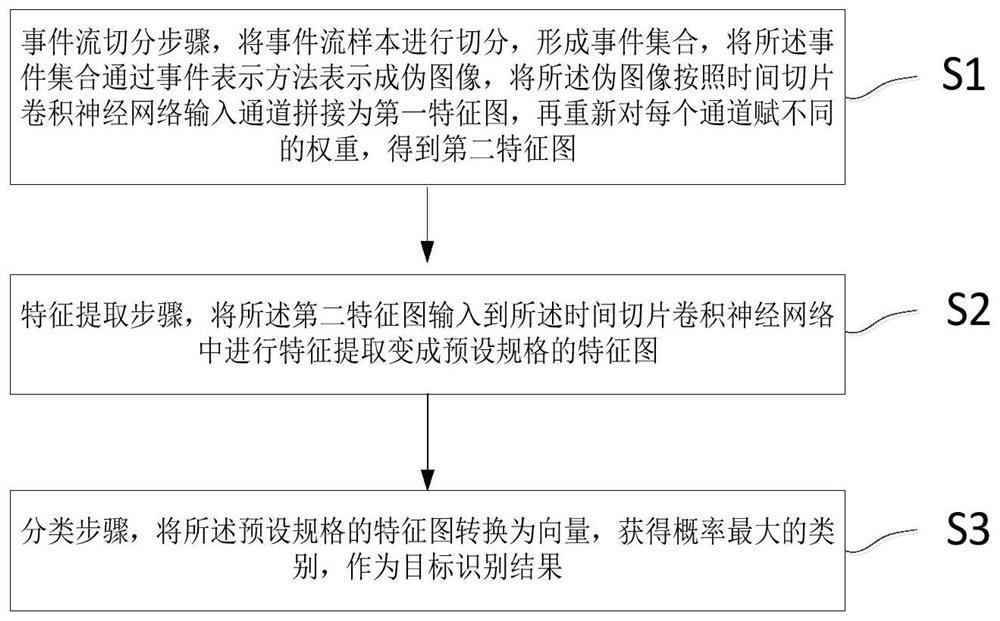

[0083] A target recognition method based on a time-sliced convolutional neural network, as shown in Figure 3, includes the following steps:

[0084] S1. An event stream segmentation step: segmenting the event stream samples to form event sets, representing the event sets as pseudo images through an event representation method, splicing the pseudo images into a first feature map according to the input channels of the time-sliced convolutional neural network, and then re-assigning a different weight to each channel to obtain a second feature map;

[0085] S2. A feature extraction step: inputting the second feature map into the time-sliced convolutional neural network for feature extraction, producing a feature map of a preset specification;

[0086] S3. A classification step: converting the feature map of the preset specification into a vector and taking the category with the highest probability as the target recognition result.
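The channel re-weighting at the end of step S1, which turns the first feature map into the second, can be sketched as a per-channel scaling. The source does not say how the weights are obtained; the sketch assumes, purely for illustration, that they come from a softmax over each channel's mean activation. In practice they could equally be learned parameters. The names `channel_weights` and `reweight` are hypothetical.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][column]


def channel_weights(feature_map: FeatureMap) -> List[float]:
    """Assumed weighting scheme: softmax over the mean activation of each channel."""
    means = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
             for ch in feature_map]
    m = max(means)
    exps = [math.exp(v - m) for v in means]
    total = sum(exps)
    return [e / total for e in exps]


def reweight(feature_map: FeatureMap, weights: List[float]) -> FeatureMap:
    """Scale every value in a channel by that channel's weight."""
    return [[[v * w for v in row] for row in ch]
            for ch, w in zip(feature_map, weights)]


# Usage: derive the second feature map from a two-channel first feature map.
first = [[[1.0, 3.0]], [[0.0, 0.0]]]
second = reweight(first, channel_weights(first))
```

Under this assumed scheme, channels with more event activity receive larger weights, so time slices that carry more information contribute more to the subsequent feature extraction.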

[0087] As a transformable implementa...

