Neural network compiling optimization method and system

The technology belongs to the field of neural networks and optimization methods. It is applied to an optimization method and system for neural network compilation, and to compilers and compilation processes. It can solve the problems of increased memory burden, increased system burden, and system execution efficiency that is not significantly improved.

Embodiment Construction

[0055] For a clearer understanding of the technical features, objects, and effects of the invention, specific embodiments of the present invention are described below with reference to the drawings, in which the same reference numerals denote components that are identical, or that are structurally similar but have the same function.

[0056] Herein, "schematic" means "serving as an example, instance, or illustration". Any illustration or embodiment described herein as "schematic" should not be interpreted as a more preferred or more advantageous technical solution. To keep the drawings simple, only the portions related to the present exemplary embodiment are shown schematically; they do not represent the actual structure or true proportions of the product.

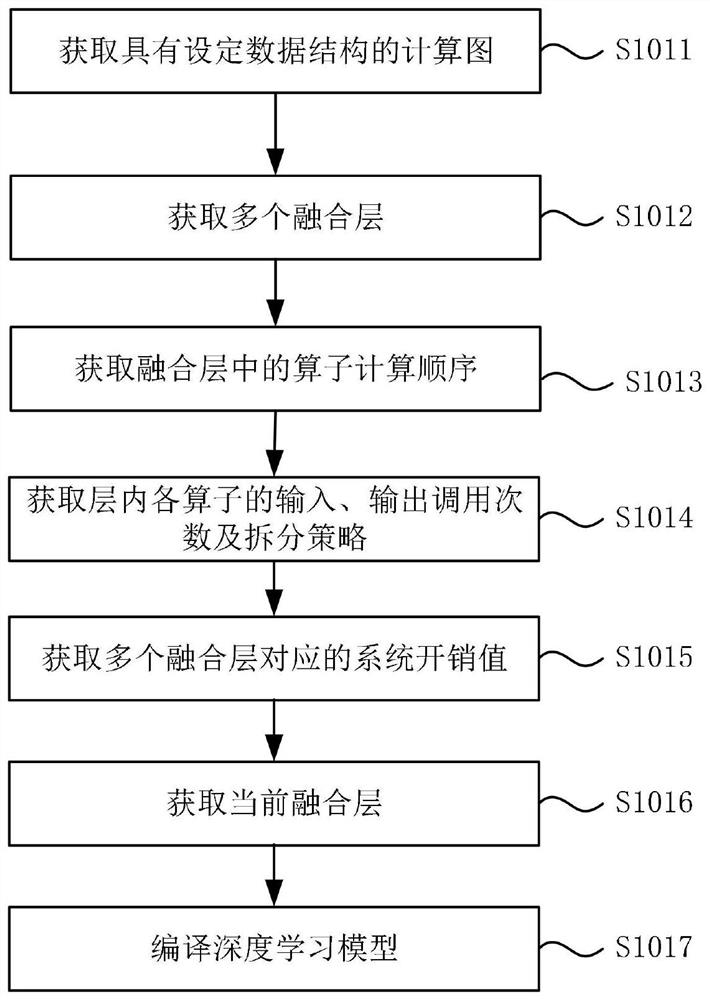

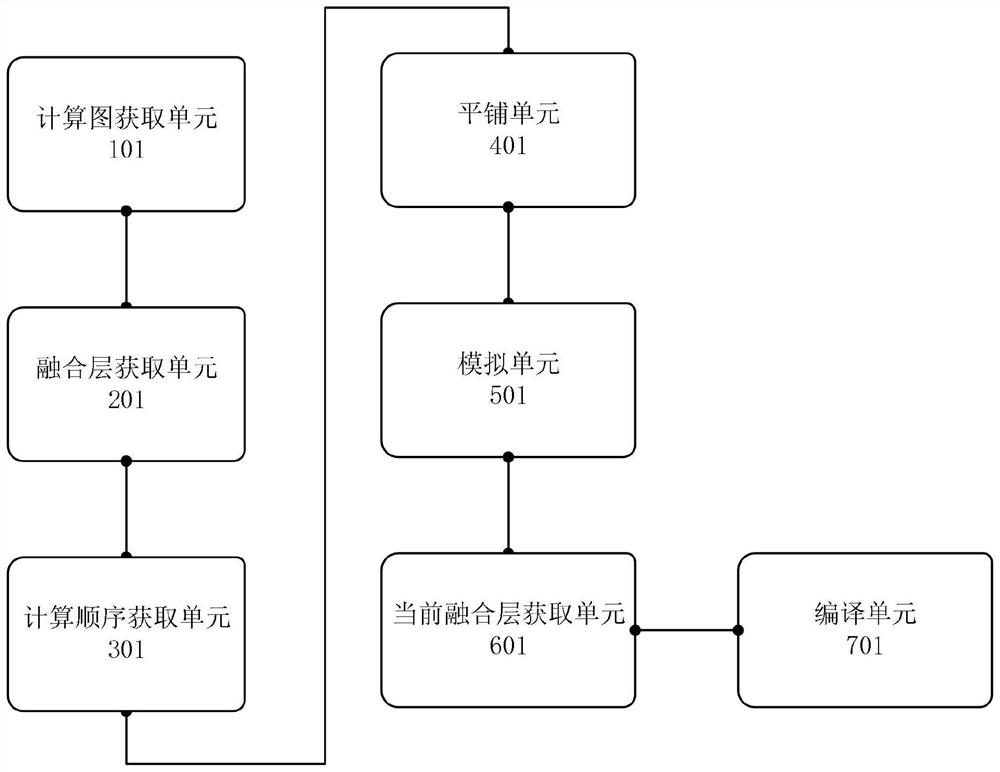

[0057] In one embodiment of the neural network compilation optimization method of the present invention, the method mainly comprises the following parts: IR conversion, an interlayer fusion scheme search module, a block and single-operator scheduling module, cost m...
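The excerpt names an interlayer fusion scheme search but is cut off before describing how it works. As a rough illustration only, the sketch below shows what such a search could look like for a simple chain of layers. It enumerates every way to split the chain into contiguous fused groups and keeps the split that moves the fewest bytes to and from off-chip memory. Everything in it is an assumption rather than the patent's method: the `Layer` type, the `out_bytes` and `buffer_bytes` parameters, the exhaustive enumeration, and the scoring rule are all hypothetical.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Layer:
    name: str
    out_bytes: int  # hypothetical: size of this layer's output tensor


def enumerate_fusion_schemes(n_layers):
    """Yield every split of a chain of n layers into contiguous groups.

    Each group is a half-open index range (start, end).
    """
    for cuts in product([False, True], repeat=n_layers - 1):
        groups, start = [], 0
        for i, cut in enumerate(cuts, start=1):
            if cut:
                groups.append((start, i))
                start = i
        groups.append((start, n_layers))
        yield groups


def scheme_cost(layers, groups, buffer_bytes):
    """Toy score (an assumption): bytes exchanged with off-chip memory.

    Returns None when a group's internal tensors do not fit on chip.
    """
    traffic = 0
    for start, end in groups:
        # Outputs of all but the last layer in a group stay on chip.
        on_chip = sum(layer.out_bytes for layer in layers[start:end - 1])
        if on_chip > buffer_bytes:
            return None
        # The group's output is written off chip and read back by its
        # consumer (counted for the final group too, for simplicity).
        traffic += 2 * layers[end - 1].out_bytes
    return traffic


def search_best_scheme(layers, buffer_bytes):
    """Exhaustively pick the feasible scheme with the lowest toy score."""
    best = None
    for groups in enumerate_fusion_schemes(len(layers)):
        cost = scheme_cost(layers, groups, buffer_bytes)
        if cost is not None and (best is None or cost < best[0]):
            best = (cost, groups)
    return best


if __name__ == "__main__":
    chain = [Layer("conv1", 400), Layer("relu1", 400),
             Layer("conv2", 200), Layer("relu2", 200)]
    print(search_best_scheme(chain, buffer_bytes=500))
```

With the four-layer chain and a 500-byte buffer above, the search returns `(1200, [(0, 2), (2, 4)])`: fusing all four layers would exceed the buffer, so the cheapest feasible choice fuses them in two pairs. Exhaustive enumeration grows as 2^(n-1) and is only practical for short chains; a real compiler would need a pruned or dynamic-programming search.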
