Neural network compiling method and system, computer storage medium and compiling equipment

This technology relates to neural networks and compilation methods, and is applied in fields such as neural learning methods, biological neural network models, and neural architectures. It addresses problems such as algorithm details being invisible to users, poor portability, and inflexible optimization algorithms.

Examples

Embodiment 1

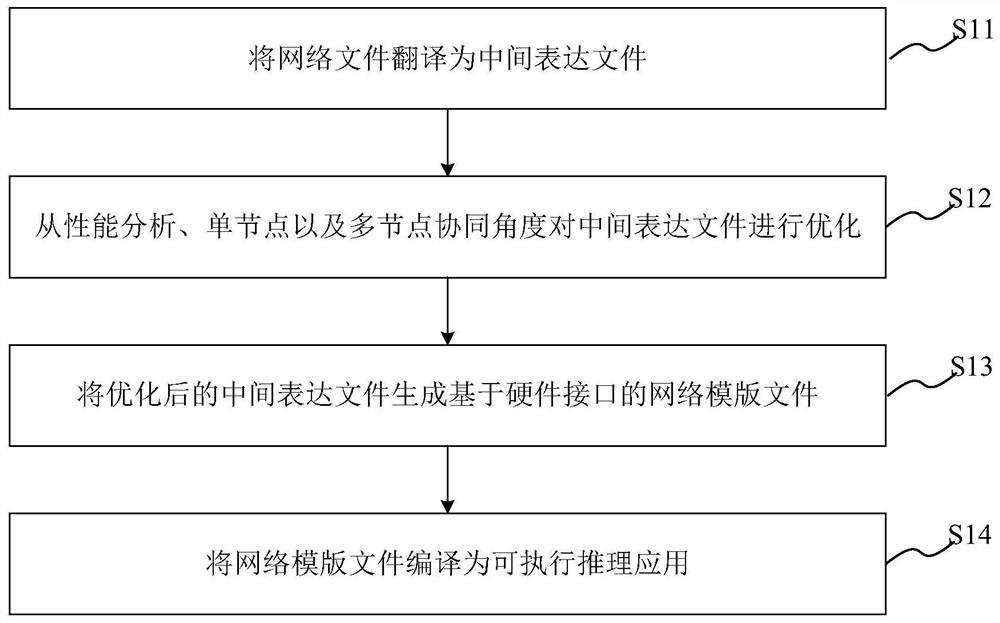

[0035] The invention provides a method for compiling a neural network, comprising:

[0036] Translating network files into intermediate expression files;

[0037] Optimizing the intermediate expression file from the perspectives of performance analysis, single-node optimization, and multi-node collaboration;

[0038] Generating a network template file, based on the hardware interface, from the optimized intermediate expression file;

[0039] Compiling the network template file into an executable inference application.
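The four steps above can be sketched as a small pipeline. This is only an illustrative sketch under stated assumptions: the source does not specify file formats, optimization passes, or hardware interfaces, so the `Node` structure, the function names, the `npu` backend name, and the identity-removal pass (a stand-in for the real optimization step) are all hypothetical.

```python
from dataclasses import dataclass, field


# All names here are hypothetical; the patent text does not define an API.
@dataclass
class Node:
    name: str
    op: str
    inputs: list = field(default_factory=list)


def translate(network):
    """Step 1: turn a toy network description into an intermediate form."""
    return [Node(n["name"], n["op"], list(n.get("inputs", []))) for n in network]


def optimize(ir):
    """Step 2: placeholder pass that drops 'identity' nodes and rewires consumers.

    Assumes nodes are listed in topological order. The real method optimizes
    by performance analysis, single-node and multi-node collaboration.
    """
    alias = {}
    kept = []
    for node in ir:
        inputs = [alias.get(i, i) for i in node.inputs]
        if node.op == "identity" and len(inputs) == 1:
            alias[node.name] = inputs[0]  # consumers read the producer directly
        else:
            kept.append(Node(node.name, node.op, inputs))
    return kept


def generate_template(ir, backend):
    """Step 3: emit a text template addressed to an assumed hardware interface."""
    lines = [f"# target: {backend}"]
    for node in ir:
        lines.append(f"{node.name} = {backend}.{node.op}({', '.join(node.inputs)})")
    return "\n".join(lines)


def compile_template(template):
    """Step 4: stand-in for compilation; packages the template as an 'application'."""
    return {"kind": "inference_app", "instructions": template.splitlines()[1:]}


network = [
    {"name": "x", "op": "input"},
    {"name": "c", "op": "conv", "inputs": ["x"]},
    {"name": "i", "op": "identity", "inputs": ["c"]},
    {"name": "r", "op": "relu", "inputs": ["i"]},
]
app = compile_template(generate_template(optimize(translate(network)), "npu"))
```

Keeping each stage as a separate function mirrors the step-by-step structure of the method, so a different optimization pass or target backend could be swapped in without touching the other stages.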

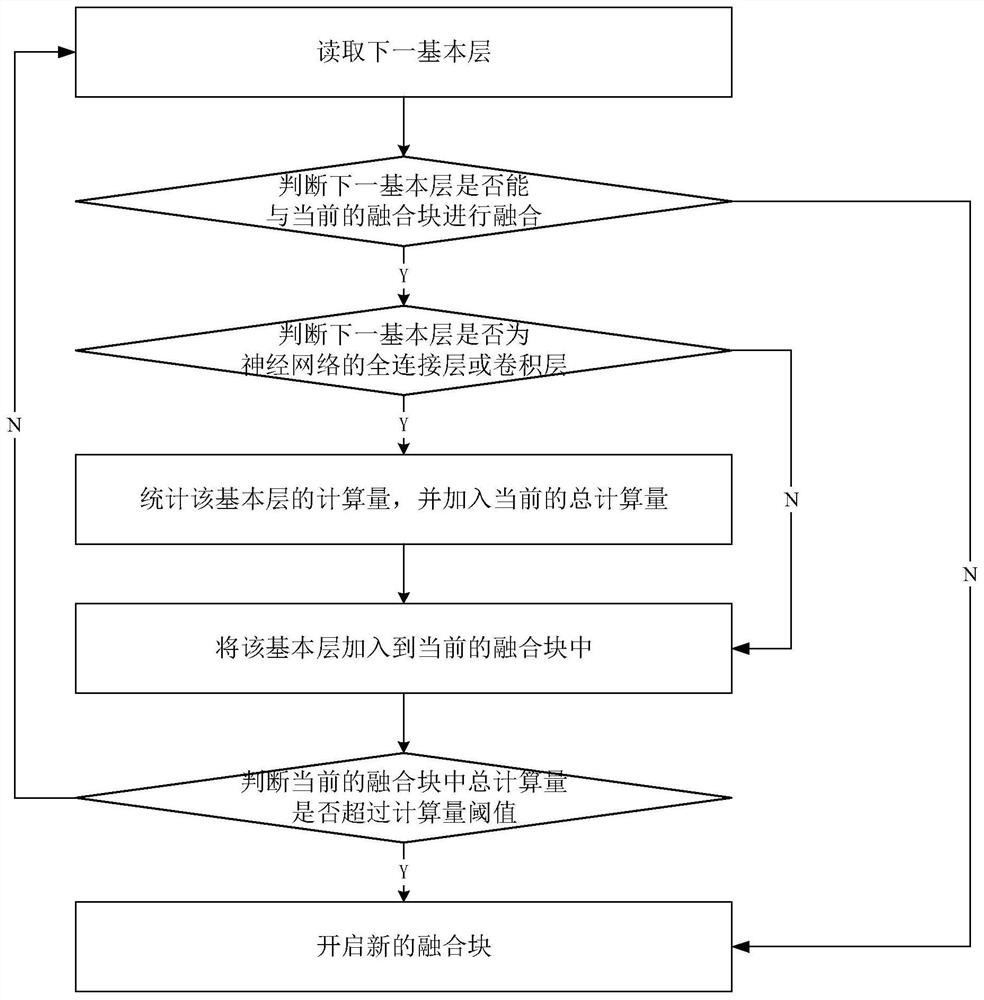

[0040] The neural network compilation method provided by this embodiment is described in detail below with reference to the drawings. The method provides end-to-end inference services for users: it generates template files based on target hardware interfaces from existing, packaged network files, and then generates executable inference applications. The optimization process can optimize the execution efficiency...

Embodiment 2

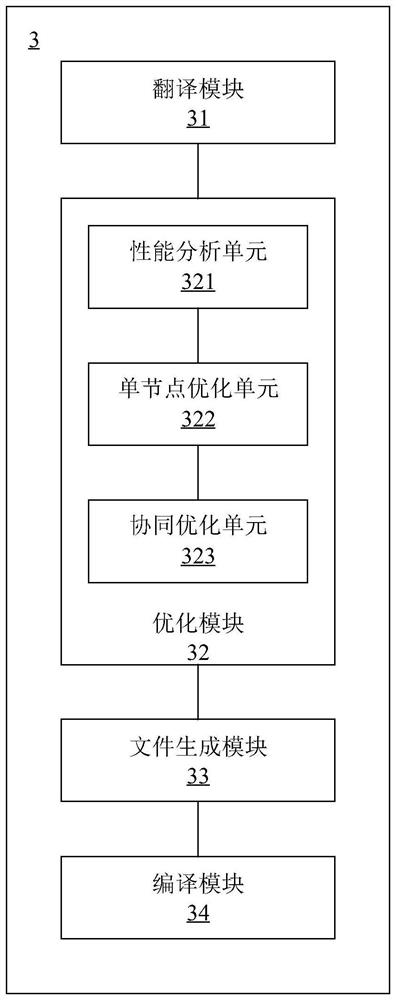

[0085] This embodiment provides a neural network compilation system, including:

[0086] A translation module, configured to translate network files into intermediate expression files;

[0087] An optimization module, configured to optimize the intermediate expression file from the perspectives of performance analysis, single-node optimization, and multi-node collaboration;

[0088] A file generation module, configured to generate a network template file, based on a hardware interface, from the optimized intermediate expression file;

[0089] A compilation module, configured to compile the network template file into an executable inference application.
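The module structure listed above could be wired together roughly as follows. This is a hedged sketch, not the patented system: the `CompilationSystem` class, its field names, and the toy lambdas standing in for each module are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable


# Hypothetical container; each field stands in for one module of the system.
@dataclass
class CompilationSystem:
    translation_module: Callable[[Any], Any]
    optimization_module: Callable[[Any], Any]
    file_generation_module: Callable[[Any], Any]
    compilation_module: Callable[[Any], Any]

    def run(self, network_file):
        """Pass the network file through the four modules in order."""
        ir = self.translation_module(network_file)
        ir = self.optimization_module(ir)
        template = self.file_generation_module(ir)
        return self.compilation_module(template)


# Toy stand-ins: a "network file" is a space-separated string of op names.
system = CompilationSystem(
    translation_module=lambda f: f.split(),
    optimization_module=lambda ir: [op for op in ir if op != "nop"],
    file_generation_module=lambda ir: "\n".join(ir),
    compilation_module=lambda t: {"app": t},
)
result = system.run("conv nop relu")
```

Treating each module as an injected callable keeps the modules independently replaceable, which matches the way the embodiment describes them as separate units.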

[0090] The neural network compilation system provided by this embodiment is described in detail below with reference to the drawings. Refer to image 3, which is a schematic structural diagram of a neural network compilation system in an embodiment. As shown in image 3, the neural network compilation system 3 includes a tran...

Embodiment 3

[0118] This embodiment provides a compiling device, including: a processor, a memory, a transceiver, a communication interface, and/or a system bus. The memory and the communication interface are connected to the processor and the transceiver through the system bus and communicate with one another. The memory is used to store the computer program, the communication interface is used to communicate with other devices, and the processor and the transceiver are used to run the computer program, so that the compiling device executes the steps of the neural network compilation method described above.

[0119] The system bus mentioned above may be a Peripheral Component Interconnect (PCI) bus, an Extended Industry Standard Architecture (EISA) bus, or the like. The system bus can be divided into an address bus, a data bus, a control bus, and so on. For ease of representation, only one thick line is used in the figure, but this does not mean that there is only one bus or one type of bus....

