Method for preprocessing pictures in video saliency detection task

A preprocessing technique for video saliency detection tasks, applied in the field of computer vision. It addresses problems such as models that cannot be applied to multi-scene videos and frames that are read without an effective recognition model, and it achieves easy packaging, easy fitting, and improved accuracy.

Embodiment Construction

[0034] The present application is described in further detail below with reference to the accompanying drawings and embodiments. The specific implementations described here serve only to explain the method and do not limit its use. For ease of description, the drawings show only the parts related to the method.

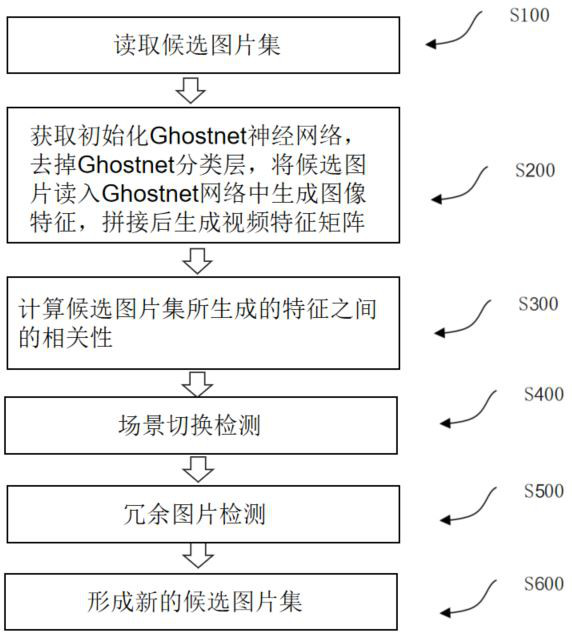

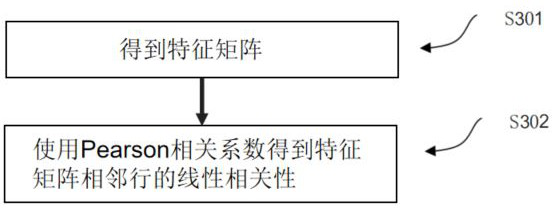

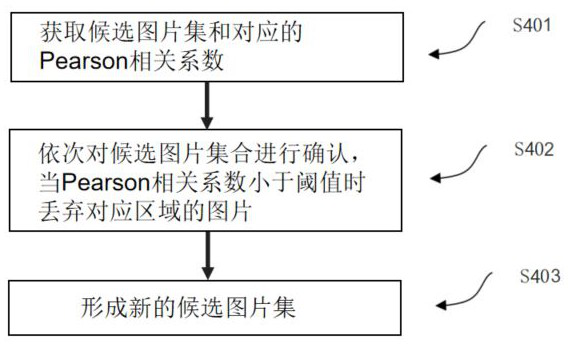

[0035] Figure 1 shows the technical roadmap of the method. The method can be used in a variety of deep learning video tasks and can improve the accuracy and robustness of the model without changing the model parameters. It includes the following steps:

[0036] Step S100: read the preselected pictures.

[0037] In this method, selecting the pictures for preprocessing first requires knowing the fixed number of frames used by the subsequent model, and then expanding the search range of the preproc...
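Step S100 can be illustrated with a minimal sketch of how a preselected range of frame indices might be computed once the model's fixed frame count is known. The source text is truncated at this point, so the way the range is expanded is not stated. The function name `candidate_frame_indices`, the `expand` factor, and the choice to center the expanded range on the model's window are all assumptions made for illustration only, not the method described in the application.

```python
from typing import List


def candidate_frame_indices(start: int, model_frames: int,
                            total_frames: int, expand: int = 2) -> List[int]:
    """Return the frame indices of an expanded search range.

    Hypothetical helper: the model is assumed to consume `model_frames`
    consecutive frames beginning at `start`. The search range is assumed
    to be `expand` times that length, centered on the model's window
    where possible and clipped to the bounds of the video.
    """
    if model_frames <= 0 or total_frames <= 0 or expand < 1:
        raise ValueError("model_frames and total_frames must be positive, expand >= 1")
    if not 0 <= start < total_frames:
        raise ValueError("start must be a valid frame index")

    # Size of the expanded range, never longer than the video itself.
    window = min(model_frames * expand, total_frames)
    # Extra frames beyond the model's own window, split across both sides.
    extra = window - model_frames
    first = start - extra // 2
    # Shift the range so it stays inside [0, total_frames).
    first = max(0, min(first, total_frames - window))
    return list(range(first, first + window))
```

For a model that takes 4 frames starting at frame 10 of a 100-frame video, `candidate_frame_indices(10, 4, 100)` yields the 8 indices from 8 through 15. Near the start or end of the video the range is shifted inward rather than shortened, so the number of preselected frames stays constant.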
