A meta-knowledge fine-tuning method and platform based on domain-invariant features

A technology in the field of meta-knowledge fine-tuning methods and platforms based on domain-invariant features, which can address problems such as the limited effectiveness of compressed models.

Embodiment Construction

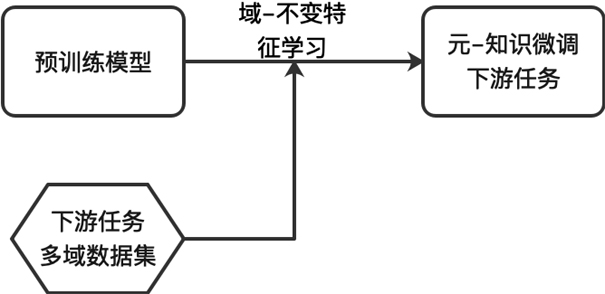

[0033] The invention discloses a meta-knowledge fine-tuning method and platform for a general language model, based on domain-invariant features and built on a general compression framework for pre-trained language models. To adapt the pre-trained language model to a downstream task, the method fine-tunes it on cross-domain datasets of that task, so that the resulting compressed model is suitable for data scenarios in different domains of similar tasks.
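To make "fine-tuning on cross-domain datasets of a downstream task" concrete, the following is a minimal sketch of the data side only: it draws mini-batches that mix examples from several domains of the same task. The function name `cross_domain_batches`, the domain names, and the choice of balanced per-domain sampling are all assumptions for illustration; the source does not state how the cross-domain data are combined.

```python
import random
from typing import Dict, Iterator, List, Tuple

Example = Tuple[str, int]             # (text, label) for one task
TaggedExample = Tuple[str, str, int]  # (domain, text, label)


def cross_domain_batches(
    domains: Dict[str, List[Example]],
    per_domain: int,
    steps: int,
    seed: int = 0,
) -> Iterator[List[TaggedExample]]:
    """Yield mini-batches mixing examples from every domain.

    Hypothetical helper: balanced sampling (the same number of examples
    per domain in every batch) is an assumption, not the patent's rule.
    """
    rng = random.Random(seed)
    for _ in range(steps):
        batch: List[TaggedExample] = []
        for name, examples in domains.items():
            # Sample with replacement so small domains are not exhausted.
            for text, label in rng.choices(examples, k=per_domain):
                batch.append((name, text, label))
        # Shuffle so the model does not see domains in a fixed order.
        rng.shuffle(batch)
        yield batch


if __name__ == "__main__":
    # Toy sentiment data from two made-up domains of the same task.
    toy = {
        "books": [("good read", 1), ("dull plot", 0)],
        "electronics": [("works well", 1), ("broke fast", 0)],
    }
    for batch in cross_domain_batches(toy, per_domain=2, steps=2):
        print(batch)
```

Each yielded batch could then be passed to whatever fine-tuning step is used. Keeping the domain tag on every example leaves room for a domain-aware objective, which this sketch deliberately does not implement.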

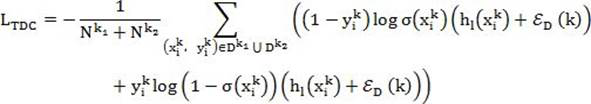

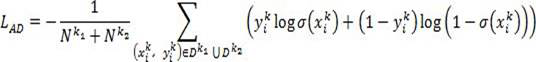

[0034] As shown in Figure 1, the present invention designs a meta-knowledge fine-tuning learning method: a learning method based on domain-invariant features. The present invention learns highly transferable shared knowledge, i.e., domain-invariant features, on different datasets for similar tasks. By introducing domain-invariant features, the method fine-tunes the common domain features that the network learns across the different domains corresponding to different datasets of similar tasks, and quickly a...
