Image sentiment classification method based on class activation mapping and visual saliency

An emotion classification and image technology, applied in neural learning methods, character and pattern recognition, instruments, etc. It can address problems such as underutilization and the limited performance of emotion classification.

Embodiment Construction

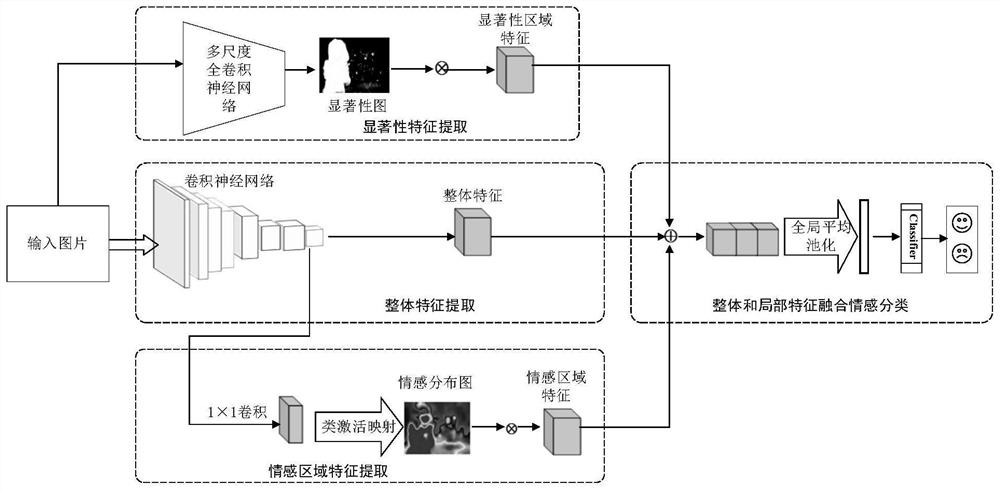

[0060] As shown in Figure 1, an image sentiment classification method based on class activation mapping and visual saliency includes the following steps:

[0061] S1: Prepare the emotional image dataset used to train the model, expand the dataset, and resize the image samples in the dataset to 448×448×3;
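Step S1 does not say how the dataset is expanded or which interpolation is used for resizing. The following is a minimal NumPy sketch under two assumptions: "expansion" means adding a horizontally flipped copy of each image, and resizing is bilinear. The names `resize_bilinear` and `expand_dataset` are hypothetical and do not come from the patent.

```python
import numpy as np

TARGET_SIZE = 448  # S1 resizes every sample to 448 x 448 x 3


def resize_bilinear(image: np.ndarray, size: int = TARGET_SIZE) -> np.ndarray:
    """Bilinearly resize an H x W x 3 image to size x size x 3 (corner-aligned)."""
    h, w, _ = image.shape
    # Fractional source coordinates for every output row and column.
    ys = np.linspace(0, h - 1, size)
    xs = np.linspace(0, w - 1, size)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[:, None, None]  # vertical interpolation weights
    wx = (xs - x0)[None, :, None]  # horizontal interpolation weights
    img = image.astype(np.float64)
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


def expand_dataset(images, labels):
    """Hypothetical expansion: resize each image and add a horizontally flipped copy."""
    out_images, out_labels = [], []
    for img, label in zip(images, labels):
        resized = resize_bilinear(img)
        out_images.extend([resized, resized[:, ::-1, :]])
        out_labels.extend([label, label])
    return np.stack(out_images), np.array(out_labels)
```

For example, a single 300×500×3 image passed to `expand_dataset` yields an array of shape (2, 448, 448, 3). In a real pipeline, a library resize (for instance Pillow's `Image.resize` or torchvision's `Resize`) would normally replace the hand-written interpolation.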

[0062] S2: Extract the overall feature $F$ of each image with the model's overall feature extraction network;

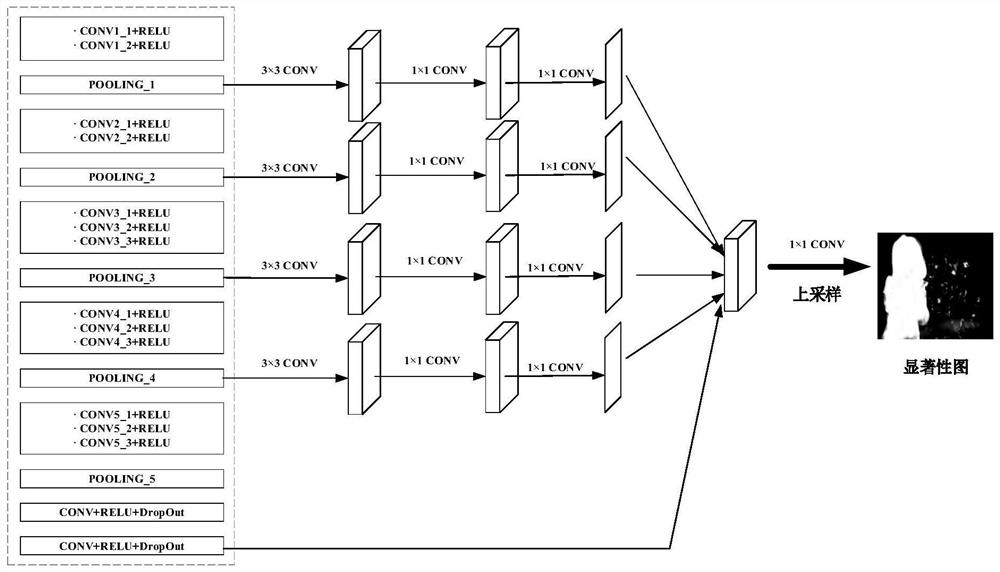

[0063] S3: Generate an image saliency map and extract the salient region feature $F_S$ with the model's salient region feature extraction network;

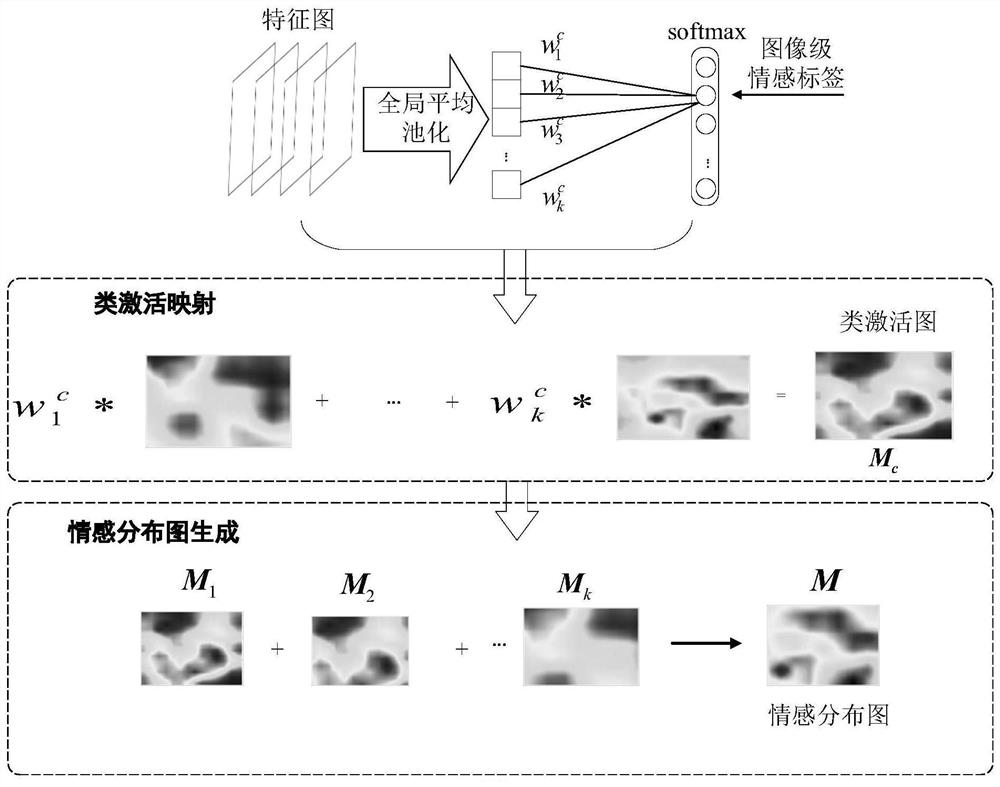
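Step S3 names neither the saliency detector nor the internal structure of the salient region feature extraction network. As a hedged illustration only, the sketch below assumes a per-pixel saliency map $S$ is already available and that $F_S$ is obtained by weighting the overall feature map $F$ with $S$, after average-pooling $S$ down to the feature map's spatial resolution. The functions `pool_to` and `salient_region_feature` are hypothetical.

```python
import numpy as np


def pool_to(map_2d: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Average-pool an H x W map down to out_h x out_w (H, W must be multiples)."""
    h, w = map_2d.shape
    if h % out_h or w % out_w:
        raise ValueError("map size must be a multiple of the target size")
    # Split each axis into (cells, pixels per cell) and average within each cell.
    return map_2d.reshape(out_h, h // out_h, out_w, w // out_w).mean(axis=(1, 3))


def salient_region_feature(features: np.ndarray, saliency: np.ndarray) -> np.ndarray:
    """Hypothetical F_S = F * S: weight a C x h x w feature map by a saliency map."""
    _, fh, fw = features.shape
    s = pool_to(saliency, fh, fw)
    # Rescale to [0, 1] so the pooled map acts as a soft spatial mask.
    s = (s - s.min()) / (s.max() - s.min() + 1e-8)
    return features * s[None, :, :]  # broadcast the mask over all channels
```

Multiplying by a soft mask keeps the channel layout of $F$ unchanged, which makes the later fusion in step S5 straightforward; a learned sub-network, as the patent describes, could of course transform the features further.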

[0064] S4: Generate the image emotion distribution map and extract the emotional region feature $F_M$ with the model's emotional region feature extraction network;
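The title indicates that step S4 relies on class activation mapping (CAM). In standard CAM, a classifier made of global average pooling followed by a linear layer with weights $w_k^c$ yields, for class $c$, the map $M_c(x, y) = \sum_k w_k^c F_k(x, y)$. The sketch below assumes the emotion distribution map is such a CAM for the top-scoring emotion class and that $F_M = F \odot M_c$; the patent's actual construction may differ (for example, it might combine maps from several emotion classes). The functions `class_activation_map` and `emotional_region_feature` are hypothetical.

```python
import numpy as np


def class_activation_map(features: np.ndarray, fc_weights: np.ndarray, cls: int) -> np.ndarray:
    """CAM for class `cls`: M_c(x, y) = sum_k w[c, k] * F_k(x, y), rescaled to [0, 1]."""
    # features: C x h x w; fc_weights: num_classes x C (weights of a GAP + linear head).
    cam = np.tensordot(fc_weights[cls], features, axes=([0], [0]))  # -> h x w
    cam = np.maximum(cam, 0.0)  # keep positive evidence only
    return cam / (cam.max() + 1e-8)


def emotional_region_feature(features: np.ndarray, fc_weights: np.ndarray):
    """Hypothetical F_M: weight F by the CAM of the top-scoring emotion class."""
    scores = fc_weights @ features.mean(axis=(1, 2))  # GAP followed by the linear layer
    cls = int(np.argmax(scores))
    m = class_activation_map(features, fc_weights, cls)
    return features * m[None, :, :], cls
```

Because CAM reuses the classifier's own weights, the map highlights the regions that drive the predicted emotion, which complements the class-agnostic saliency map used in step S3.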

[0065] S5: Fuse the overall feature $F$ with the local features $F_S$ and $F_M$ to obtain discriminative features, then generate the semantic vector $d$ through global average pooling;
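Step S5 does not state the fusion operator. The sketch below assumes channel-wise concatenation of $F$, $F_S$ and $F_M$ (element-wise summation would be an equally plausible reading), followed by global average pooling, which averages each channel over all spatial positions to produce the semantic vector $d$. The function name `fuse_and_pool` is hypothetical.

```python
import numpy as np


def fuse_and_pool(f: np.ndarray, f_s: np.ndarray, f_m: np.ndarray) -> np.ndarray:
    """Concatenate F, F_S, F_M along channels (assumed fusion), then GAP to get d."""
    if not (f.shape[1:] == f_s.shape[1:] == f_m.shape[1:]):
        raise ValueError("feature maps must share the same spatial size")
    fused = np.concatenate([f, f_s, f_m], axis=0)  # (C + C_S + C_M) x h x w
    return fused.mean(axis=(1, 2))  # global average pooling -> semantic vector d
```

With three 512×14×14 inputs, for instance, $d$ would have 1536 entries under concatenation, whereas summation would keep it at 512.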

[0066] S6: Input the semanti...

© 2025 PatSnap. All rights reserved.