Front-end multi-thread scheduling method and system based on cloud platform

A scheduling method and scheduling system, applied in the fields of multi-programming arrangements, program control design, and program initiating/switching, that improve response speed and resource utilization, reduce delay, and prevent thread loss.


Embodiment 1

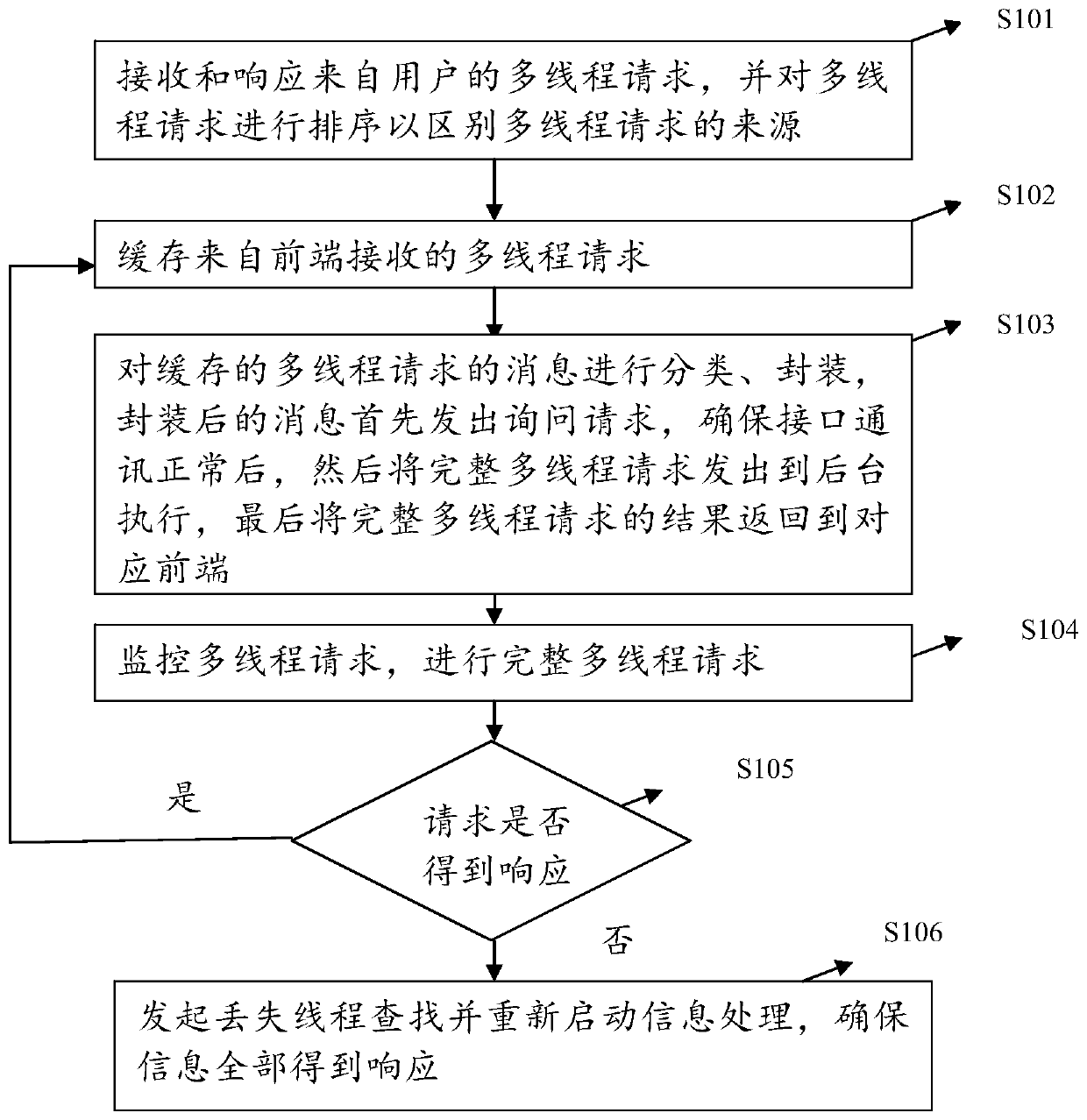

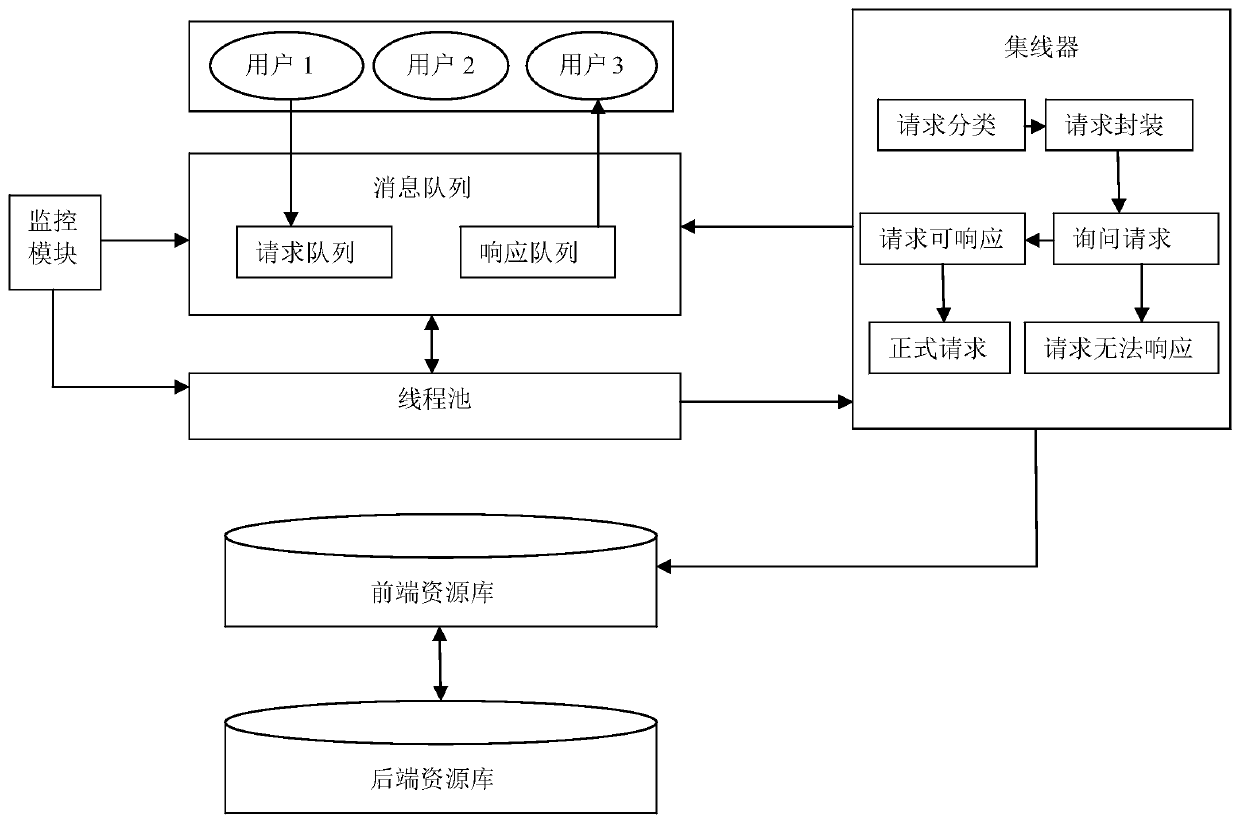

[0032] Embodiment 1 of the present invention proposes a front-end multi-thread scheduling method based on a cloud platform. When a large number of front-end interfaces issue highly concurrent requests, the bottleneck is that the requests cannot all reach the back end, and interface requests often fail because they time out. To address this, a processing node is added between the front end and the back end: the front end no longer requests the back end directly but goes through this intermediate relay. Figure 1 shows a flow chart of the front-end multi-thread scheduling method based on a cloud platform.
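
The relay idea in this paragraph can be illustrated with a minimal sketch. It is not the patented implementation: the class name `RelayNode`, the `max_in_flight` parameter, and the use of a fixed-size worker pool are all assumptions made for illustration. The sketch only shows a node that accepts every front-end request, holds the excess in a queue, and forwards a limited number to the back end at a time.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable


class RelayNode:
    """Hypothetical processing node sitting between front end and back end."""

    def __init__(self, backend: Callable[[str], str], max_in_flight: int = 4) -> None:
        # `backend` stands in for the real back-end call (an assumption).
        self._backend = backend
        # The pool runs at most `max_in_flight` calls at once; further
        # submissions wait in the executor's internal queue.
        self._pool = ThreadPoolExecutor(max_workers=max_in_flight)

    def submit(self, request: str) -> Future:
        """Accept a front-end request and forward it when a worker is free."""
        return self._pool.submit(self._backend, request)

    def shutdown(self) -> None:
        """Wait for all accepted requests to finish, then release the workers."""
        self._pool.shutdown(wait=True)


if __name__ == "__main__":
    def fake_backend(request: str) -> str:
        return "ok:" + request

    node = RelayNode(fake_backend, max_in_flight=2)
    futures = [node.submit(f"r{i}") for i in range(5)]
    print([f.result() for f in futures])
    node.shutdown()
```

With this shape the front end only ever talks to the relay, so a burst of requests is absorbed by the queue rather than reaching the back end all at once.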

[0033] In step S101, the multi-thread requests from the user are received and responded to, and at the same time the requests are sorted so that their sources can be distinguished. Each received multi-thread request is assigned a serial number composed of a timestamp, a user ID and a request type, which is used to distinguis...
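
As a rough illustration of step S101, the sketch below builds a serial number from the three components named in the text (timestamp, user ID, request type) and groups requests by their source. The source does not give the field order, the separator, or the sorting rule, so the `timestamp-user-type` encoding, the names `SerialNumber`, `assign_serial` and `group_by_source`, and the choice to group by user ID in timestamp order are all assumptions.

```python
import time
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass(frozen=True)
class SerialNumber:
    """Hypothetical serial number: timestamp, user ID and request type."""

    timestamp_ms: int
    user_id: str
    request_type: str

    def encode(self) -> str:
        # Assumed textual form; the source does not specify one.
        return f"{self.timestamp_ms}-{self.user_id}-{self.request_type}"


def assign_serial(user_id: str, request_type: str,
                  now_ms: Optional[int] = None) -> SerialNumber:
    """Assign a serial number to an incoming request."""
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    return SerialNumber(now_ms, user_id, request_type)


def group_by_source(serials: Iterable[SerialNumber]) -> Dict[str, List[SerialNumber]]:
    """Group requests by user ID, oldest first within each group."""
    groups: Dict[str, List[SerialNumber]] = {}
    for serial in sorted(serials, key=lambda s: s.timestamp_ms):
        groups.setdefault(serial.user_id, []).append(serial)
    return groups


if __name__ == "__main__":
    requests = [
        assign_serial("u1", "GET", now_ms=2000),
        assign_serial("u2", "POST", now_ms=1000),
        assign_serial("u1", "POST", now_ms=500),
    ]
    for user, items in group_by_source(requests).items():
        print(user, [s.encode() for s in items])
```

Because the serial number carries the user ID and request type, the source of any request can be read back from the serial number alone, without looking up separate state.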
