Long-term target tracking method based on deep detection

A long-term target tracking technique based on deep detection, classified under neural learning methods, instruments, biological neural network models, and related fields, that addresses problems such as class imbalance.

Examples

Embodiment 1

[0053] Example 1: LT-MDNet, a long-term target tracking method based on deep detection

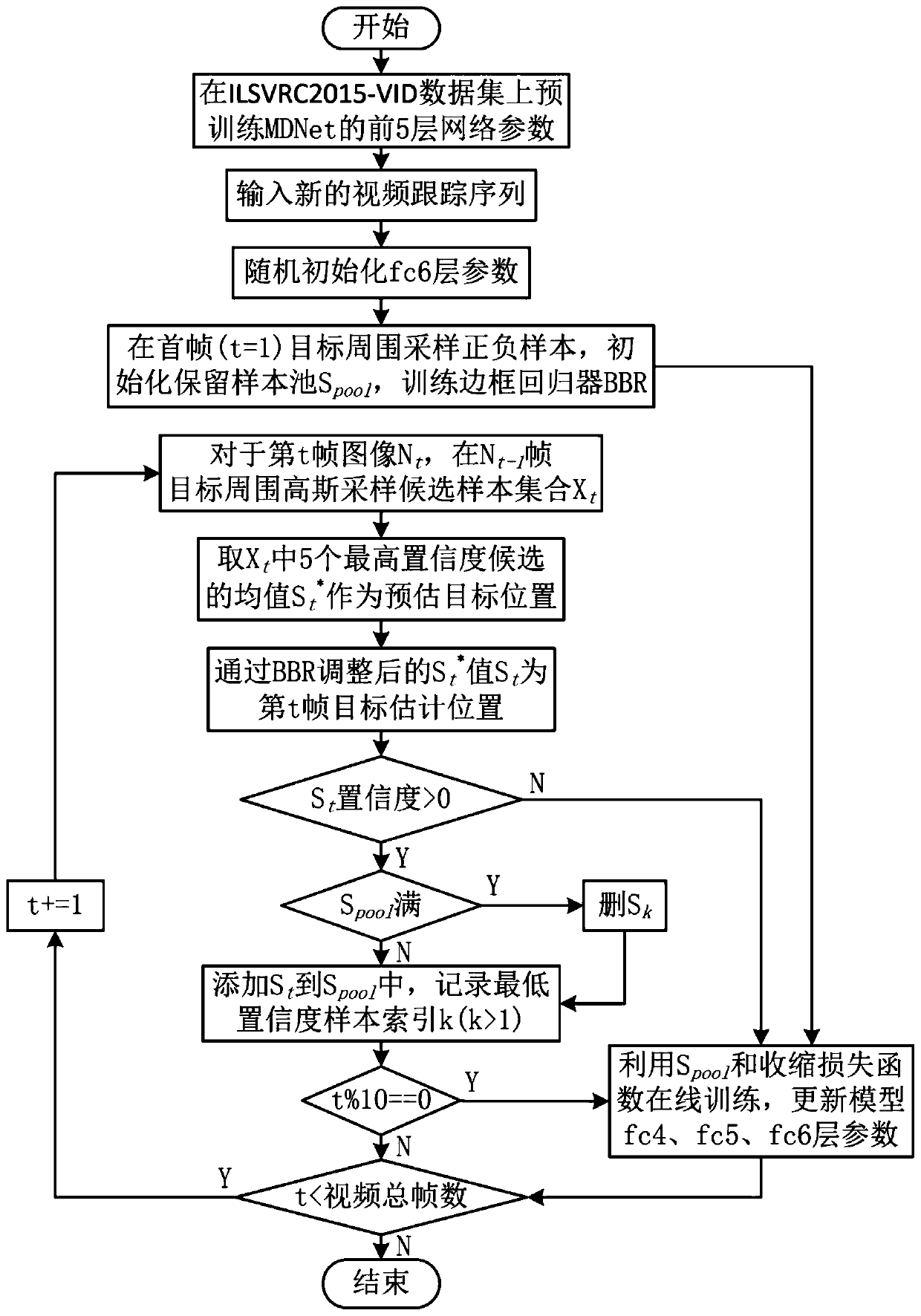

[0054] Referring to Figure 1, the implementation of LT-MDNet includes the following steps:

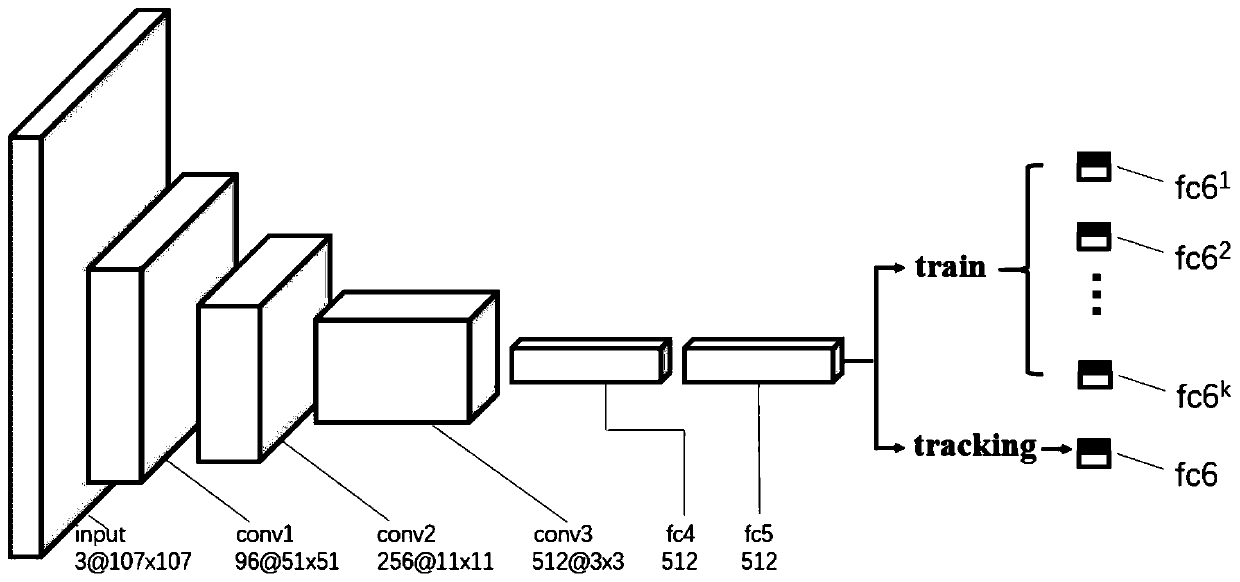

[0055] (1) Offline pre-training: train the weight parameters of the three convolutional layers (conv1, conv2, conv3) and the two fully connected layers (fc4, fc5) of the MDNet backbone network on the labeled ILSVRC2015-VID object detection dataset.

[0056] (2) Set the last layer of the network (fc6) as the domain-specific layer, a binary-classification fully connected layer that outputs the positive and negative confidence of each sample. Its parameters are randomly initialized at the start of each offline training video sequence and each online tracking video sequence.

[0057] (3) Input a new video sequence to be tracked, take the first frame (t=1), and manually specify the center position of the target and the length and width of its bounding box (x 1 ...
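As an illustration of step (2), the following is a minimal sketch of a domain-specific binary layer that is discarded and redrawn for every new sequence. It is written in plain Python rather than PyTorch so that it runs without dependencies. The names `DomainSpecificLayer` and `new_sequence_layer`, the feature size of 512, the Gaussian initialization with standard deviation 0.01, the softmax used to turn two scores into confidences, and the ordering of the two outputs are all assumptions made for this example; the text above does not state them.

```python
import math
import random


class DomainSpecificLayer:
    """Binary fully connected layer (fc6) that is re-created for every sequence."""

    def __init__(self, in_features=512, std=0.01, seed=None):
        # in_features and std are illustrative assumptions, not values from the text.
        rng = random.Random(seed)
        # Two output units. Assumed order: index 0 = negative, index 1 = positive.
        self.weight = [
            [rng.gauss(0.0, std) for _ in range(in_features)] for _ in range(2)
        ]
        self.bias = [0.0, 0.0]

    def confidences(self, features):
        """Return (negative, positive) confidences for one feature vector.

        A softmax over the two scores is assumed here.
        """
        scores = [
            sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(self.weight, self.bias)
        ]
        peak = max(scores)  # subtract the maximum for numerical stability
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        return exps[0] / total, exps[1] / total


def new_sequence_layer(in_features=512, seed=None):
    """Called when a new training or tracking sequence starts.

    The previous fc6 is dropped and fresh random weights are drawn, while the
    shared layers (conv1 to fc5) would keep their pre-trained weights.
    """
    return DomainSpecificLayer(in_features=in_features, seed=seed)
```

In this sketch only fc6 is replaced per sequence, which mirrors the split between the shared backbone of step (1) and the domain-specific layer of step (2).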

Embodiment 2

[0074] Embodiment 2: Application of Embodiment 1

[0075] 1. Simulation conditions and parameters

[0076] The experiment is implemented with the PyTorch 1.2.0 deep learning framework and CUDA 10.0. The operating system is Windows 10, the processor is an AMD R5-2600 at 3.4 GHz, the GPU is an NVIDIA RTX 2070, and the memory is 16 GB.

[0077] The model is trained offline on the labeled ILSVRC2015-VID object detection dataset (http://bvisionweb1.cs.unc.edu/ilsvrc2015/ILSVRC2015_VID.tar.gz). The model parameters are updated every 10 frames. The first-frame update runs 50 training iterations with a learning rate of 0.0005; each later update runs 15 iterations with a learning rate of 0.001. The hyperparameters a and c in the loss function are set to 10 and 0.2, respectively, and the shrinkage ratio δ is set to 1.3.

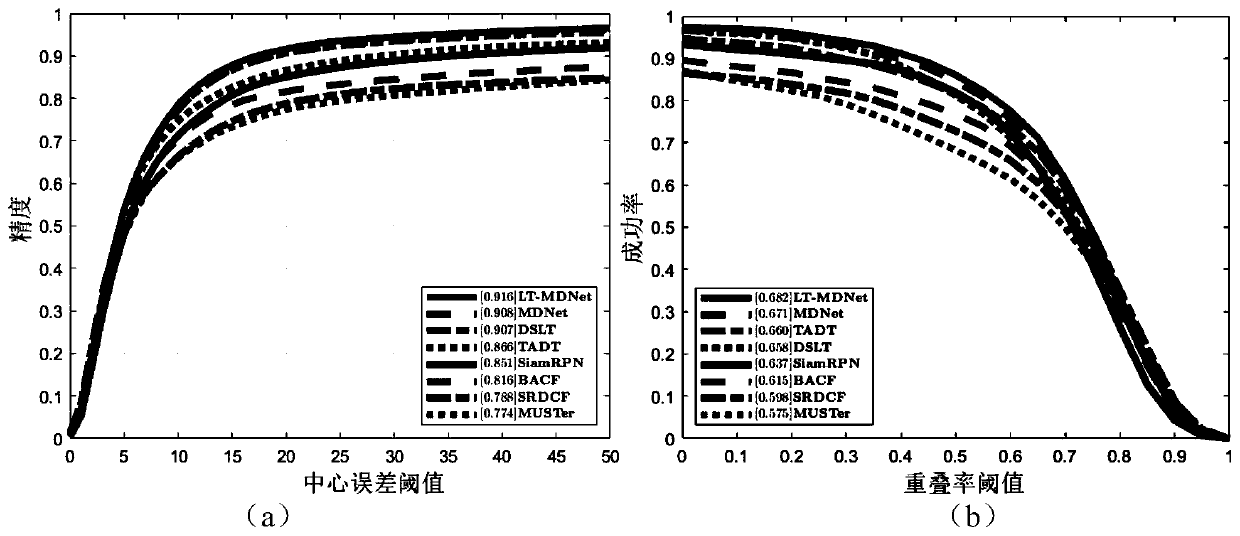
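The settings in paragraph [0077] can be collected into a small configuration, sketched below. The numeric values come from the text. The names (`UpdateSettings`, `settings_for_frame`, and the constants) are hypothetical, and the rule that periodic updates fall on frames whose index is a multiple of 10 is an assumption, since the text only says "every 10 frames".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UpdateSettings:
    iterations: int
    learning_rate: float


# Values quoted in paragraph [0077].
UPDATE_INTERVAL = 10                       # frames between model updates
FIRST_FRAME = UpdateSettings(iterations=50, learning_rate=0.0005)
LATER_FRAMES = UpdateSettings(iterations=15, learning_rate=0.001)
LOSS_A = 10                                # hyperparameter a of the loss function
LOSS_C = 0.2                               # hyperparameter c of the loss function
SHRINK_RATIO = 1.3                         # shrinkage ratio delta


def settings_for_frame(t):
    """Return the update settings for frame t (1-based), or None if no update is due."""
    if t < 1:
        raise ValueError("frame index t must be >= 1")
    if t == 1:
        return FIRST_FRAME
    # Assumption: periodic updates fall on frames 10, 20, 30, ...
    if t % UPDATE_INTERVAL == 0:
        return LATER_FRAMES
    return None
```

For example, `settings_for_frame(1)` yields the 50-iteration first-frame settings, while `settings_for_frame(5)` yields `None` because no update is scheduled for that frame under the assumed rule.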

[0078] 2. Simulation content and result analysis

[0079] In order to verify the effectiveness of Example 1 (LT-MDNet), compa...
