Infrared-visible light image fusion method based on saliency map and convolutional neural network

The method applies a convolutional neural network and infrared image technology, in the fields of neural learning methods, biological neural network models, neural architectures, etc., to achieve good visual effects and improve clarity and contrast.


Embodiment Construction

[0021] Specific implementation plan

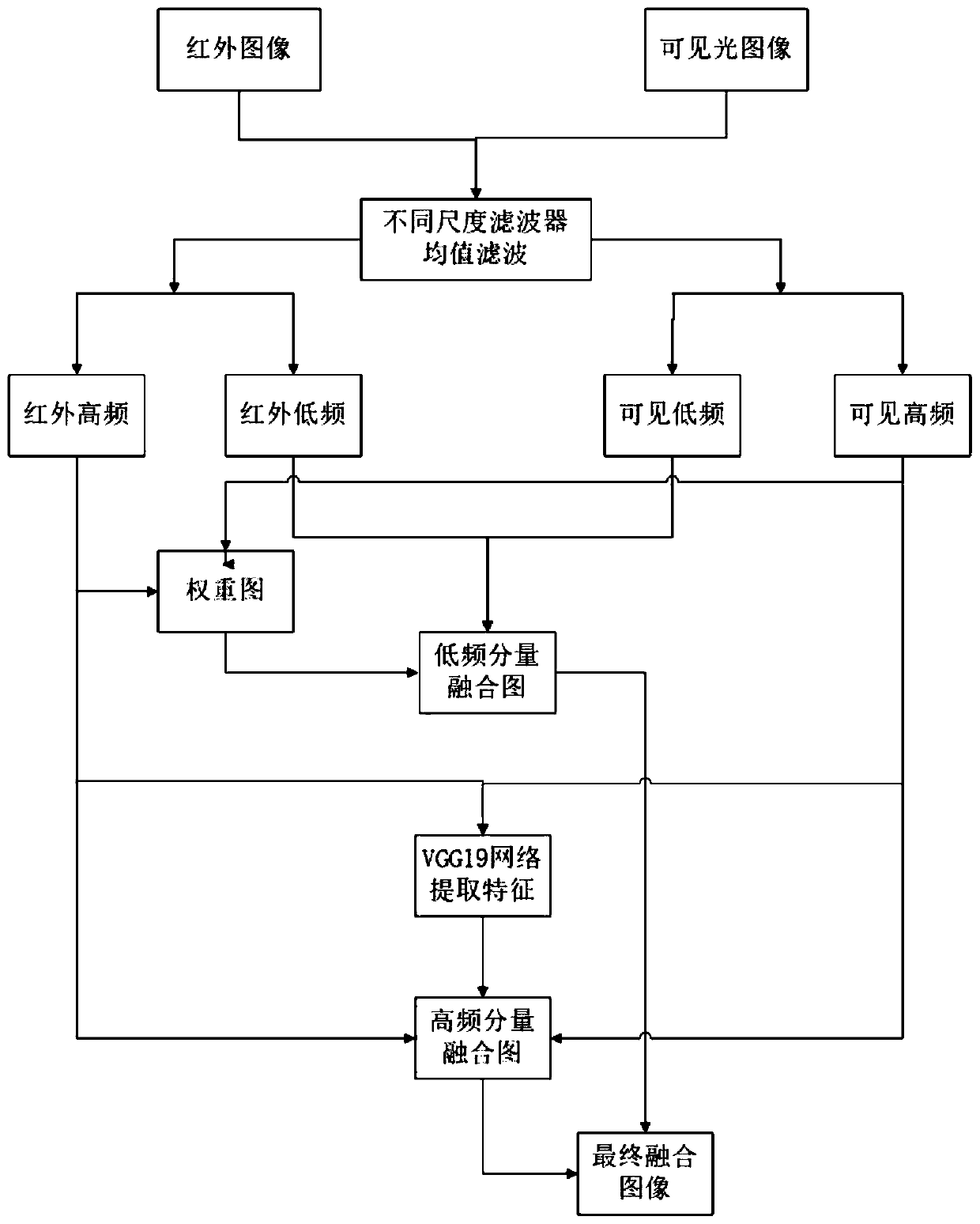

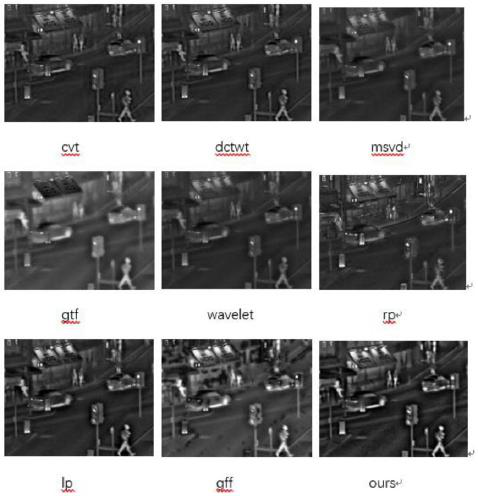

[0022] To make the technical solution of the present invention clearer, the specific implementation of the present invention is further described below with reference to the accompanying drawings. The flow chart of the specific implementation is shown in Figure 1.

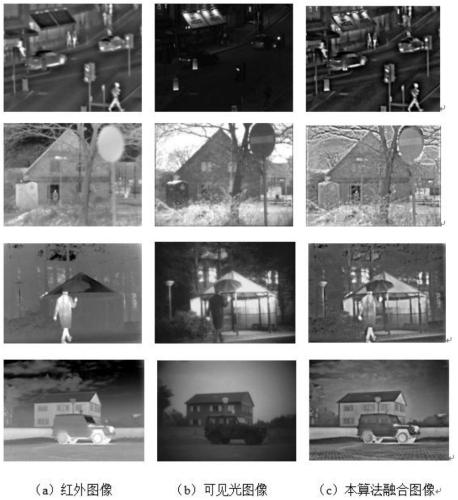

[0023] 1) Take the pre-collected infrared images and their corresponding visible light images as a data set, and name each pair of images. The naming format is *-1.png and *-2.png, corresponding to the infrared image and the visible light image respectively.
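The naming convention in step 1) can be illustrated with a short Python sketch that groups file names into (infrared, visible) pairs. The function name `pair_source_images` and the choice to silently skip unmatched files are assumptions made for illustration; the source only specifies the *-1.png / *-2.png format.

```python
from pathlib import Path


def pair_source_images(filenames):
    """Group file names into (infrared, visible) pairs by shared prefix.

    Assumes the naming format from step 1): '<prefix>-1.png' is the
    infrared image and '<prefix>-2.png' is the visible light image.
    Files without a partner are skipped (an assumption, not from the source).
    """
    infrared, visible = {}, {}
    for name in filenames:
        path = Path(name)
        if path.suffix.lower() != ".png":
            continue  # ignore anything that is not a PNG file
        stem = path.stem
        if stem.endswith("-1"):
            infrared[stem[:-2]] = name
        elif stem.endswith("-2"):
            visible[stem[:-2]] = name
    # Keep only prefixes that have both an infrared and a visible image.
    return [(infrared[key], visible[key]) for key in sorted(infrared) if key in visible]
```

For example, calling it on `["a-1.png", "a-2.png", "b-1.png"]` yields a single pair for prefix `a`, because `b` has no visible light counterpart.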

[0024] 2) Mean filtering is performed on each pair of source images to obtain the low-frequency and high-frequency components of each source image. The low-frequency component represents the overall intensity of the image. The high-frequency component represents where the image intensity changes sharply, that is, the outlines and details of the image. The la...
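The decomposition in step 2) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the source does not give the filter window size or border handling, so the 3×3 window (`radius=1`) and border clamping are assumed, and the high-frequency component is assumed to be the source image minus its mean-filtered version, which is a common convention for this kind of two-scale decomposition. The names `mean_filter` and `decompose` are hypothetical.

```python
def mean_filter(image, radius=1):
    """Box (mean) filter over a (2*radius+1) x (2*radius+1) window.

    `image` is a list of rows of numeric intensities. Pixels outside the
    image are replaced by the nearest border pixel (assumed border handling).
    """
    height, width = len(image), len(image[0])
    window_area = (2 * radius + 1) ** 2
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), height - 1)  # clamp row index
                    xx = min(max(x + dx, 0), width - 1)   # clamp column index
                    total += image[yy][xx]
            out[y][x] = total / window_area
    return out


def decompose(image, radius=1):
    """Split an image into low- and high-frequency components.

    low  = mean-filtered image (overall intensity)
    high = image - low (assumed definition: outlines and details)
    """
    low = mean_filter(image, radius)
    high = [
        [image[y][x] - low[y][x] for x in range(len(image[0]))]
        for y in range(len(image))
    ]
    return low, high
```

On a perfectly flat image the high-frequency component is zero everywhere, while a sharp vertical edge produces nonzero high-frequency values on both sides of the edge, which matches the description of the high-frequency component as capturing outlines and details.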
