Data conversion method, multiplier, adder, terminal device and storage medium

A data conversion method, applied in electrical digital data processing, digital data processing components, computing using non-contact manufacturing equipment, and similar fields, that can solve problems such as over-design and achieve low power consumption, reduced computing overhead, and a wide range of representable values.



Embodiment 1

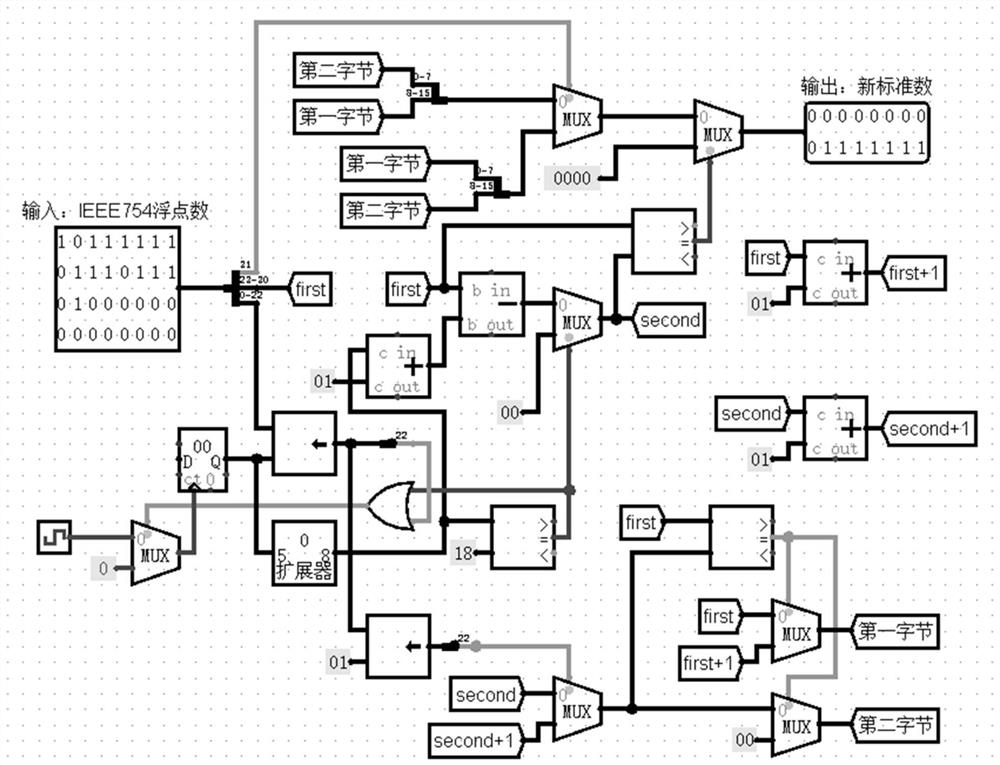

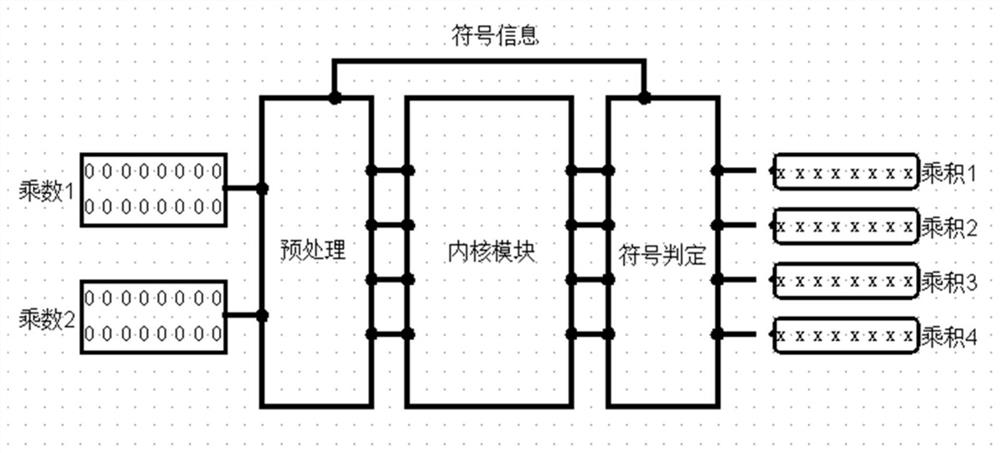

[0056] An embodiment of the present invention provides a data conversion method for image recognition based on a convolutional neural network model. The convolutional neural network extracts key features from the images or videos input to the network in order to classify images or detect objects. The convolution operation is usually the most expensive function in a convolutional neural network, and multiplication is the most expensive step within the convolution operation. The data conversion method of this embodiment therefore first converts the floating-point numbers used in the convolution operation into the new standard number format, and only then performs the operations.

[0057] The specific conversion method is as follows:

[0058] 1. The floating-point number $F$ is approximated by a sequence of $k$ $n$-bit integers $(a_1, a_2, a_3, \ldots, a_k)$. Its specific mathematical meaning is expressed as:

[0059] (1) Through this data form...

Example 1

[0077] Take the floating-point number -0.96582 as an example. Its IEEE 754 single-precision format is 10111111011101110100000000000000, a total of 32 bits. (Strictly, this bit pattern encodes -0.9658203125, which rounds to -0.96582.)

Counting from right to left, the 32nd bit is the sign bit of the original floating-point number: 1 represents a negative number and 0 represents a positive number. In this example the 32nd bit is 1, so the original floating-point number is negative.

The 8 bits from the 24th to the 31st bit represent the exponent code of the original floating-point number. The 23 bits from the 1st to the 23rd bit represent its mantissa. The exponent code of -0.96582 is therefore 01111110, and the mantissa is 11101110100000000000000.
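The field split in [0077] can be checked with Python's standard `struct` module. This is a minimal sketch under two assumptions. First, the helper names `float32_fields` and `float32_value` are hypothetical. Second, `float32_value` is the standard IEEE 754 decoding of a normal single-precision number, not the decoding of the new standard format.

The demo passes -0.9658203125 instead of -0.96582. Passing -0.96582 would make `struct.pack` round it to the nearest float32, and that value's mantissa differs in its low bits from the pattern quoted above.

```python
import struct

def float32_fields(x):
    """Round x to IEEE 754 single precision and split it into bit strings.

    Returns (sign, exponent_code, mantissa): bit 32, bits 31..24 and bits 23..1,
    counting from the right as in the text.
    """
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    s = format(bits, "032b")  # s[0] is bit 32, s[-1] is bit 1
    return s[0], s[1:9], s[9:]

def float32_value(sign, exponent_code, mantissa):
    """Standard IEEE 754 decoding of a normal (non-zero, non-subnormal) float32."""
    fraction = int(mantissa, 2) / 2**23          # mantissa bits as a fraction in [0, 1)
    exponent = int(exponent_code, 2) - 127       # remove the single-precision bias
    return (-1) ** int(sign) * 2.0 ** exponent * (1 + fraction)

sign, exp_code, mantissa = float32_fields(-0.9658203125)
print(sign, exp_code, mantissa)                 # 1 01111110 11101110100000000000000
print(float32_value(sign, exp_code, mantissa))  # -0.9658203125
```

The same helper gives sign 0, exponent code 01111110 and an all-zero mantissa for 0.5, which matches Example 2.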

[0078] (1): The floating point number -0.96582 is non-zero;

[0079] (2): Set the first byte $a_1$ equal to the exponent code 01111110, and set count = 1;

[0080] (3): The mantissa of the original flo...

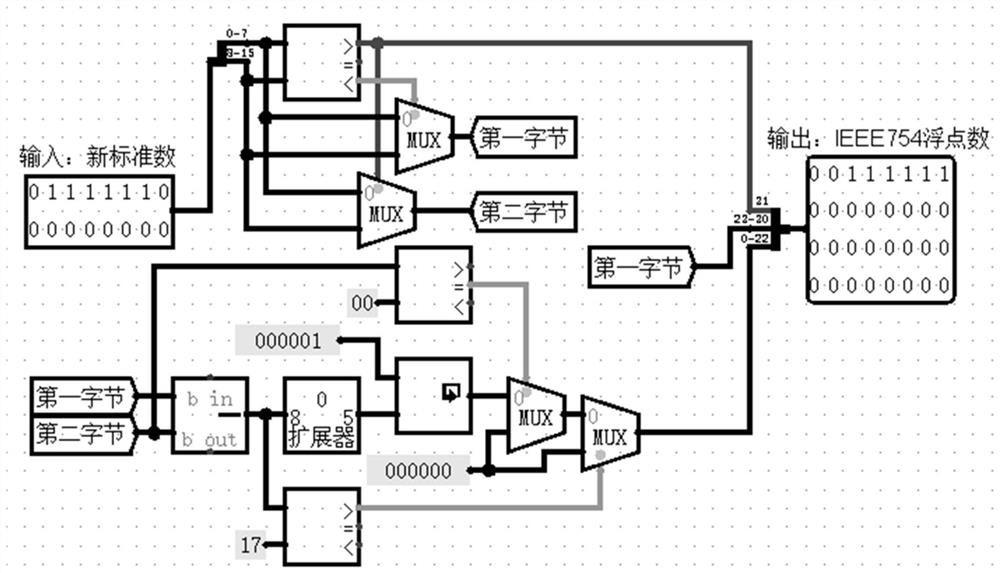

Example 2

[0089] The following takes the floating-point number 0.5 as an example. Its IEEE 754 format is:

0 01111110 00000000000000000000000

[0090] The sign bit is 0, the exponent code is 01111110, and the mantissa is 00000000000000000000000.

[0091] (1): The floating point number 0.5 is not zero;

[0092] (2): Set the first byte $a_1$ equal to the exponent code 01111110, and set count = 1;

[0093] (3): Bit (24 - count) = 23 of the mantissa is not equal to 1;

[0094] (4): The condition count = 23 does not hold;

[0095] (5): Set count = count + 1 = 1 + 1 = 2;

[0096] (6): Bit (24 - count) = 22 of the mantissa is not equal to 1;

[0097] ...

[0098] (46): The condition count = 23 holds, so the second byte $a_2$ is set to 00000000;

[0099] (47): The sign bit is 0, so the first byte $a_1$ is placed in the high 8 bits and the second byte $a_2$ in the low 8 bits;
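The mantissa scan in steps (3) to (46) and the sign-0 packing in step (47) can be sketched as follows. The sketch covers only the path Example 2 actually takes. What the method does when a mantissa bit equals 1, and how a negative sign is packed, are not shown in this excerpt.

The function names `first_set_count` and `pack_sign0` are hypothetical. The mapping from bit position to string index is an assumption that follows the right-to-left bit numbering used in [0077].

```python
def first_set_count(mantissa):
    """Steps (3)-(46): test bit (24 - count) for count = 1, 2, ..., 23.

    Returns the first count whose mantissa bit is 1, or None if count reaches
    23 without finding one (the Example 2 path). Handling of a found 1 is not
    modelled here.
    """
    assert len(mantissa) == 23
    count = 1
    while True:
        position = 24 - count               # bit positions 1..23, counted from the right
        if mantissa[23 - position] == "1":  # string index 0 holds bit 23
            return count
        if count == 23:                     # step (4)/(46): stop at count = 23
            return None
        count += 1                          # step (5): count = count + 1

def pack_sign0(a1, a2):
    """Step (47) for sign bit 0: a1 in the high 8 bits, a2 in the low 8 bits."""
    if not (0 <= a1 <= 0xFF and 0 <= a2 <= 0xFF):
        raise ValueError("a1 and a2 must each fit in 8 bits")
    return (a1 << 8) | a2

mantissa = "0" * 23            # mantissa of 0.5
a1 = 0b01111110                # step (2): exponent code
assert first_set_count(mantissa) is None
a2 = 0b00000000                # step (46): no 1 bit found, so a2 = 0
word = pack_sign0(a1, a2)
print(format(word, "016b"))    # 0111111000000000
```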

[0100] The floating-point number 0.5 in the new standard format is 01111110 00000000. Its mathematical meaning is 0.5, and the rel...
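The stated meaning of the Example 2 result can be reproduced by a worked equation, under one assumption. The assumption is that $a_1$ is read with the IEEE 754 single-precision bias of 127; the general decoding formula of the new format is not shown in this excerpt. Because the second byte $a_2$ is zero, only the exponent code contributes:

$$
2^{a_1 - 127} = 2^{(01111110)_2 - 127} = 2^{126 - 127} = 2^{-1} = 0.5
$$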
