Three-dimensional tree image fusion method based on environmental perception

An environment-perception and image-fusion technique, applicable to image enhancement, image analysis, image data processing, and related fields, that addresses problems such as unnatural fusion, occlusion, and a flat, paper-like appearance ("paperization").


Embodiment Construction

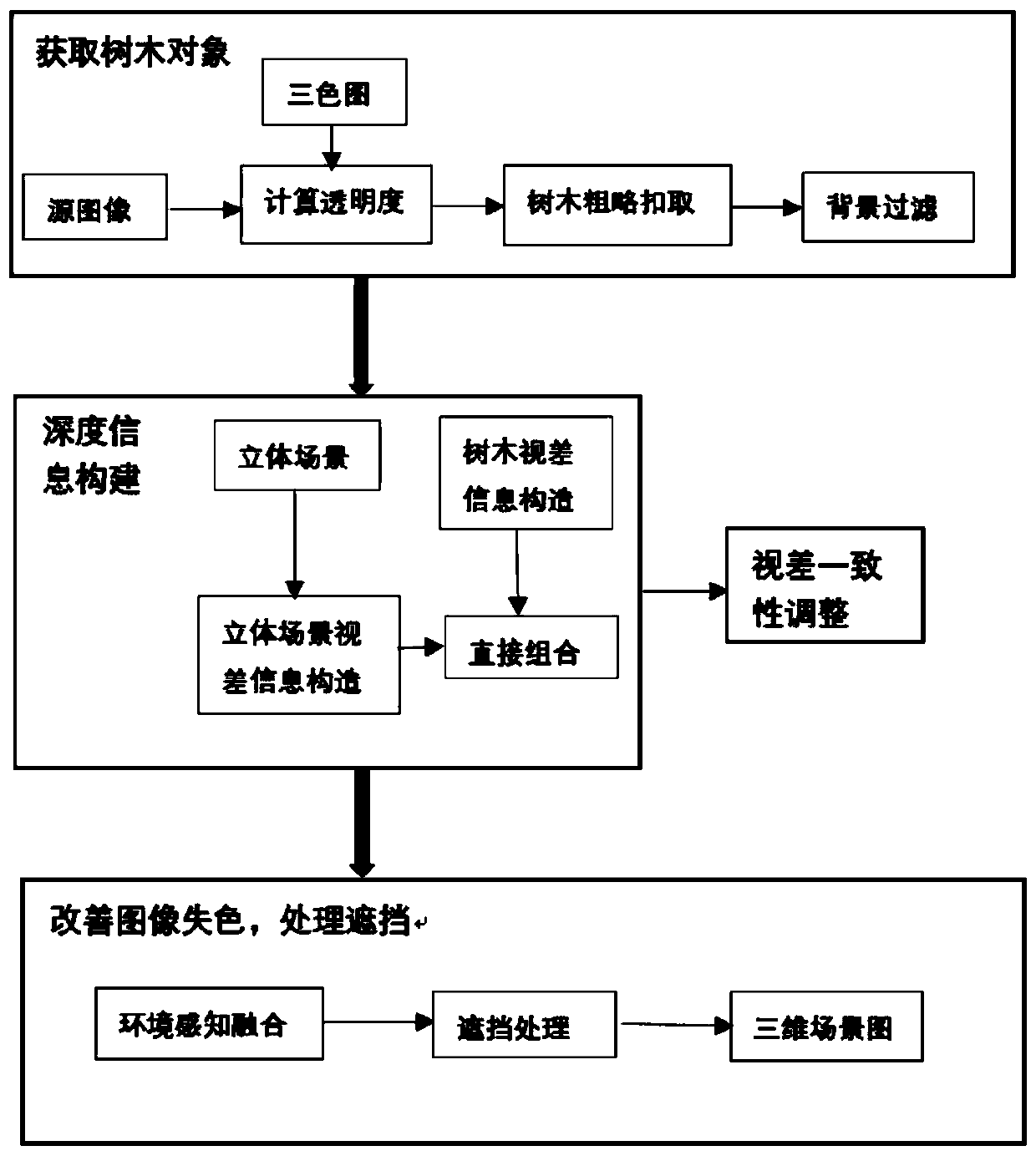

[0066] The technical solution of the present invention will be further described below in conjunction with the accompanying drawings.

[0067] A three-dimensional tree image fusion method based on environmental perception, comprising the following steps:

[0068] 1. Obtain the tree object;

[0069] (11) Obtain the three-color map (trimap), as follows:

[0070] Any pixel of a natural image is a linear combination of the corresponding foreground and background colors:

[0071] $$I_z = \alpha_z F_z + (1 - \alpha_z) B_z \tag{1}$$

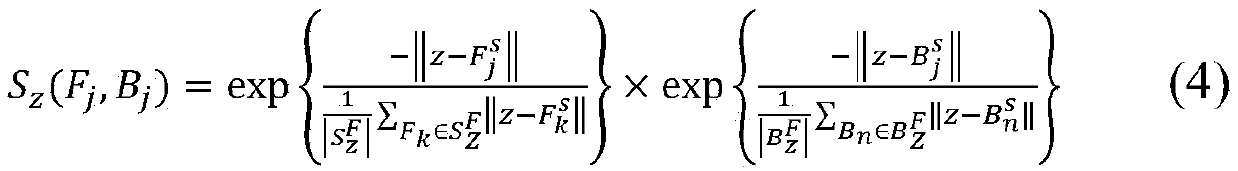

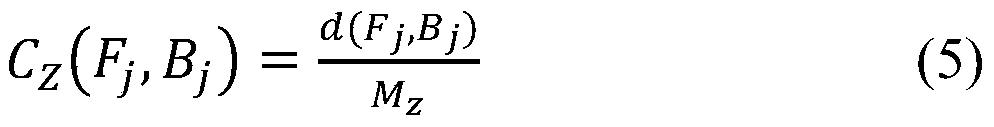

[0072] Here, $z$ denotes any pixel in the image, $I_z$ is the pixel value at $z$, $F_z$ is the foreground color at $z$, $B_z$ is the background color at $z$, and $\alpha_z$ is the weight of the foreground color relative to the background color at $z$, with a value in $[0, 1]$. When $\alpha_z$ is 0, point $z$ is absolute background; when $\alpha_z$ is 1, it is absolute foreground. The three-color map divides the image into three areas: absolute foreground, absolute background...
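As an illustration of the compositing relation in equation (1), the following is a minimal Python sketch, not the patent's implementation. The function names `composite_pixel` and `composite_image`, the nested-list image layout, and the sample colors are assumptions introduced here for illustration only. It shows that an alpha of 0 reproduces the background color, an alpha of 1 reproduces the foreground color, and intermediate values blend the two.

```python
def composite_pixel(alpha, foreground, background):
    """Blend one pixel per channel: I_z = alpha_z * F_z + (1 - alpha_z) * B_z."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return tuple(alpha * f + (1.0 - alpha) * b
                 for f, b in zip(foreground, background))


def composite_image(alpha_map, fg_image, bg_image):
    """Apply the per-pixel blend to images stored as nested lists of RGB tuples.

    The nested-list layout is an assumption made for this sketch.
    """
    return [
        [composite_pixel(a, f, b) for a, f, b in zip(a_row, f_row, b_row)]
        for a_row, f_row, b_row in zip(alpha_map, fg_image, bg_image)
    ]


# Hypothetical sample colors (RGB), chosen only for illustration.
leaf_green = (40.0, 160.0, 60.0)
sky_blue = (120.0, 180.0, 240.0)

absolute_background = composite_pixel(0.0, leaf_green, sky_blue)  # equals sky_blue
absolute_foreground = composite_pixel(1.0, leaf_green, sky_blue)  # equals leaf_green
mixed = composite_pixel(0.25, leaf_green, sky_blue)               # partial blend

# A 1x3 image: one background pixel, one mixed pixel, one foreground pixel.
row = composite_image([[0.0, 0.25, 1.0]],
                      [[leaf_green] * 3],
                      [[sky_blue] * 3])
```

Matting works in the opposite direction: given the observed $I_z$, it estimates $\alpha_z$ (and usually $F_z$ and $B_z$) for the pixels that are neither absolute foreground nor absolute background.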
