Automatic identification and positioning method for a moving hopper based on depth visual information

A depth-vision automatic recognition technology, applied in image data processing, image enhancement, instruments, etc. It addresses problems such as inapplicability to the research object, and achieves fast calculation, good results, and a high degree of automation.


Embodiment Construction

[0023] The present invention is described in detail below with reference to the accompanying drawings and a specific embodiment. This embodiment is carried out on the premise of the technical solution of the present invention and provides a detailed implementation and process, but the protection scope of the present invention is not limited to the following example.

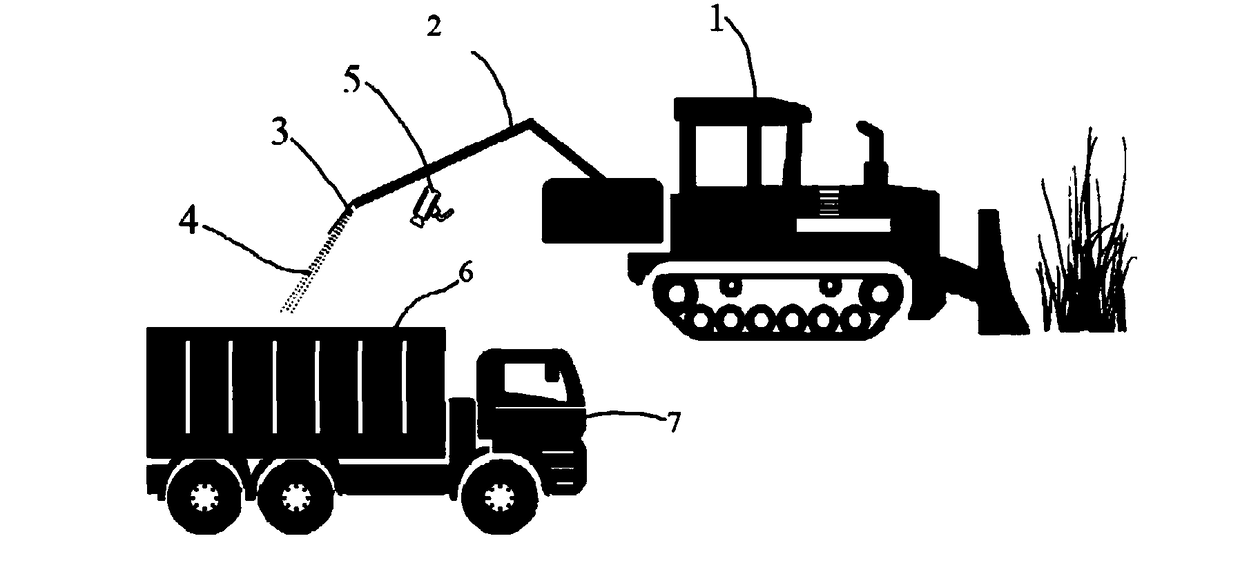

[0024] As shown in Figure 1, 1 is the harvesting vehicle of the green feeding machine, 2 is the mechanical arm, 3 is the nozzle, 4 is the green feed, 5 is the camera, 6 is the bucket, and 7 is the trailer. The green feed is thrown out by the throwing device formed by the mechanical arm and is received by the trailer's body. The key to an automatic filling system in which a computer replaces manual operation is determining the relative position between the nozzle on the mechanical arm of the green feeding machine and the trailer body. After the relative positions of the two are determined, the drop point of the green feed is determined accordin...
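The relative-position idea in paragraph [0024] can be illustrated with a minimal sketch. It is not the patent's procedure, which this excerpt does not show. It assumes a pinhole depth camera with known intrinsics (`fx`, `fy`, `cx`, `cy`), that the corners of the trailer body have already been located in the camera frame, and that the nozzle position in the same frame is known. All function and parameter names here are hypothetical.

```python
from typing import Sequence, Tuple

Point3D = Tuple[float, float, float]


def backproject(u: float, v: float, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Standard pinhole back-projection of pixel (u, v) with a depth value
    into camera-frame coordinates (same length unit as depth)."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def centroid(points: Sequence[Point3D]) -> Point3D:
    """Arithmetic mean of a non-empty set of 3D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def relative_position(nozzle: Point3D,
                      body_corners: Sequence[Point3D]) -> Point3D:
    """Offset from the nozzle to the center of the trailer body.
    Assumption: the body center is approximated by the corner centroid."""
    c = centroid(body_corners)
    return (c[0] - nozzle[0], c[1] - nozzle[1], c[2] - nozzle[2])


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    corners = [(-1.0, -1.0, 4.0), (1.0, -1.0, 4.0),
               (1.0, 1.0, 4.0), (-1.0, 1.0, 4.0)]
    print(relative_position((0.5, 0.0, 1.0), corners))
```

The offset is expressed in the camera frame. A real system would also need the transform between the camera and the mechanical arm, which is outside the scope of this sketch.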
