Model parameter training method and device, server and storage medium

A model parameter training method, applied in the information field, that addresses the high communication cost and network overhead of gradient transmission. Convergence speed and quantization results are essentially lossless, while communication cost and network overhead are reduced and operating efficiency is improved.

Embodiment Construction

[0027] To make the purpose, technical solutions, and advantages of the present application clearer, the embodiments of the present application are described in further detail below with reference to the accompanying drawings.

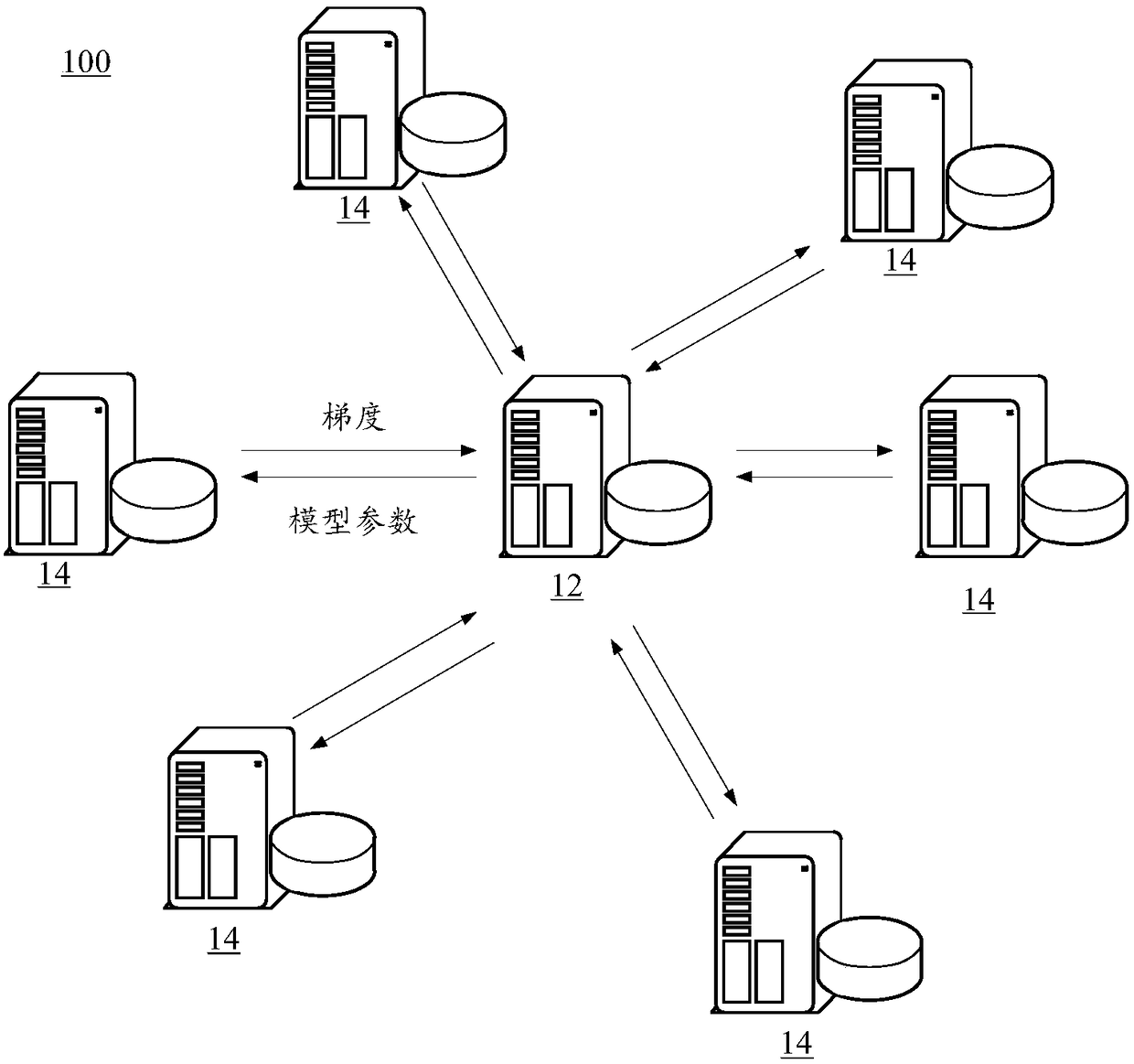

[0028] Figure 1 is a schematic structural diagram of a model training system provided by an embodiment of this application. Referring to Figure 1, the model training system 100 includes a main computing node 12 and N sub-computing nodes 14, where N is a positive integer. The main computing node 12 is connected to the N sub-computing nodes 14 through a network. The main computing node 12 or a sub-computing node 14 may be a server, a computer, or another device with a data computing function; the embodiments of the present application place no limitation on the main computing node 12 or the sub-computing nodes 14.
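The topology described in [0028] can be sketched as a small data structure. This is only an illustrative sketch, not the implementation in the application: the class names `ComputingNode` and `ModelTrainingSystem`, the helper `build_system`, and the node identifiers are all hypothetical, and the sketch models only the main-to-sub connections stated in the text.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ComputingNode:
    """A server, computer, or other device with a data computing function."""
    node_id: str  # hypothetical identifier, not taken from the application


@dataclass
class ModelTrainingSystem:
    """One main computing node connected to N sub-computing nodes."""
    main_node: ComputingNode
    sub_nodes: List[ComputingNode] = field(default_factory=list)

    def __post_init__(self) -> None:
        # The description requires N to be a positive integer.
        if len(self.sub_nodes) < 1:
            raise ValueError("N must be a positive integer")

    @property
    def n(self) -> int:
        """Number of sub-computing nodes (N)."""
        return len(self.sub_nodes)

    def links(self) -> List[Tuple[str, str]]:
        """Network connections between the main node and each sub-node."""
        return [(self.main_node.node_id, s.node_id) for s in self.sub_nodes]


def build_system(n: int) -> ModelTrainingSystem:
    """Build a system with one main node and n sub-nodes (hypothetical helper)."""
    main = ComputingNode("main-12")
    subs = [ComputingNode(f"sub-14-{i}") for i in range(n)]
    return ModelTrainingSystem(main, subs)
```

For example, `build_system(3).links()` lists three connections, one from the main node to each sub-node, while `build_system(0)` raises `ValueError` because N must be at least 1.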

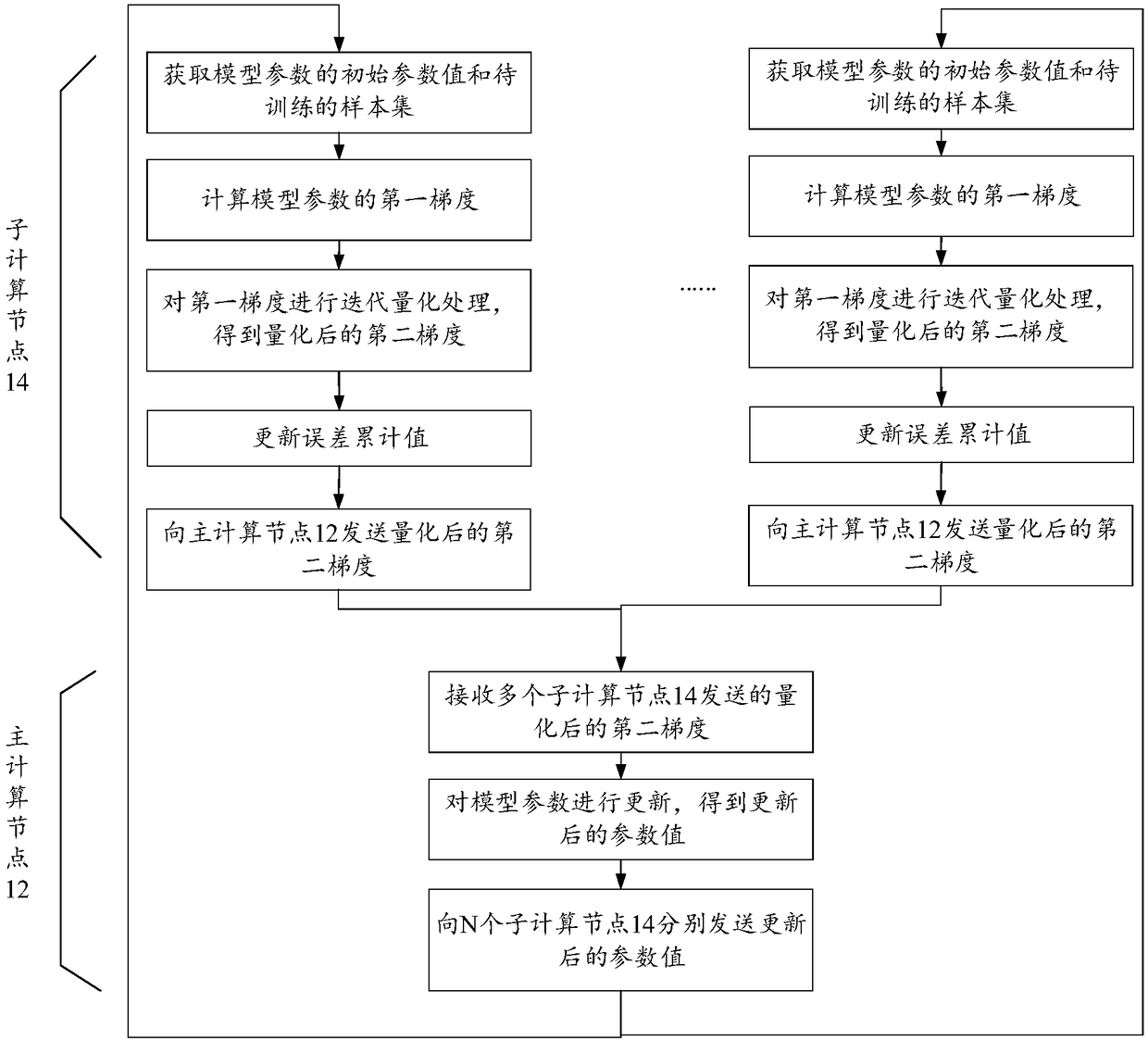

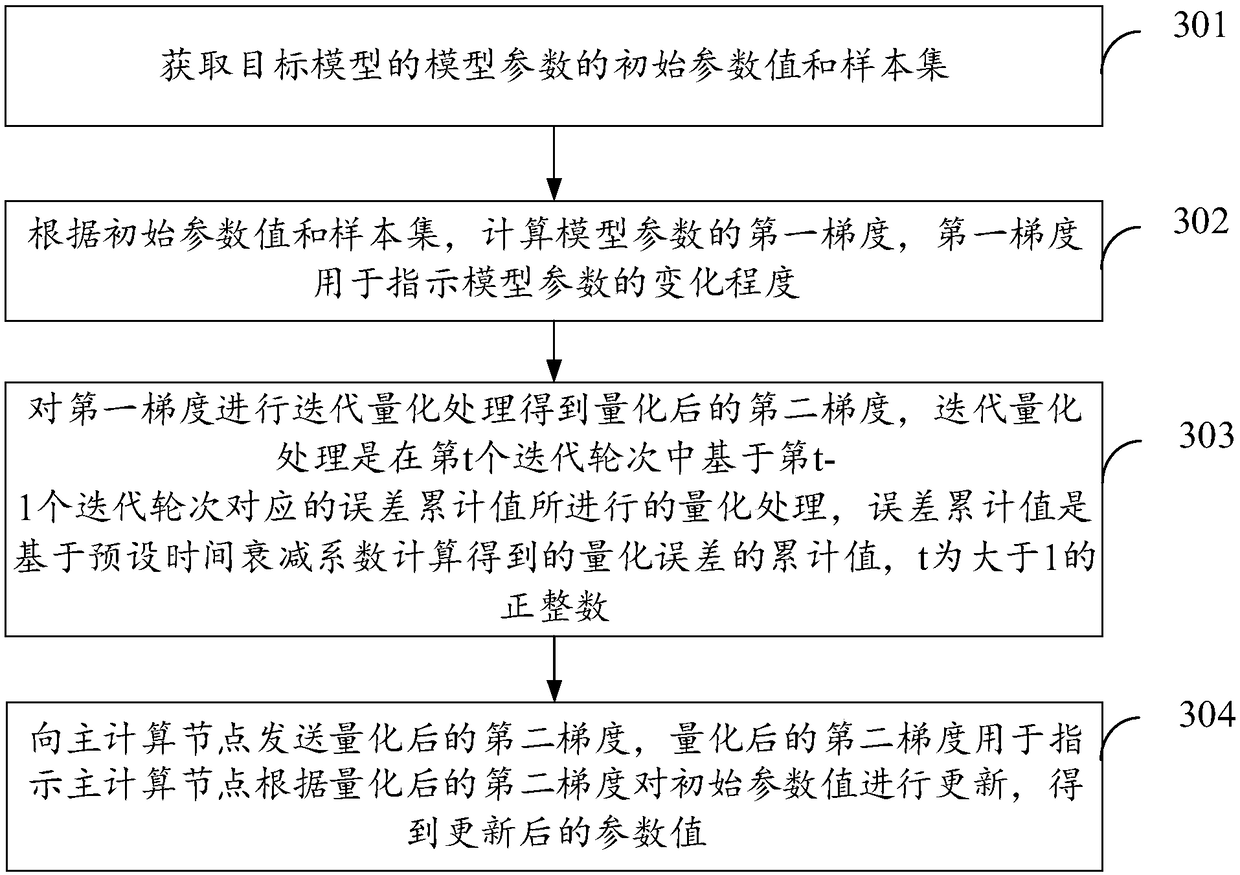

[0029] As shown in Figure 2, the interaction process between the main computing node 12 and N sub-computin...
