Compression device for deep neural network

A deep neural network and compressor technology, applied in biological neural network models, neural architectures, physical implementation, etc. It addresses problems such as the heavy burden on the on-chip network and the increased on-chip network transmission delay, both of which degrade system performance, and achieves the effect of reducing delay.

Embodiment Construction

[0047] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

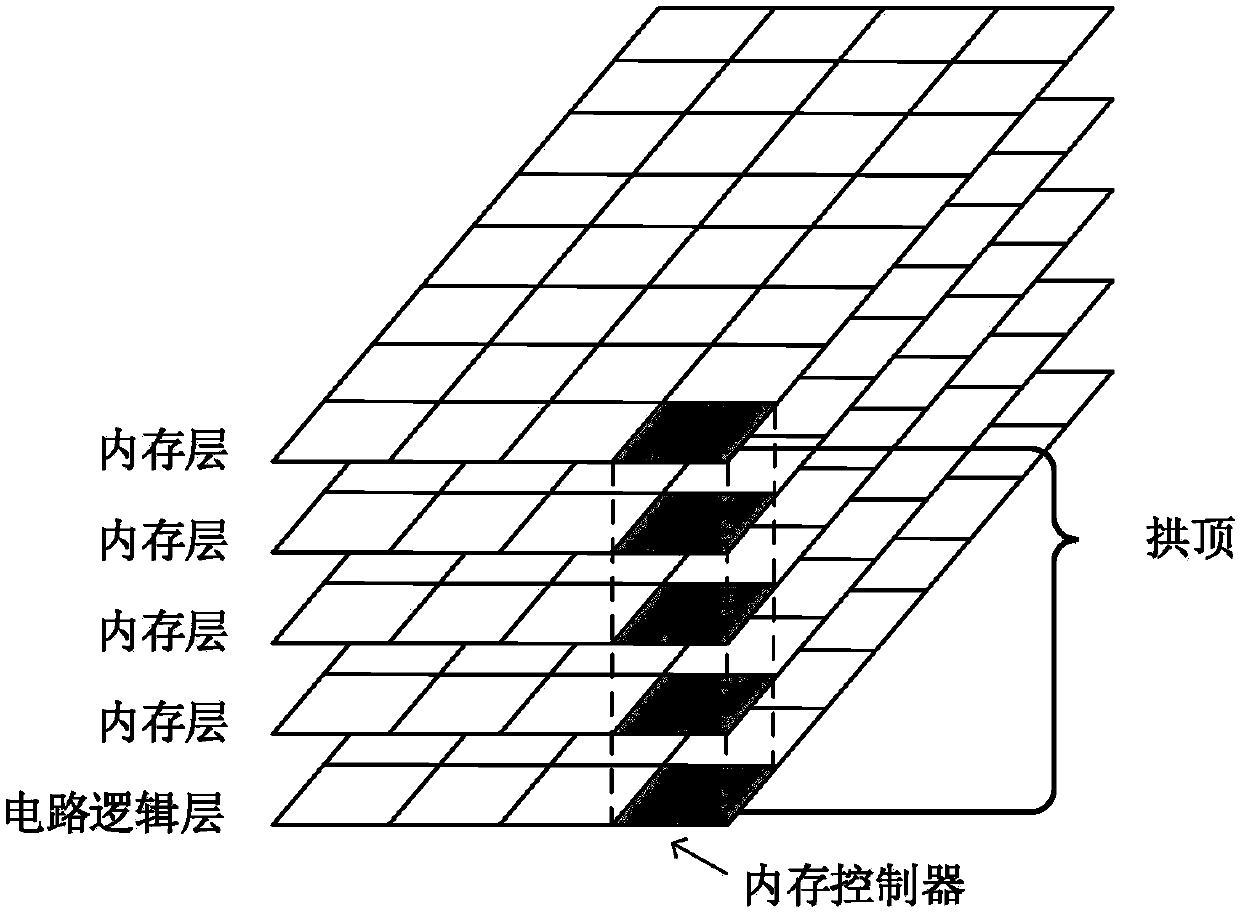

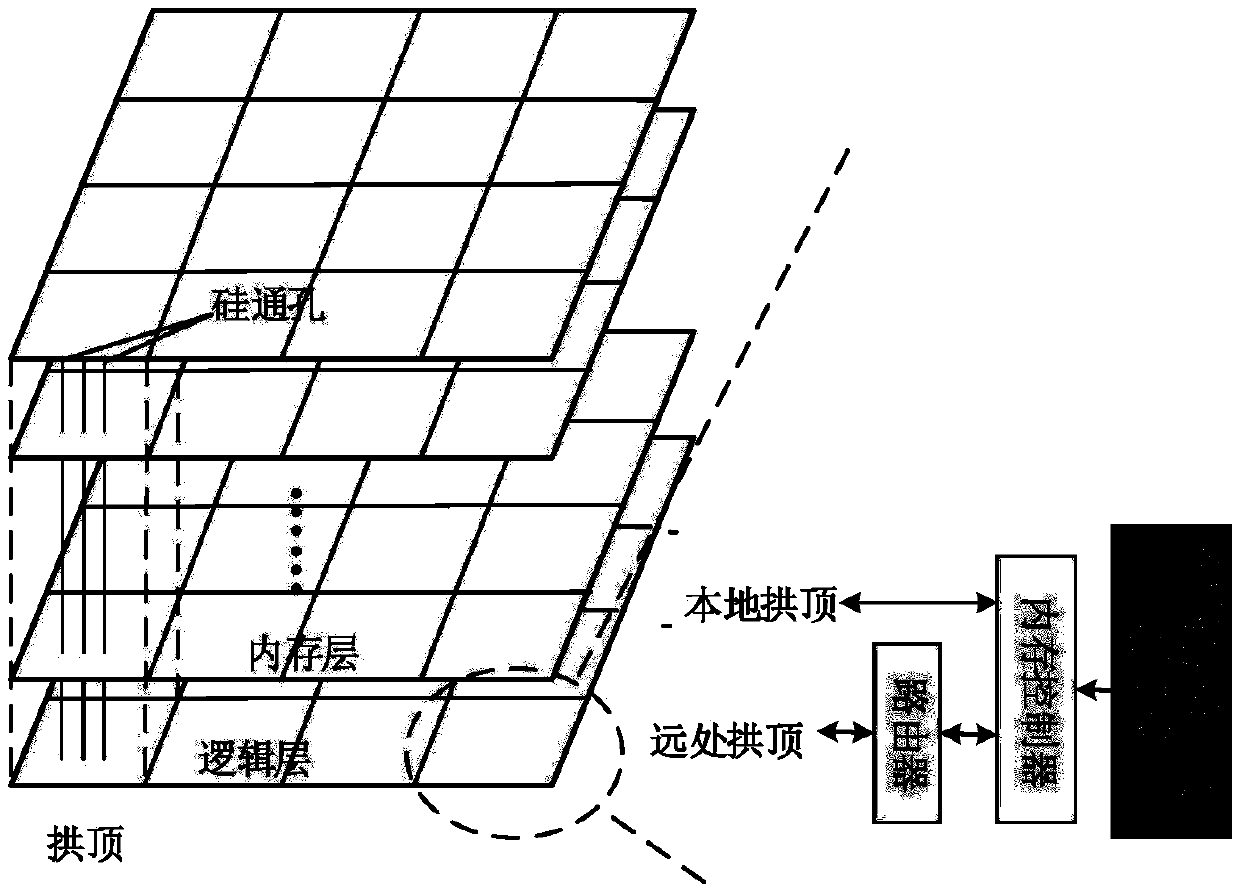

[0048] Figure 2 shows a schematic structural diagram of a prior-art deep neural network acceleration system based on 3D memory. As shown in Figure 2, a deep neural network computing unit is integrated on the logic layer of the 3D memory and is connected, through a memory controller, to the local Vault that contains that memory controller. The memory controllers of different Vaults exchange data over a shared on-chip network, and the memory controller of the local Vault routes data to and from remote Vaults through the routers of the on-chip network.
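To make the prior-art data path more concrete, the following Python sketch models the Vault routers as nodes of a 2D mesh on-chip network and counts the hops a transfer between two Vaults would take. The 4x4 mesh, the 16-Vault count, dimension-order (XY) routing, and the names `Vault`, `xy_route`, and `hop_count` are hypothetical assumptions for illustration only; the source does not specify the on-chip network topology or routing algorithm.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical: 16 Vaults arranged as a 4x4 mesh (not specified in the source).
MESH_WIDTH = 4
NUM_VAULTS = MESH_WIDTH * MESH_WIDTH


@dataclass(frozen=True)
class Vault:
    vault_id: int

    def __post_init__(self) -> None:
        if not 0 <= self.vault_id < NUM_VAULTS:
            raise ValueError(f"vault_id must be in [0, {NUM_VAULTS})")

    @property
    def coords(self) -> tuple[int, int]:
        """(x, y) position of this Vault's router in the assumed mesh."""
        return self.vault_id % MESH_WIDTH, self.vault_id // MESH_WIDTH


def xy_route(src: Vault, dst: Vault) -> list[int]:
    """Router IDs visited under dimension-order (XY) routing, endpoints included."""
    x, y = src.coords
    dst_x, dst_y = dst.coords
    path = [src.vault_id]
    while x != dst_x:  # travel along the X dimension first
        x += 1 if dst_x > x else -1
        path.append(y * MESH_WIDTH + x)
    while y != dst_y:  # then along the Y dimension
        y += 1 if dst_y > y else -1
        path.append(y * MESH_WIDTH + x)
    return path


def hop_count(src: Vault, dst: Vault) -> int:
    """Number of router-to-router links a transfer between two Vaults crosses."""
    return len(xy_route(src, dst)) - 1
```

Under these assumptions, `hop_count(Vault(0), Vault(15))` returns 6 (path 0, 1, 2, 3, 7, 11, 15), while a request served by the local Vault crosses no links. Since each hop adds router and link latency, accesses to distant remote Vaults place more load on the shared on-chip network than local accesses do.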

[0049] Referring to Figure 2, when the deep neural network computing unit starts performing neural network computations, it must send its data requests to the memory controller of the local Vault to which it is connected; if the location ...
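The request path can be sketched as follows: the computing unit hands every request to its local Vault's memory controller, which serves it locally when the address maps to that Vault and otherwise forwards it to a remote Vault over the on-chip network, as described in [0048]. This is a minimal sketch, assuming a 16-Vault 4x4 mesh, 256-byte address interleaving across Vaults, and hop counts equal to the minimal mesh distance; `vault_of`, `mesh_hops`, and `LocalVaultController` are hypothetical names, not the patent's design.

```python
from dataclasses import dataclass

# Hypothetical assumptions (not stated in the source): 16 Vaults in a 4x4 mesh,
# physical addresses interleaved across Vaults in 256-byte blocks.
MESH_WIDTH = 4
NUM_VAULTS = 16
INTERLEAVE_BYTES = 256


def vault_of(address: int) -> int:
    """Map a physical address to the Vault that stores it (assumed interleaving)."""
    return (address // INTERLEAVE_BYTES) % NUM_VAULTS


def mesh_hops(src: int, dst: int) -> int:
    """Minimal hop count (Manhattan distance) between two Vault routers."""
    sx, sy = src % MESH_WIDTH, src // MESH_WIDTH
    dx, dy = dst % MESH_WIDTH, dst // MESH_WIDTH
    return abs(sx - dx) + abs(sy - dy)


@dataclass
class LocalVaultController:
    """Memory controller of the Vault to which the computing unit is attached."""
    vault_id: int
    local_requests: int = 0
    remote_requests: int = 0
    noc_byte_hops: int = 0  # payload bytes multiplied by links traversed

    def handle(self, address: int, size: int) -> str:
        target = vault_of(address)
        if target == self.vault_id:
            # Data resides in the local Vault: no on-chip network traffic.
            self.local_requests += 1
            return "local"
        # Data resides in a remote Vault: the payload crosses the on-chip
        # network via the routers (request headers are ignored here).
        self.remote_requests += 1
        self.noc_byte_hops += size * mesh_hops(self.vault_id, target)
        return f"remote:{target}"
```

With these assumptions, a computing unit attached to Vault 0 that reads 16 consecutive 256-byte blocks (`for addr in range(0, 4096, 256): ctrl.handle(addr, 256)`) serves only one block locally; the other 15 cross the network for a total of 12,288 byte-hops, illustrating how remote accesses accumulate on-chip network load.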
