Optimization method for task-parallel programming models in a virtualized environment

A technique in the field of virtualization and programming models, applied to optimizing task-parallel programming models in a virtualized environment. It addresses the lack of virtualization-aware optimization, low performance, and wasted computing resources, and achieves low overhead, less resource waste, and reduced scheduling delay.


AI Technical Summary

Problems solved by technology

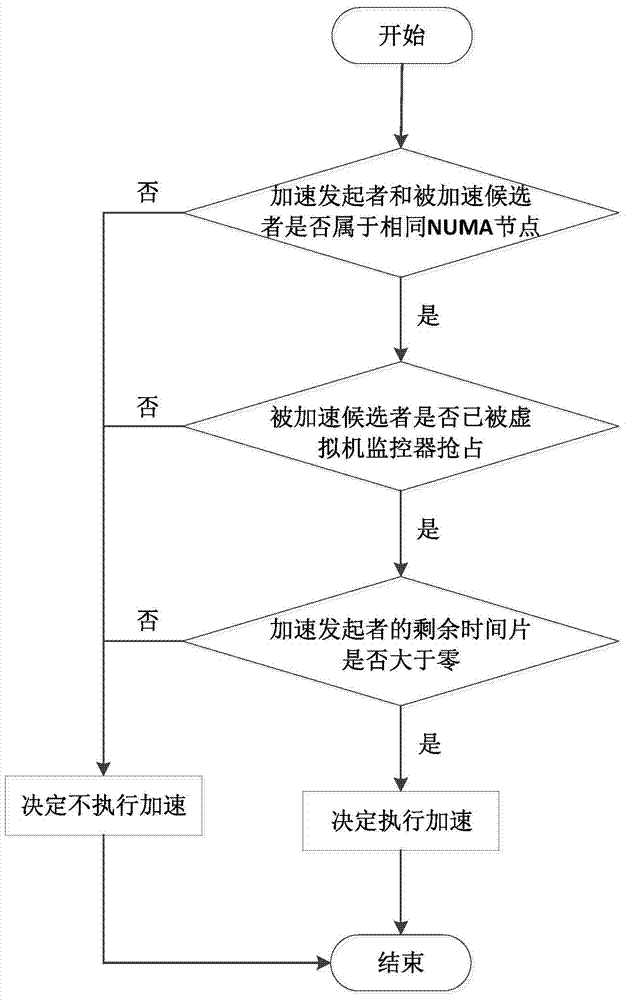


Examples

Embodiment 1

[0059] Table 1. Experimental configuration for Embodiment 1

[0060]

[0061] As listed in Table 1, Embodiment 1 deploys 8 guest virtual machines, each with 16 virtual CPUs, on a single 16-core physical server. Starting and running 1, 2, 4, and 8 guest virtual machines simulates scenarios in which a single physical core is shared by 1, 2, 4, and 8 virtual CPUs, respectively. Embodiment 1 runs the Conjugate Gradient (CG) application, built on the task-parallel programming model, in guest virtual machines 1 to 8, and measures the running time of CG under Cilk++, under BWS, and under the present invention. CG comes from a suite of applications, developed by NASA, that represent computational fluid dynamics workloads. Cilk++ is the most general task-parallel programming model. BWS, which is implemented on top of Cilk++, is the best task-parallel programming model for the traditional single-machine, multi-core, multi-application environment. In Embodiment 1, accelerati...
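The sharing ratios in this setup follow directly from the stated core counts. The short Python sketch below only works through that arithmetic; the constant and function names are illustrative and do not come from the patent, and it assumes every running guest uses all 16 of its virtual CPUs.

```python
# Illustrative arithmetic only; names are hypothetical, not from the patent.
PHYSICAL_CORES = 16  # cores on the single physical server
VCPUS_PER_VM = 16    # virtual CPUs per guest virtual machine


def vcpus_per_core(running_vms: int) -> float:
    """Average number of virtual CPUs sharing one physical core."""
    return running_vms * VCPUS_PER_VM / PHYSICAL_CORES


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        print(f"{n} guest VM(s): {vcpus_per_core(n):g} vCPU(s) per physical core")
```

With 1, 2, 4, and 8 guests running, the ratio comes out to 1, 2, 4, and 8 virtual CPUs per physical core, which matches the four scenarios described for Embodiment 1.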
