A multi-modal non-contact sentiment analysis recording system

A sentiment analysis and recording system, applied in the field of human-computer emotional interaction, which addresses problems such as high feature dimensionality, a large number of features, and the curse of dimensionality.


Embodiment Construction

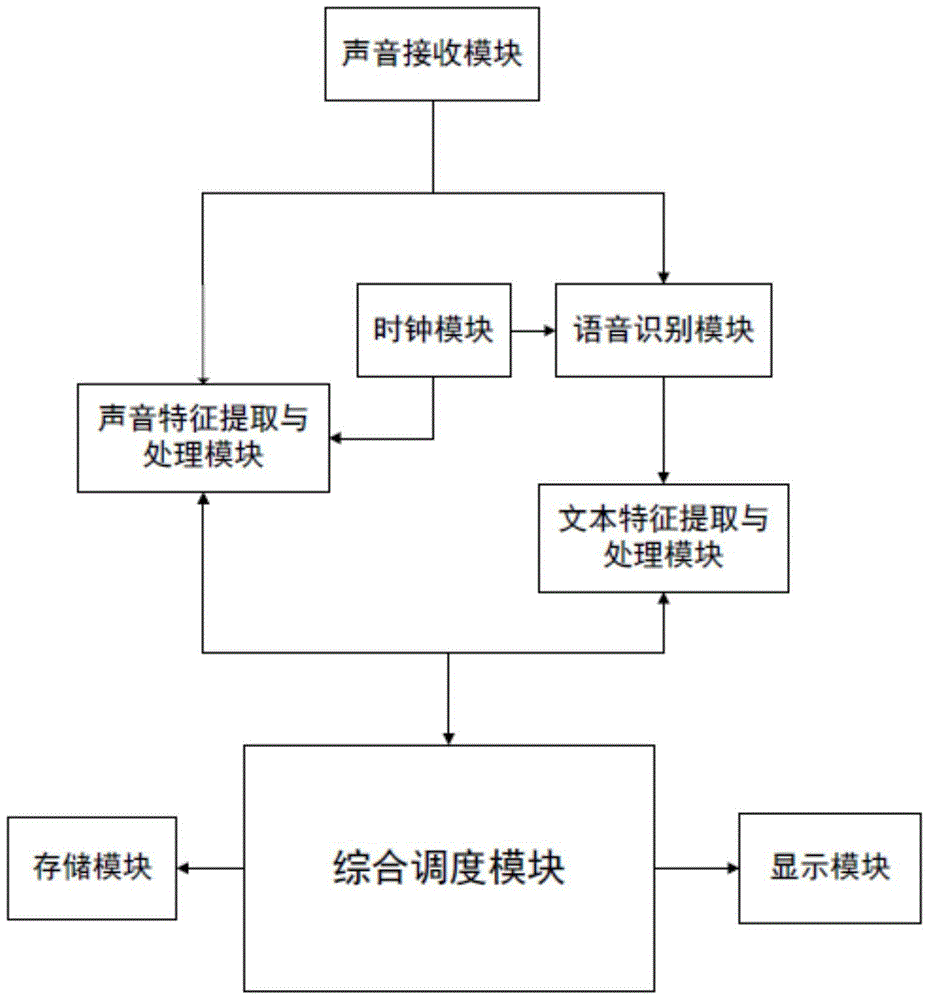

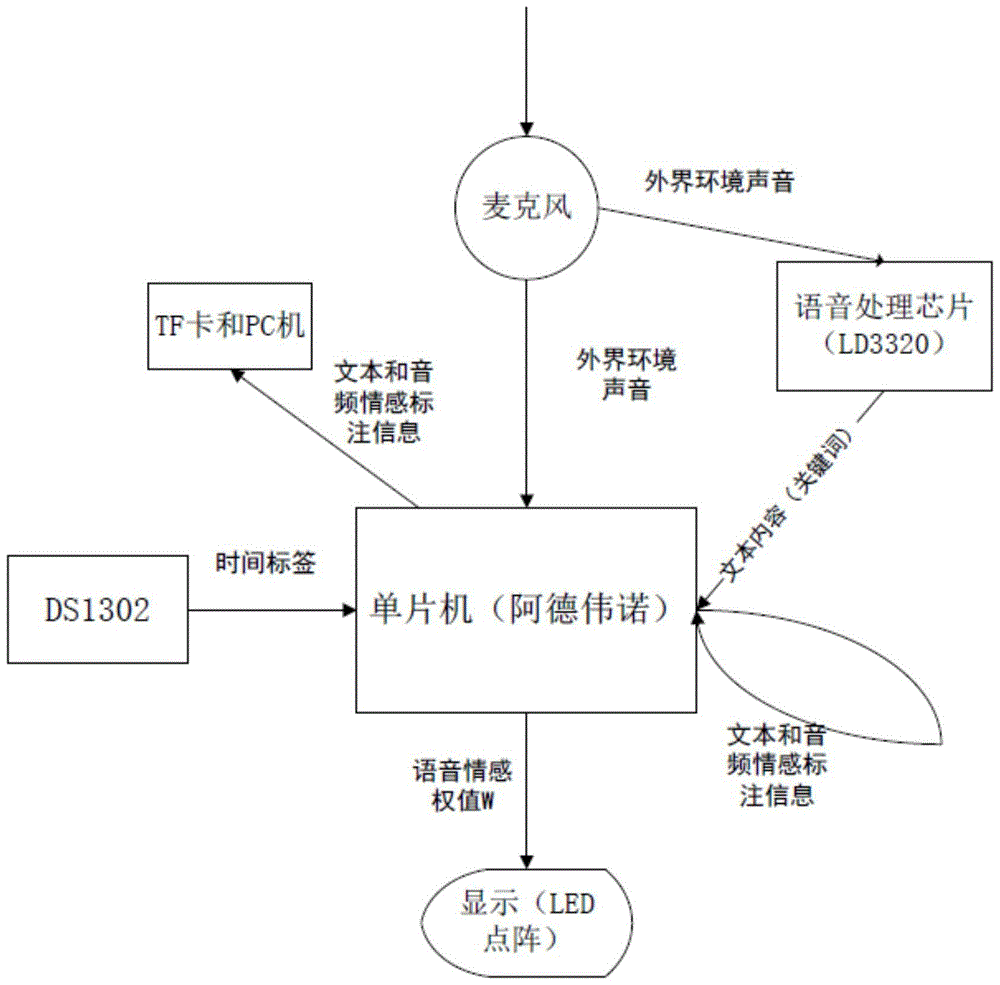

[0050] In this example, as shown in Figure 1, the multimodal non-contact emotion analysis and recording system comprises:

- sound receiving module: receives sound from the external environment;
- sound feature extraction and processing module: obtains emotion labeling information from the speech audio;
- speech recognition module: converts speech content into text;
- text feature extraction and processing module: obtains emotion labeling information from the speech text;
- comprehensive scheduling module: handles all data processing, storage, and scheduling tasks;
- display module: displays the detected emotional state of the speech;
- clock module: records time and provides time labels;
- storage module: records the emotion labeling information of all input speech while the system is powered on;
- button module: used for switching, setting the time, selectio...
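To make the data flow between these modules concrete, here is a minimal Python sketch of how the comprehensive scheduling module might combine the audio and text emotion labels, attach a time label from the clock module, and append the result to the storage module. The source does not say how the two labels are combined, so the weighted late fusion, the four-emotion label set, and all names (`EmotionRecord`, `fuse_scores`, `Scheduler`, `audio_weight`) are hypothetical. The sound feature extraction, speech recognition, and text feature extraction modules are stubbed out as precomputed inputs.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Dict, List

# Hypothetical emotion label set; the source does not specify one.
EMOTIONS = ("neutral", "happy", "sad", "angry")


@dataclass
class EmotionRecord:
    """One stored entry: time label, transcript, per-modality scores, fused label."""
    timestamp: datetime
    text: str
    audio_scores: Dict[str, float]
    text_scores: Dict[str, float]
    fused_label: str


def fuse_scores(audio: Dict[str, float], text: Dict[str, float],
                audio_weight: float = 0.5) -> Dict[str, float]:
    """Weighted late fusion of per-modality emotion scores (an assumed rule)."""
    if not 0.0 <= audio_weight <= 1.0:
        raise ValueError("audio_weight must lie in [0, 1]")
    w = audio_weight
    # Emotions missing from one modality contribute a score of 0 from it.
    return {e: w * audio.get(e, 0.0) + (1 - w) * text.get(e, 0.0) for e in EMOTIONS}


@dataclass
class Scheduler:
    """Hypothetical stand-in for the comprehensive scheduling module."""
    audio_weight: float = 0.5
    records: List[EmotionRecord] = field(default_factory=list)  # plays the storage module

    def process(self, transcript: str, audio_scores: Dict[str, float],
                text_scores: Dict[str, float], now: datetime) -> EmotionRecord:
        fused = fuse_scores(audio_scores, text_scores, self.audio_weight)
        label = max(fused, key=fused.get)  # the state the display module would show
        record = EmotionRecord(now, transcript, audio_scores, text_scores, label)
        self.records.append(record)  # retained for the powered-on session
        return record


if __name__ == "__main__":
    sched = Scheduler(audio_weight=0.6)
    rec = sched.process(
        "I can't believe it worked",
        audio_scores={"happy": 0.7, "neutral": 0.2, "angry": 0.1},  # from audio features
        text_scores={"happy": 0.5, "neutral": 0.4, "sad": 0.1},     # from text features
        now=datetime(2024, 1, 1, 12, 0, 0),                         # from the clock module
    )
    print(rec.timestamp.isoformat(), rec.fused_label)
```

With `audio_weight=0.6` the fused "happy" score is 0.6 × 0.7 + 0.4 × 0.5 = 0.62, so the record is labeled "happy". Late fusion is used here only because it keeps each modality's pipeline independent, which matches the separate audio and text modules in the paragraph; feature-level fusion would be an equally plausible reading.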
