Quick direct memory access (DMA) ping-pong caching method

A fast ping-pong caching technique, applied in the field of information processing, that reduces the amount of data moved by DMA.

Embodiment Construction

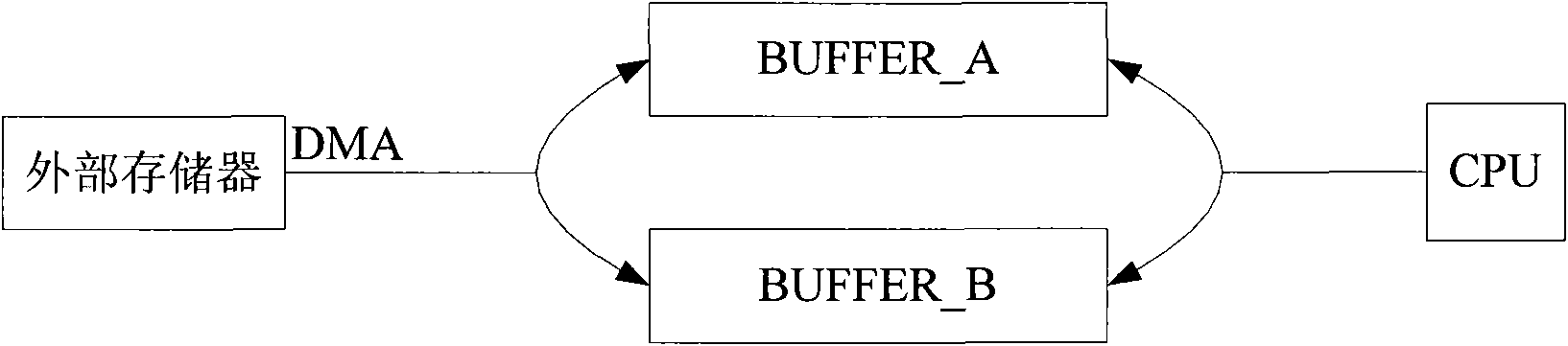

[0014] The method of the present invention will be described in further detail below in conjunction with the accompanying drawings.

[0015] Referring to Figure 2 and Figure 3, assume for simplicity that the CPU of the present invention can process a data block of M bytes at a time, that the destination cache has first address Addr and size N bytes, and that two adjacent data blocks moved by DMA during ping-pong caching share B bytes of identical data, so that the ratio of B to M is a = B / M. The number of DMA transfers needed to cover the N-byte destination cache once is:

[0016] L=floor((N-M) / (M(1-a)))+1, where floor() represents rounding down.
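As a quick check of the formula, the following Python sketch computes L for given N, M and B. The function name and the sample values are illustrative assumptions, not taken from the patent. Because a = B / M, the denominator M(1-a) equals M - B, so the sketch stays in integer arithmetic; it also assumes 0 <= B < M and N >= M, conditions the text does not state explicitly.

```python
def dma_transfer_count(n_bytes: int, m_bytes: int, b_bytes: int) -> int:
    """Return L = floor((N - M) / (M * (1 - a))) + 1, with a = B / M.

    n_bytes: size N of the destination cache
    m_bytes: size M of one data block moved by DMA
    b_bytes: bytes B shared by two adjacent data blocks
    """
    # Assumed validity conditions (not stated in the source text).
    if not 0 <= b_bytes < m_bytes:
        raise ValueError("overlap B must satisfy 0 <= B < M")
    if n_bytes < m_bytes:
        raise ValueError("destination cache must hold at least one M-byte block")

    # M * (1 - a) simplifies to M - B: the new bytes each transfer contributes.
    stride = m_bytes - b_bytes
    # Integer floor division implements floor().
    return (n_bytes - m_bytes) // stride + 1


if __name__ == "__main__":
    # Illustrative values: N = 4096, M = 1024, B = 256, so a = 0.25.
    print(dma_transfer_count(4096, 1024, 256))  # prints 5
```

With no overlap (B = 0) the result is simply how many whole M-byte blocks fit in N bytes; for example, N = 4096 and M = 1024 give L = 4.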

[0017] The present invention is accomplished according to the following steps:

[0018] Step 1: Start a DMA transfer of an M-byte data block. The destination address is Addr, the first address of the destination cache, and the address interval moved to the destination c...
