61 results for "Cache language model" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

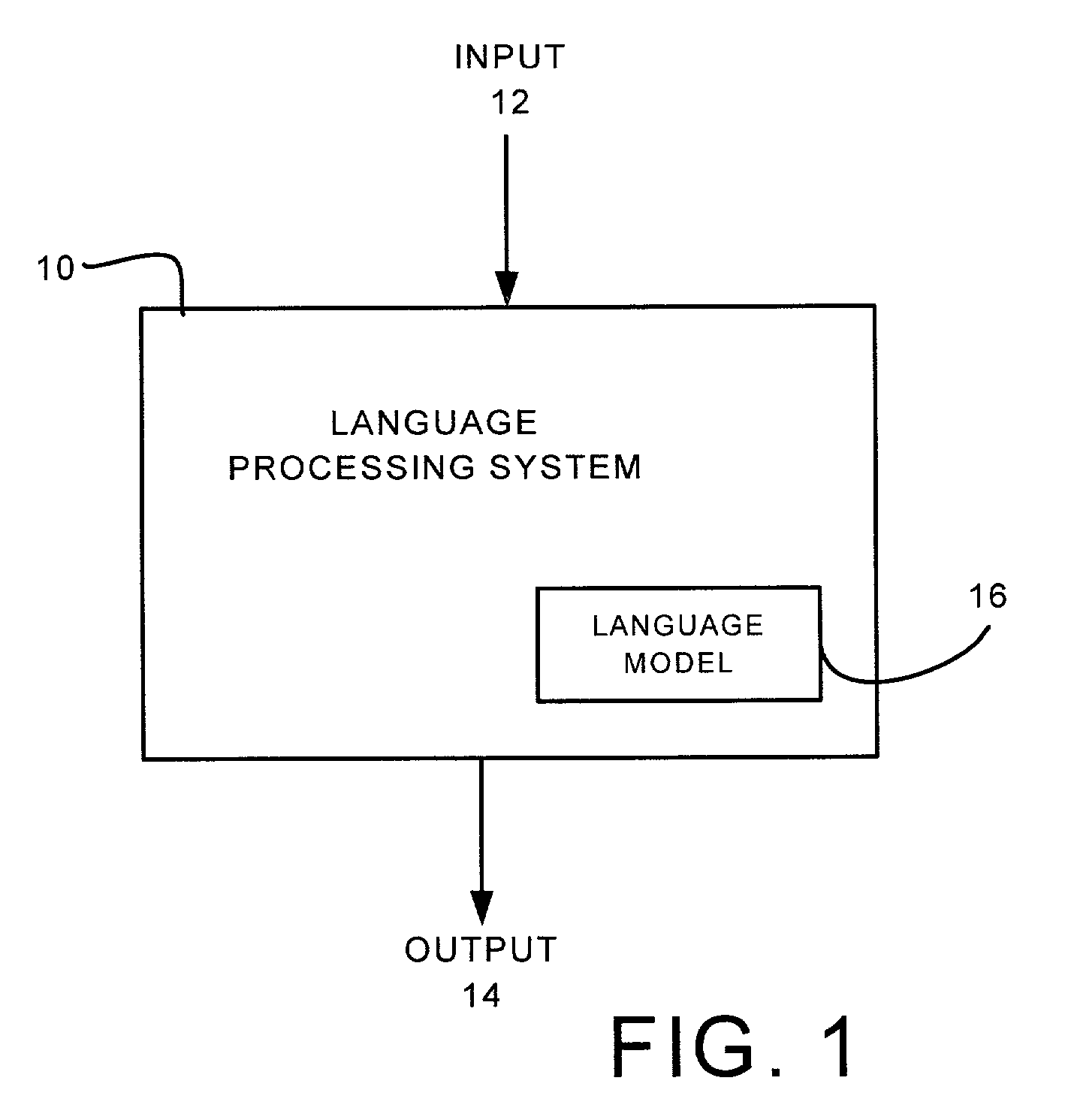

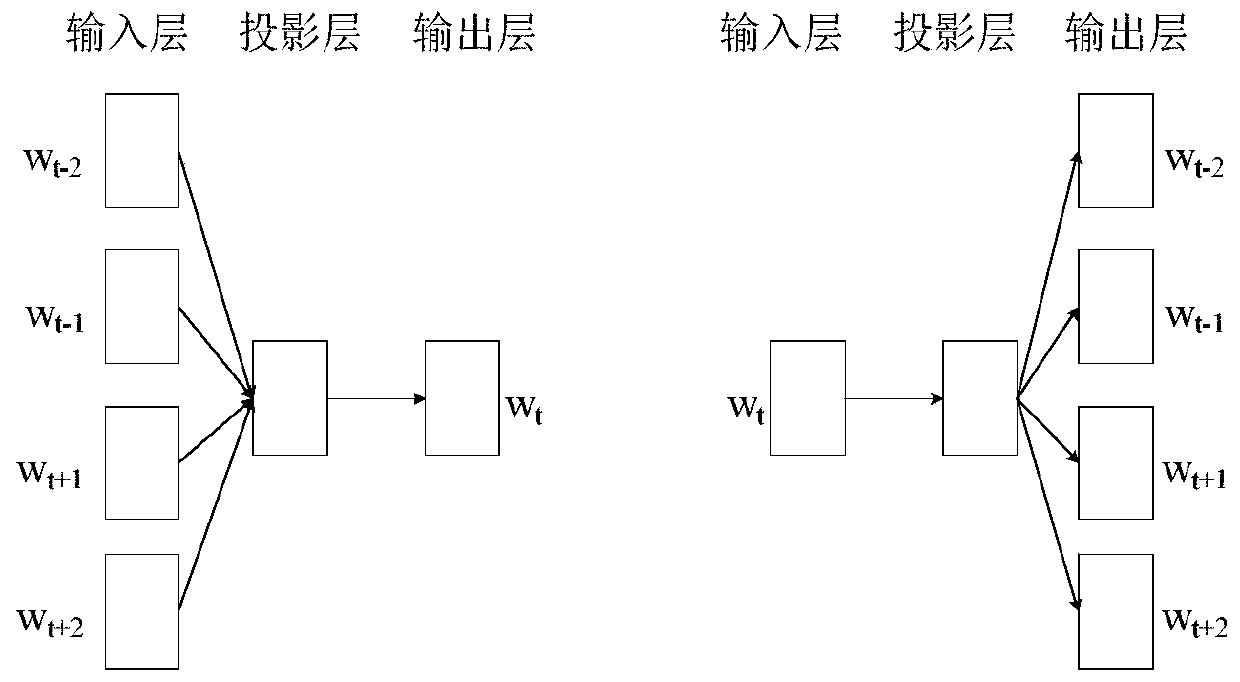

A cache language model is a type of statistical language model. These occur in the natural language processing subfield of computer science and assign probabilities to given sequences of words by means of a probability distribution. Statistical language models are key components of speech recognition systems and of many machine translation systems: they tell such systems which possible output word sequences are probable and which are improbable. The particular characteristic of a cache language model is that it contains a cache component and assigns relatively high probabilities to words or word sequences that occur elsewhere in a given text. The primary, but by no means sole, use of cache language models is in speech recognition systems.
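
The interpolation idea behind a cache language model can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not taken from any patent below: both the static model and the cache are unigram models, the cache is a fixed-size window of recent words, and the weight `lam` is a hypothetical tuning parameter.

```python
from collections import Counter, deque


class CacheLanguageModel:
    """Static unigram model interpolated with a unigram cache of recently seen words.

    P(w) = lam * P_cache(w) + (1 - lam) * P_static(w)
    """

    def __init__(self, static_probs, lam=0.2, cache_size=200):
        self.static_probs = static_probs        # word -> probability from the static model
        self.lam = lam                          # share of probability mass given to the cache
        self.window = deque(maxlen=cache_size)  # the most recent words of the current text
        self.counts = Counter()                 # word counts inside the window

    def observe(self, word):
        """Push a word of the current text into the cache, evicting the oldest if full."""
        if len(self.window) == self.window.maxlen:
            oldest = self.window[0]             # deque.append will drop this word
            self.counts[oldest] -= 1
            if self.counts[oldest] == 0:
                del self.counts[oldest]
        self.window.append(word)
        self.counts[word] += 1

    def prob(self, word):
        """Interpolated probability; falls back to the static model while the cache is empty."""
        static = self.static_probs.get(word, 0.0)
        if not self.window:
            return static
        cache = self.counts[word] / len(self.window)
        return self.lam * cache + (1 - self.lam) * static
```

Words that already appeared in the text get a boost: with `lam=0.5`, a static probability of 0.1, and a four-word window holding "cache" twice, P("cache") rises from 0.1 to 0.3.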

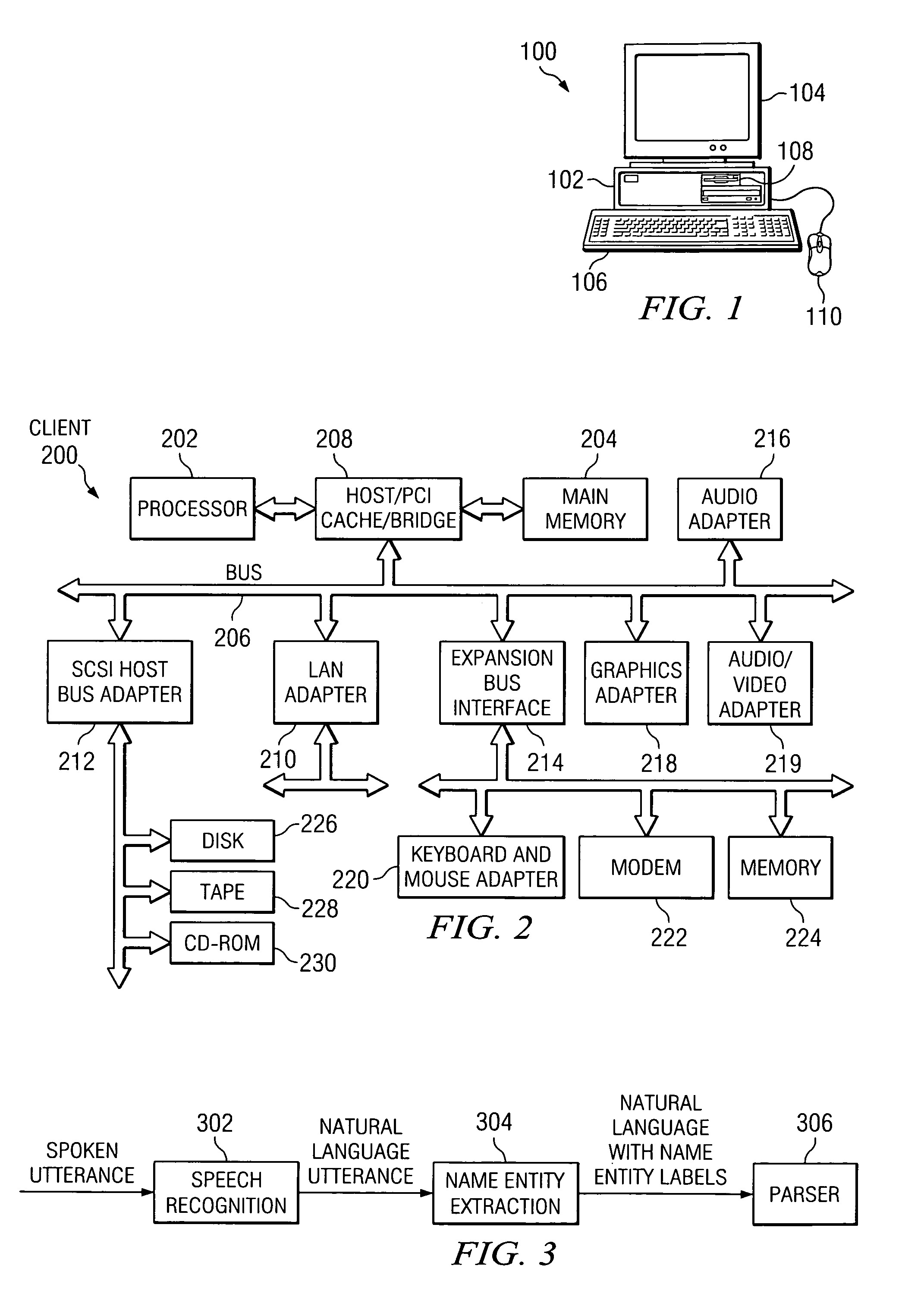

Semantic object synchronous understanding implemented with speech application language tags

Status: Active | Publication: US7200559B2 | Tags: Sound input/output; Speech recognition; Speech comprehension; Application programming interface

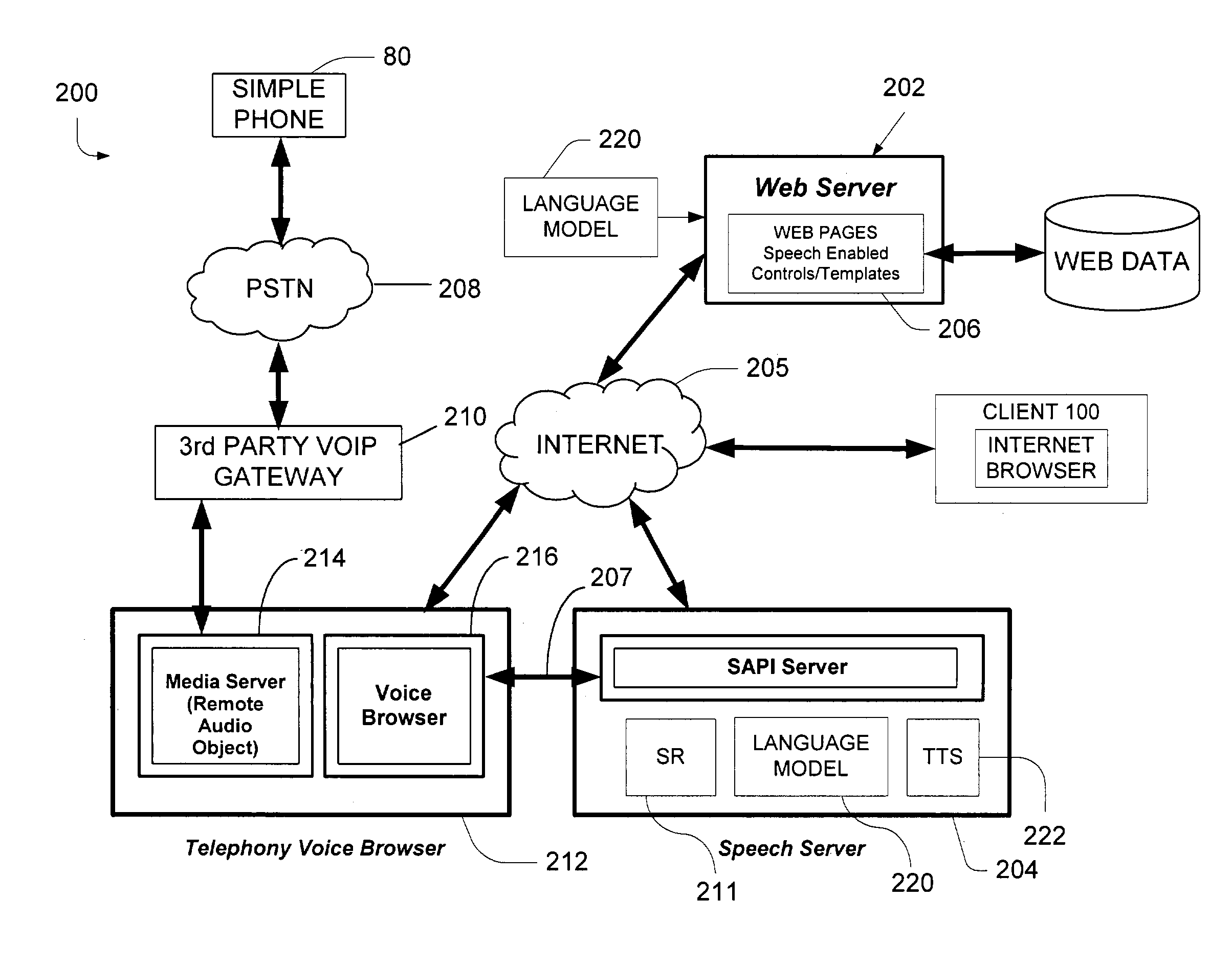

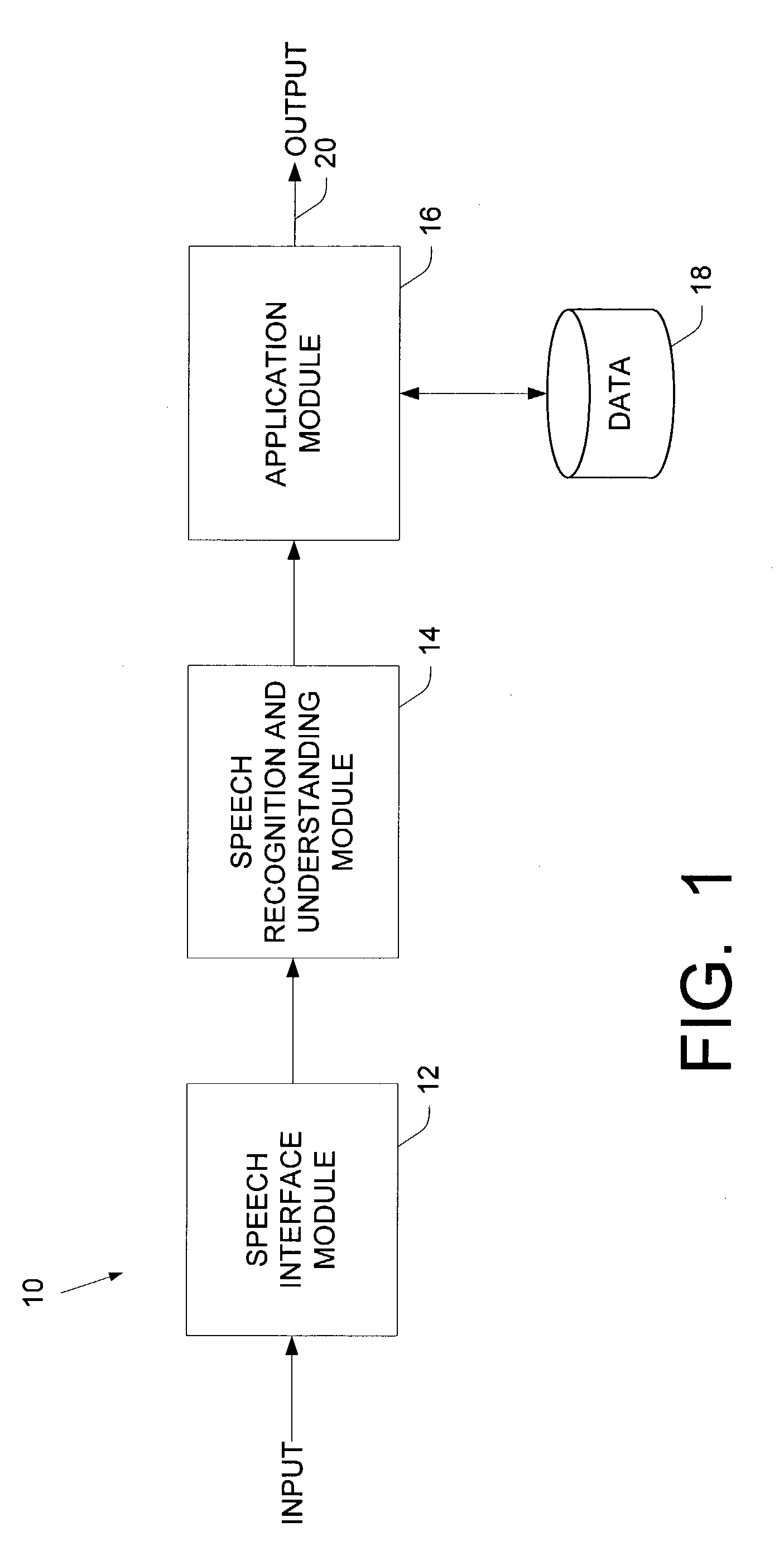

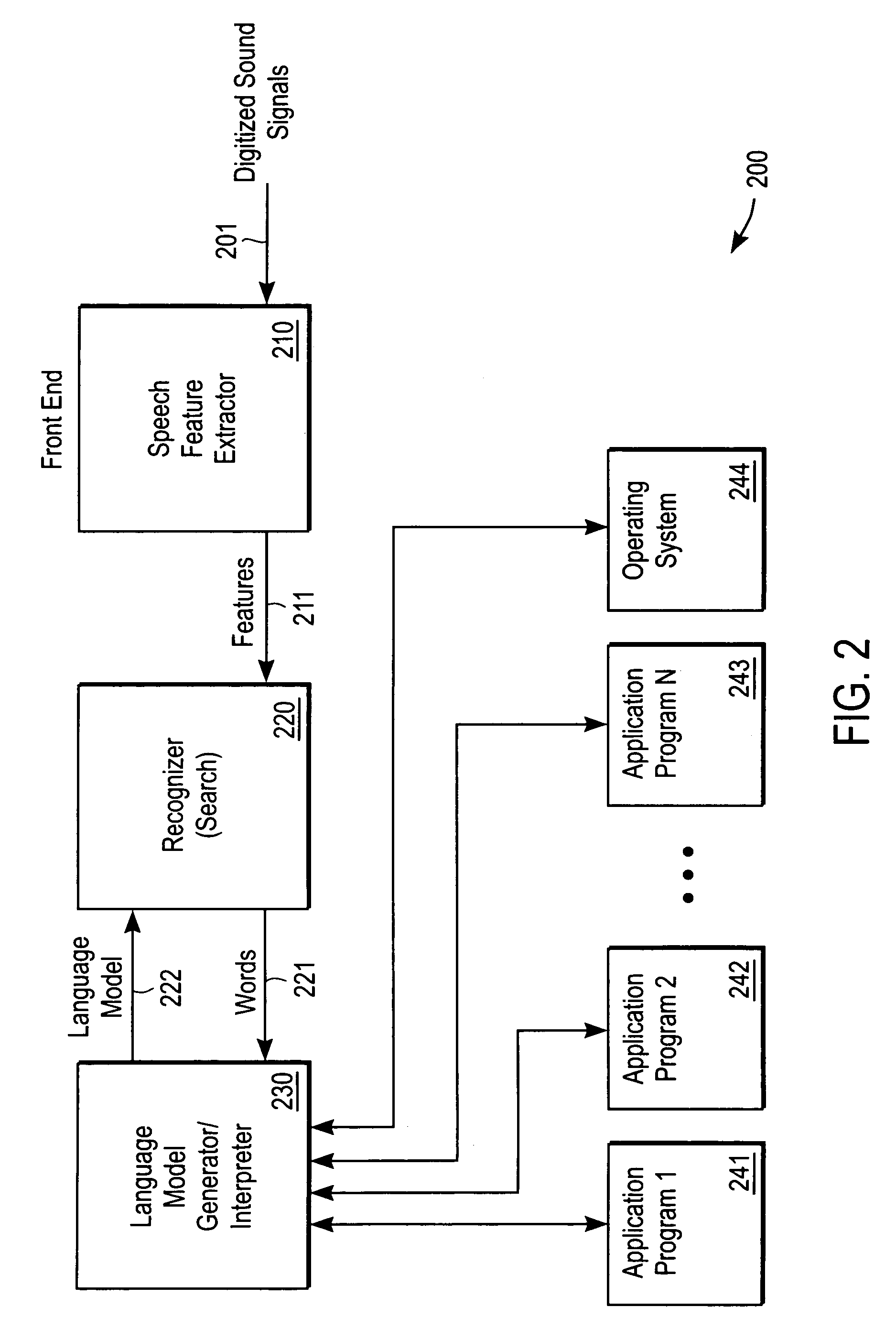

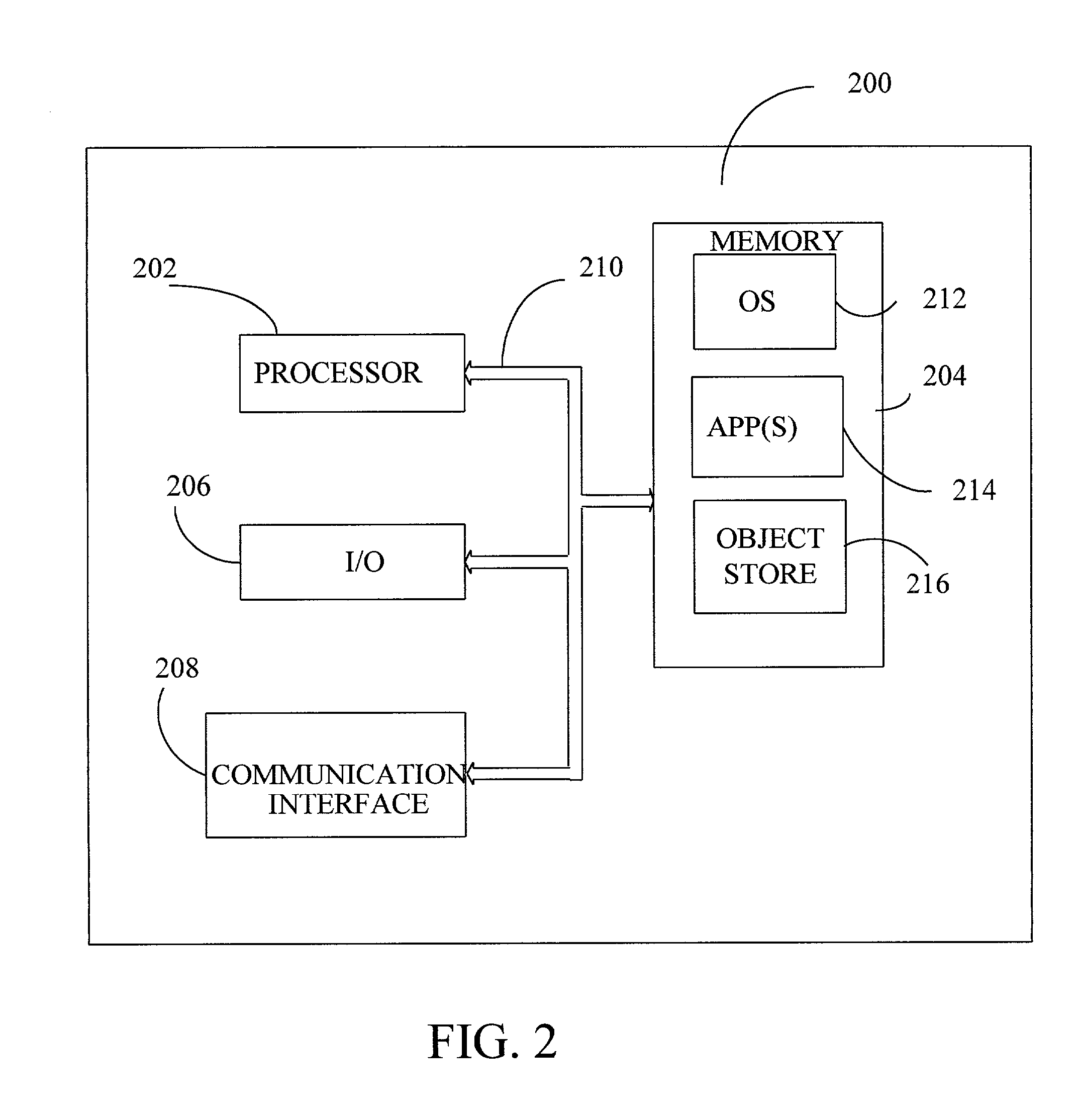

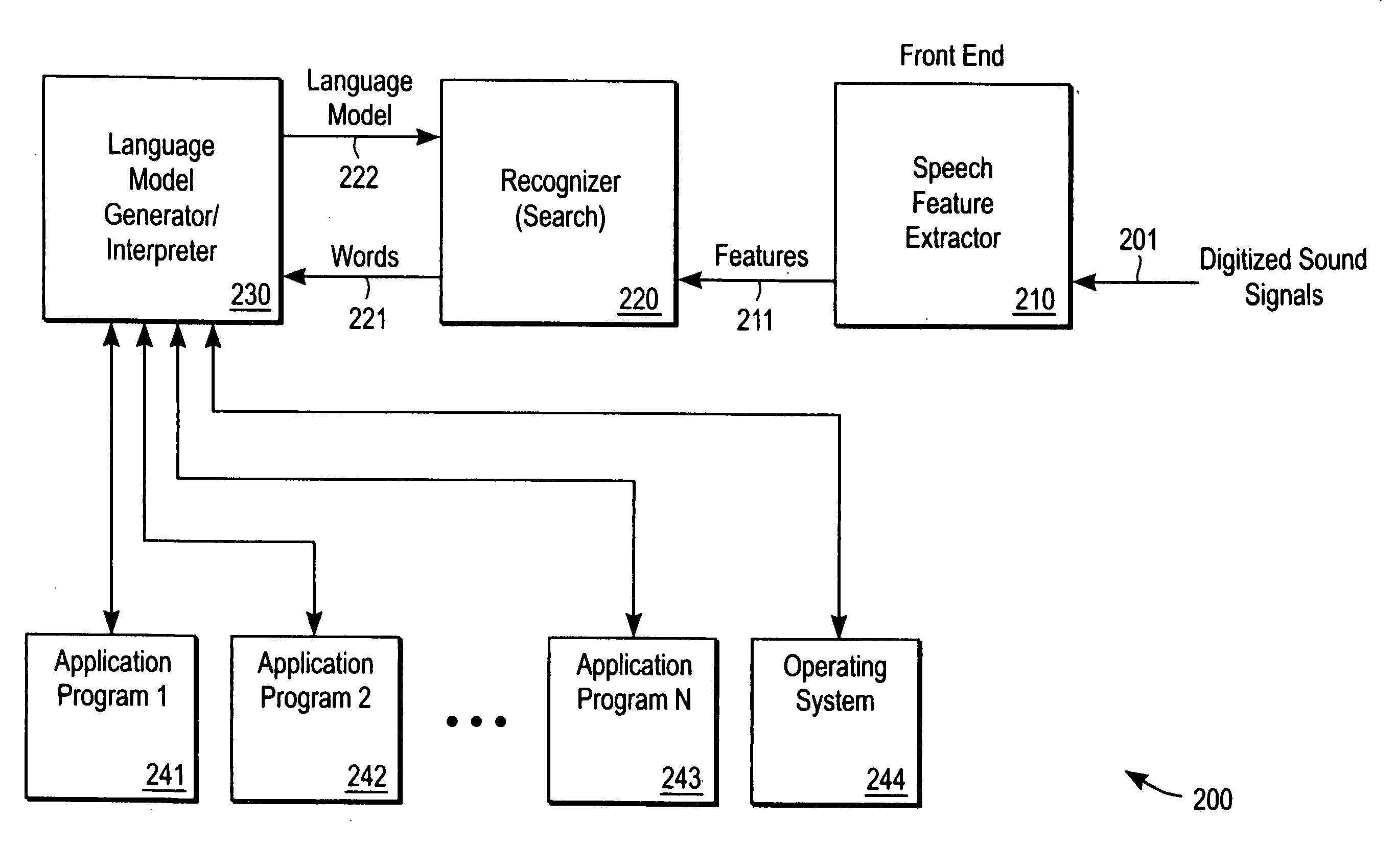

A speech understanding system includes a language model comprising a combination of an N-gram language model and a context-free grammar language model. The language model stores information related to words and semantic information to be recognized. A module is adapted to receive input from a user and capture the input for processing. The module is further adapted to receive SALT application program interfaces pertaining to recognition of the input. The module is configured to process the SALT application program interfaces and the input to ascertain semantic information pertaining to a first portion of the input and output a semantic object comprising text and semantic information for the first portion by accessing the language model, wherein performing recognition and outputting the semantic object are performed while capturing continues for subsequent portions of the input.

Owner:MICROSOFT TECH LICENSING LLC

Message recognition using shared language model

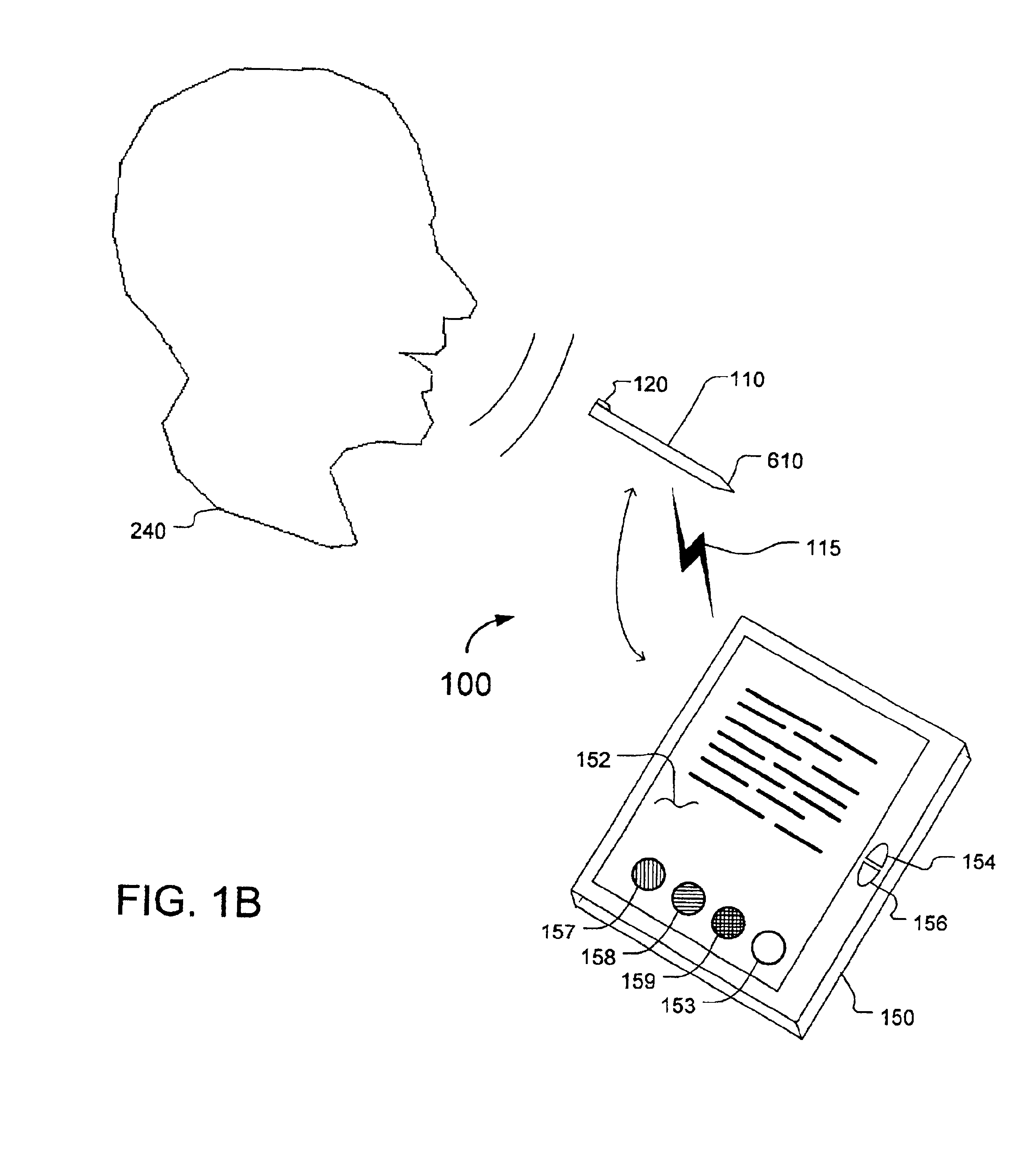

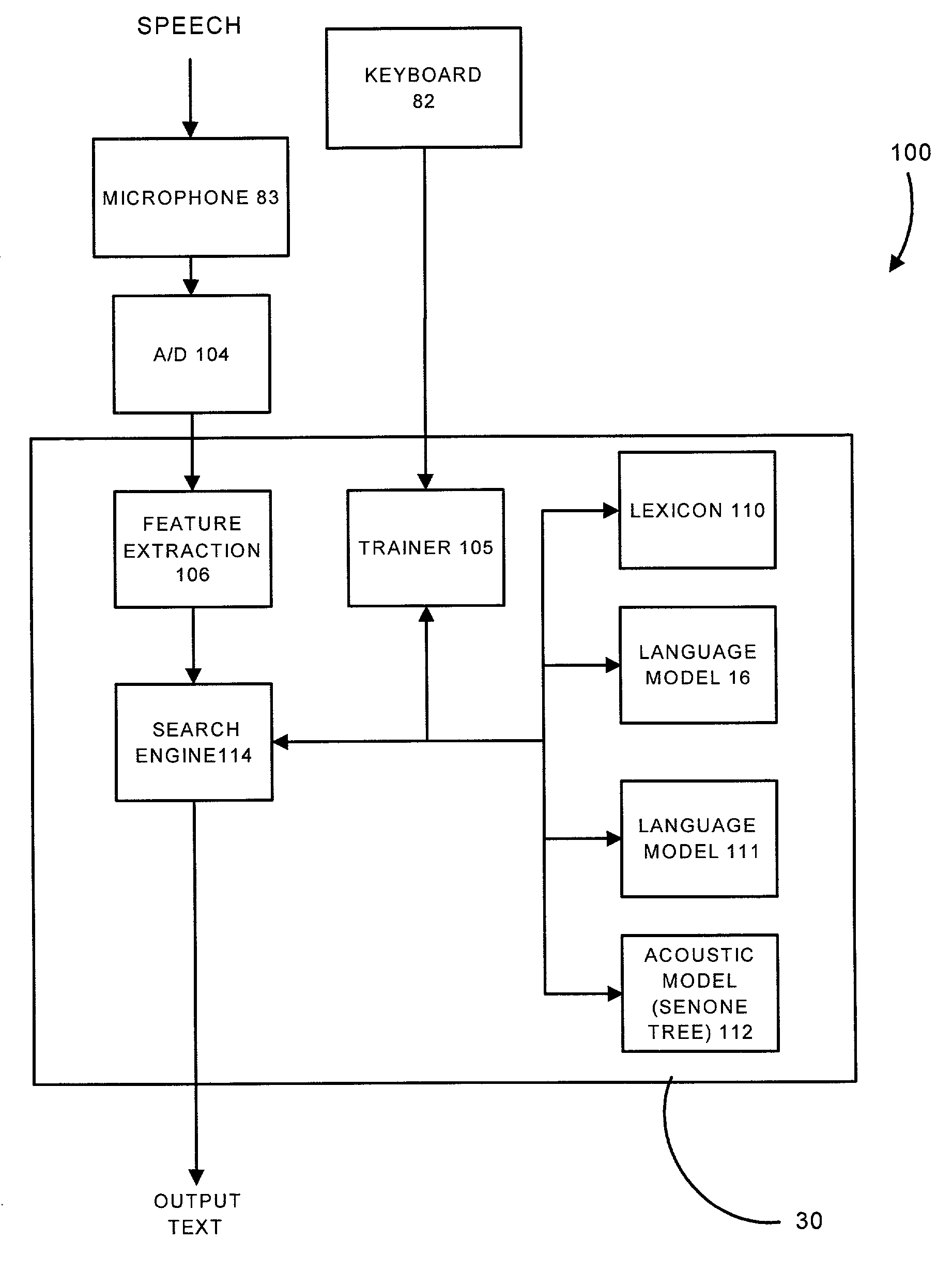

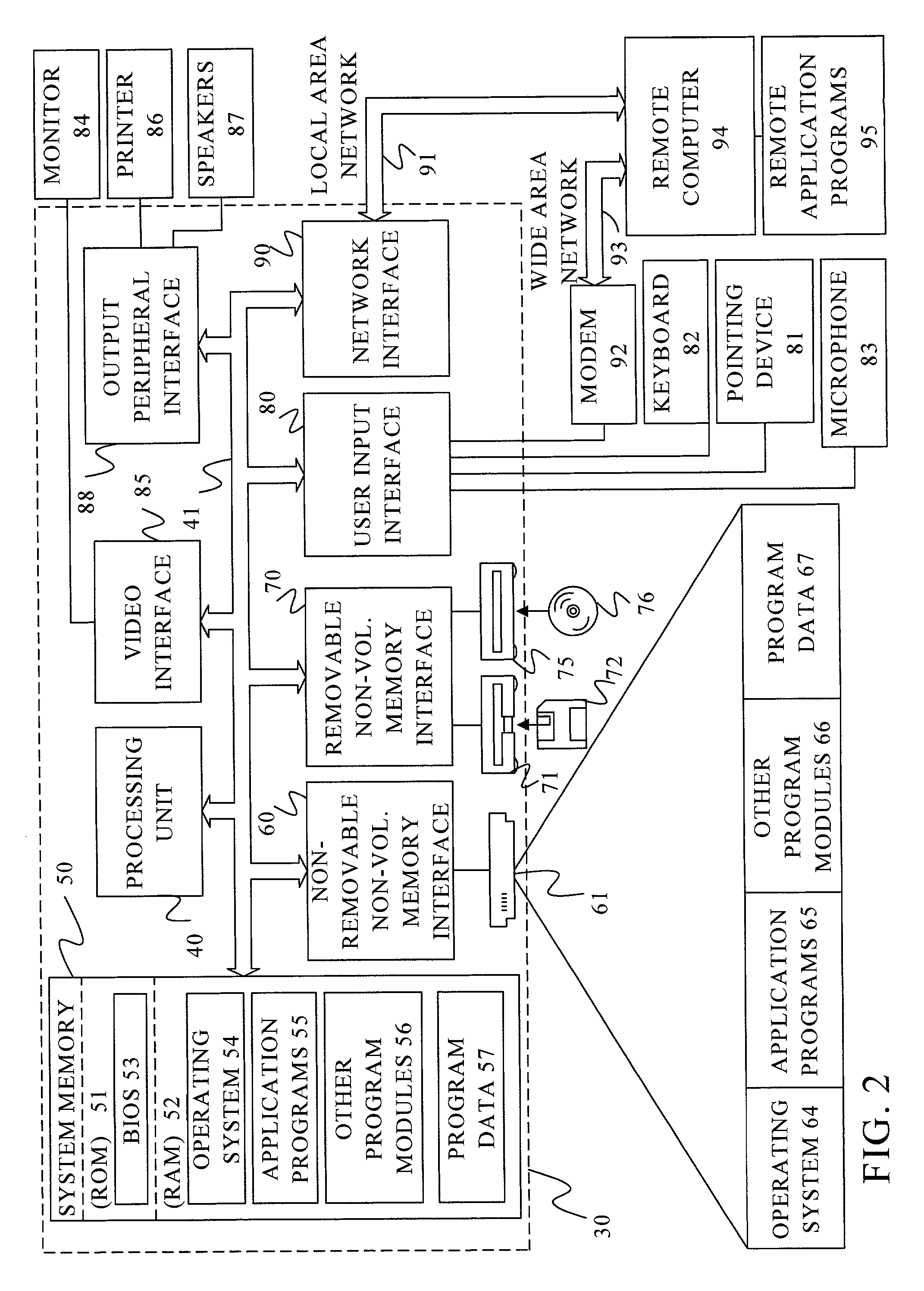

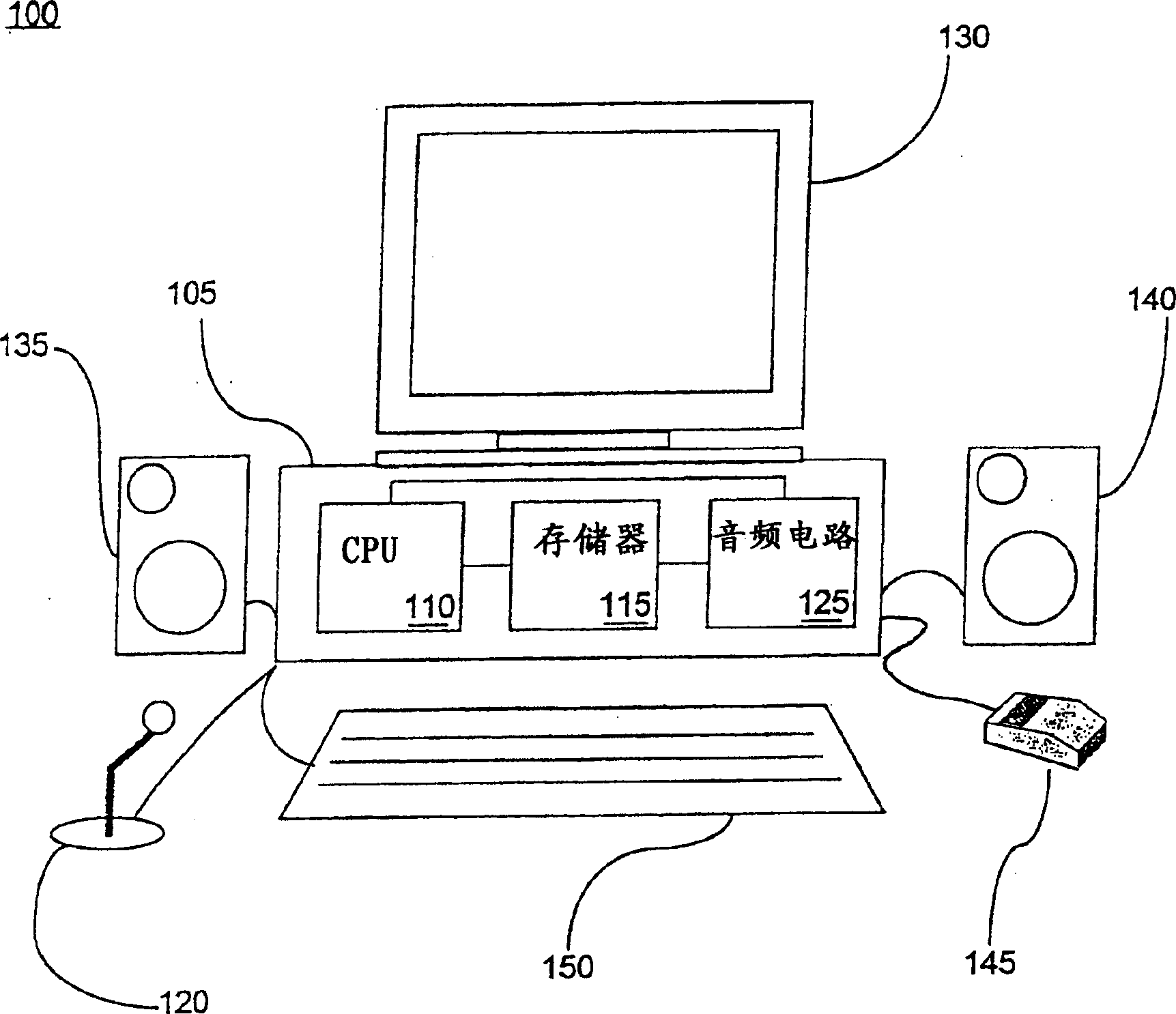

Status: Inactive | Publication: US6904405B2 | Tags: Speech recognition; Input/output processes for data processing; Handwriting; Acoustic model

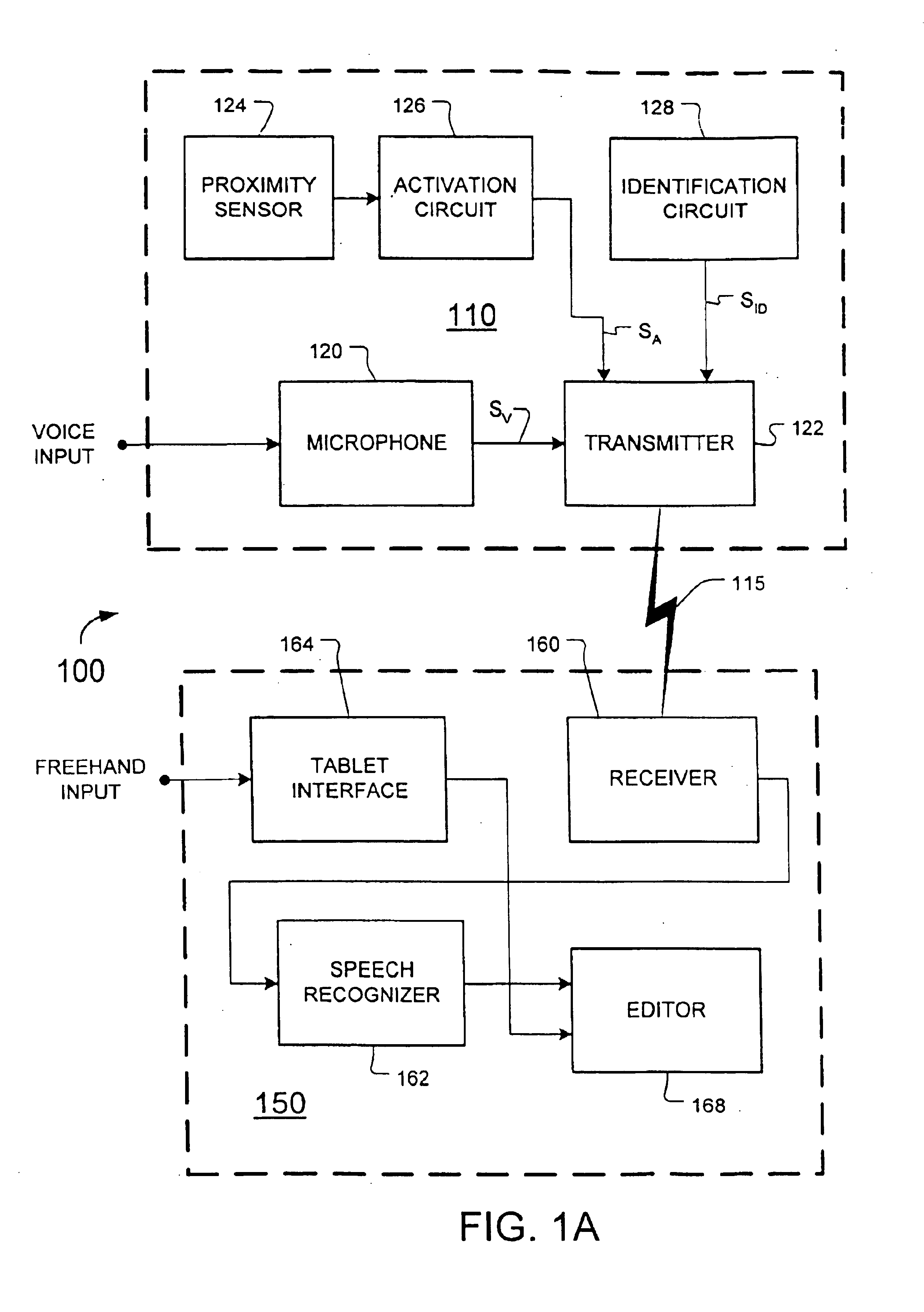

Certain disclosed methods and systems perform multiple different types of message recognition using a shared language model. Message recognition of a first type is performed responsive to a first type of message input (e.g., speech), to provide text data in accordance with both the shared language model and a first model specific to the first type of message recognition (e.g., an acoustic model). Message recognition of a second type is performed responsive to a second type of message input (e.g., handwriting), to provide text data in accordance with both the shared language model and a second model specific to the second type of message recognition (e.g., a model that determines basic units of handwriting conveyed by freehand input). Accuracy of both such message recognizers can be improved by user correction of misrecognition by either one of them. Numerous other methods and systems are also disclosed.

Owner:BUFFALO PATENTS LLC

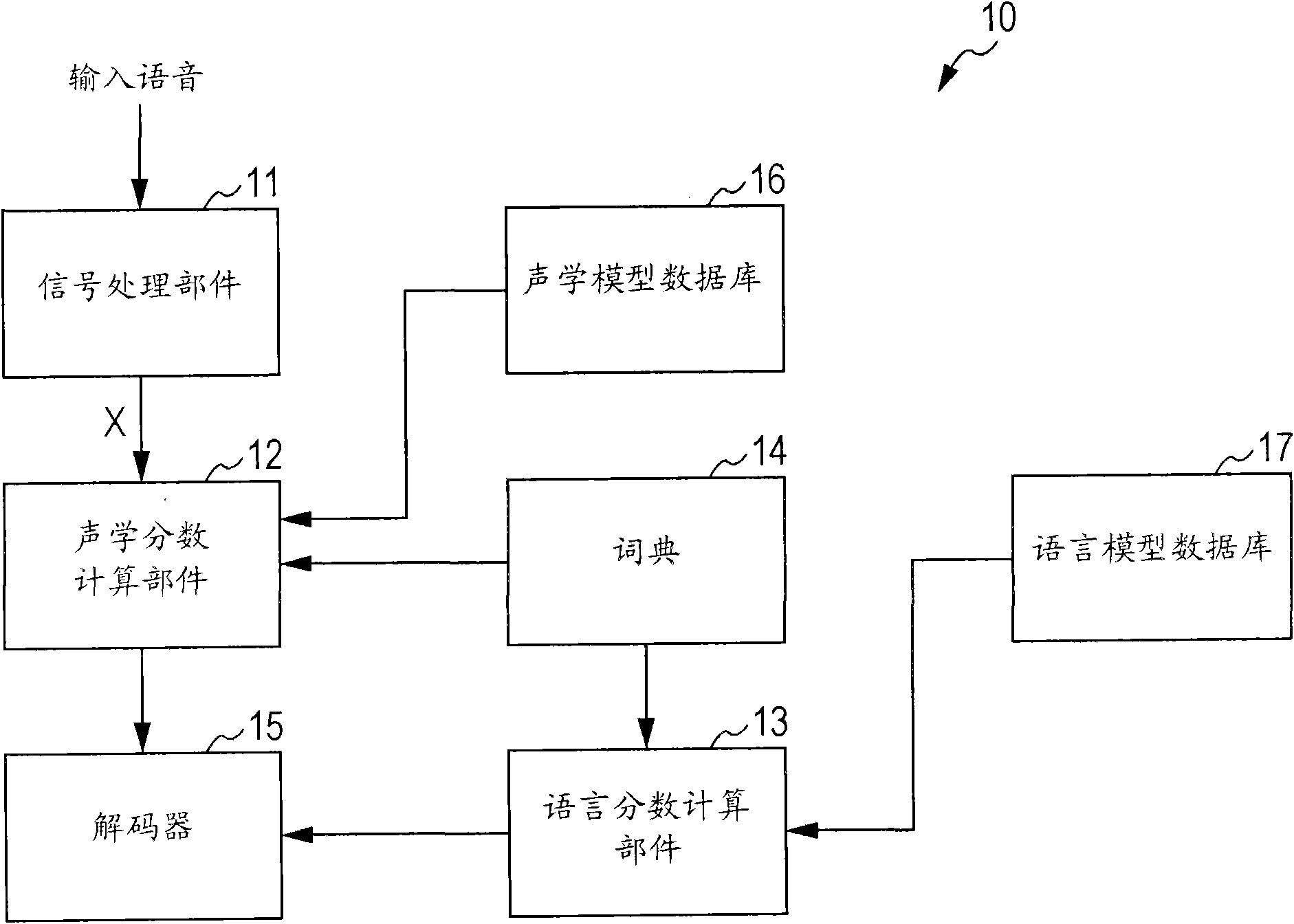

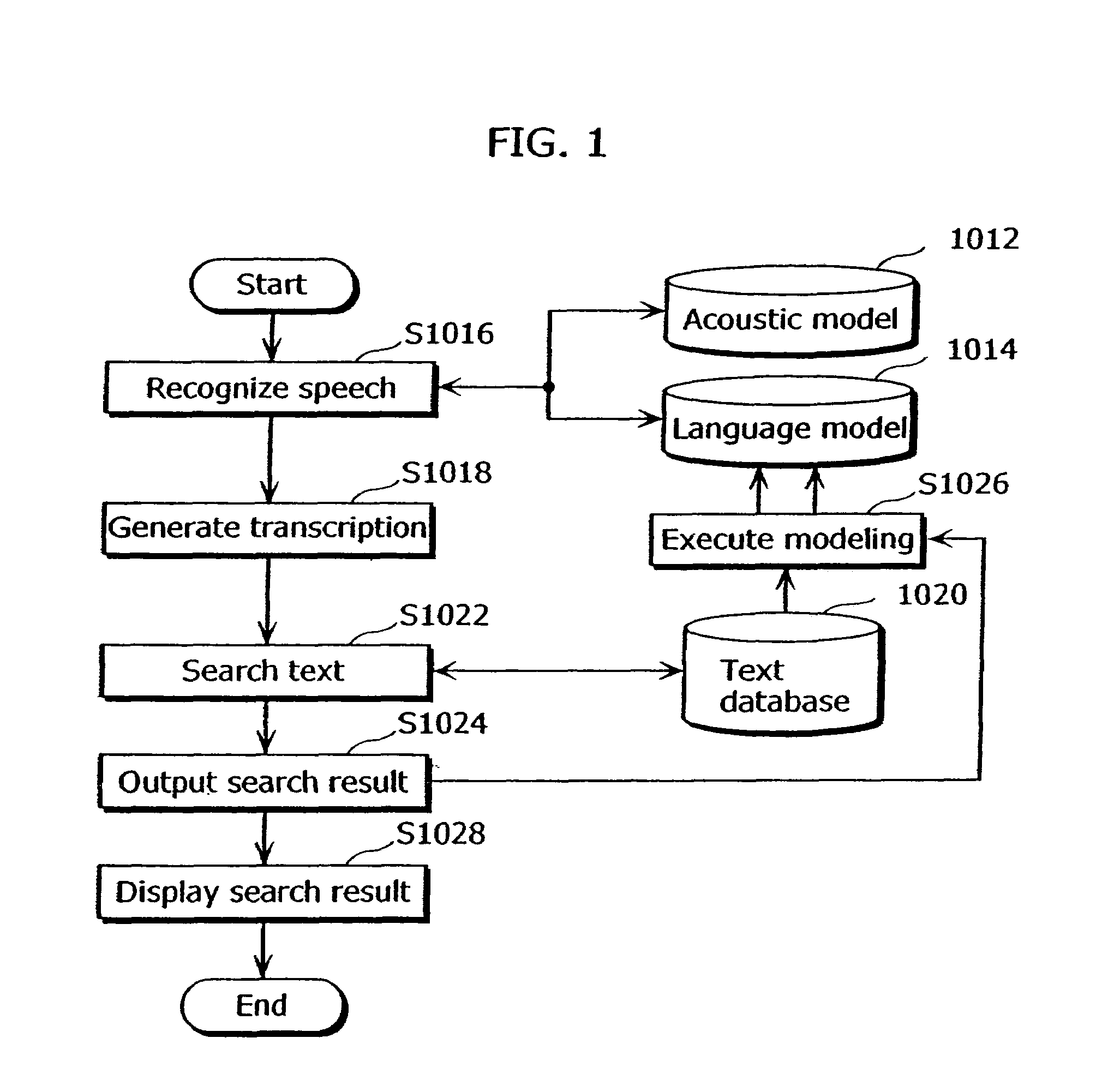

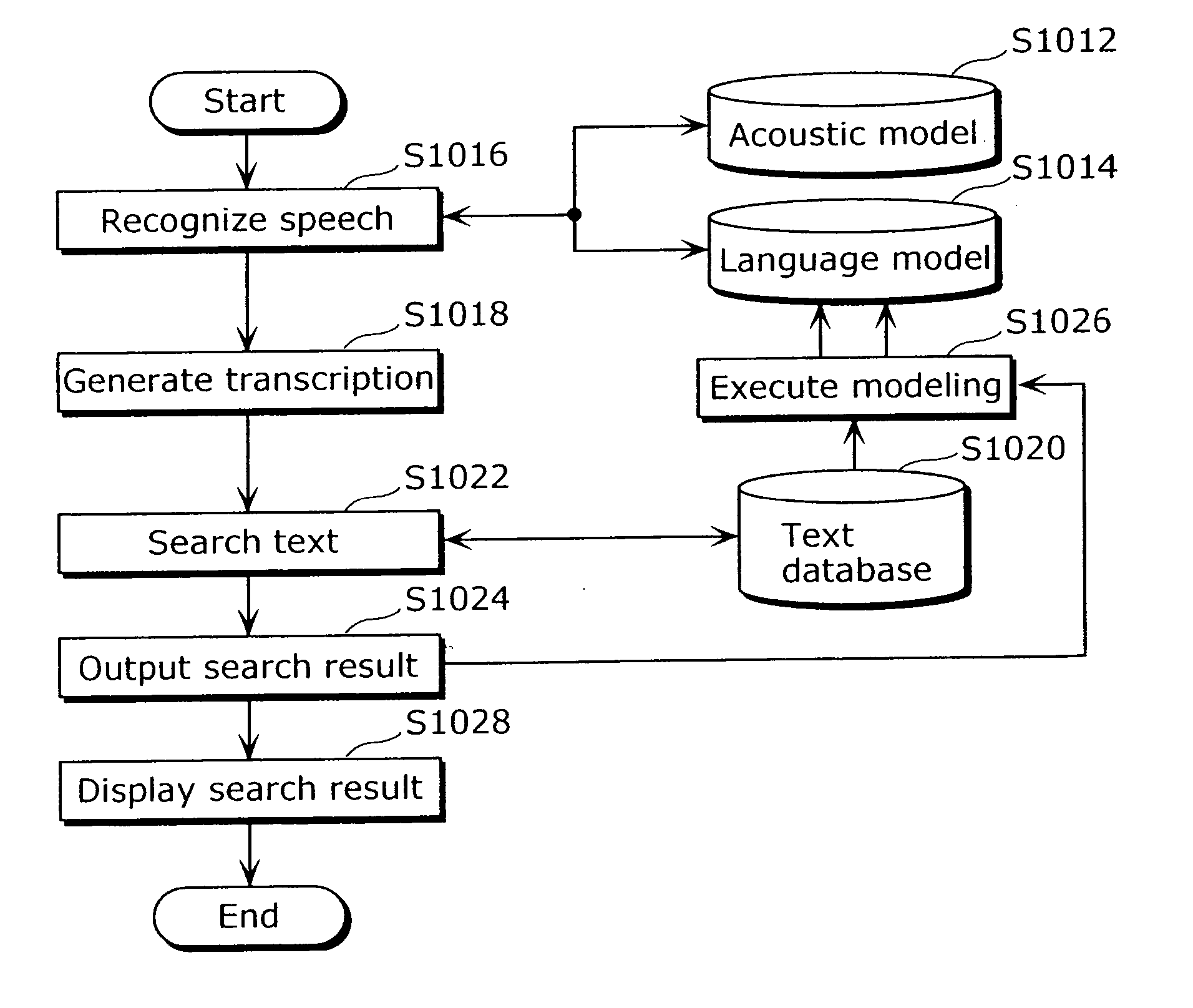

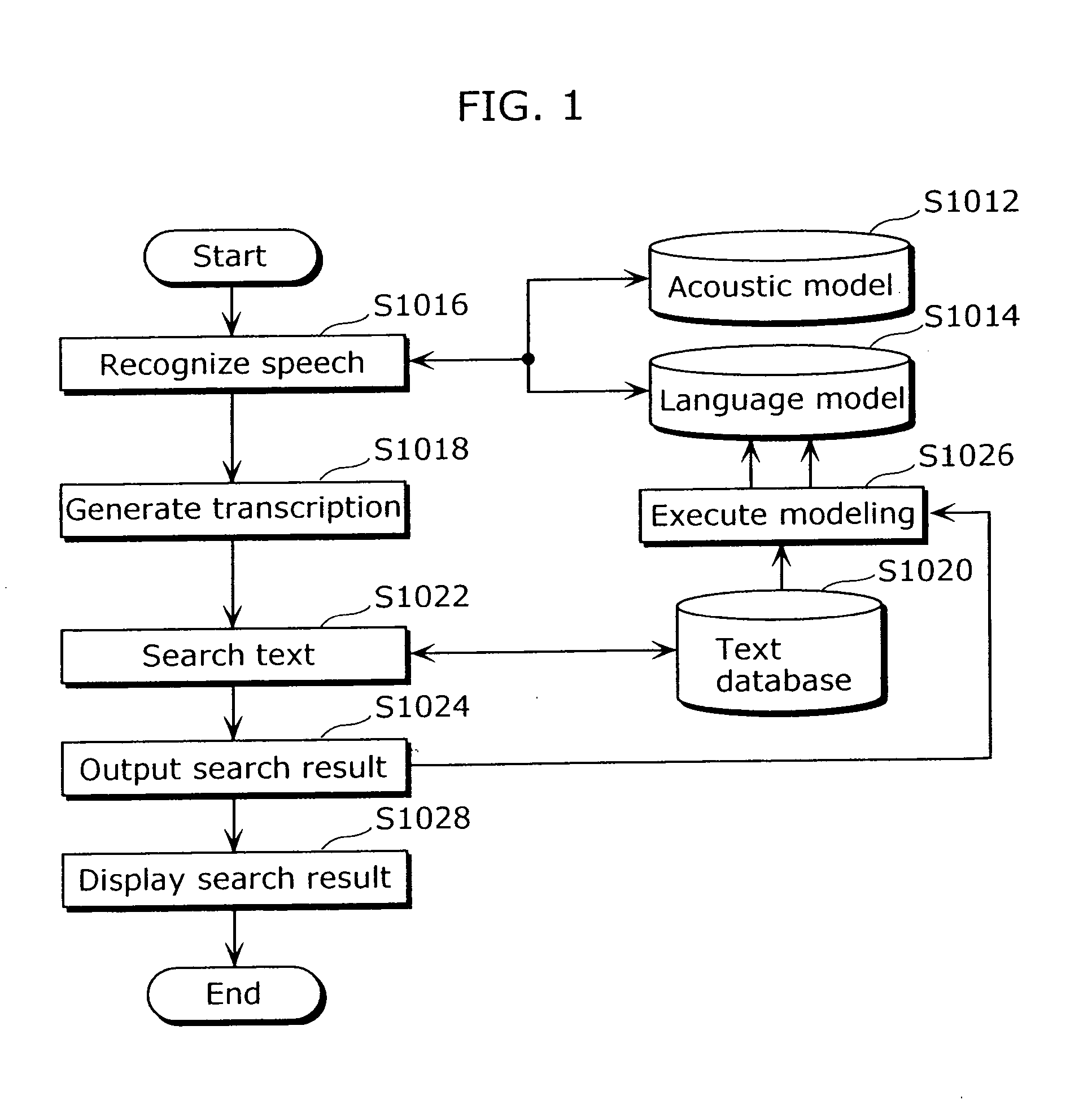

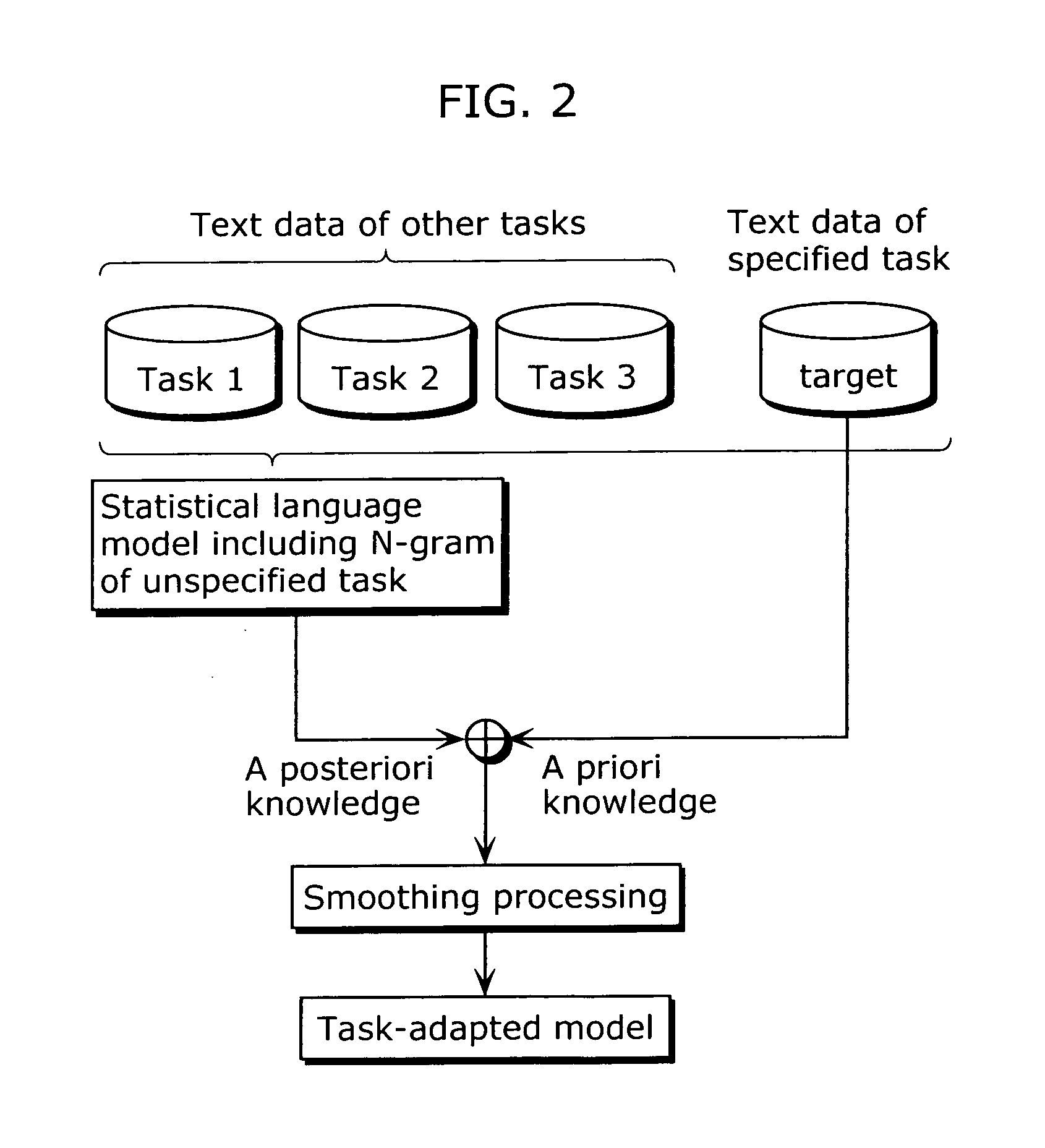

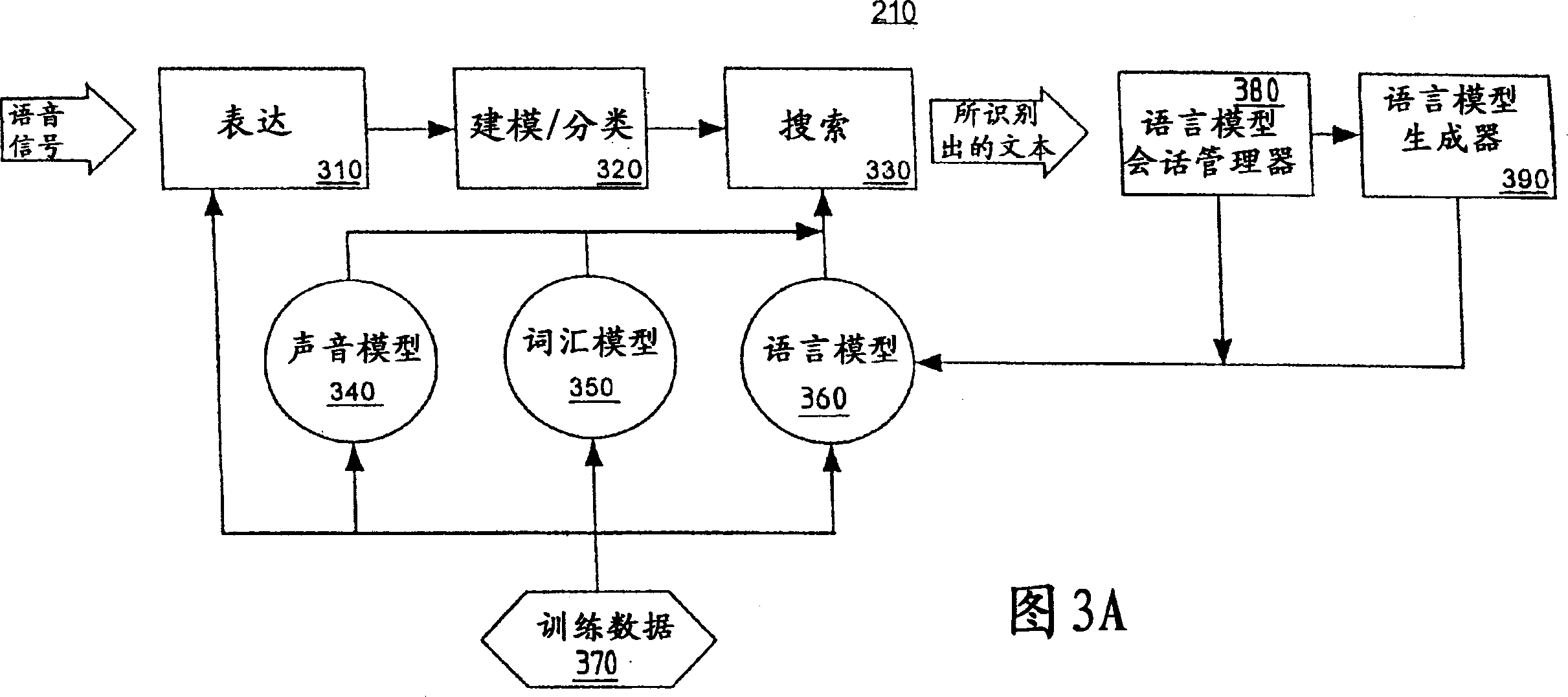

Voice recognition device and voice recognition method, language model generating device and language model generating method, and computer program

Status: Inactive | Publication: CN101847405A | Tags: Implement extraction; Improve consistency; Speech recognition; Speech identification; Speech sound

The invention discloses a voice recognition device and voice recognition method, a language model generating device and language model generating method, and a computer program. The speech recognition device includes: one or more intention extracting language models, in each of which an intention of a specific focused task is inherent; an absorbing language model in which no intention of the task is inherent; a language score calculating section that calculates, for each of the intention extracting language models and the absorbing language model, a language score indicating the linguistic similarity between that model and the content of an utterance; and a decoder that estimates the intention in the content of the utterance based on the language scores calculated for the respective language models.

Owner:SONY CORP
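
A toy sketch of the scoring-and-decoding idea in the abstract above, assuming (hypothetically) that every language model is a unigram table and that the language score is the utterance's log-probability; the patent's actual models and scoring are not specified here. If the absorbing model scores at least as well as every intention model, the sketch reports no intention.

```python
import math


def language_score(model, words, floor=1e-6):
    """Log-probability of the utterance under a unigram model; unseen words get `floor`."""
    return sum(math.log(model.get(w, floor)) for w in words)


def estimate_intention(intention_models, absorbing_model, words):
    """Return the best-scoring intention, or None when the absorbing model scores at least as well."""
    scores = {name: language_score(m, words) for name, m in intention_models.items()}
    best = max(scores, key=scores.get)
    if language_score(absorbing_model, words) >= scores[best]:
        return None  # the utterance looks like out-of-task speech
    return best
```

The absorbing model acts as a catch-all: it spreads probability thinly over many words, so it wins only when no task-specific model explains the utterance well.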

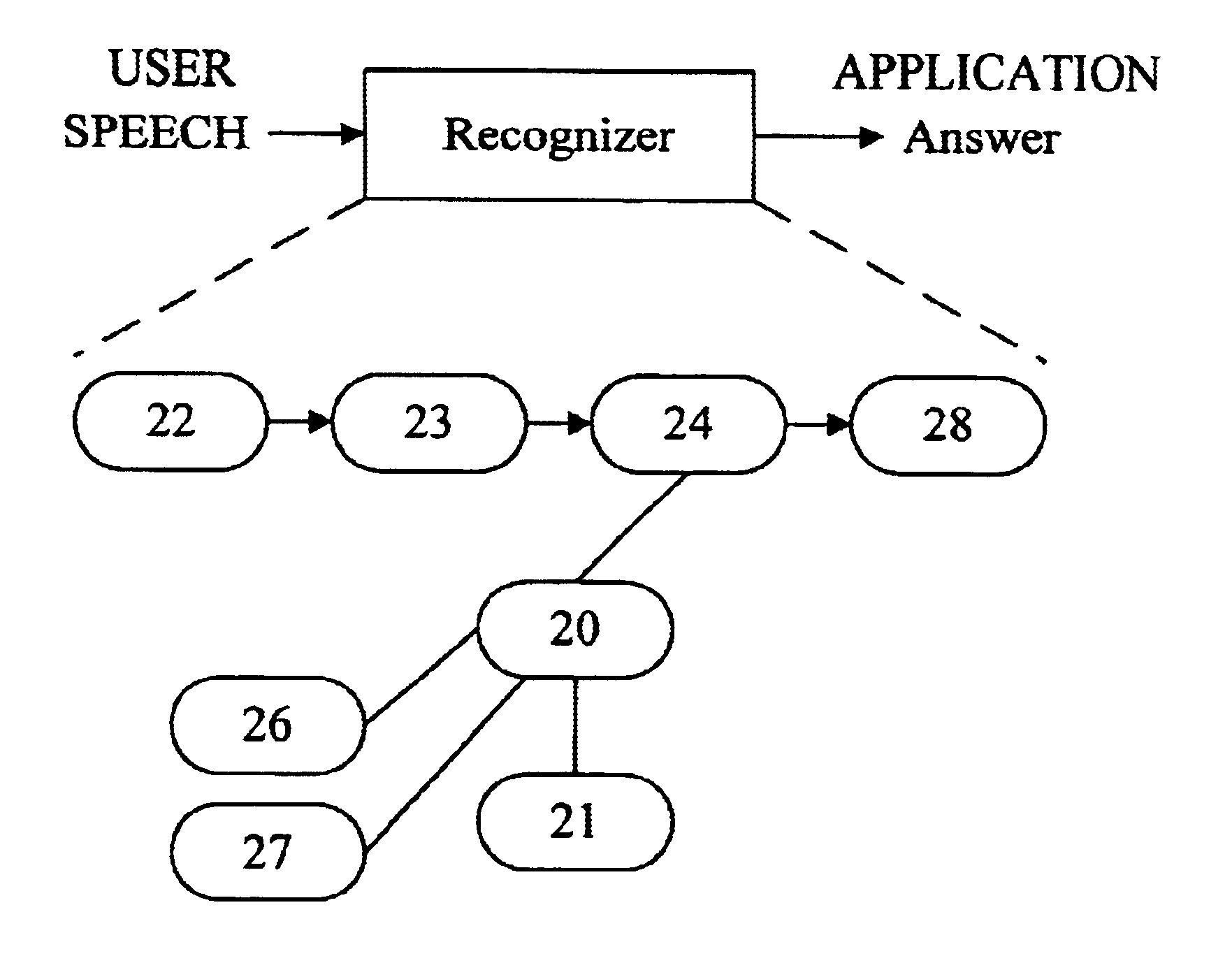

Assigning meanings to utterances in a speech recognition system

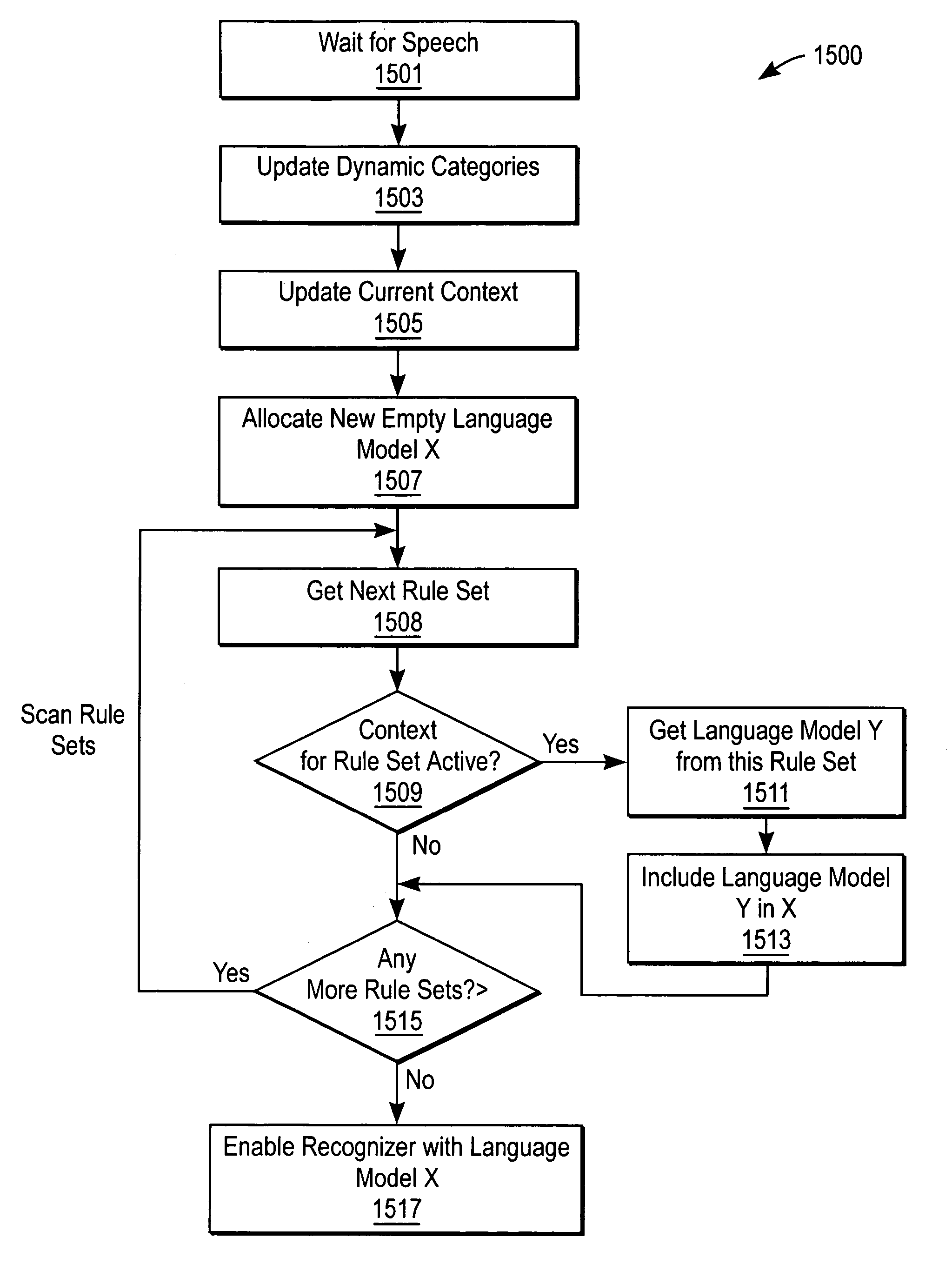

Assigning meanings to spoken utterances in a speech recognition system. A plurality of speech rules is generated, each of the speech rules comprising a language model and an expression associated with the language model. At one interval (e.g. upon the detection of speech in the system), a current language model is generated from each language model in the speech rules for use by a recognizer. When a sequence of words is received from the recognizer, a set of speech rules which match the sequence of words received from the recognizer is determined. Each expression associated with the language model in each of the set of speech rules is evaluated, and actions are performed in the system according to the expressions associated with each language model in the set of speech rules.

Owner:APPLE INC
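
The rule-matching flow described above might look like the following sketch. It is illustrative only: a regular expression over the word string stands in for each rule's language model, a Python callable stands in for its expression, and `SpeechRule`, `current_language_model`, and `assign_meaning` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class SpeechRule:
    pattern: str                    # stand-in for the rule's language model
    expression: Callable[..., str]  # evaluated with the regex match when the rule fires


def current_language_model(rules):
    """Merge every rule's pattern into one alternation for the recognizer.
    Named groups must be unique across rules for the merged pattern to compile."""
    return "|".join(f"(?:{rule.pattern})" for rule in rules)


def assign_meaning(rules, words):
    """Evaluate the expression of every rule whose pattern matches the recognized words."""
    text = " ".join(words)
    actions = []
    for rule in rules:
        match = re.fullmatch(rule.pattern, text)
        if match:
            actions.append(rule.expression(match))
    return actions
```

For example, `SpeechRule(r"open (?P<app>\w+)", lambda m: f"launch:{m.group('app')}")` maps the recognized words `open mail` to the action `launch:mail`.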

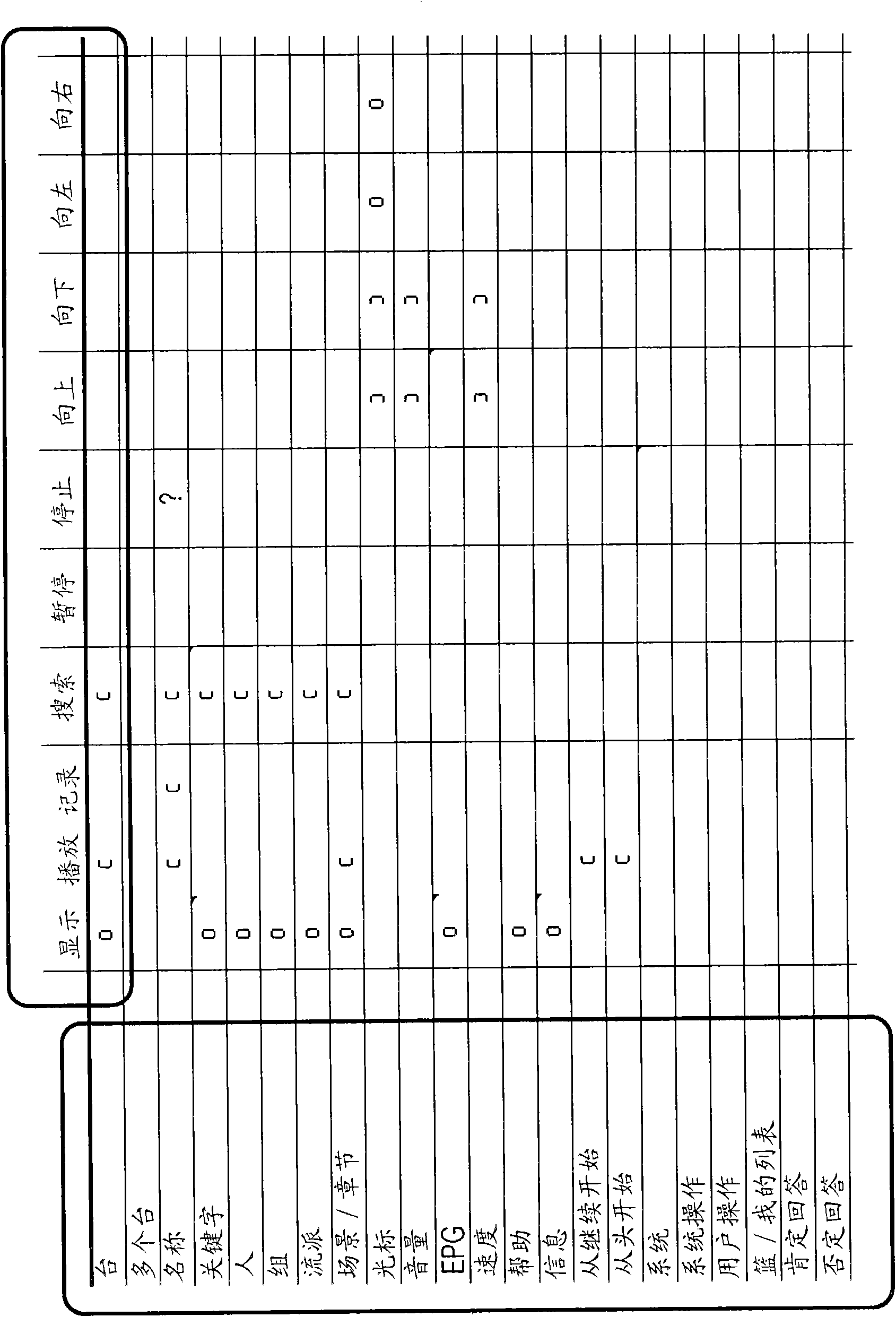

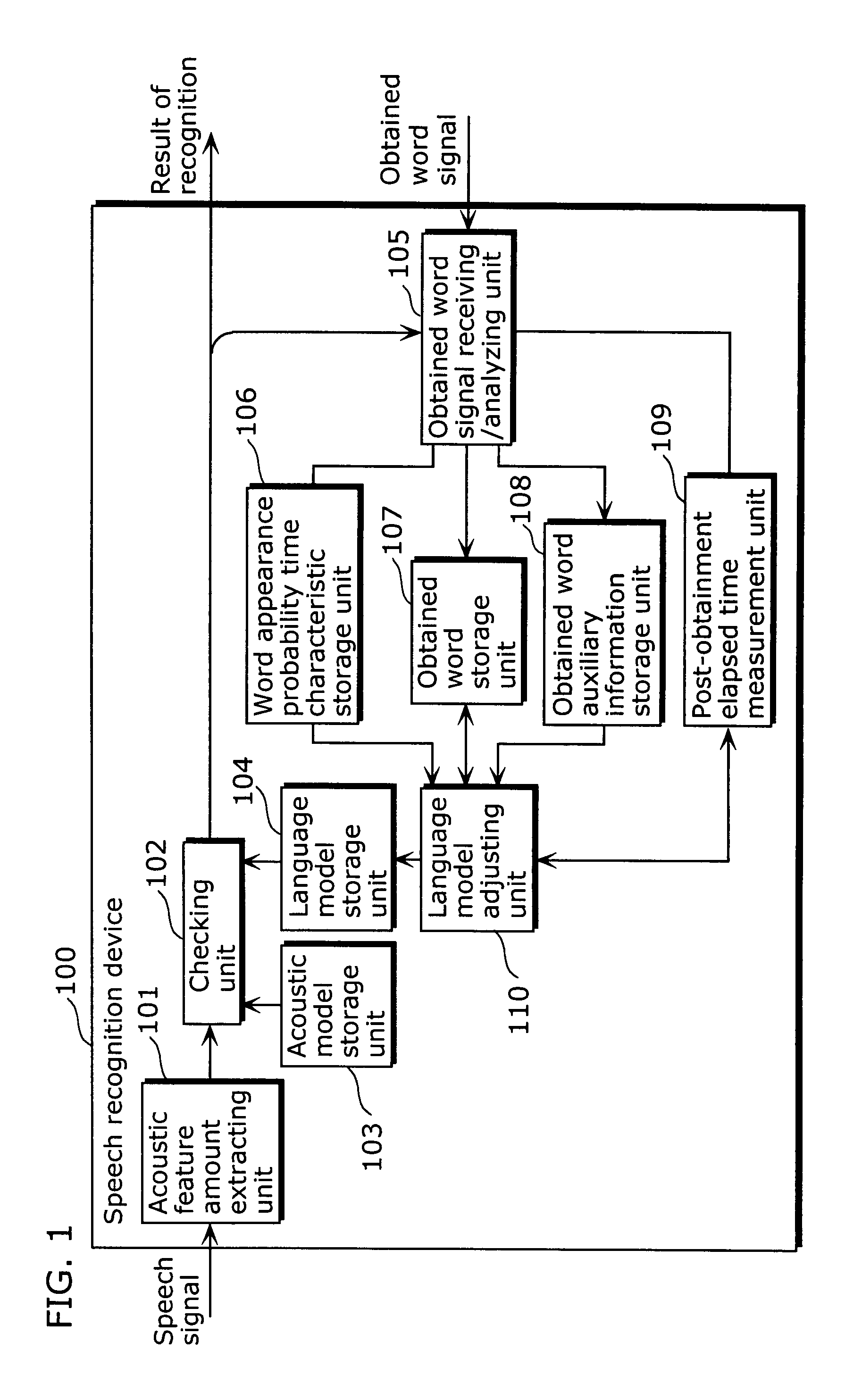

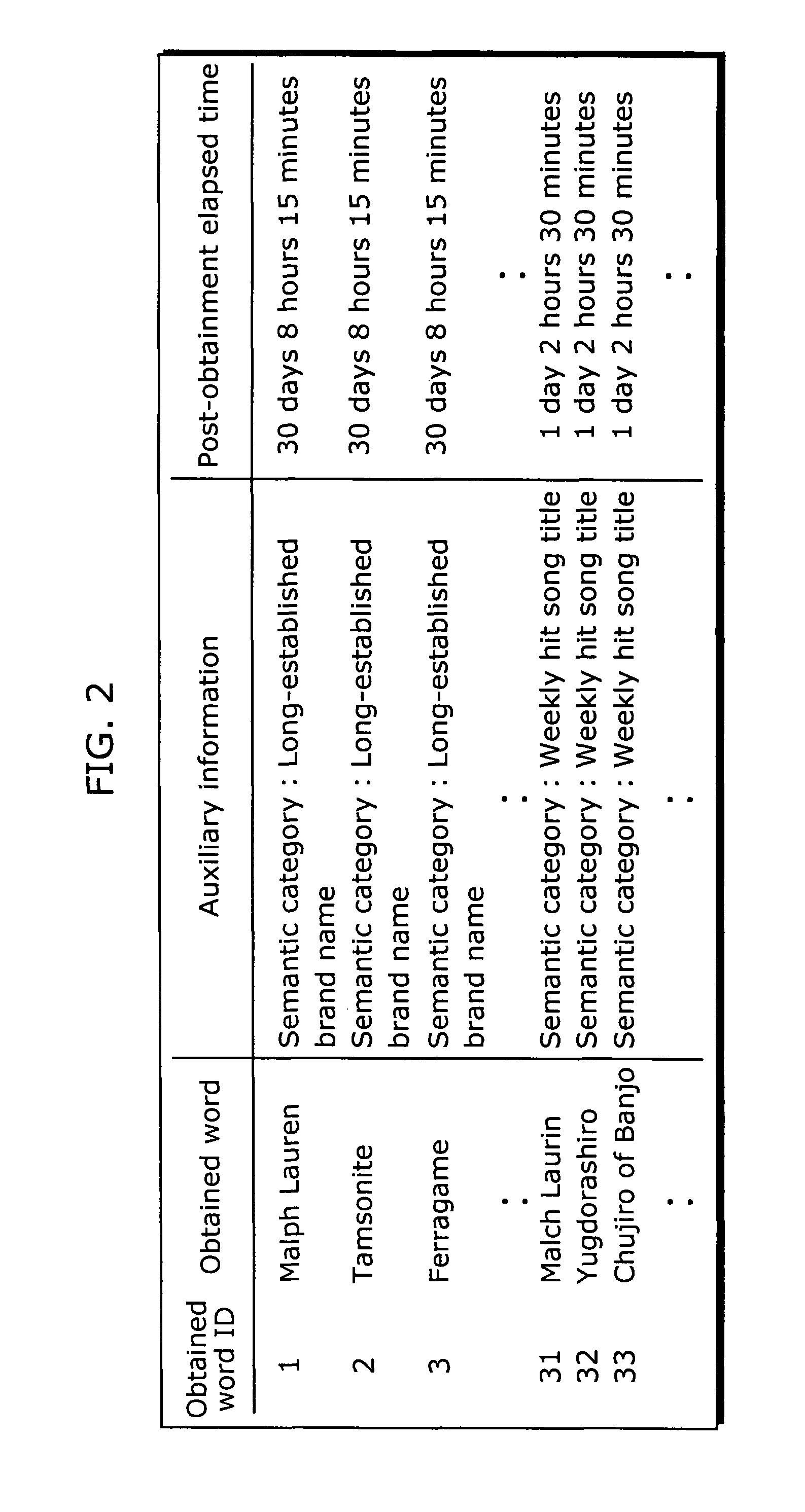

Speech recognition apparatus and speech recognition method

Status: Active | Publication: US7310601B2 | Tags: Appropriate performance; Complicated process; Speech recognition; Speech identification; Speech sound

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA

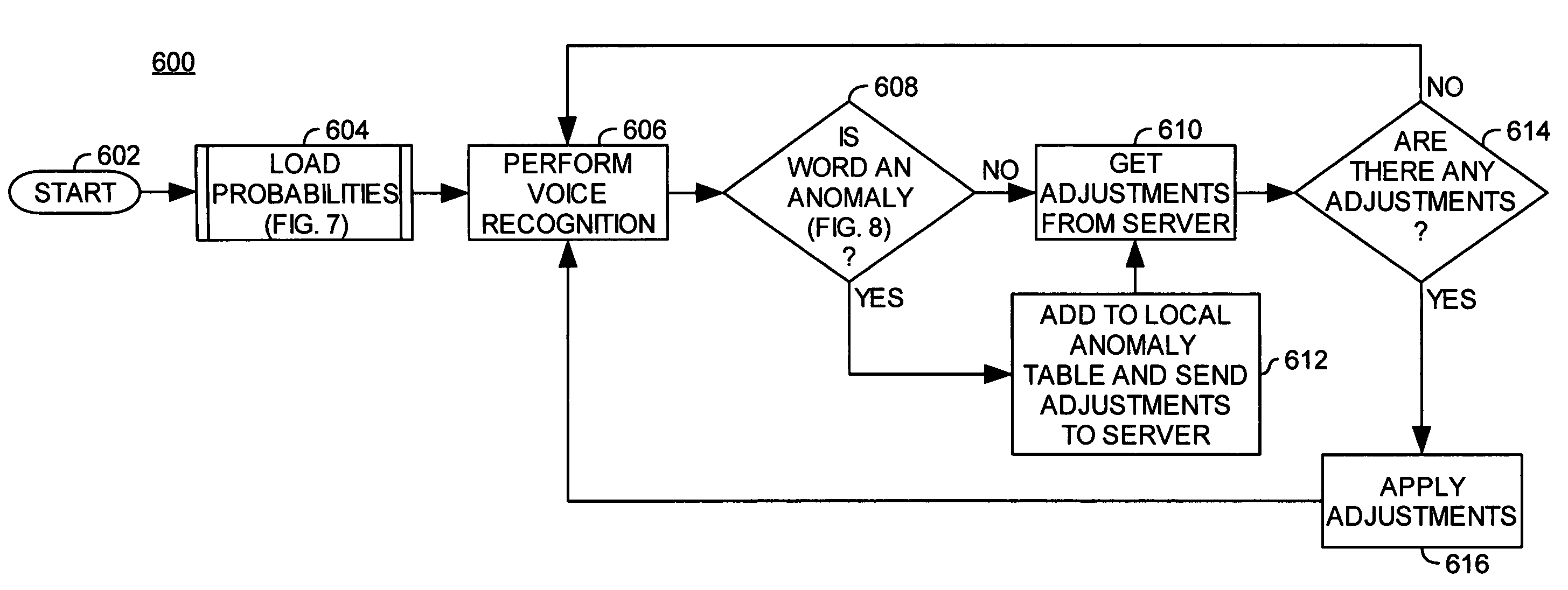

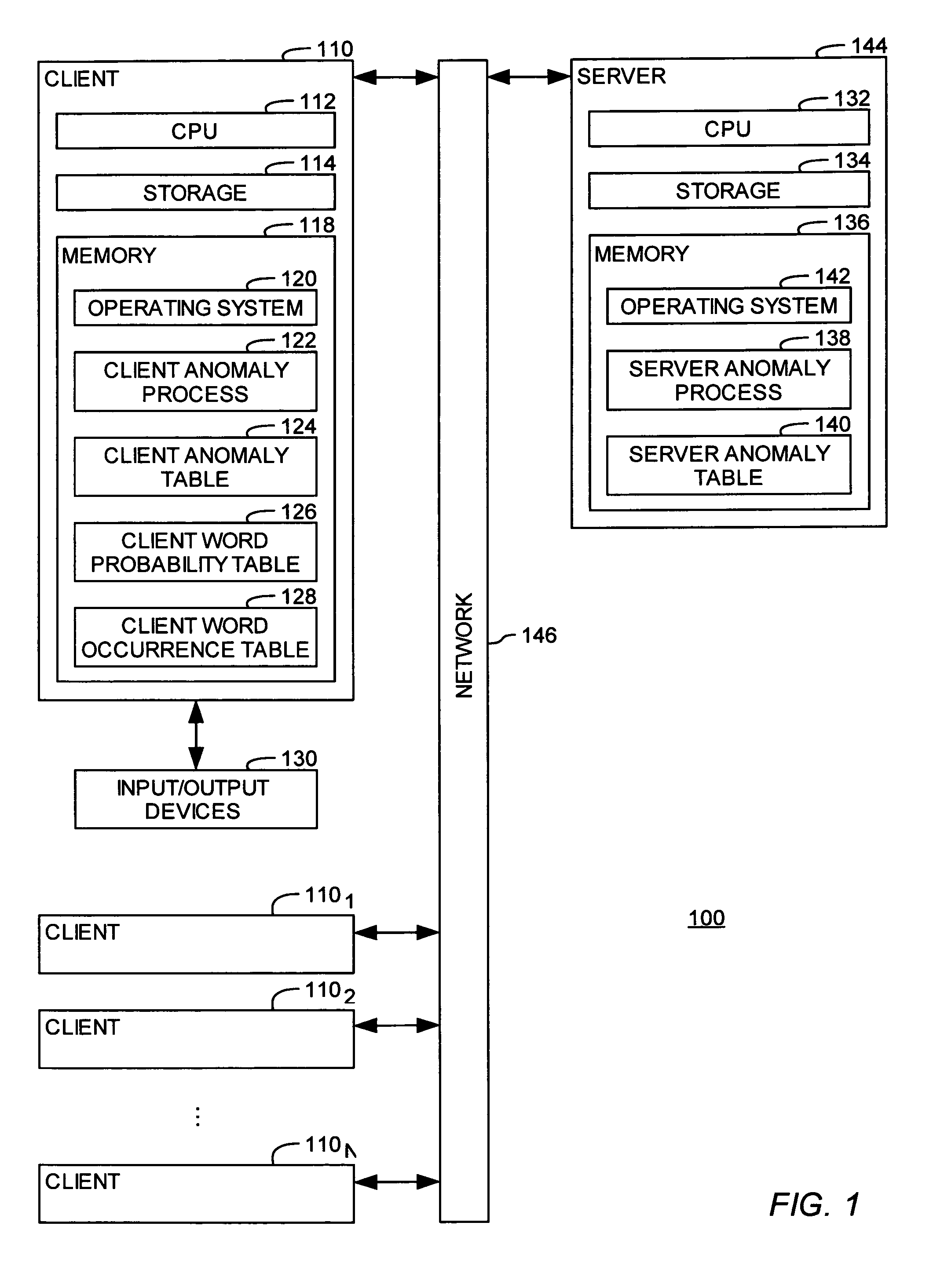

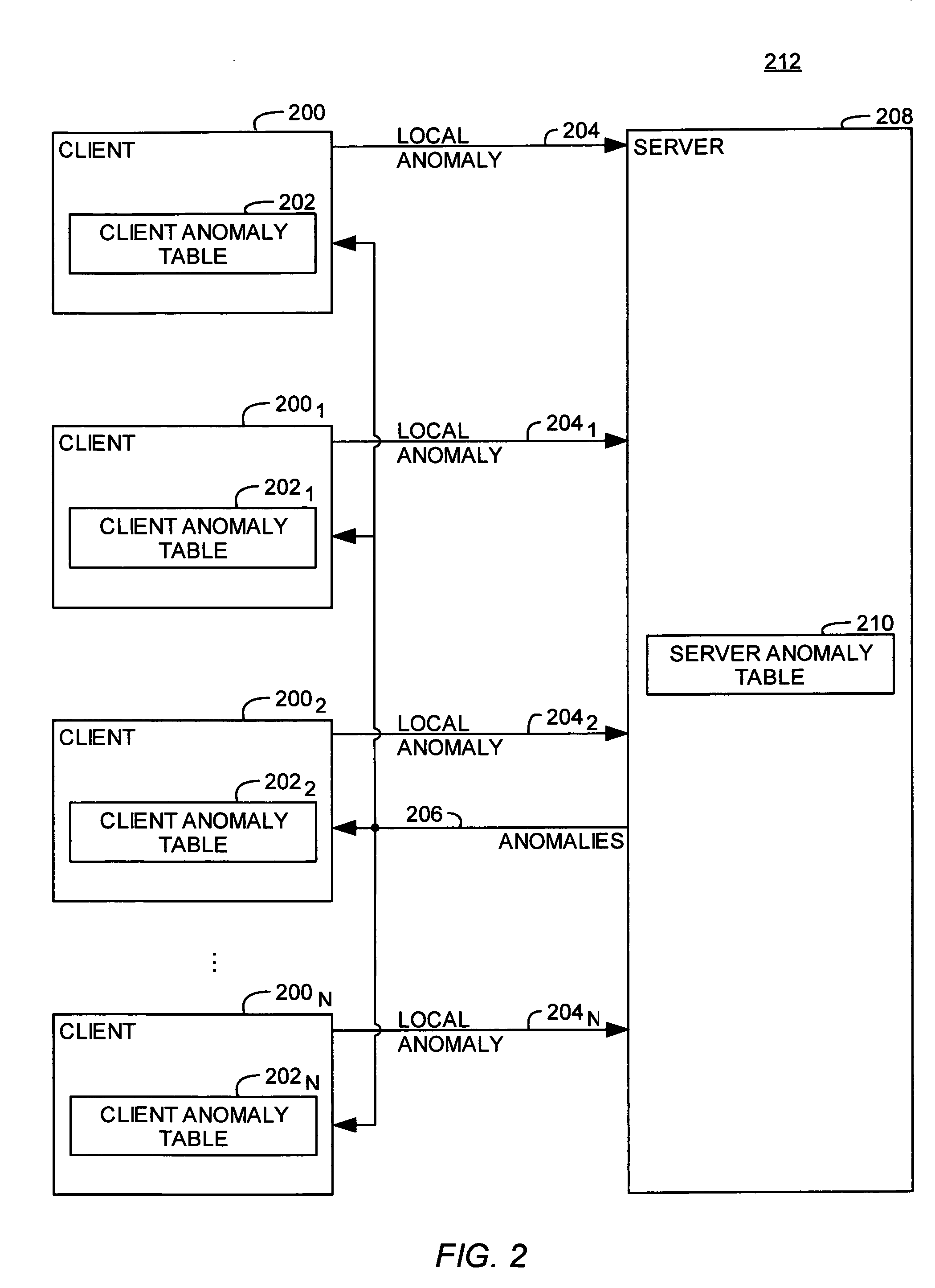

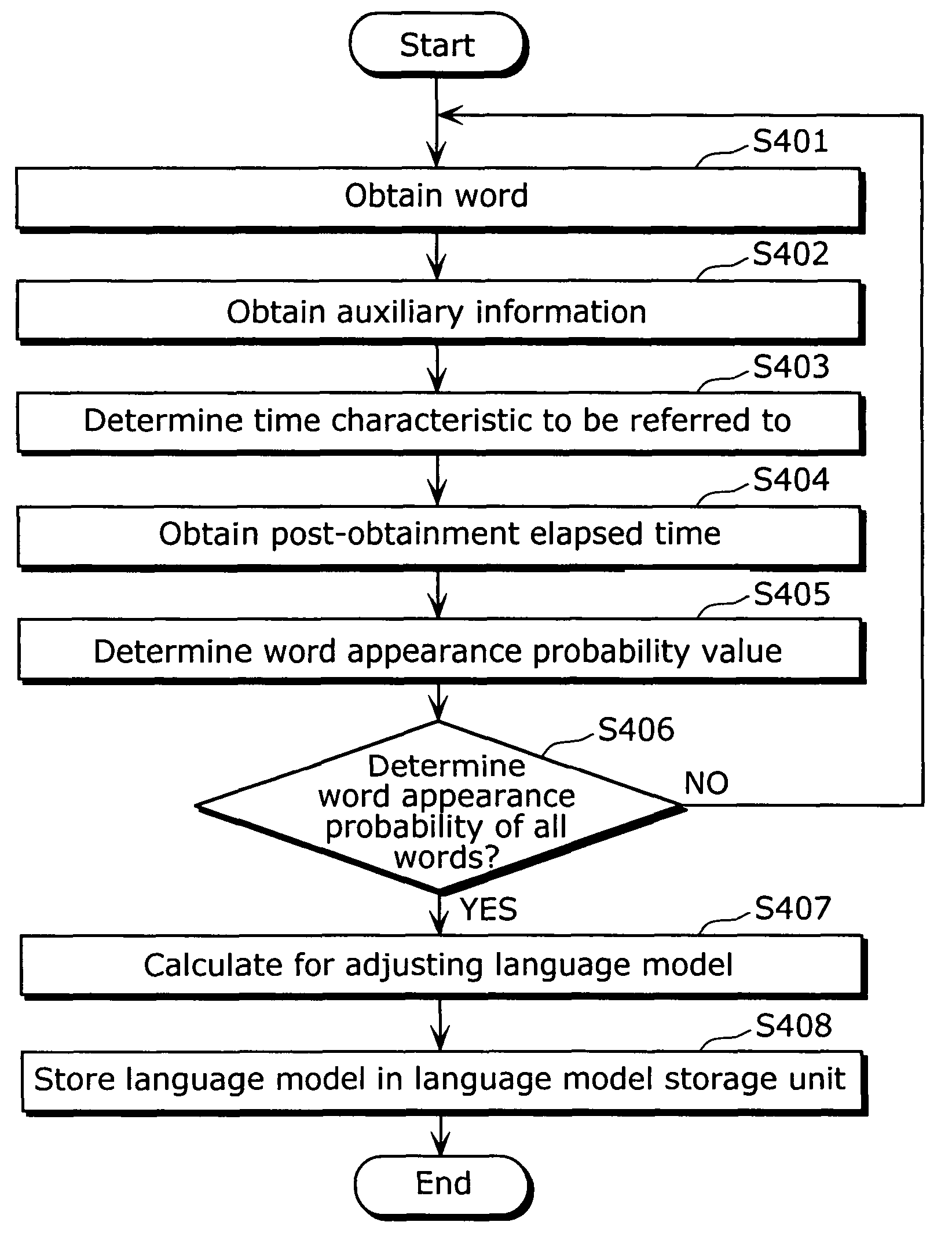

Voice language model adjustment based on user affinity

Status: Inactive | Publication: US7590536B2 | Tags: Improve accuracy; Speech recognition; Data processing system; Facial recognition system

Methods, systems and computer readable medium for improving the accuracy of voice processing are provided. Embodiments of the present invention generally provide methods, systems and articles of manufacture for adjusting a language model within a voice recognition system. In one embodiment, changes are made to the language model by identifying a word-usage pattern that qualifies as an anomaly. In one embodiment, an anomaly occurs when the use of a given word (or phrase) differs from an expected probability for the word (or phrase), as predicted by a language model. Additionally, observed anomalies may be shared and applied by different users of the voice processing system, depending on an affinity in word-usage frequency between different users.

Owner:NUANCE COMM INC
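
One way to picture the anomaly test and the affinity-based sharing is sketched below. The thresholds (`ratio`, `min_count`), the cosine-similarity measure of affinity, and the renormalization step are all assumptions chosen for illustration, not details from the patent.

```python
import math
from collections import Counter


def find_anomalies(words, lm_probs, ratio=3.0, min_count=2, floor=1e-6):
    """Words used at least `ratio` times more often than the language model predicts."""
    counts = Counter(words)
    total = len(words)
    anomalies = {}
    for word, count in counts.items():
        observed = count / total
        expected = lm_probs.get(word, floor)
        if count >= min_count and observed / expected >= ratio:
            anomalies[word] = observed
    return anomalies


def adjust_model(lm_probs, anomalies):
    """Raise anomalous words to their observed frequency, then renormalise to sum to 1."""
    adjusted = dict(lm_probs)
    adjusted.update(anomalies)
    total = sum(adjusted.values())
    return {word: p / total for word, p in adjusted.items()}


def affinity(words_a, words_b):
    """Cosine similarity of two users' word-frequency vectors (assumed affinity measure)."""
    a, b = Counter(words_a), Counter(words_b)
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0
```

A second user whose `affinity` with the first exceeds some chosen threshold could then receive `adjust_model(their_probs, anomalies)`, which is one reading of how observed anomalies might be shared.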

Disambiguation language model

Status: Inactive | Publication: US20020128831A1 | Tags: Improve accuracy; Automatic call-answering/message-recording/conversation-recording; Speech recognition; Speech identification; Speech sound

A language model for a language processing system such as a speech recognition system is formed as a function of associated characters, word phrases and context cues. A method and apparatus for generating the training corpus used to train the language model and a system or module using such a language model is disclosed.

Owner:MICROSOFT TECH LICENSING LLC

Speech recognition apparatus and speech recognition method

Status: Active | Publication: US20060100876A1 | Tags: Appropriate performance; Complicated process; Speech recognition; Speech identification; Speech sound

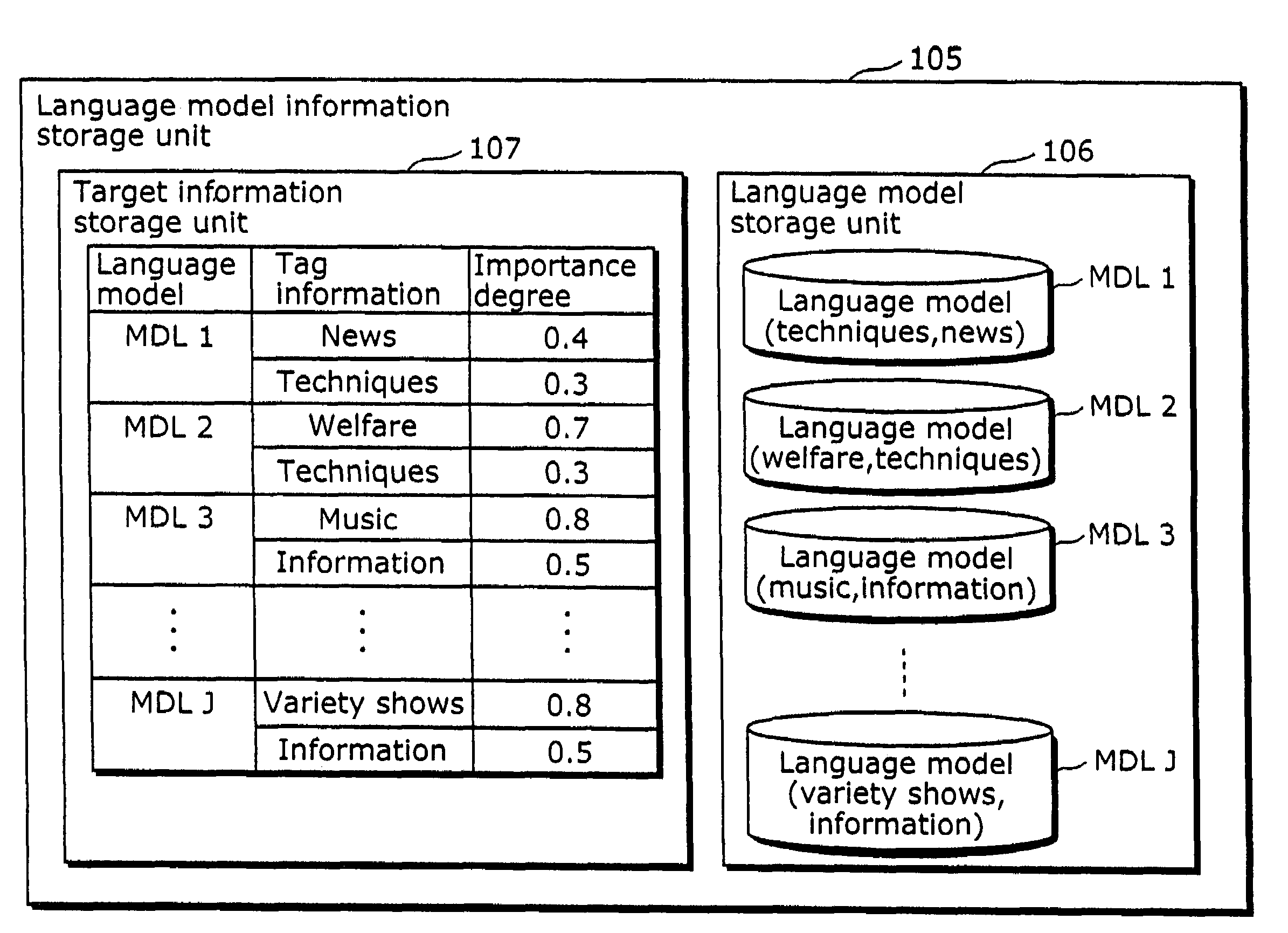

The aim is to provide a speech recognition apparatus that performs speech recognition appropriately, even when the topic changes, by generating language models adapted to the new topic in real time. The speech recognition apparatus includes: a word specification unit for obtaining and specifying a word; a language model information storage unit for storing language models for recognizing speech and the respectively corresponding pieces of tag information; a combination coefficient calculation unit for calculating the weights of the respective language models, as combination coefficients, according to the word obtained by the word specification unit, based on the degree of relevance between that word and the tag information of each language model; a language probability calculation unit for calculating the probabilities of word appearance by combining the respective language models according to the calculated combination coefficients; and a speech recognition unit for recognizing speech using the calculated probabilities of word appearance.

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA
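
The coefficient calculation and combination steps can be sketched as a relevance-weighted linear interpolation. Representing each model's tag information as a tag-to-score dictionary, the smoothing constant `eps`, and normalizing relevance scores directly into weights are assumptions; the abstract does not say how relevance is computed.

```python
def combination_coefficients(word, tag_info, eps=0.01):
    """Weight each topic model by the relevance of `word` to its tags, normalised to sum to 1.
    tag_info maps model name -> {tag: relevance score} (an assumed representation)."""
    raw = {name: tags.get(word, 0.0) + eps for name, tags in tag_info.items()}
    total = sum(raw.values())
    return {name: r / total for name, r in raw.items()}


def combined_probability(target, models, coeffs):
    """Linear interpolation: P(target) = sum_i c_i * P_i(target)."""
    return sum(coeffs[name] * probs.get(target, 0.0) for name, probs in models.items())
```

Because `eps` keeps every coefficient above zero, a model whose tags are unrelated to the current word still contributes a little probability mass, which avoids assigning zero probability when the topic guess is wrong.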

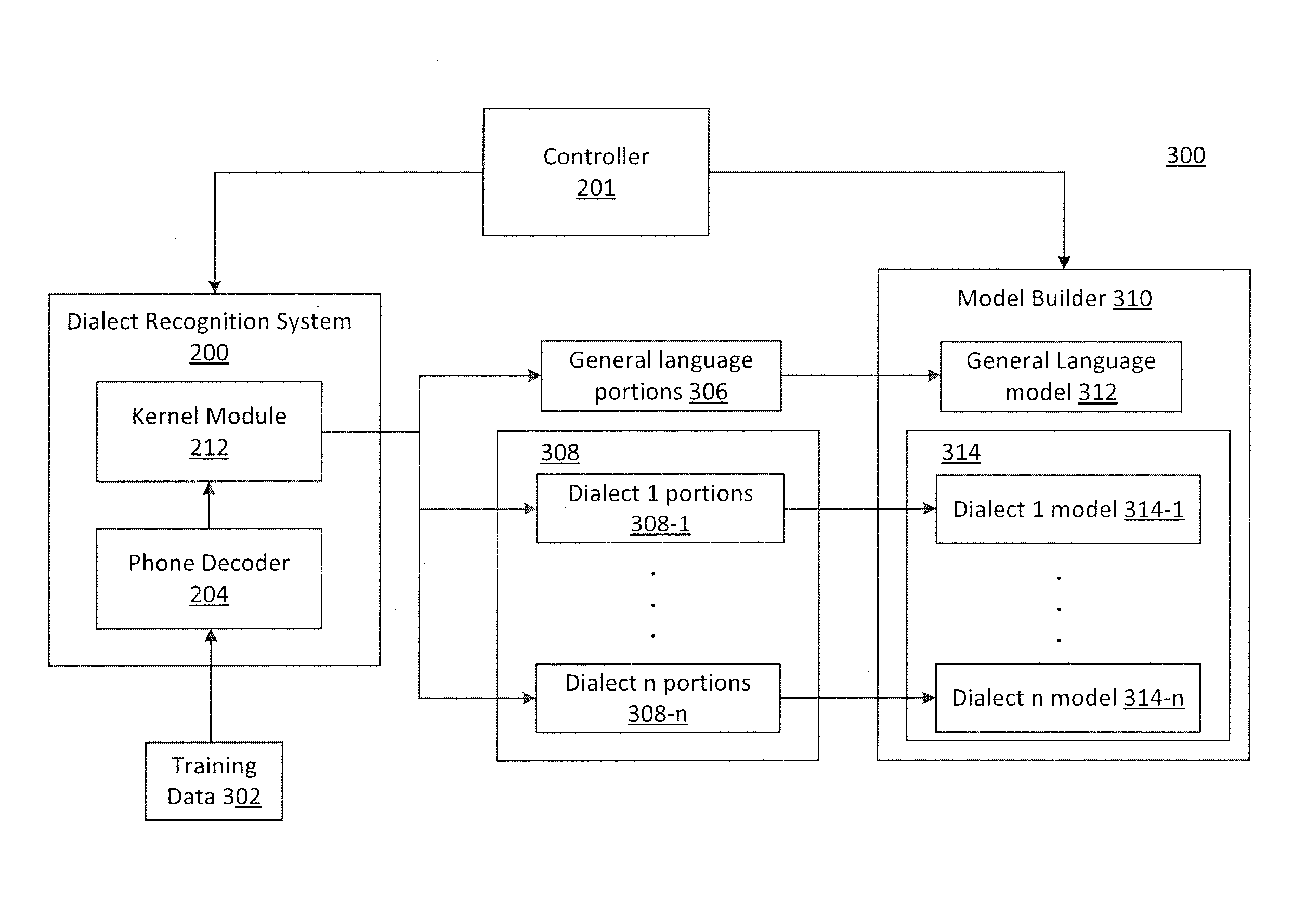

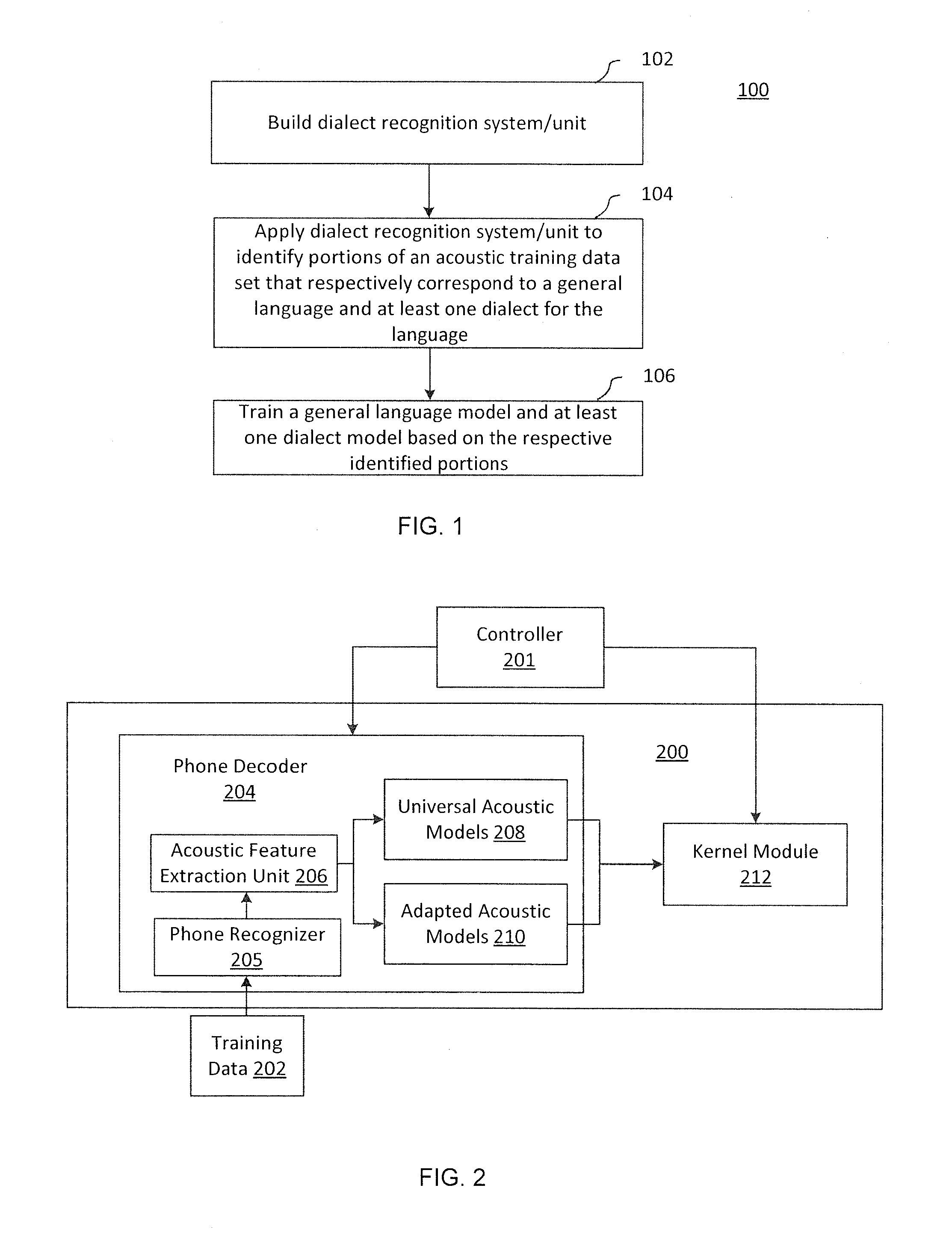

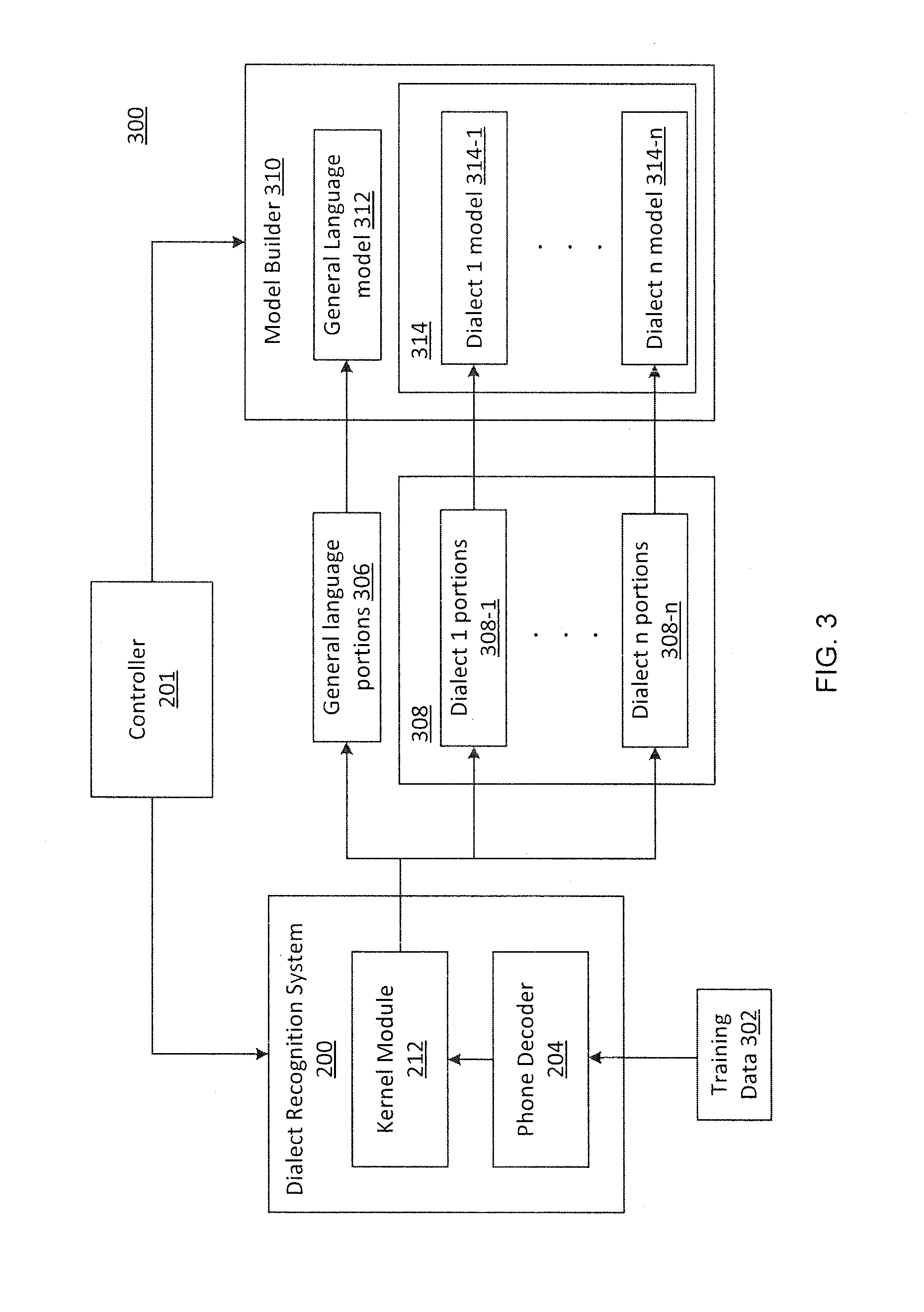

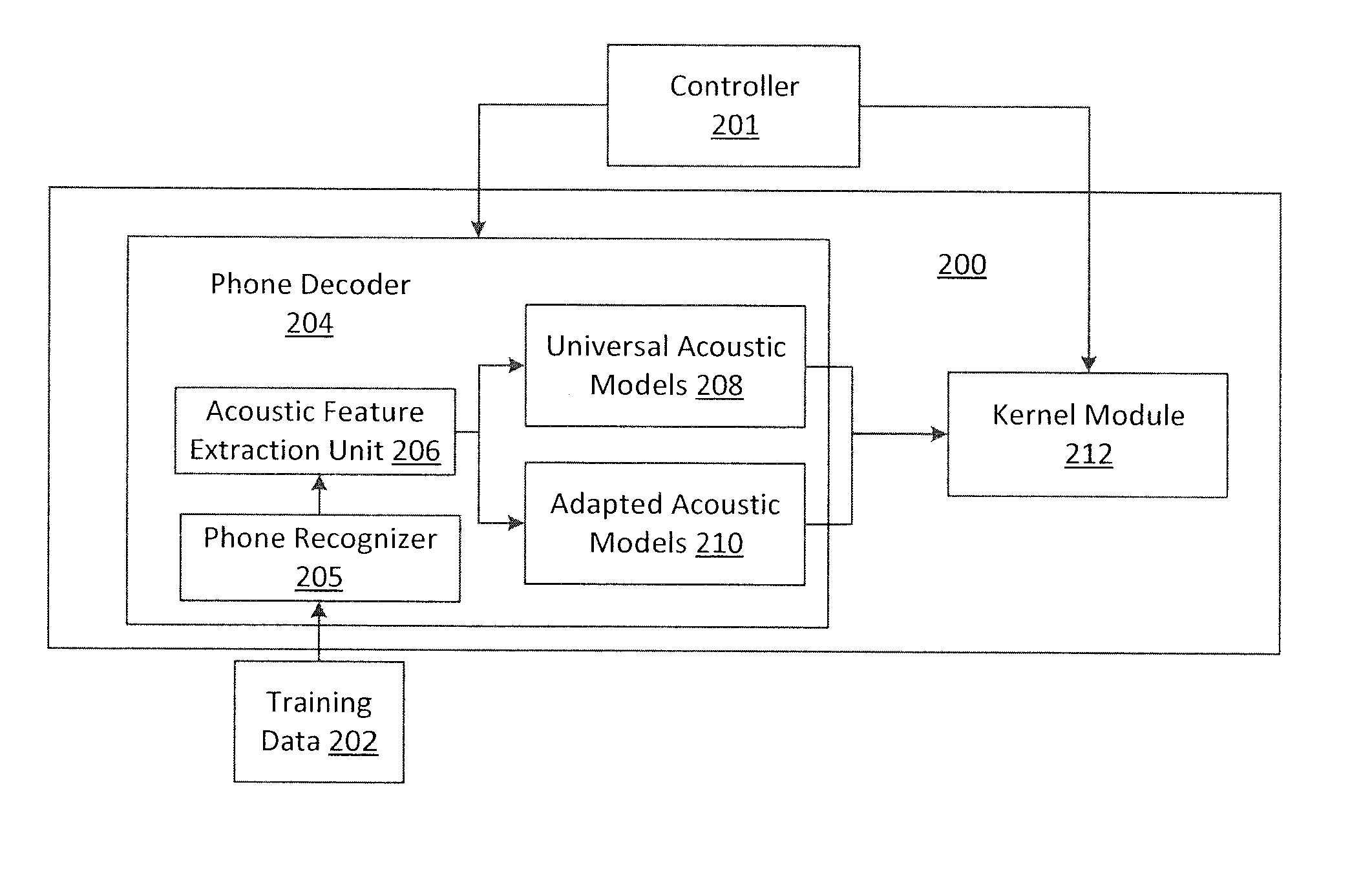

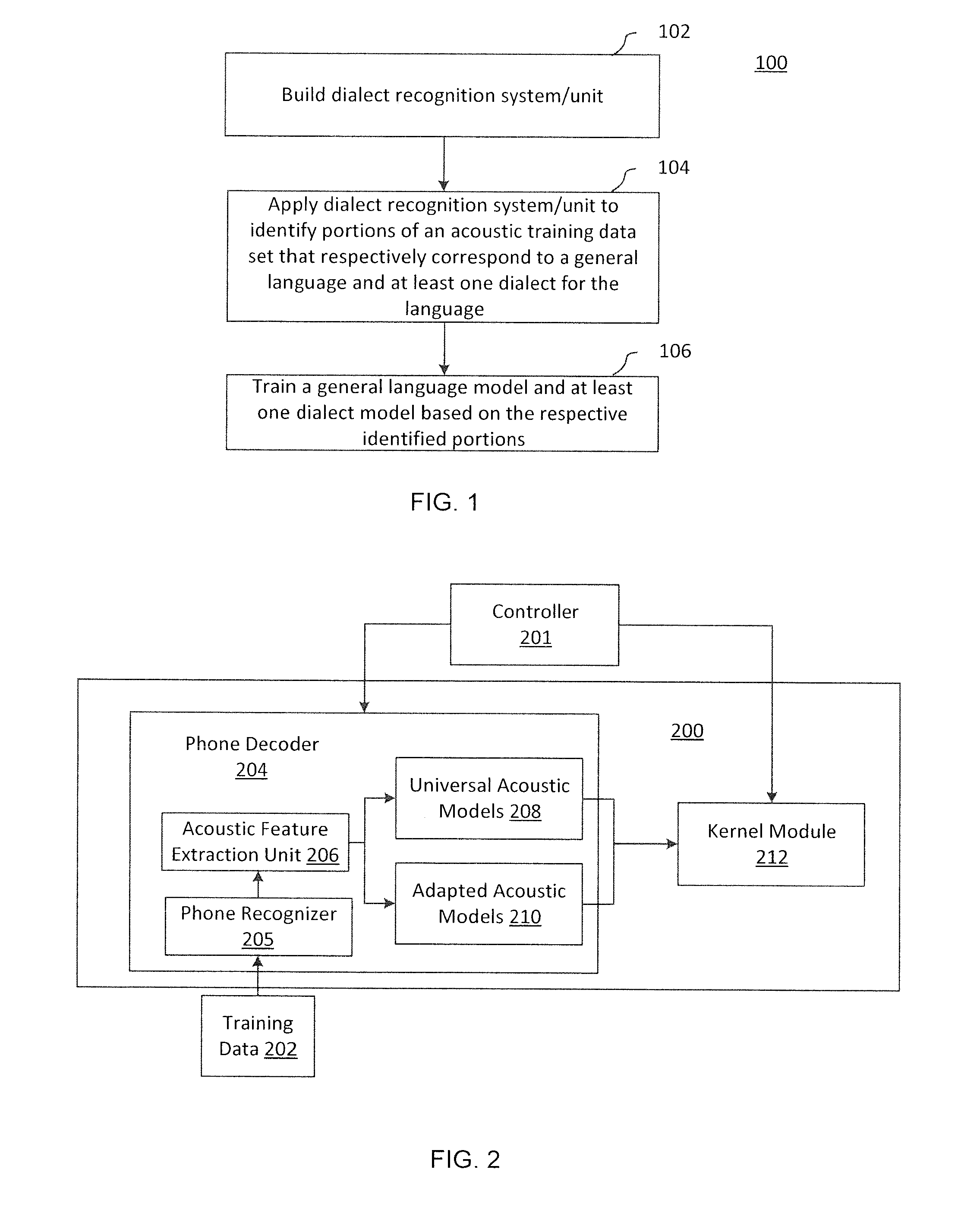

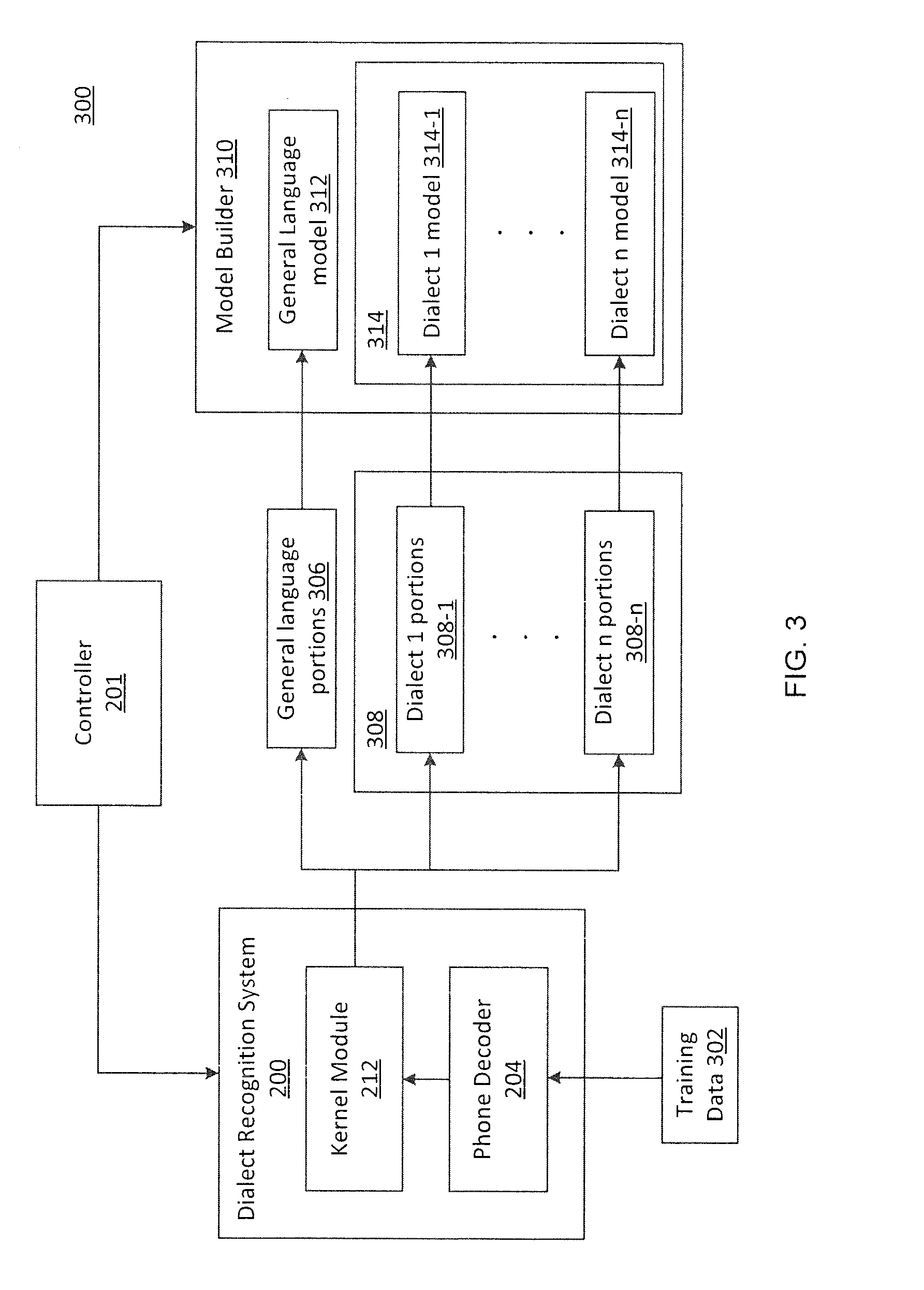

Dialect-specific acoustic language modeling and speech recognition

Status: Inactive | Publication: US8583432B1 | Tags: Speech recognition; Special data processing applications; Automatic speech; General-purpose language

Methods and systems for automatic speech recognition and methods and systems for training acoustic language models are disclosed. One system for automatic speech recognition includes a dialect recognition unit and a controller. The dialect recognition unit is configured to analyze acoustic input data to identify portions of the acoustic input data that conform to a general language and to identify portions of the acoustic input data that conform to at least one dialect of the general language. In addition, the controller is configured to apply a general language model and at least one dialect language model to the input data to perform speech recognition by dynamically selecting between the models in accordance with each of the identified portions.

Owner:IBM CORP

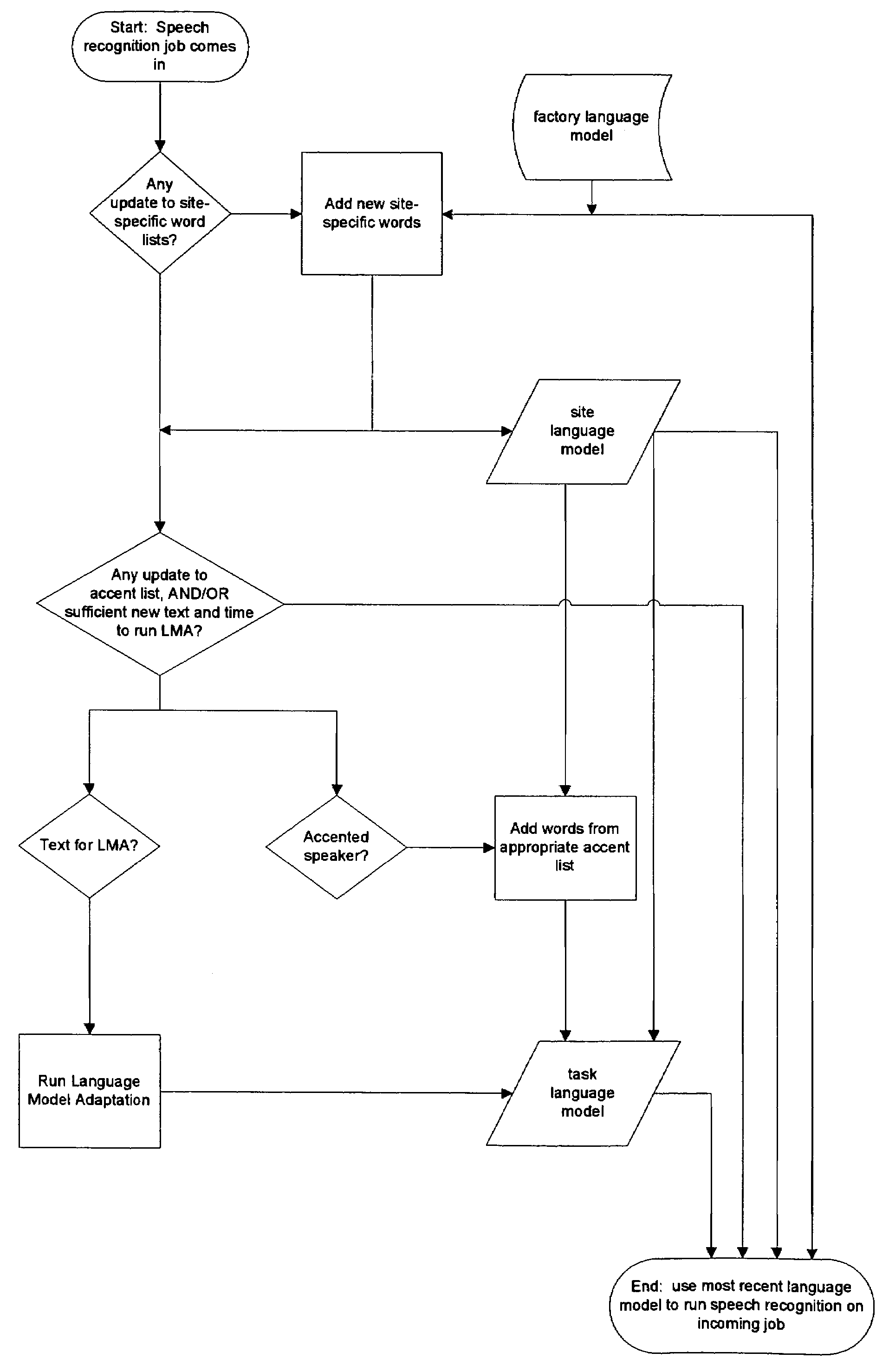

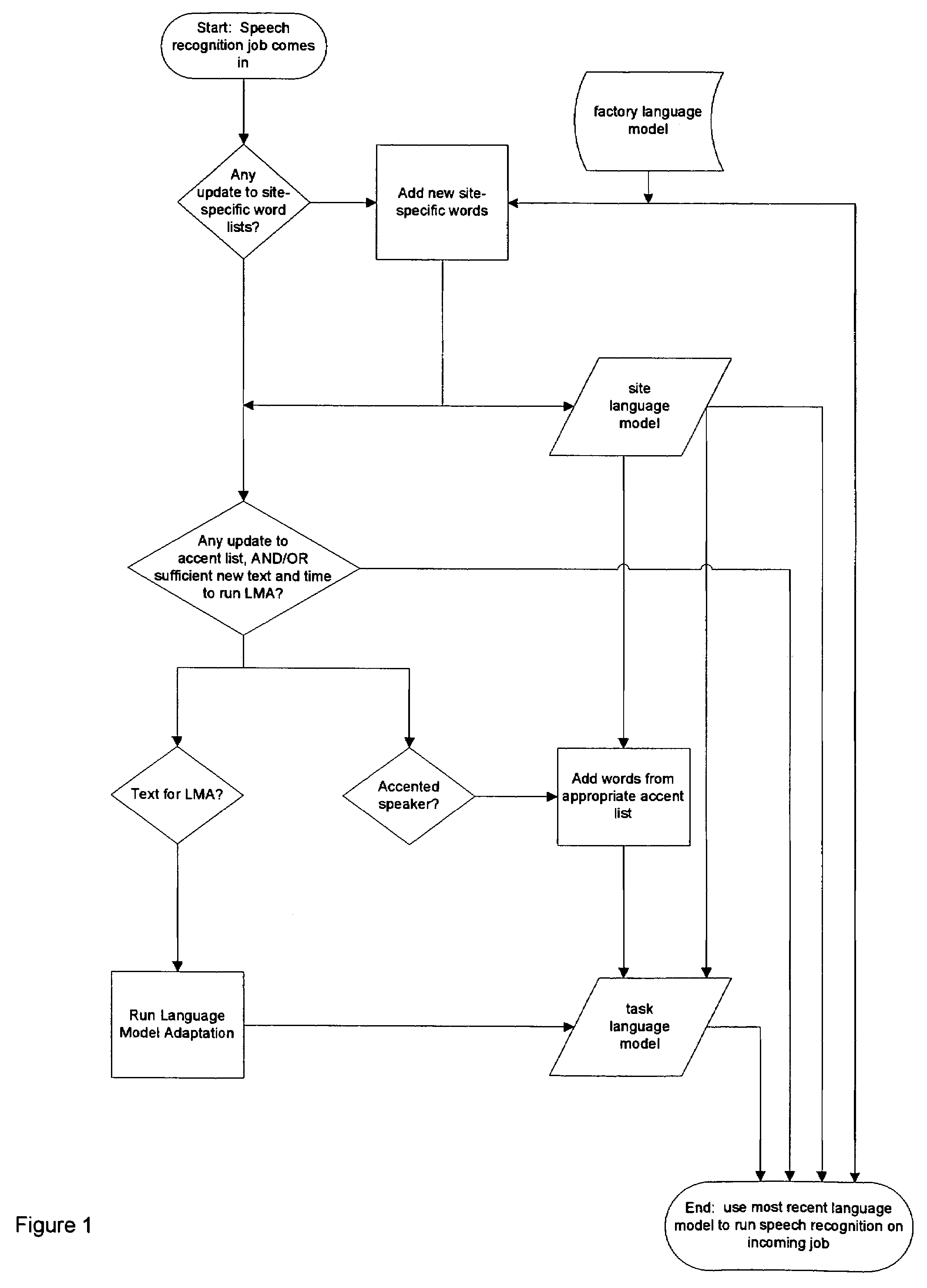

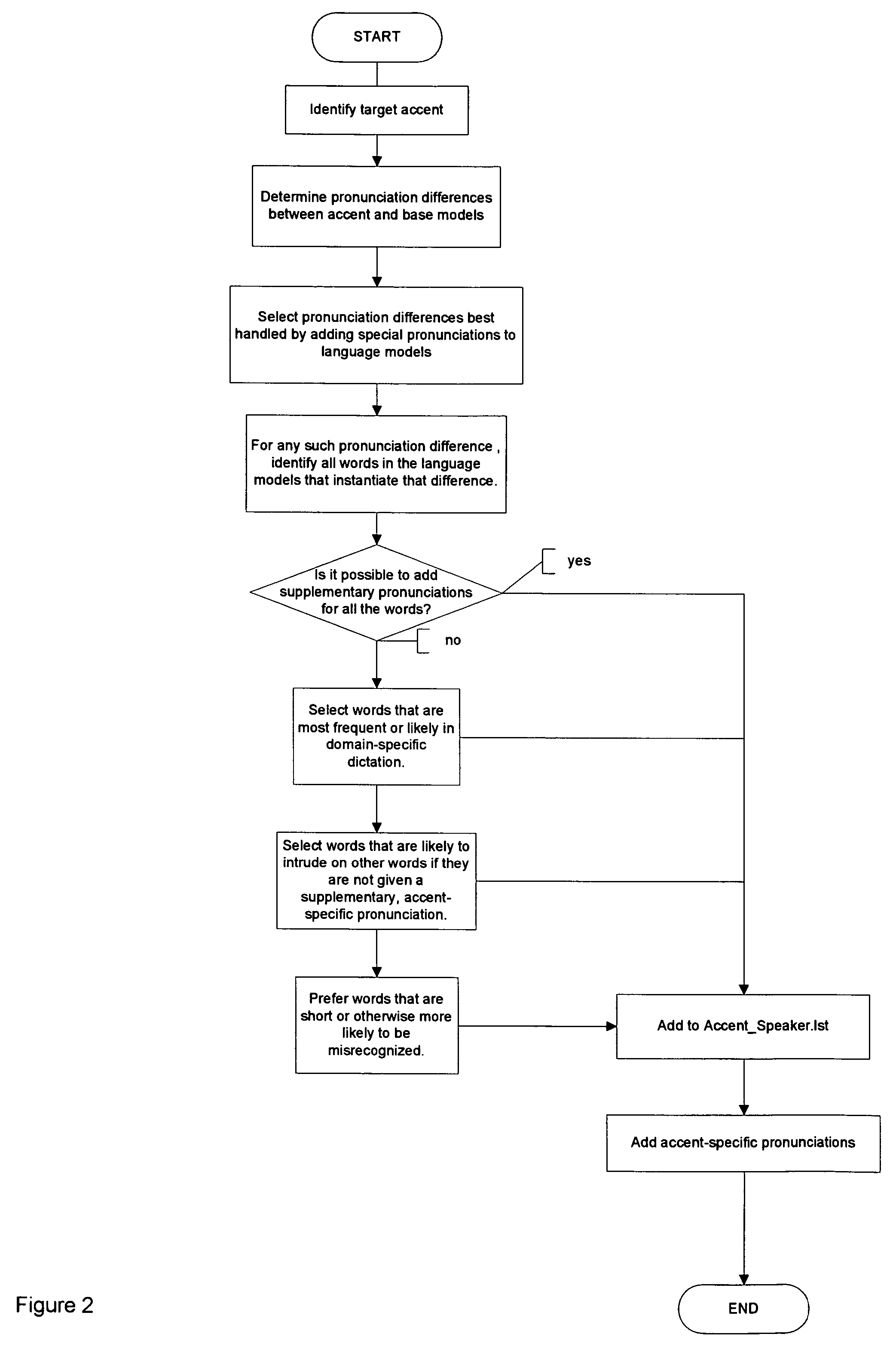

System and method for accented modification of a language model

Status: Active | Publication: US7315811B2 | Tags: Speech recognition; Special data processing applications; Speech identification; Speech sound

A system and method for a speech recognition technology that allows language models for a particular language to be customized through the addition of alternate pronunciations that are specific to the accent of the dictator, for a subset of the words in the language model. The system includes the steps of identifying the pronunciation differences that are best handled by modifying the pronunciations of the language model, identifying target words in the language model for pronunciation modification, and creating an accented speech file used to modify the language model.

Owner:NUANCE COMM INC

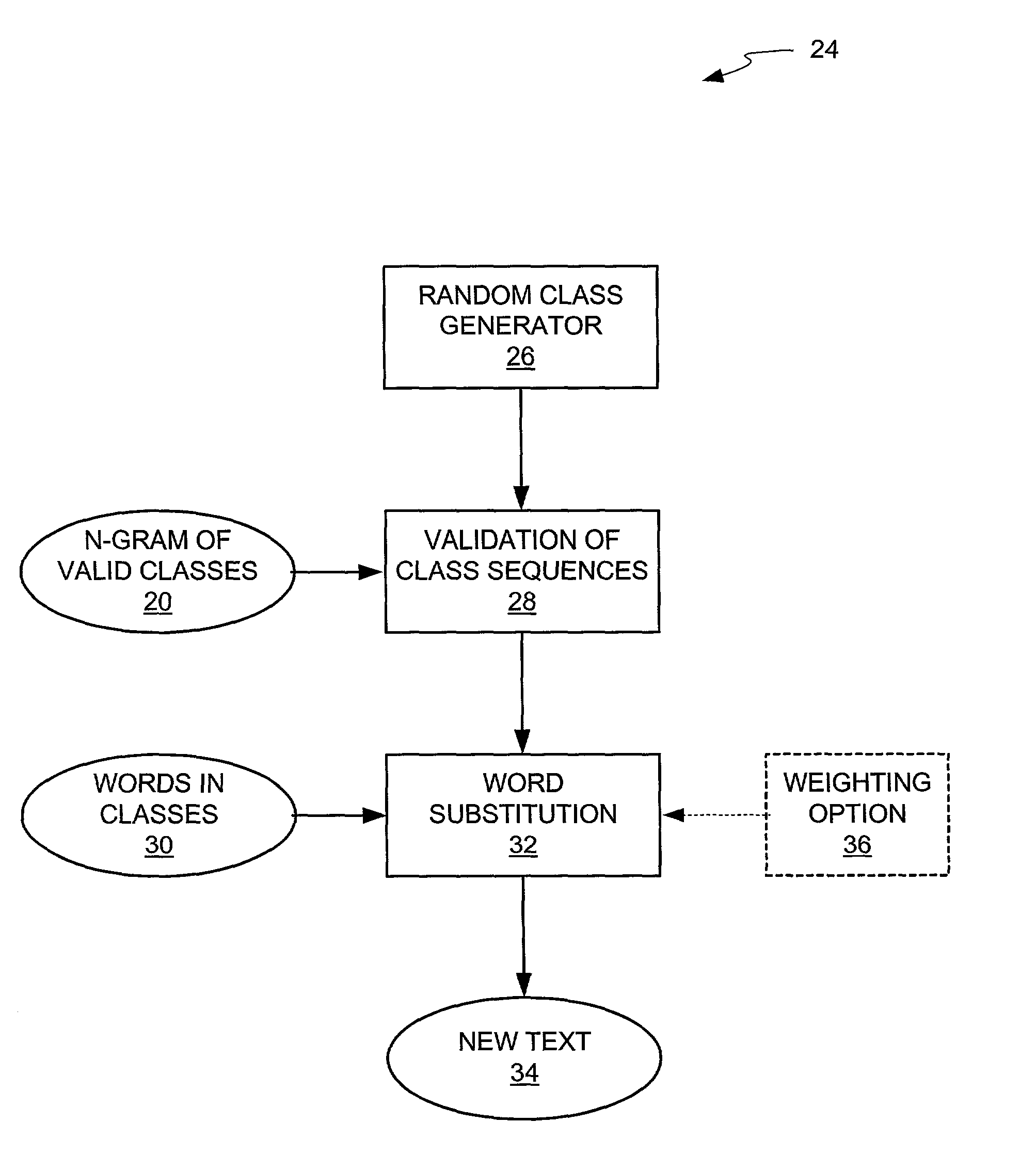

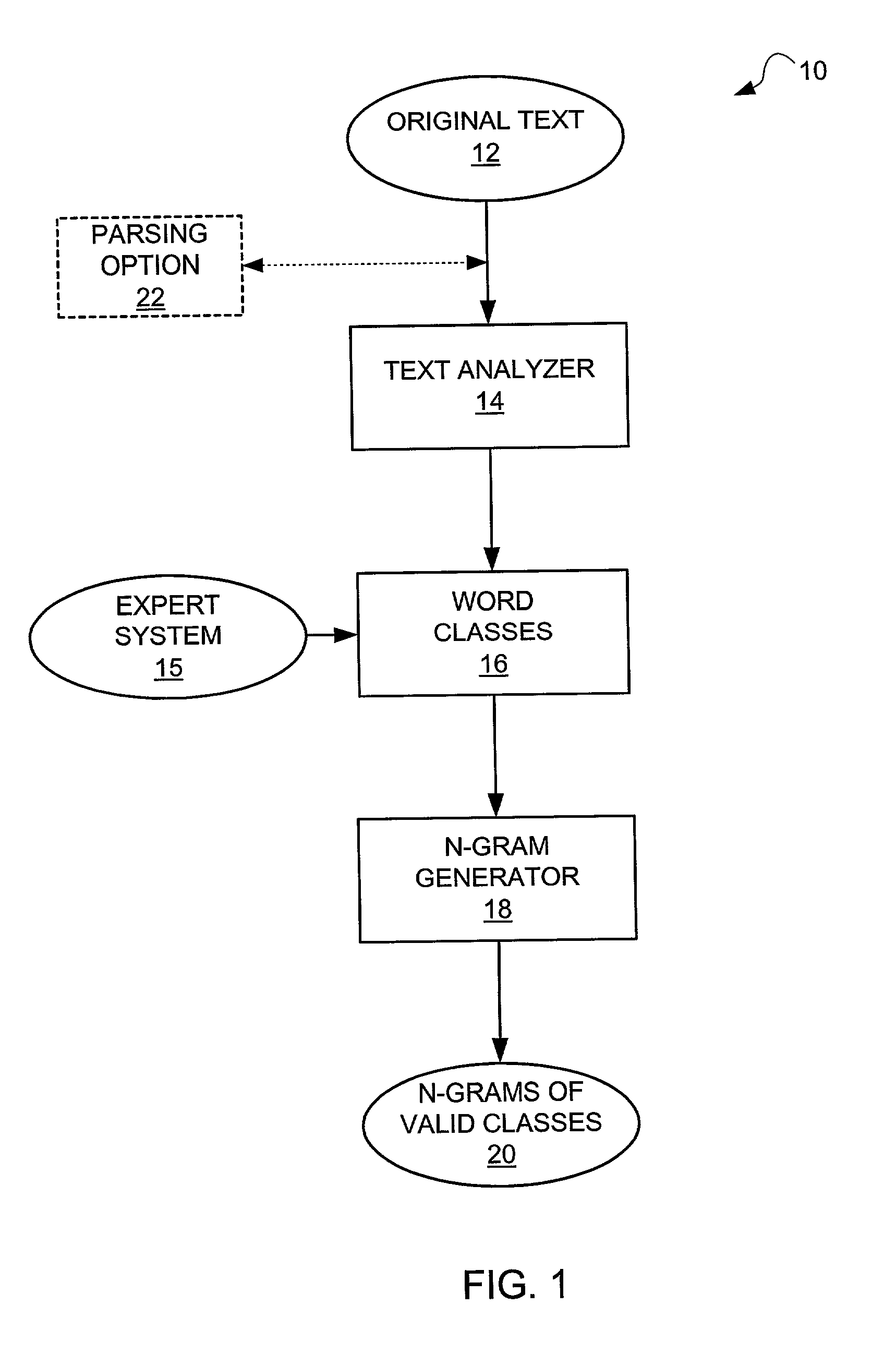

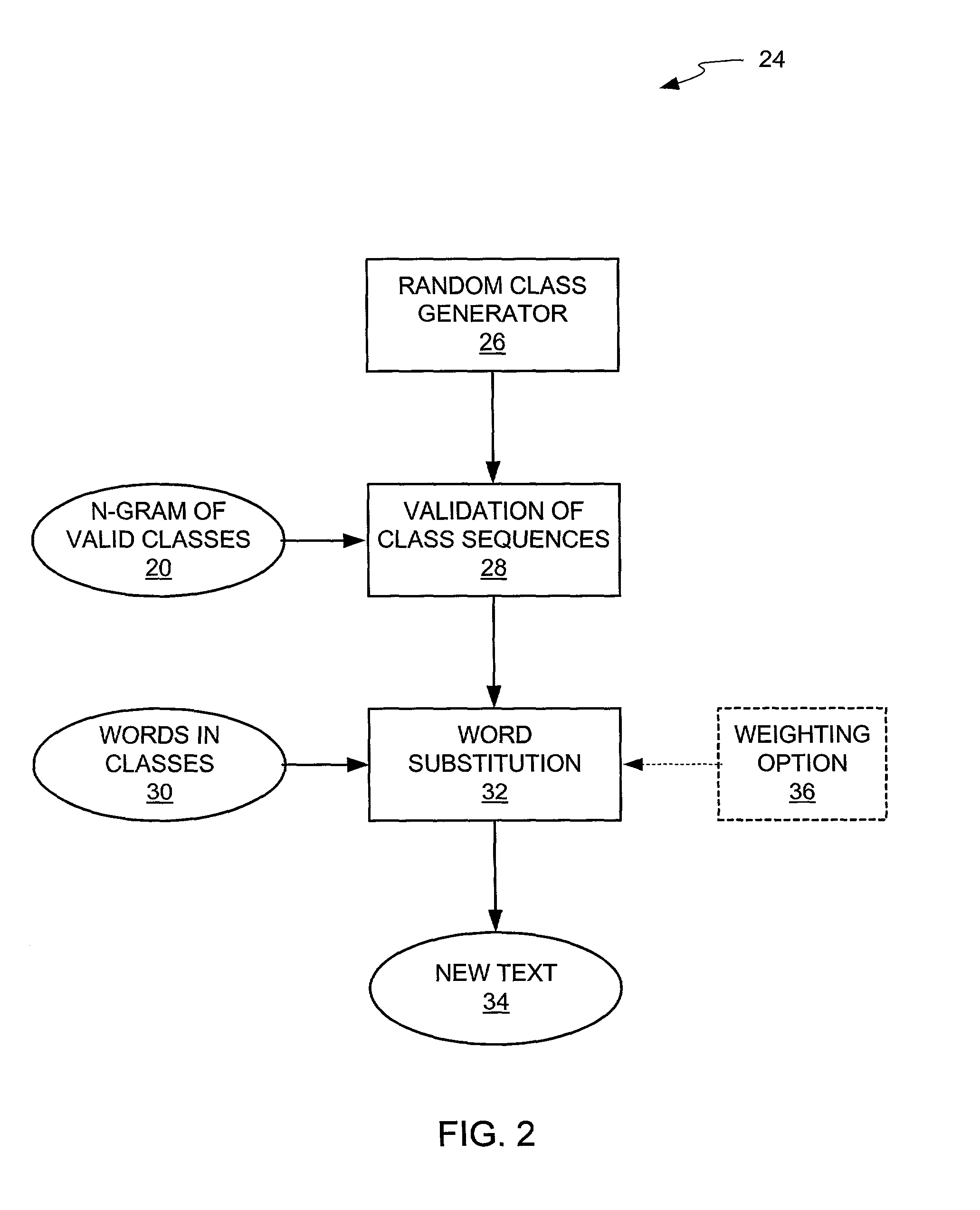

Supervised automatic text generation based on word classes for language modeling

Status: Inactive | Publication: US7035789B2 | Tags: Simple structure; Improve accuracy; Natural language data processing; Speech recognition; Cache language model; Language model

A system and method are provided that randomly generate text with a given structure. The structure is taken from a number of learning examples. The structure of the training examples is captured by word classification and by the definition of the relationships between word classes in a given language. The text generated with this procedure is intended to replicate the information given by the original learning examples. The resulting text may be used to better model the structure of a language in a stochastic language model.

Owner:SONY CORP +1
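
The class-based generation idea might be sketched as follows: each learning example becomes a template by replacing class members with their class label, and new sentences are produced by filling each slot with a random class member. Flat templates are a simplification of the class relationships the abstract mentions, and the function names are hypothetical.

```python
import random


def learn_templates(examples, word_class):
    """Turn each example sentence into a template by replacing class members with class labels."""
    return [[word_class.get(word, word) for word in sentence.split()] for sentence in examples]


def generate(templates, class_members, n, rng):
    """Sample n sentences: pick a template, fill every class slot with a random member."""
    sentences = []
    for _ in range(n):
        template = rng.choice(templates)
        words = [rng.choice(class_members[tok]) if tok in class_members else tok for tok in template]
        sentences.append(" ".join(words))
    return sentences
```

Passing a seeded generator such as `random.Random(0)` makes the synthetic corpus reproducible; the sentences could then be added to the training text of an n-gram model, covering class members (here, for instance, a city never seen in the examples) that the original examples lacked.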

Network and language models for use in a speech recognition system

Status: Inactive | Publication: US6668243B1 | Tags: Lower Level Requirements; Quick and efficient access; Speech recognition; Network model; Speech identification

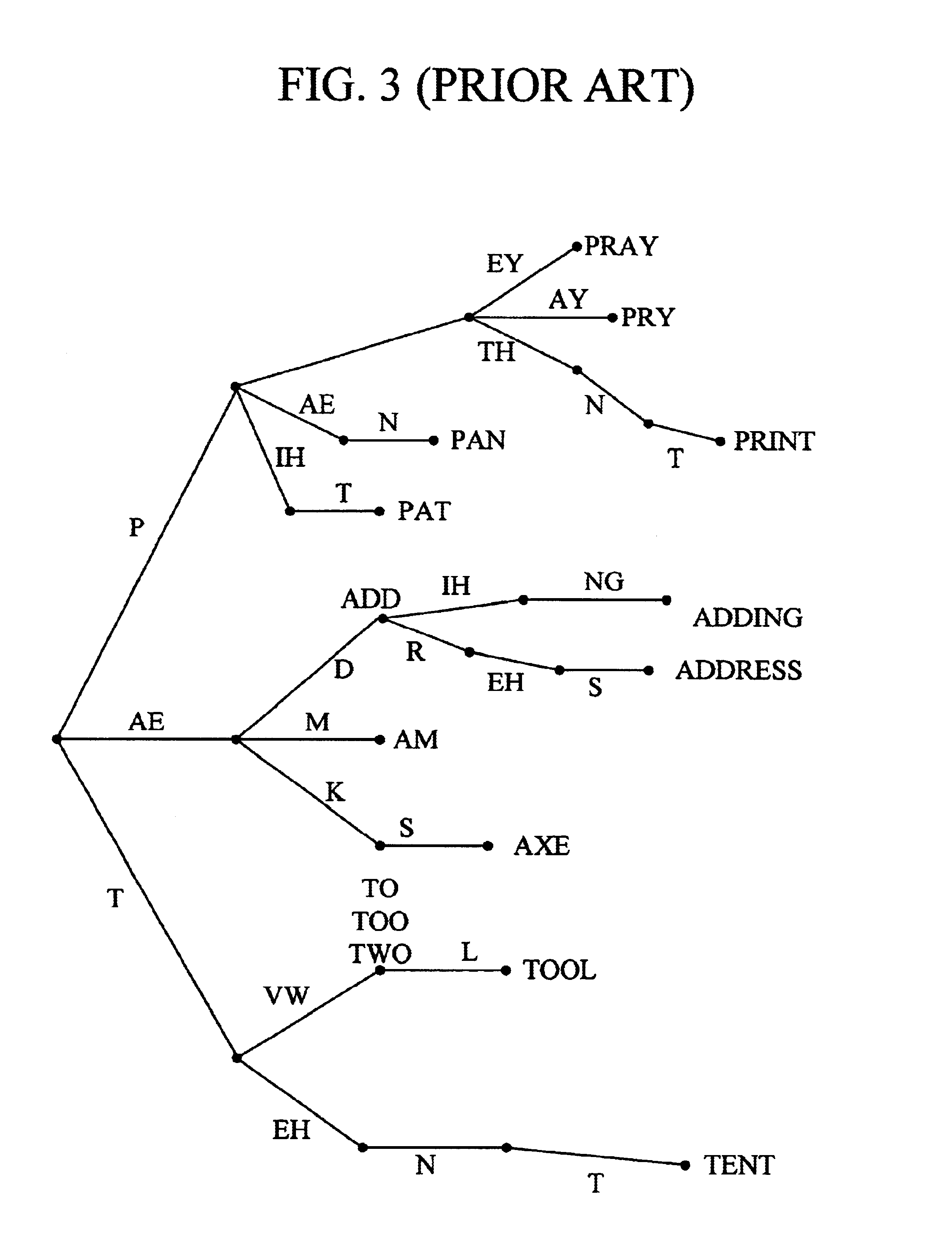

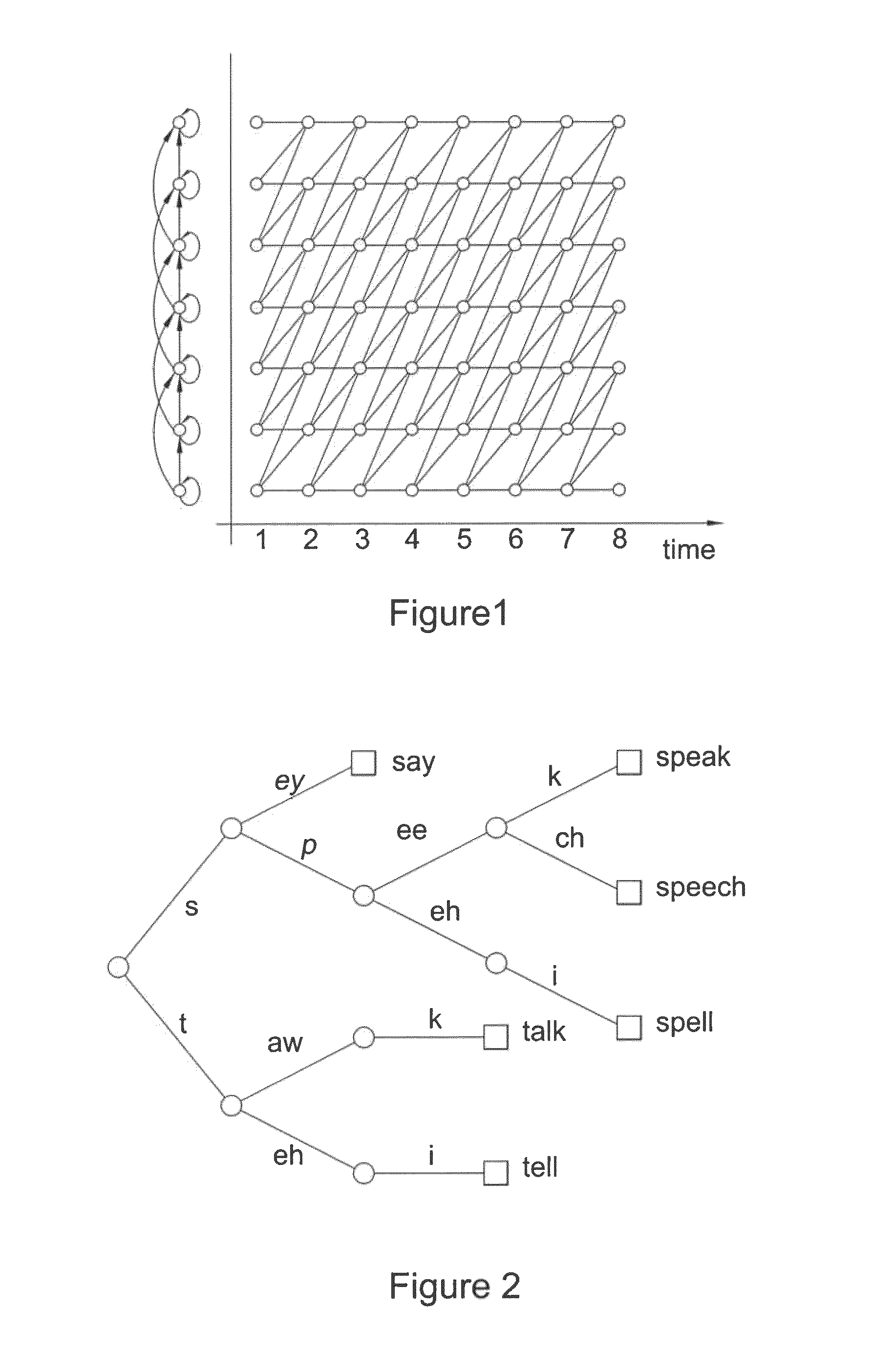

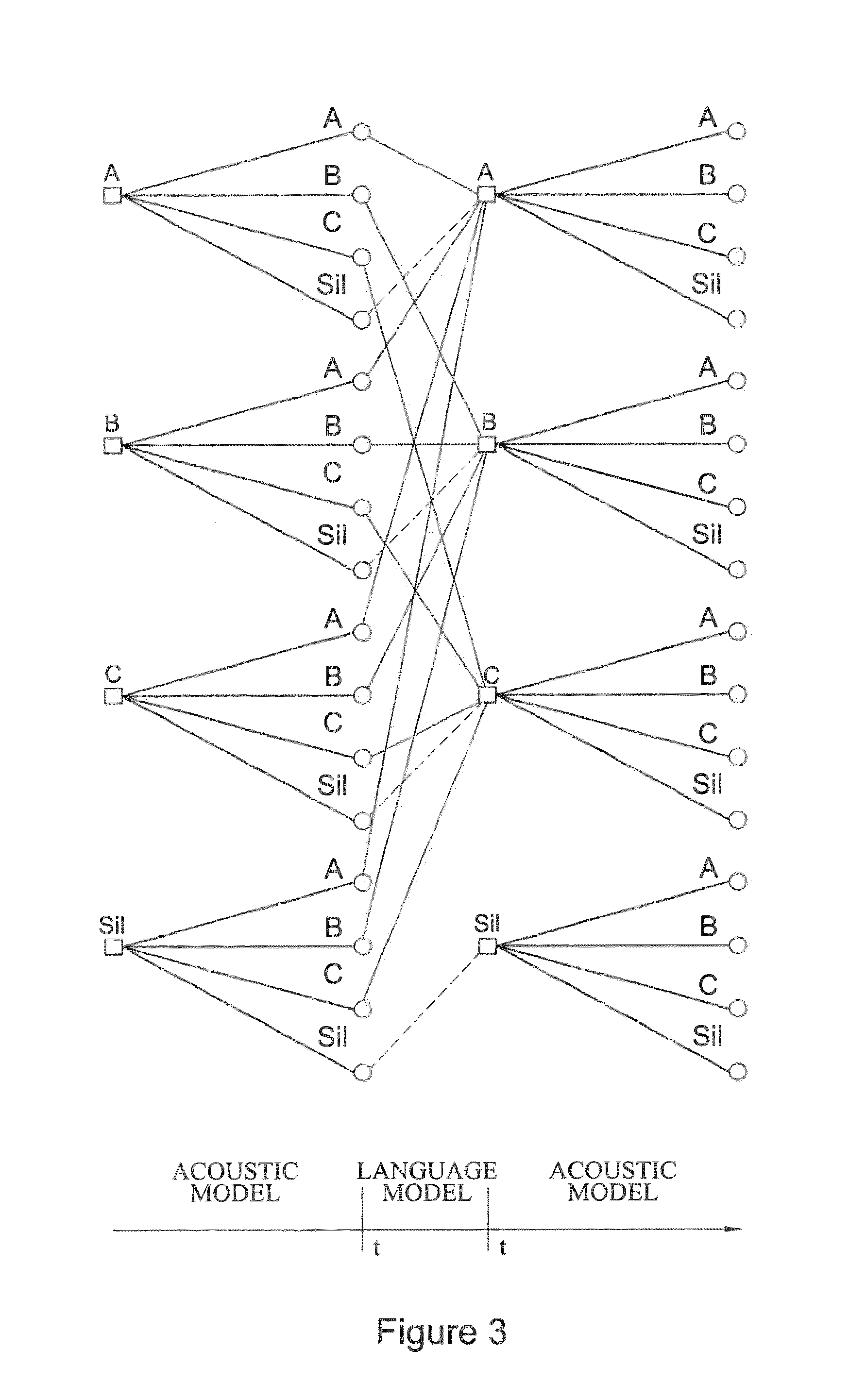

A language model structure for use in a speech recognition system employs a tree-structured network model. The language model is structured such that identifiers associated with each word and contained therein are arranged such that each node of the network model with which the language model is associated spans a continuous range of identifiers. A method of transferring tokens through a tree-structured network in a speech recognition process is also provided.

Owner:MICROSOFT TECH LICENSING LLC
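
The contiguous-range property can be illustrated by numbering the words of a prefix tree in depth-first order. In this sketch letters stand in for the phone units of a real recognizer's network, and `assign_ranges` is a hypothetical helper; the point is only that every node's descendants end up with IDs in a single interval `[lo, hi)`, so a node can be described by two integers.

```python
def assign_ranges(words):
    """Build a letter trie and number words in depth-first order.
    Returns (word -> id, node prefix -> (lo, hi)), where each node's words have ids in [lo, hi)."""
    trie = {}
    for word in words:
        node = trie
        for ch in word:
            node = node.setdefault(ch, {})
        node["$"] = word                      # end-of-word marker ("$" sorts before letters)

    ids, ranges = {}, {}

    def visit(node, prefix):
        lo = len(ids)
        for key in sorted(node):
            if key == "$":
                ids[node[key]] = len(ids)     # the word ending here gets the next free id
            else:
                visit(node[key], prefix + key)
        ranges[prefix] = (lo, len(ids))

    visit(trie, "")
    return ids, ranges
```

With the IDs in place, the best language model score under a node could be taken as `max(scores_by_id[lo:hi])` over a list slice (`scores_by_id` being a hypothetical per-word score list) rather than by walking the node's subtree.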

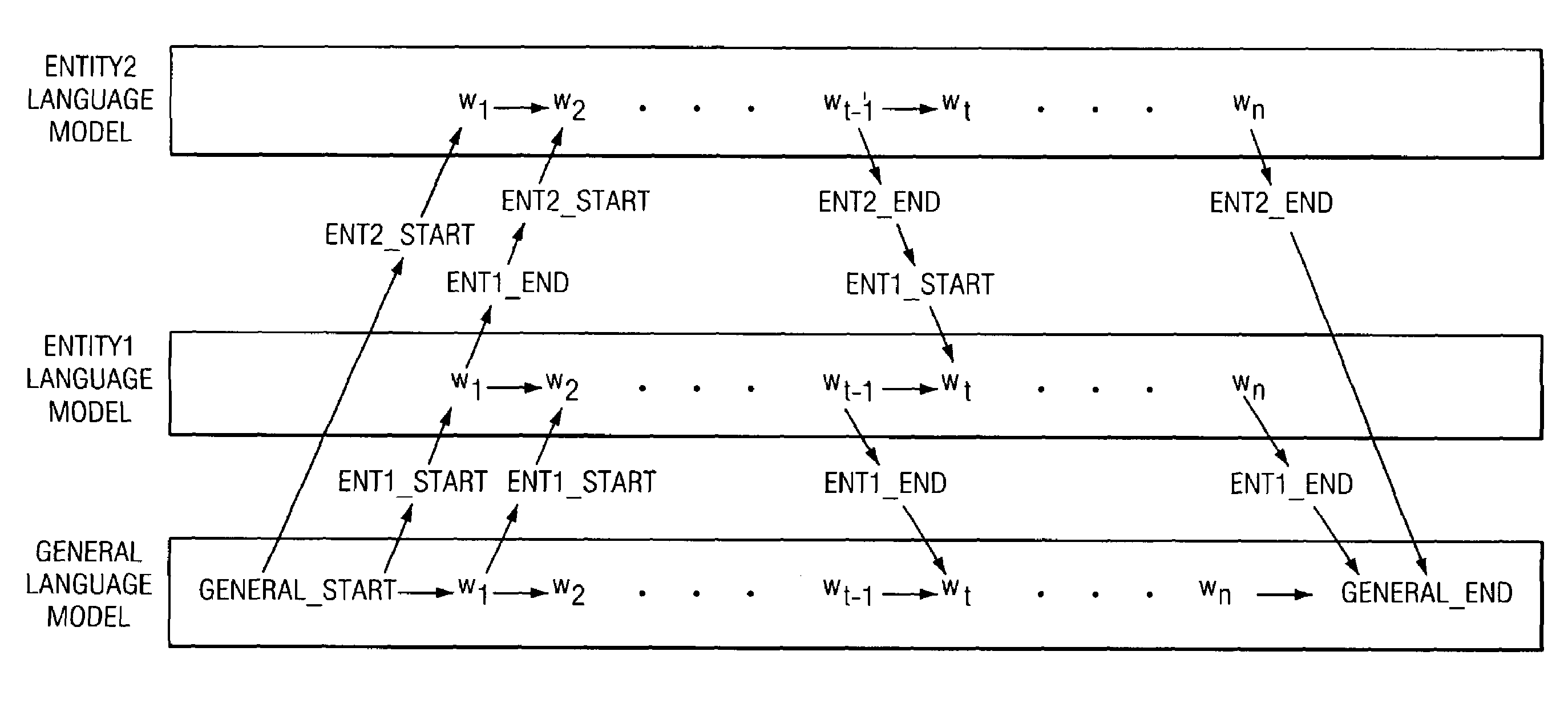

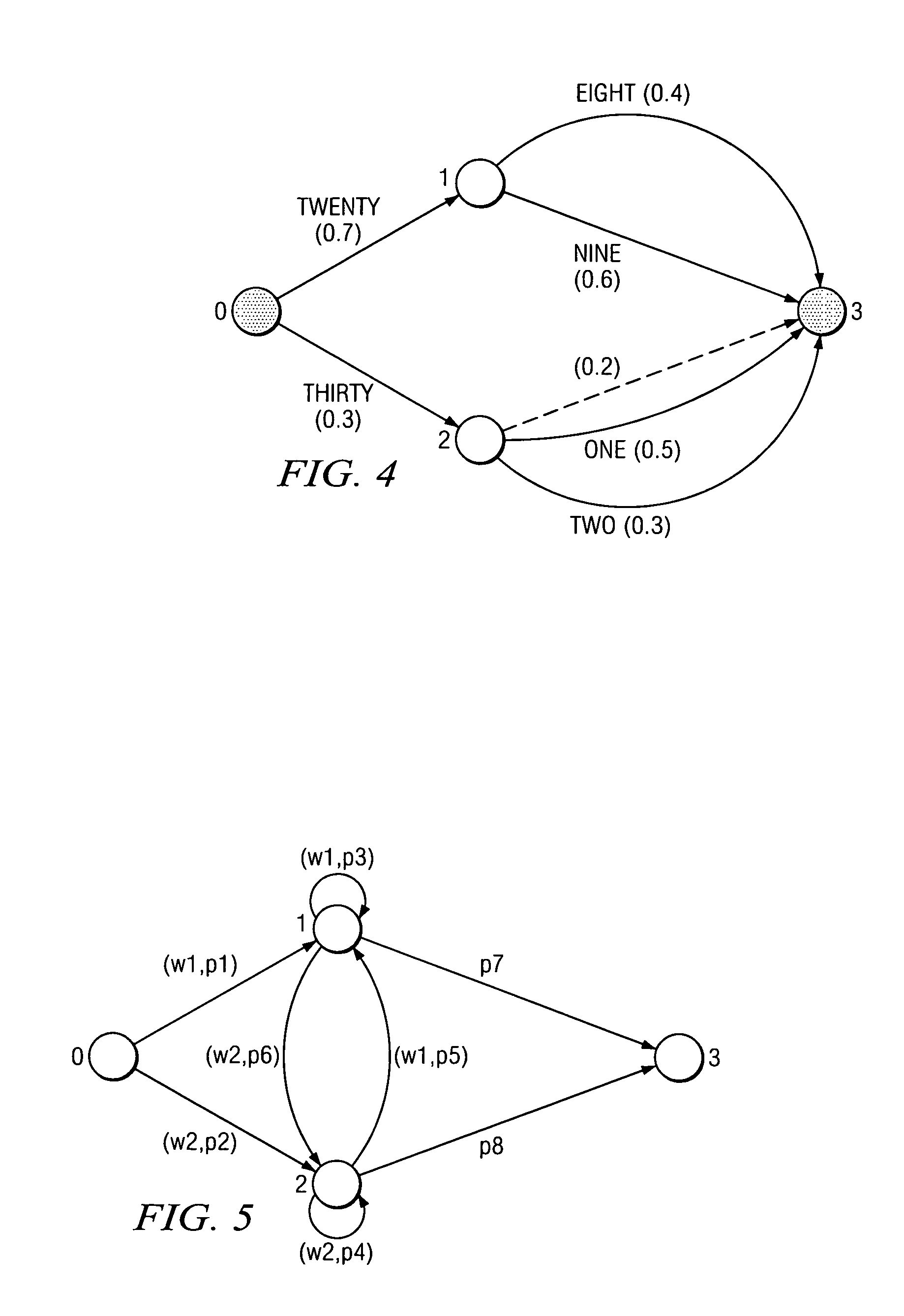

Name entity extraction using language models

Status: Active | Publication: US7299180B2 | Tags: Shorten the time; Save expense; Natural language data processing; Speech recognition; Natural language understanding; Algorithm

A name entity extraction technique using language models is provided. A general language model is provided for the natural language understanding domain. A language model is also provided for each name entity. The name entity language models are added to the general language model. Each language model is considered a state. Probabilities are applied for each transition within a state and between each state. For each word in an utterance, the name extraction process determines a best current state and a best previous state. When the end of the utterance is reached, the process traces back to find the best path. Each series of words in a state other than the general language model state is identified as a name entity. A technique is provided to iteratively extract names and retrain the general language model until the probabilities do not change. The name entity extraction technique of the present invention may also use a general language model with uniform probabilities to save the time and expense of training the general language model.

Owner:NUANCE COMM INC
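
The decoding step described above is a Viterbi search in which each state is a language model. The sketch below assumes unigram state models, hand-set transition and start probabilities, and a probability floor for unseen words; it omits the patent's iterative retraining loop.

```python
import math


def extract_entities(words, state_lms, trans, start, floor=1e-6):
    """Viterbi decoding over states that are each a unigram language model.
    Runs of words decoded into any state other than 'general' are returned as entities."""
    states = list(state_lms)

    def emit(state, word):
        return math.log(state_lms[state].get(word, floor))

    score = {s: math.log(start[s]) + emit(s, words[0]) for s in states}
    backpointers = []
    for word in words[1:]:
        best_prev, new_score = {}, {}
        for s in states:
            # best previous state for landing in s
            p = max(states, key=lambda q: score[q] + math.log(trans[q][s]))
            best_prev[s] = p
            new_score[s] = score[p] + math.log(trans[p][s]) + emit(s, word)
        backpointers.append(best_prev)
        score = new_score

    # trace back from the best final state to recover the best path
    state = max(states, key=score.get)
    path = [state]
    for best_prev in reversed(backpointers):
        state = best_prev[state]
        path.append(state)
    path.reverse()

    # group consecutive words that share a non-general state
    entities, current, current_state = [], [], None
    for word, s in zip(words, path):
        if s != current_state and current:
            entities.append((current_state, " ".join(current)))
            current = []
        current_state = s
        if s != "general":
            current.append(word)
    if current:
        entities.append((current_state, " ".join(current)))
    return entities
```

Replacing the `general` table with a uniform distribution corresponds to the cheaper variant mentioned at the end of the abstract, at the cost of weaker context modeling.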

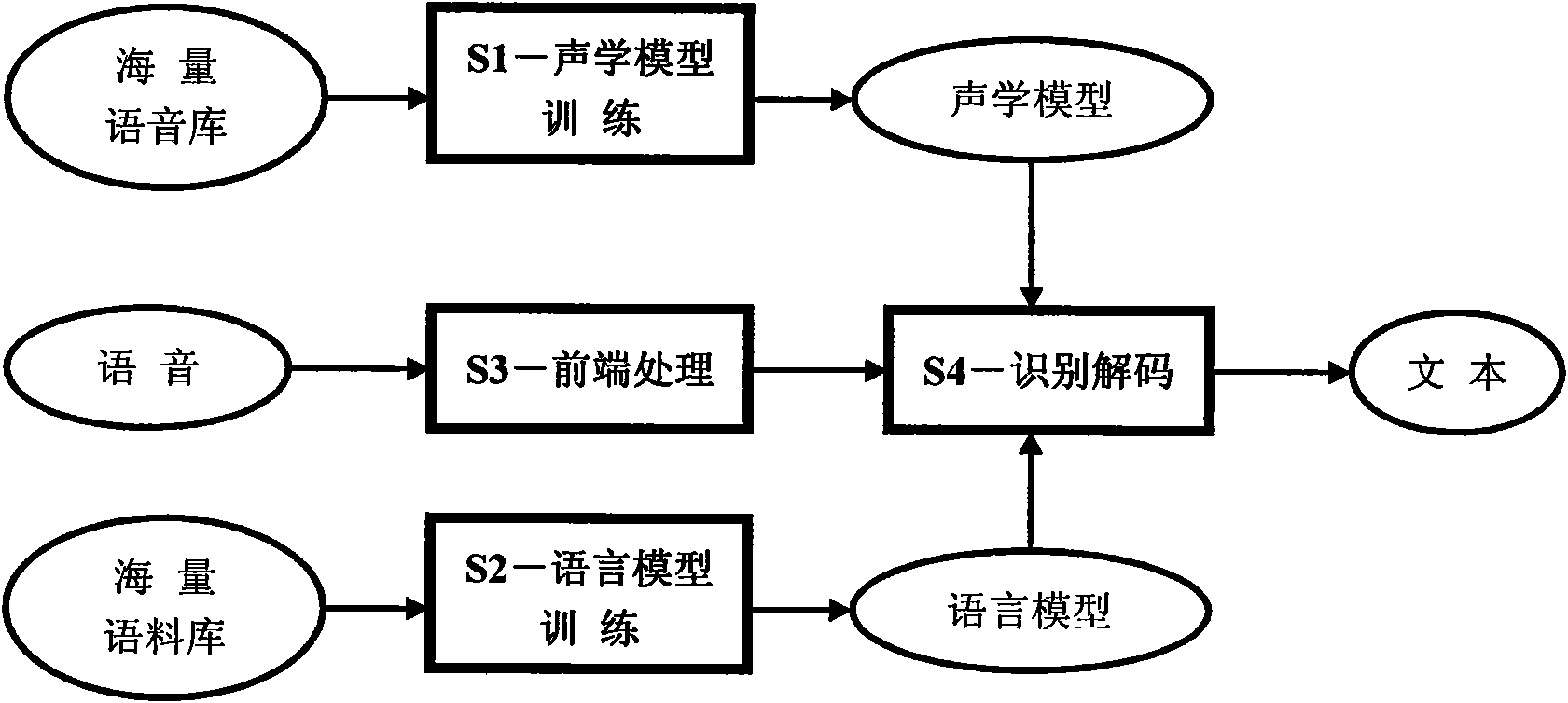

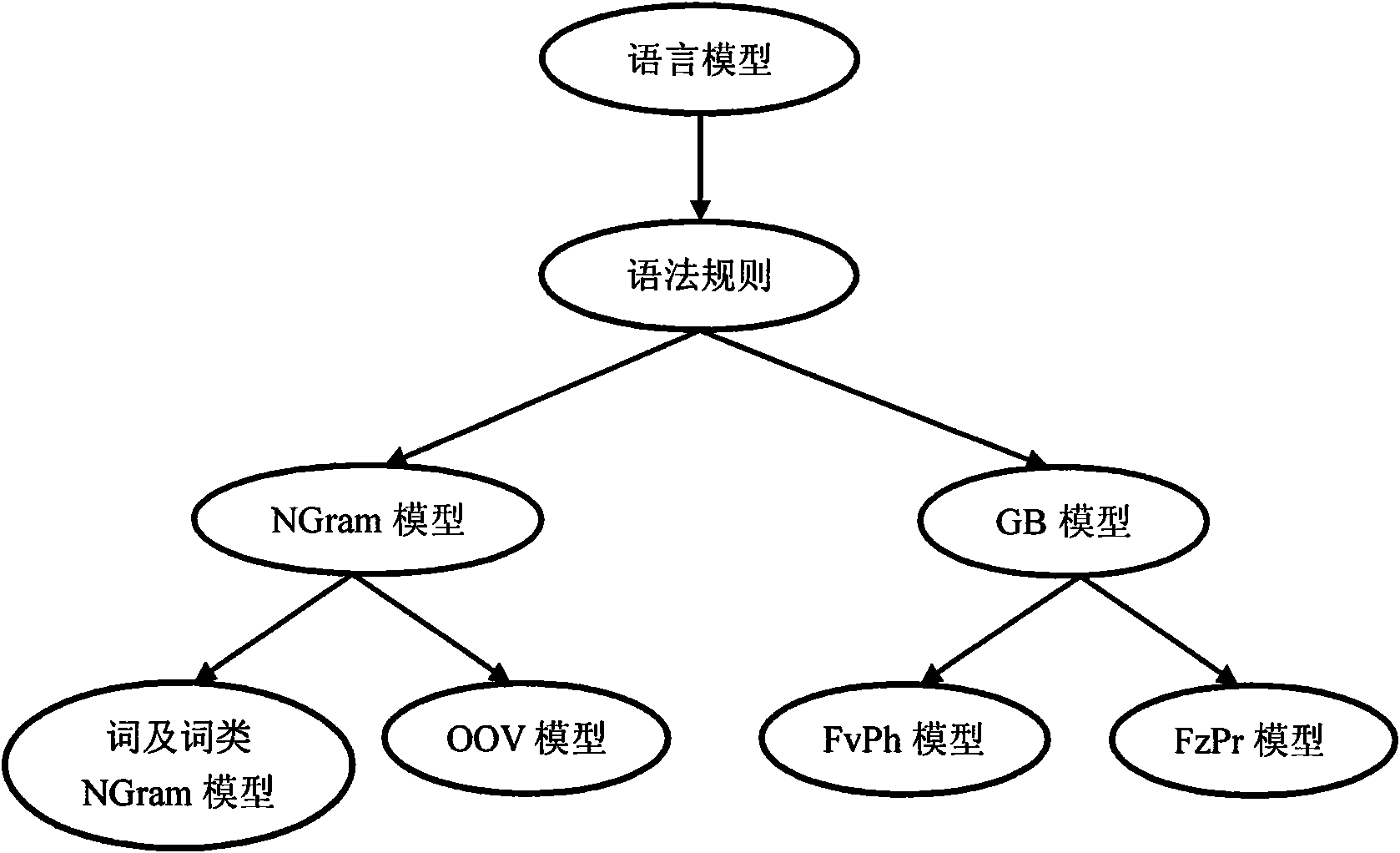

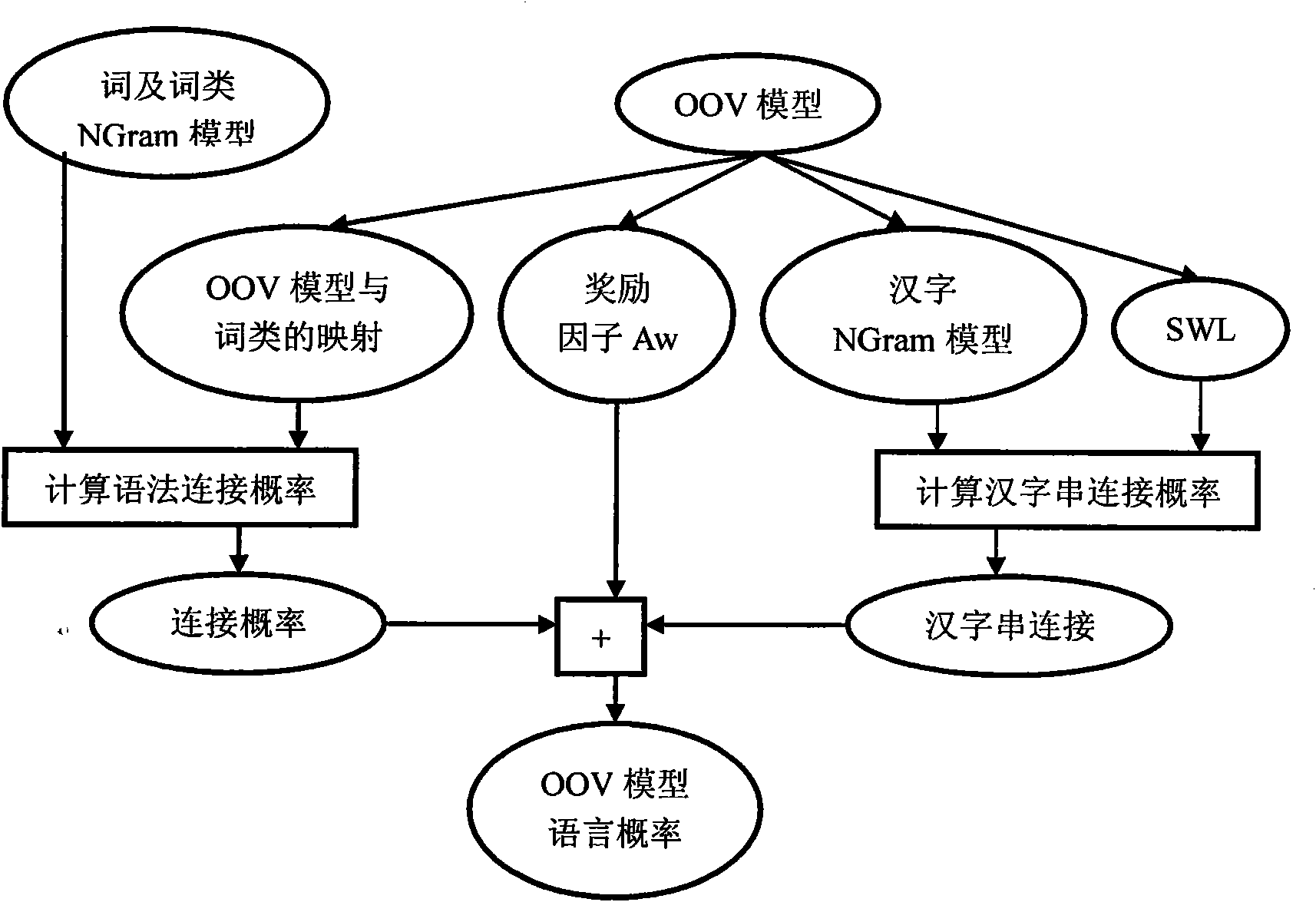

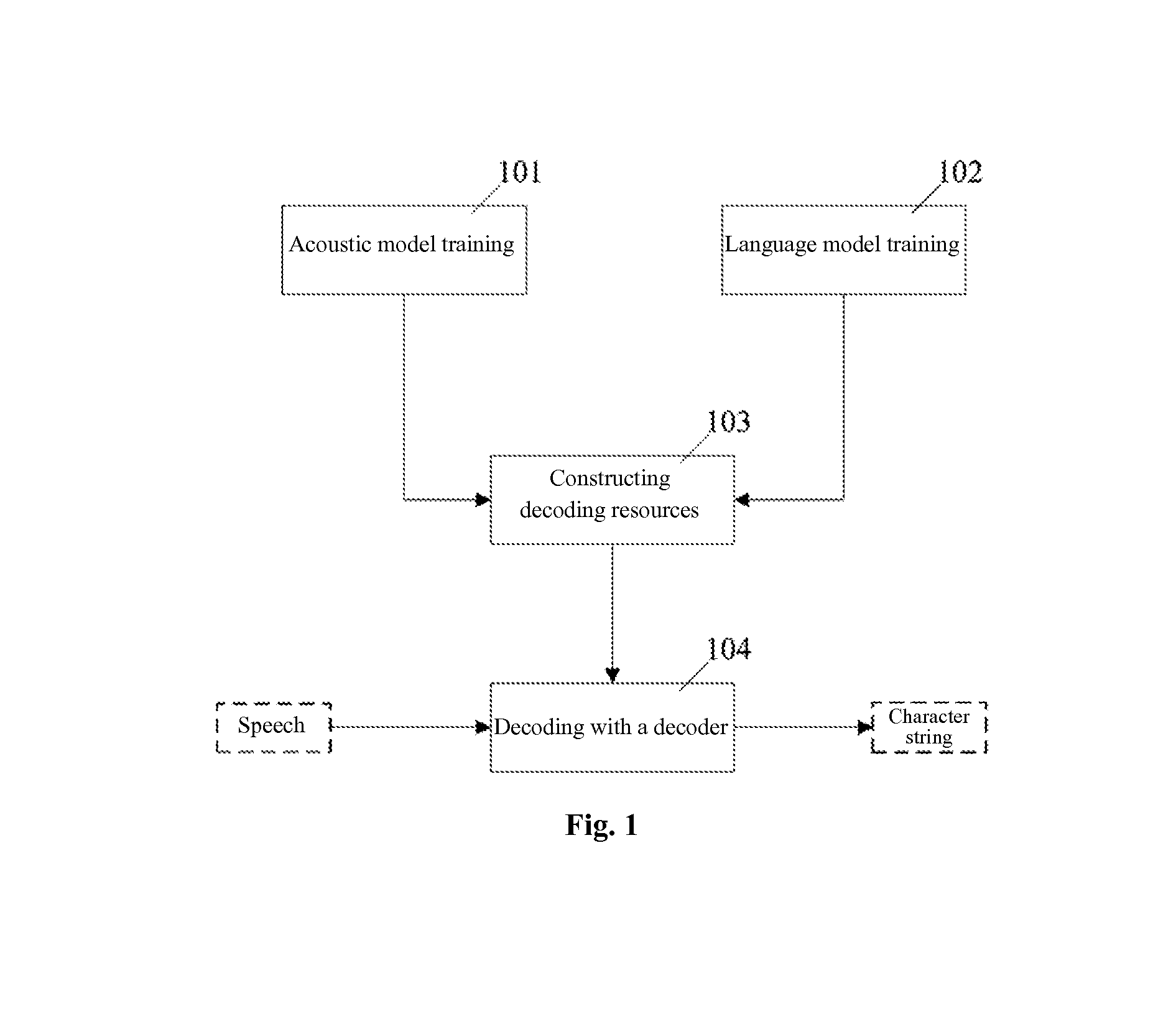

Spoken language voice recognition method based on statistic model and grammar rules

Status: Inactive | Publication: CN101604520A | Tags: Eliminate non-stationary noise; Speech recognition; Spoken language; Speech identification

The invention provides a voice recognition method based on a statistical language model combined with grammar rules, oriented to spoken language recognition applications. The invention comprises acoustic model training, language model training, front-end processing, and recognition decoding. Based on an N-gram statistical model and a grammar rule network, the language model of the invention can effectively handle phenomena such as out-of-vocabulary words, slogans, blurred pronunciation, and fast switching between sentences, so that a higher recognition rate is achieved while the naturalness of speech recognition is preserved.

Owner:北京森博克智能科技有限公司

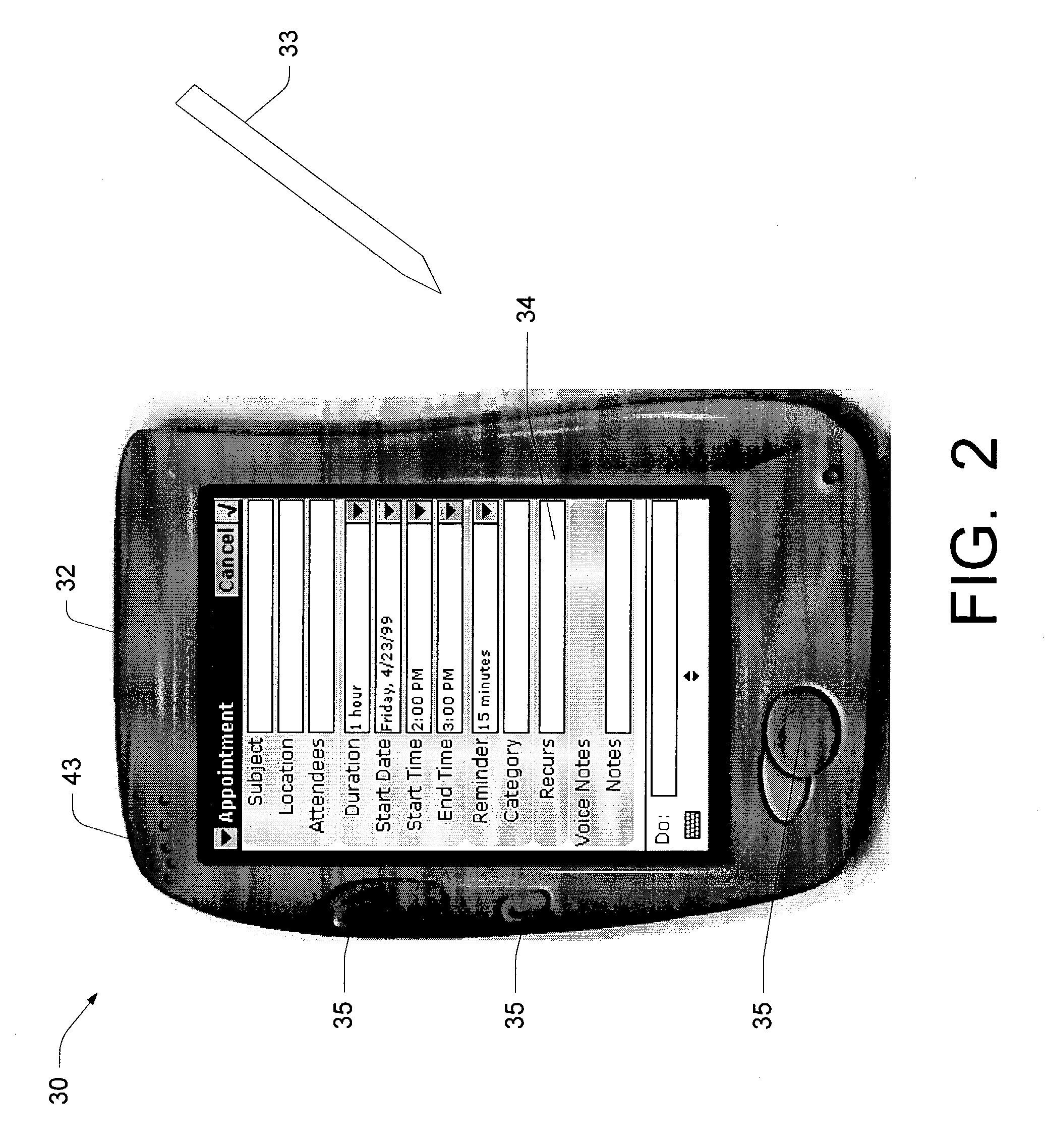

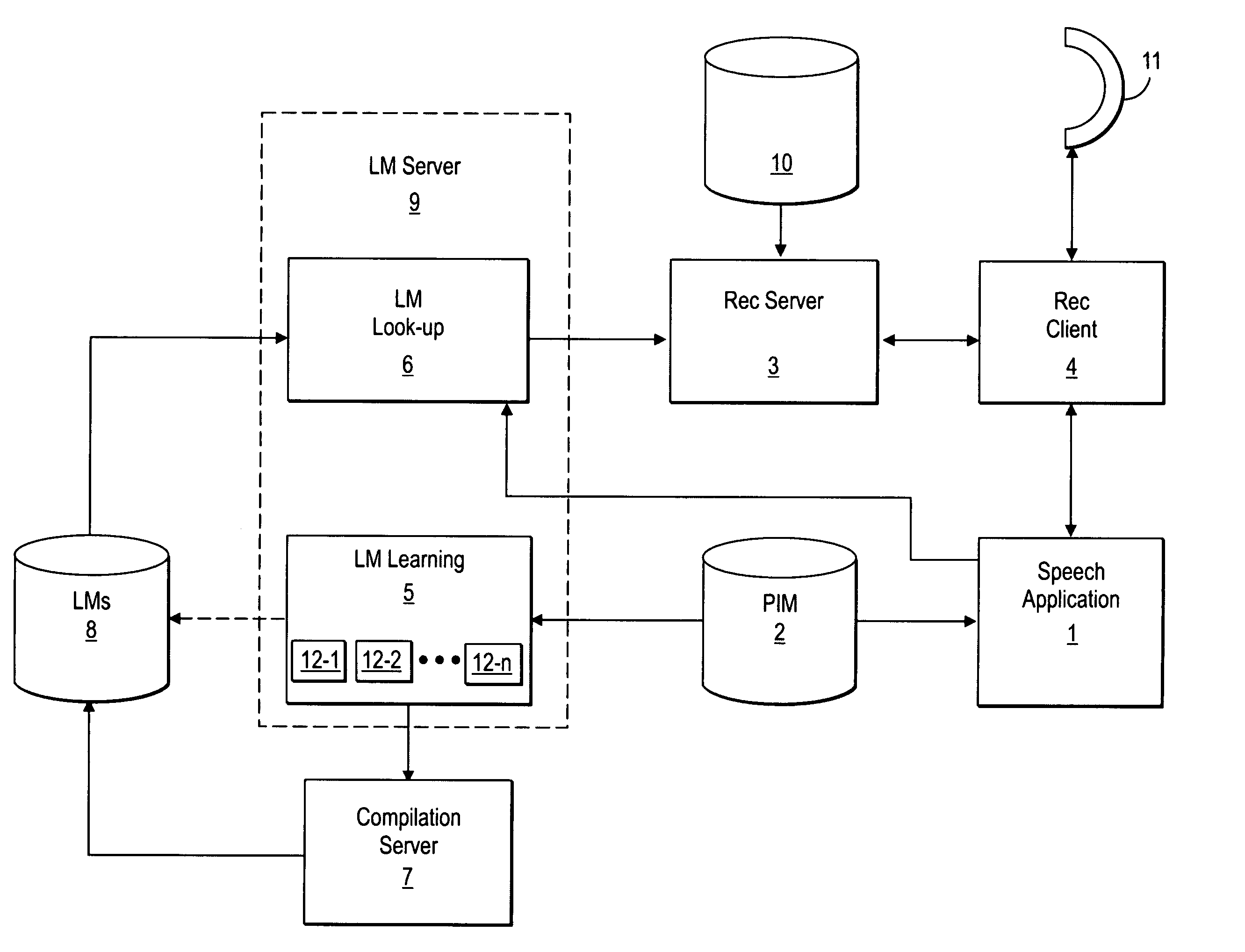

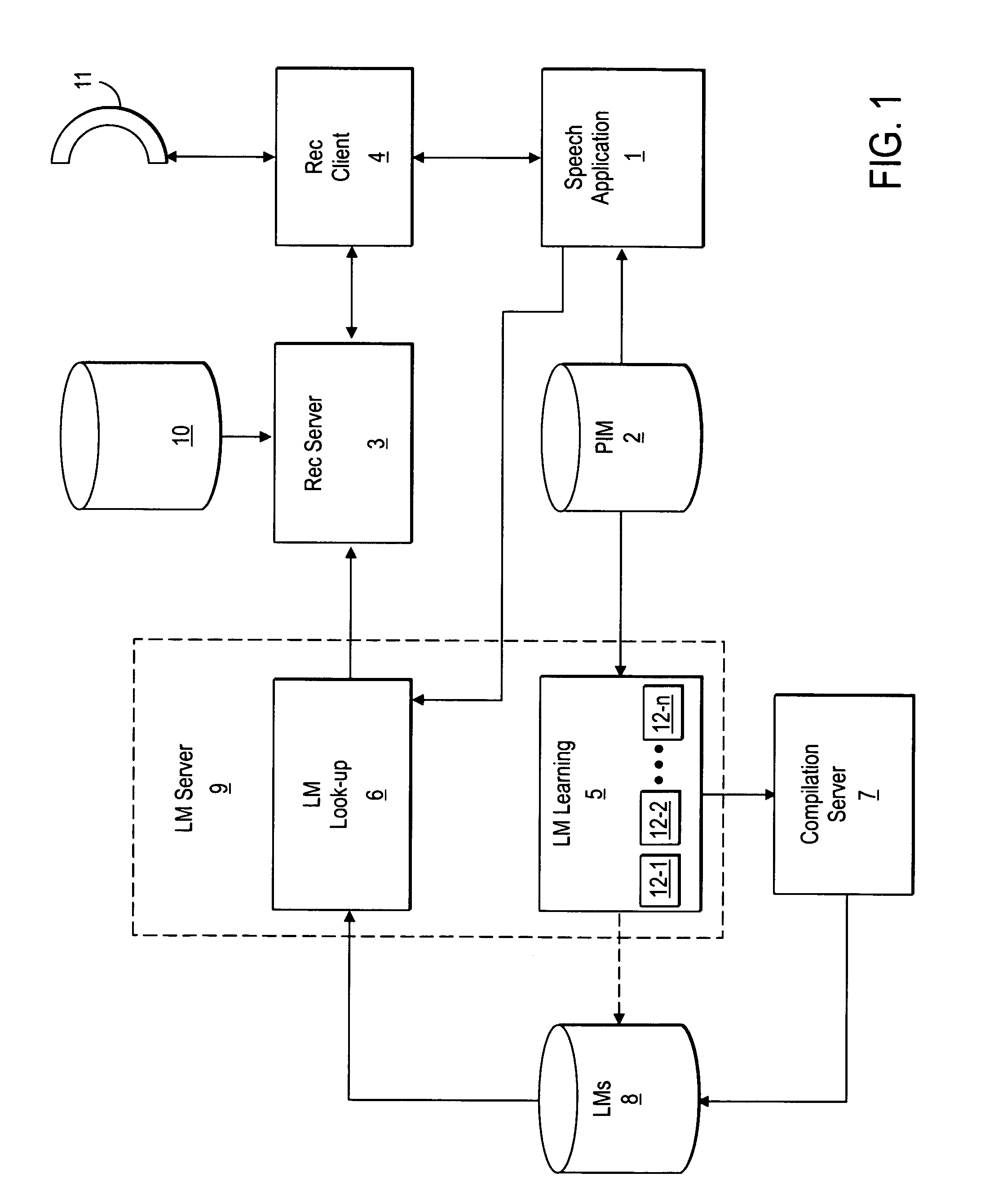

Method and apparatus for improving human-machine dialogs using language models learned automatically from personalized data

A speech-based processing system includes a database of PIM data of a user, a set of language models, a learning unit, a recognition server, and a speech application. The learning unit uses a language model learning algorithm to provide language models based on the PIM data. The recognition server recognizes an utterance of the user by using one of the language models. The speech application identifies and accesses a subset of the PIM data specified by the utterance by using the recognition result. The language model learning algorithm may use grammar induction and/or train statistical language models based on the PIM data. The language model learning algorithm may be applied to generate language models periodically or on-the-fly during a session with the user.

Owner:NUANCE COMM INC

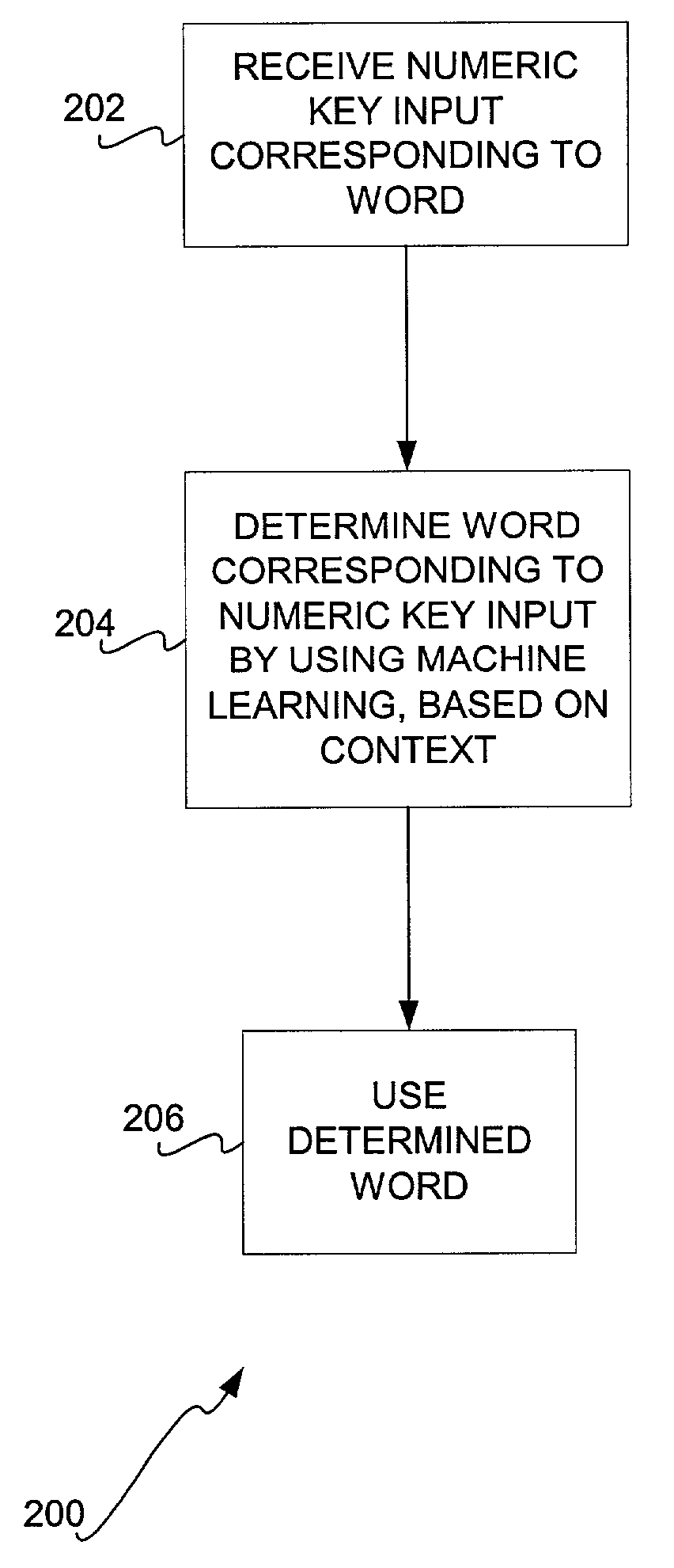

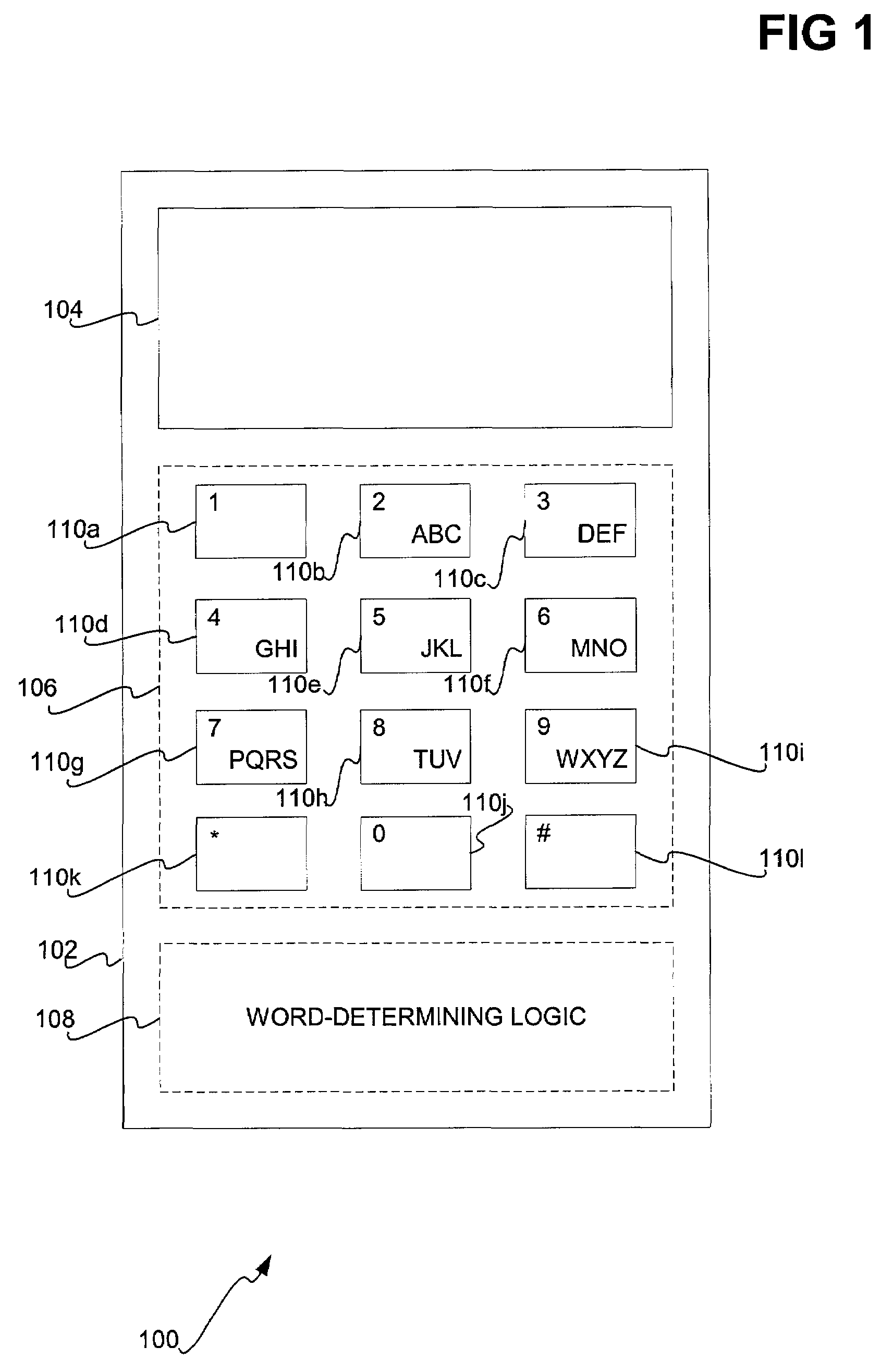

Machine learning contextual approach to word determination for text input via reduced keypad keys

Status: Active | Publication: US7103534B2 | Tags: Accurately guessing; Natural language data processing; Special data processing applications; Study methods; Learning methods

Determination of a word input on a reduced keypad, such as a numeric keypad, by entering a key sequence ambiguously corresponding to the word, by taking into account the context of the word via a machine learning approach, is disclosed. Either the left context, the right context, or the double-sided context of the number sequence can be used to determine the intended word. The machine learning approach can use a statistical language model, such as an n-gram language model. The compression of a language model for use with small devices, such as mobile phones and other types of small devices, is also disclosed.

Owner:MICROSOFT TECH LICENSING LLC
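
A minimal sketch of left-context disambiguation on a phone keypad: each candidate word matching the key sequence is ranked by a bigram probability given the preceding word, interpolated with a unigram probability. The 0.9/0.1 weights and the tiny vocabulary are assumptions; right-context and double-sided context are not shown.

```python
KEYPAD = {"2": "abc", "3": "def", "4": "ghi", "5": "jkl",
          "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz"}
LETTER_TO_KEY = {ch: key for key, letters in KEYPAD.items() for ch in letters}


def key_sequence(word):
    """Digits a user presses to type `word` on a numeric keypad."""
    return "".join(LETTER_TO_KEY[ch] for ch in word)


def disambiguate(keys, left_word, unigram, bigram, bigram_weight=0.9):
    """Rank vocabulary words matching `keys` by an interpolated bigram/unigram score."""
    candidates = [w for w in unigram if key_sequence(w) == keys]

    def score(word):
        return (bigram_weight * bigram.get((left_word, word), 0.0)
                + (1 - bigram_weight) * unigram[word])

    return sorted(candidates, key=score, reverse=True)
```

The key sequence `4663` spells *good*, *home*, and *gone*; with a bigram table that favours *go home*, a preceding *go* moves *home* to the top even though *good* is the more frequent word overall.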

Dialect-specific acoustic language modeling and speech recognition

Methods and systems for automatic speech recognition and methods and systems for training acoustic language models are disclosed. In accordance with one automatic speech recognition method, an acoustic input data set is analyzed to identify portions of the input data set that conform to a general language and to identify portions of the input data set that conform to at least one dialect of the general language. In addition, a general language model and at least one dialect language model are applied to the input data set to perform speech recognition by dynamically selecting between the models in accordance with each of the identified portions. Further, the speech recognition results obtained by applying the models are output.

Owner:IBM CORP

Speech recognition device and method of recognizing speech using a language model

Status: Active | Publication: US7848927B2 | Tags: Eliminate uncomfortable feeling; Increase capacity; Speech recognition; Speech identification; Speech sound

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA

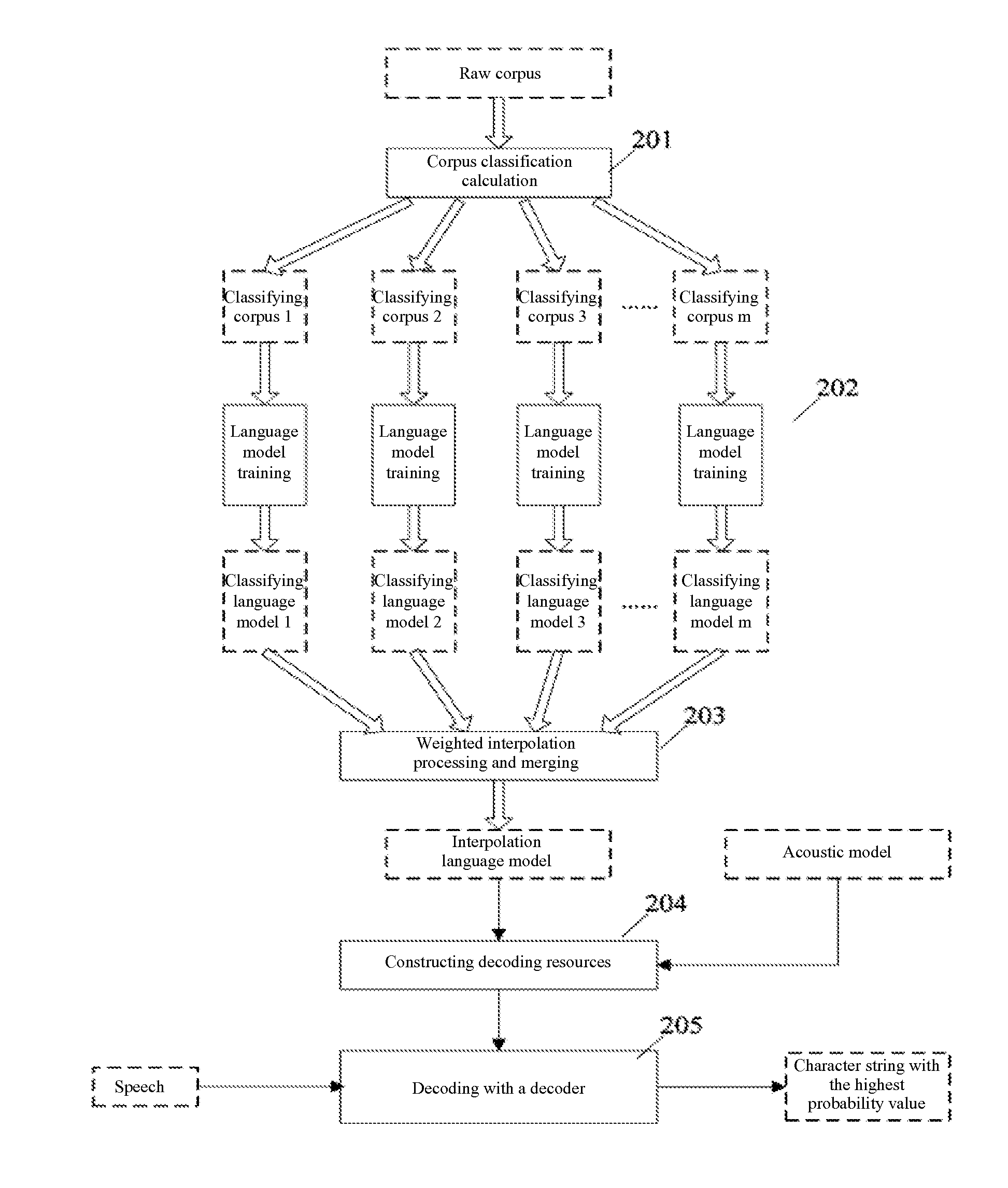

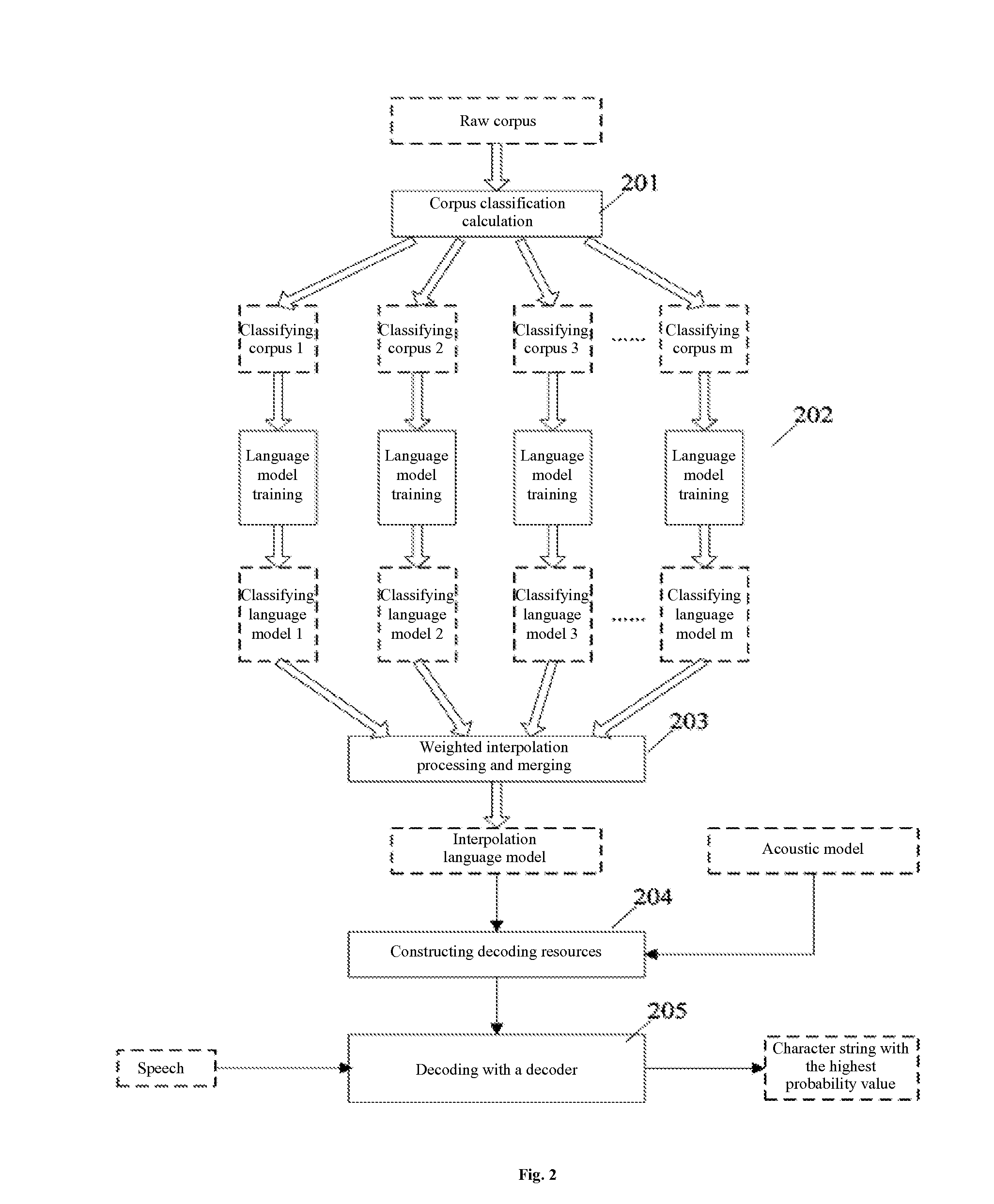

Method and system for automatic speech recognition

An automatic speech recognition method includes, at a computer having one or more processors and memory storing one or more programs to be executed by the processors: obtaining a plurality of speech corpus categories by classifying and calculating a raw speech corpus; obtaining a plurality of classified language models, each corresponding to one of the speech corpus categories, by applying language model training to each speech corpus category; obtaining an interpolation language model by performing weighted interpolation on each classified language model and merging the interpolated classified language models; constructing a decoding resource in accordance with an acoustic model and the interpolation language model; and decoding input speech using the decoding resource and outputting the character string with the highest probability as the recognition result for the input speech.

Owner:TENCENT TECH (SHENZHEN) CO LTD

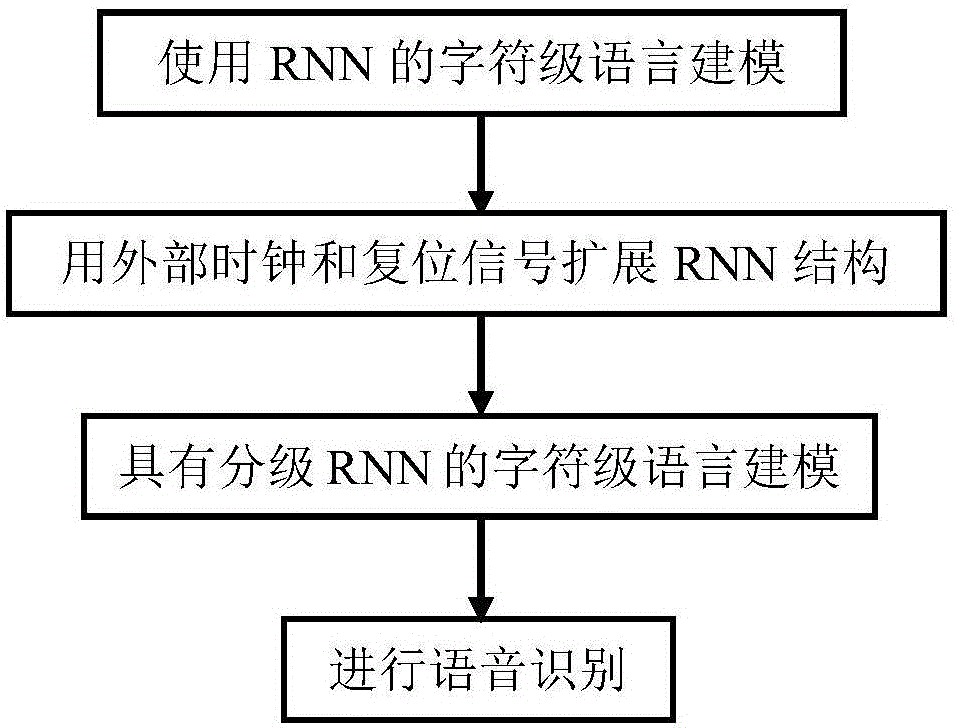

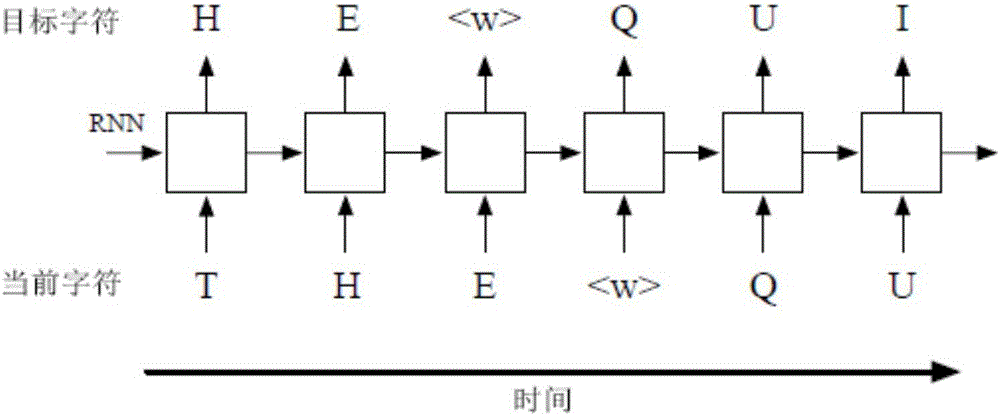

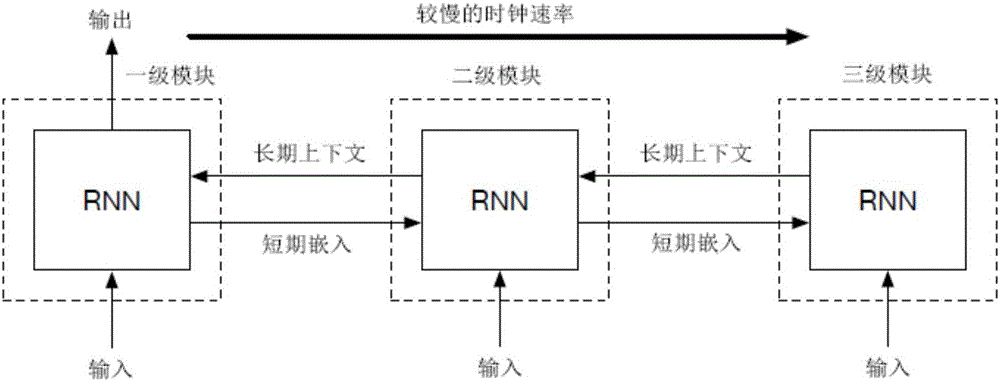

Voice recognition method based on layered circulation neural network language model

Status: Inactive | Publication: CN106782518A | Tags: Speech recognition; Neural learning methods; Speech identification; Process information

The invention provides a voice recognition method based on a hierarchical recurrent neural network language model. The method mainly comprises the steps of character-level language modeling using an RNN, extending the RNN structure with an external clock and a reset signal, character-level language modeling with a hierarchical RNN, and speech recognition. Because the traditional single-clock RNN character-level language model is replaced by a language model based on a hierarchical recurrent neural network, high recognition accuracy is achieved with fewer parameters; the language model can cover a very large vocabulary while requiring little storage space; and the hierarchical language model can be extended to process information spanning long periods, such as sentences, topics, or other contexts.

Owner:SHENZHEN WEITESHI TECH

Automatic speech recognition method and apparatus

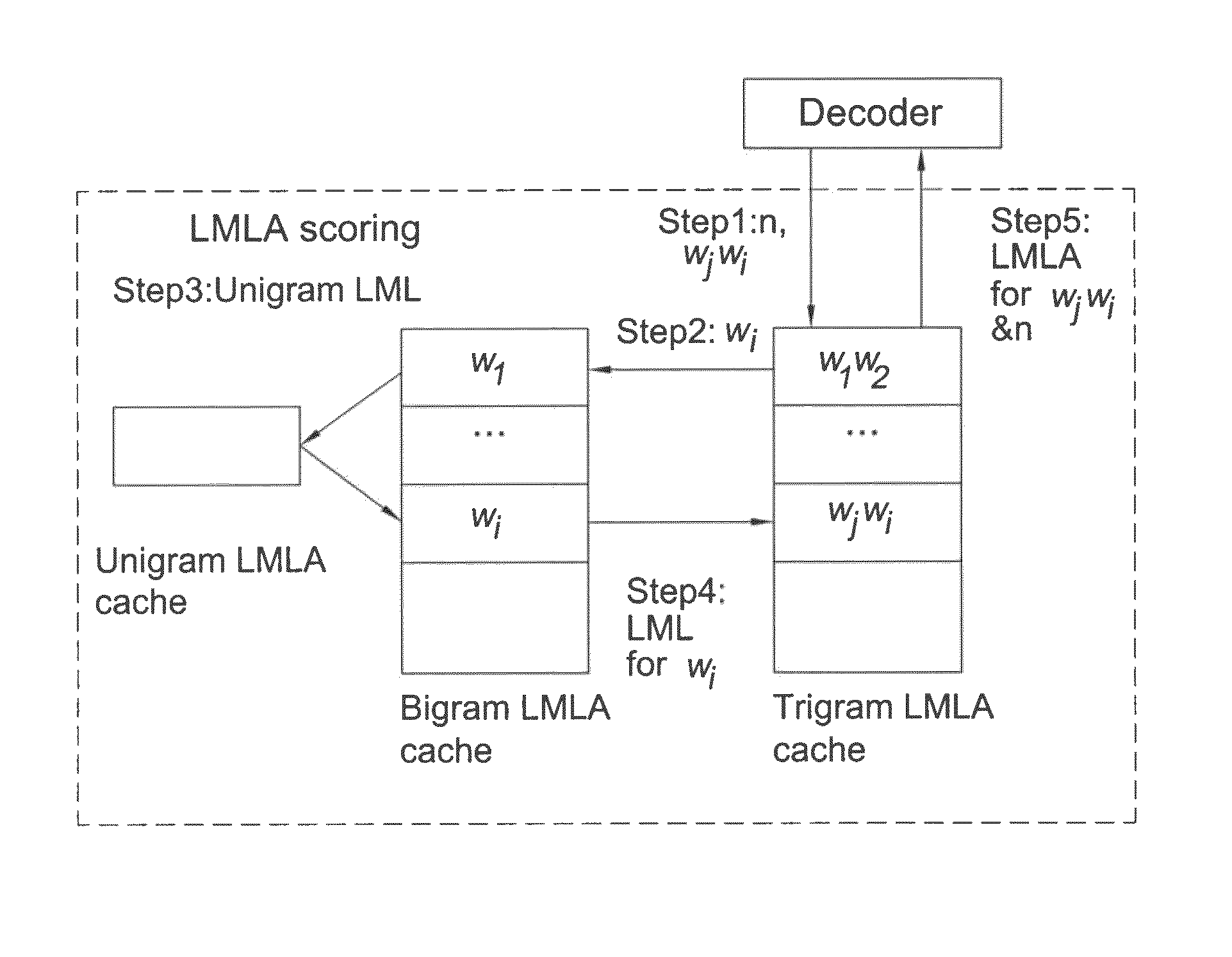

Status: Active | Publication: US8311825B2 | Tags: Reduce in quantity; Reduce memory cost; Speech recognition; Special data processing applications; Higher order languages; Algorithm

A system for calculating the look-ahead probabilities at the nodes in a language model look-ahead tree, wherein the words of the vocabulary of the language are located at the leaves of the tree, said apparatus comprising: means to assign a language model probability to each of the words of the vocabulary using a first low-order language model; means to calculate the language look-ahead probabilities for all nodes in said tree using said first language model; means to determine if the language model probability of one or more words of said vocabulary can be calculated using a higher-order language model and updating said words with the higher-order language model; and means to update the look-ahead probability at only the nodes which are affected by the words where the language model has been updated.

Owner:TOSHIBA DIGITAL SOLUTIONS CORP
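
The claimed look-ahead scheme can be sketched as follows: a node's look-ahead probability is the maximum probability of any word below it, computed first from a low-order model, and when higher-order probabilities become available for some words, only those words' ancestors are recomputed. The tree representation and helper names are hypothetical.

```python
def parents_of(children):
    """Invert a node -> children map into a child -> parent map."""
    return {child: node for node, kids in children.items() for child in kids}


def build_lookahead(children, root, word_prob):
    """Look-ahead probability of every node = max probability of the words (leaves) below it."""
    lookahead = {}

    def visit(node):
        if node not in children:                 # a leaf holds one vocabulary word
            lookahead[node] = word_prob[node]
        else:
            lookahead[node] = max(visit(child) for child in children[node])
        return lookahead[node]

    visit(root)
    return lookahead


def update_lookahead(lookahead, children, parent, new_probs):
    """Overwrite some words with higher-order probabilities, then recompute only their ancestors.
    Returns the set of internal nodes that were recomputed."""
    for word, p in new_probs.items():
        lookahead[word] = p
    touched = set()
    for word in new_probs:
        node = parent.get(word)
        while node is not None:                  # walk up to the root
            # shared ancestors may be recomputed more than once; the last pass sees final values
            lookahead[node] = max(lookahead[child] for child in children[node])
            touched.add(node)
            node = parent.get(node)
    return touched
```

Since a typical higher-order context only has explicit probabilities for a small fraction of the vocabulary, restricting the update to the ancestors of those words is where the saving comes from.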

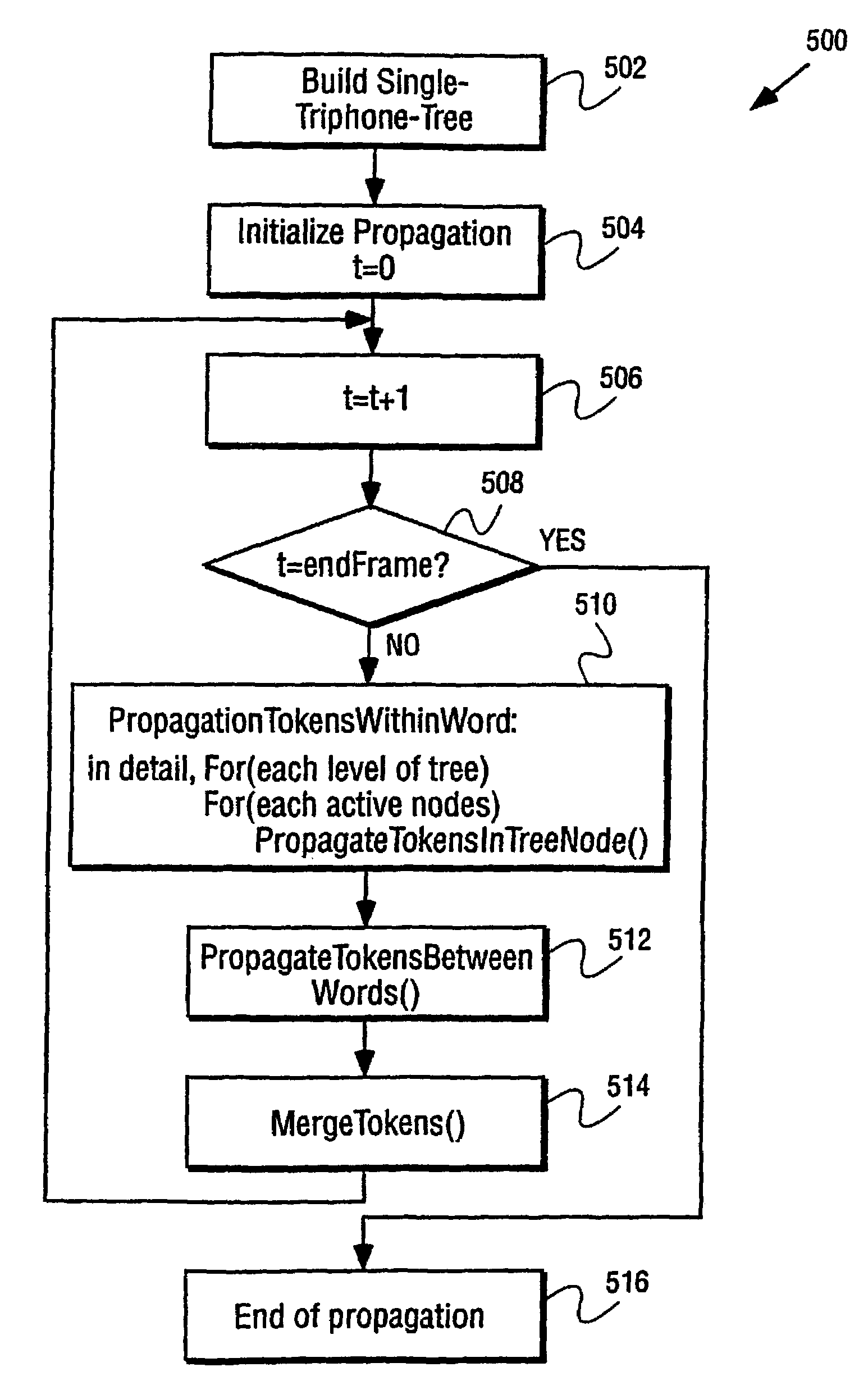

Search method based on single triphone tree for large vocabulary continuous speech recognizer

Status: Inactive | Publication: US6980954B1 | Tags: Improve accuracy; Shorten the time; Natural language data processing; Speech recognition; Speech identification; Speech sound

A search method based on a single triphone tree for a large vocabulary continuous speech recognizer is disclosed, in which speech signals are received. Tokens are propagated in a phonetic tree to integrate a language model to recognize the received speech signals. By propagating tokens, which are preserved in tree nodes and record the path history, a single triphone tree can be used in a one-pass searching process, thereby reducing speech recognition processing time and system resource use.

Owner:INTEL CORP

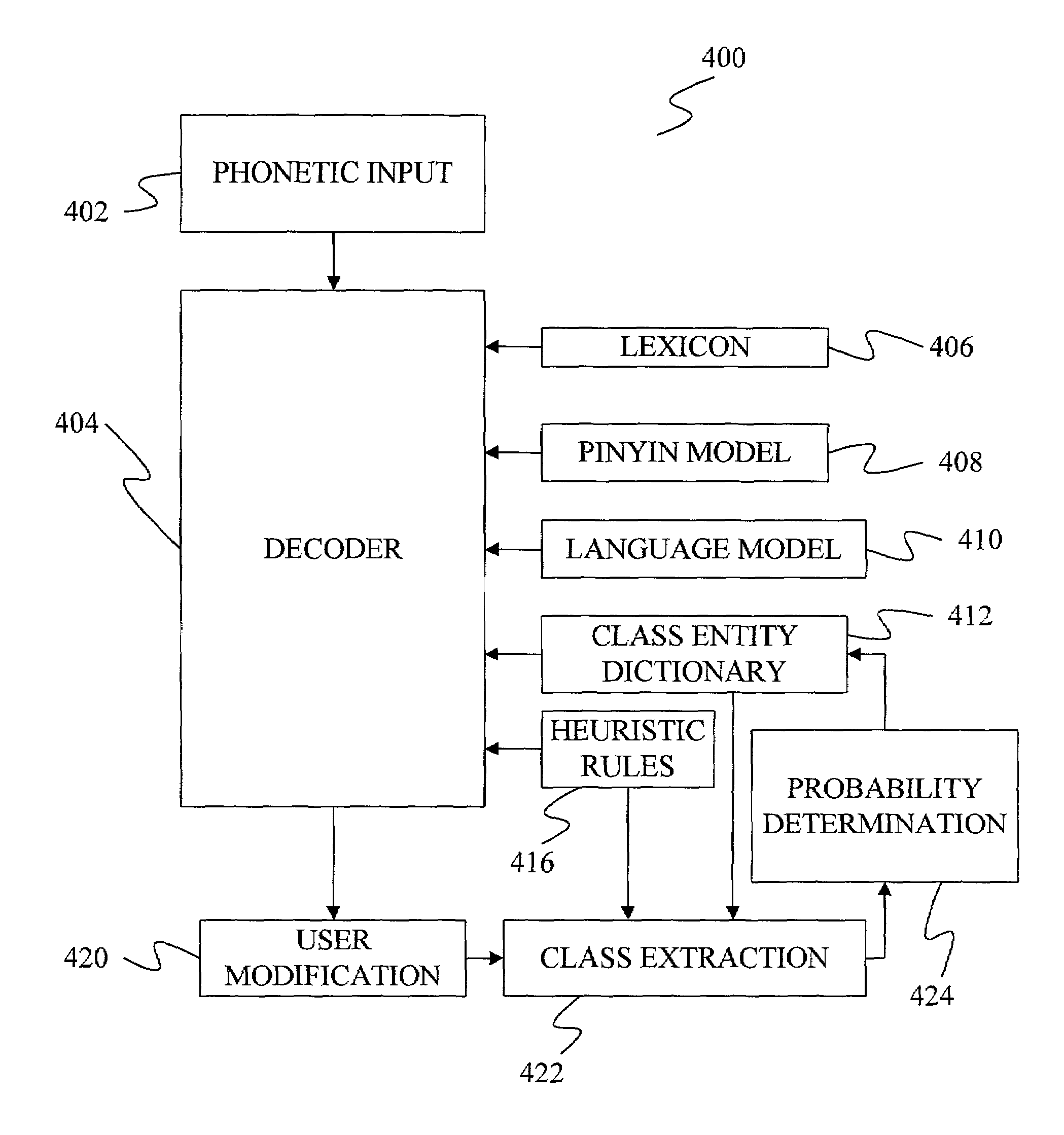

Method and apparatus for adapting a class entity dictionary used with language models

A method and apparatus are provided for augmenting a language model with a class entity dictionary based on corrections made by a user. Under the method and apparatus, a user corrects an output that is based in part on the language model by replacing an output segment with a correct segment. The correct segment is added to a class of segments in the class entity dictionary and a probability of the correct segment given the class is estimated based on an n-gram probability associated with the output segment and an n-gram probability associated with the class. This estimated probability is then used to generate further outputs.

Owner:MICROSOFT TECH LICENSING LLC

Discriminative training of language models for text and speech classification

Methods are disclosed for estimating language models such that the conditional likelihood of a class given a word string, which is very well correlated with classification accuracy, is maximized. The methods comprise tuning statistical language model parameters jointly for all classes such that a classifier discriminates between the correct class and the incorrect ones for a given training sentence or utterance. Specific embodiments of the present invention pertain to implementation of the rational function growth transform in the context of a discriminative training technique for n-gram classifiers.

Owner:MICROSOFT TECH LICENSING LLC

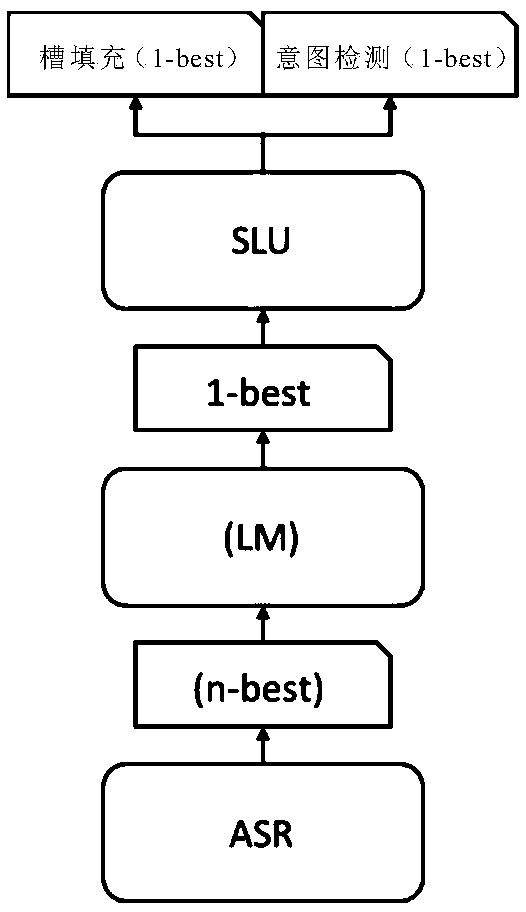

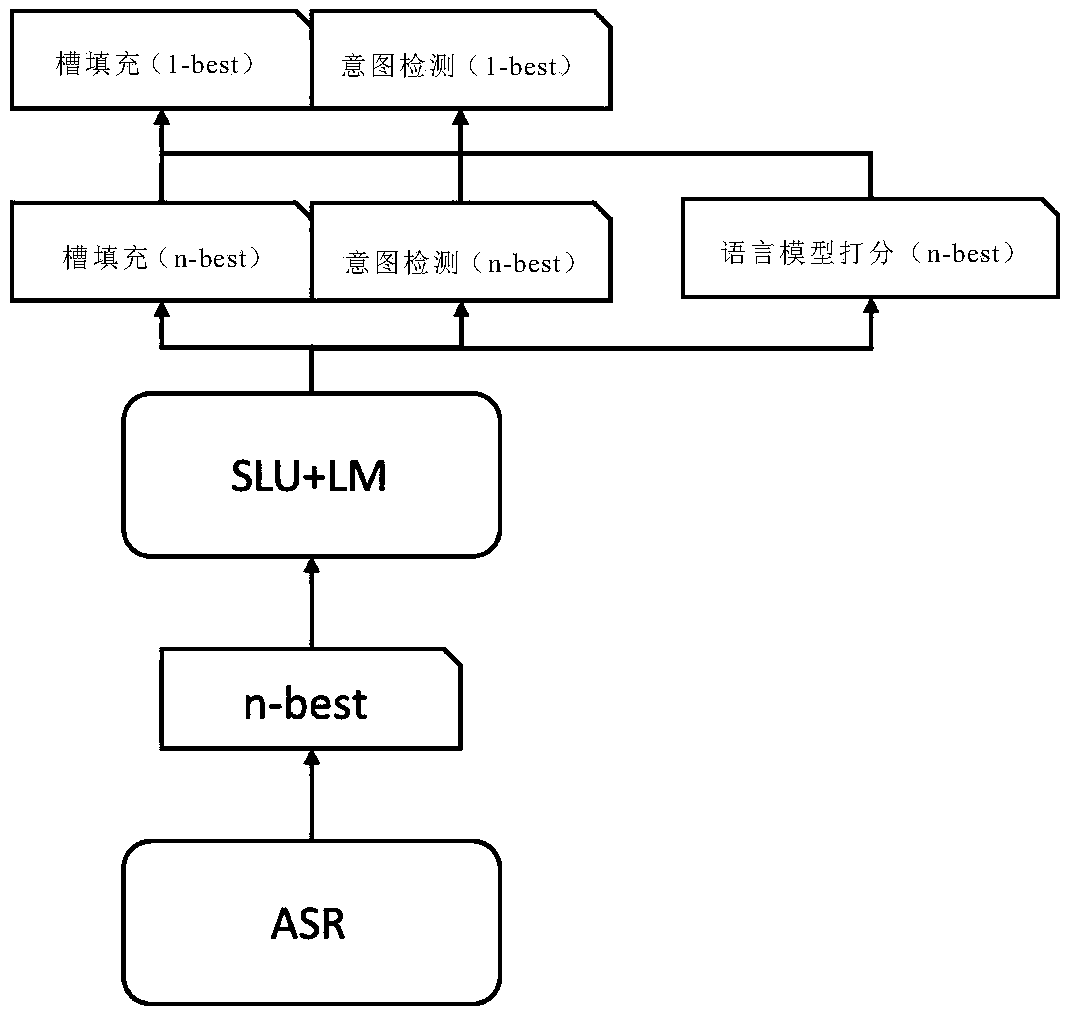

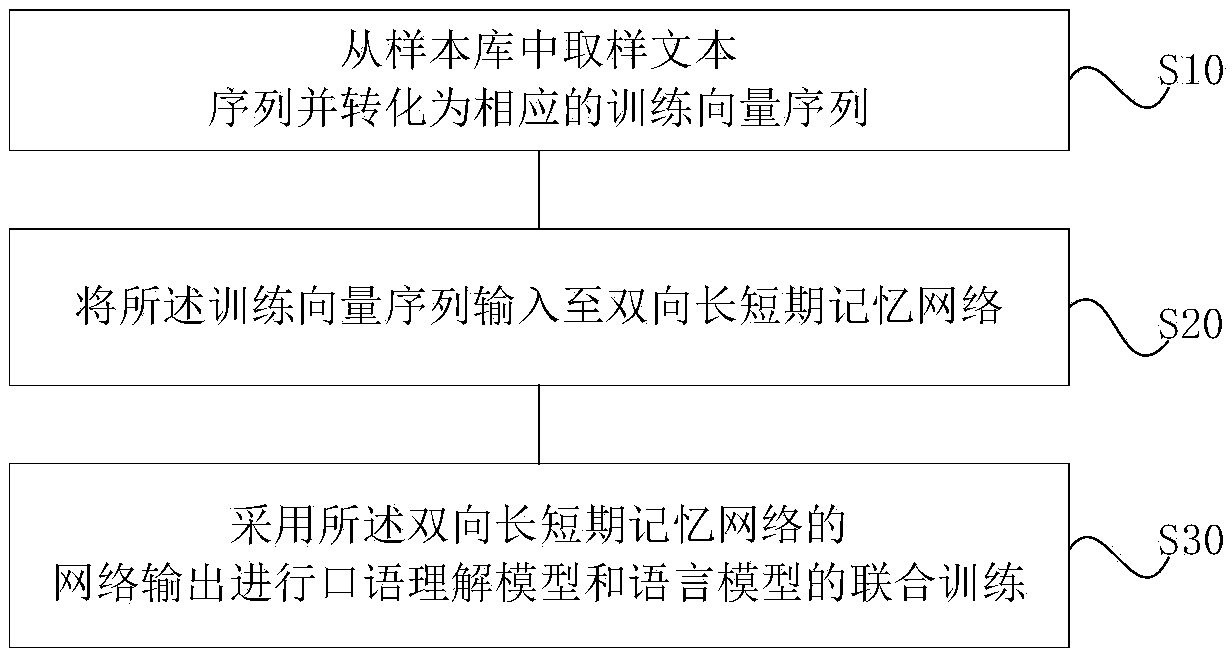

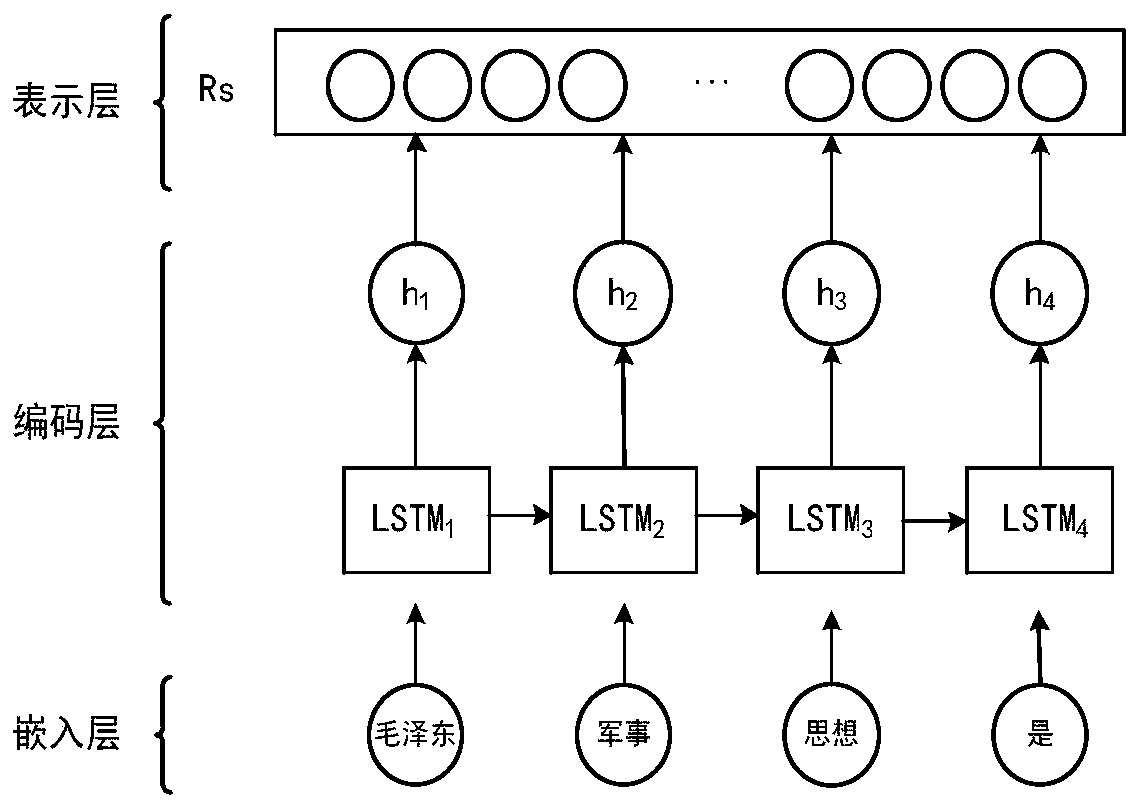

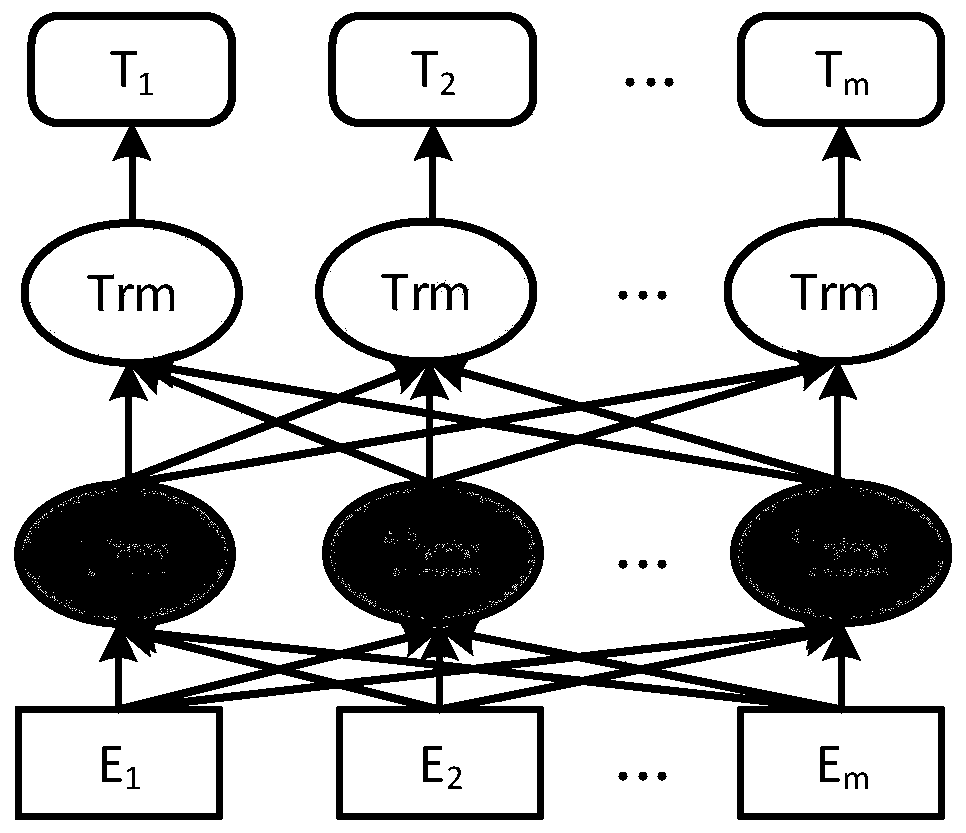

Joint modeling method for spoken language understanding model and language model and dialogue method

Status: Active | Publication: CN108962224A | Tags: Achieve sharing; Improve robustness; Speech recognition; Language understanding; Short-term memory

The invention discloses a joint modeling method for a spoken language understanding model and a language model, which comprises the steps of sampling a text sequence from a sample library and transforming the text sequence into a corresponding training vector sequence; inputting the training vector sequence into a bidirectional long short-term memory network; performing joint training of the spoken language understanding model and the language model using the output of the bidirectional long short-term memory network; and extracting text feature information from the training vector sequence with the bidirectional long short-term memory network for the joint training of the spoken language understanding model and the language model, thereby sharing semantic and grammatical feature information between the spoken language understanding model and the language model.

Owner:AISPEECH CO LTD

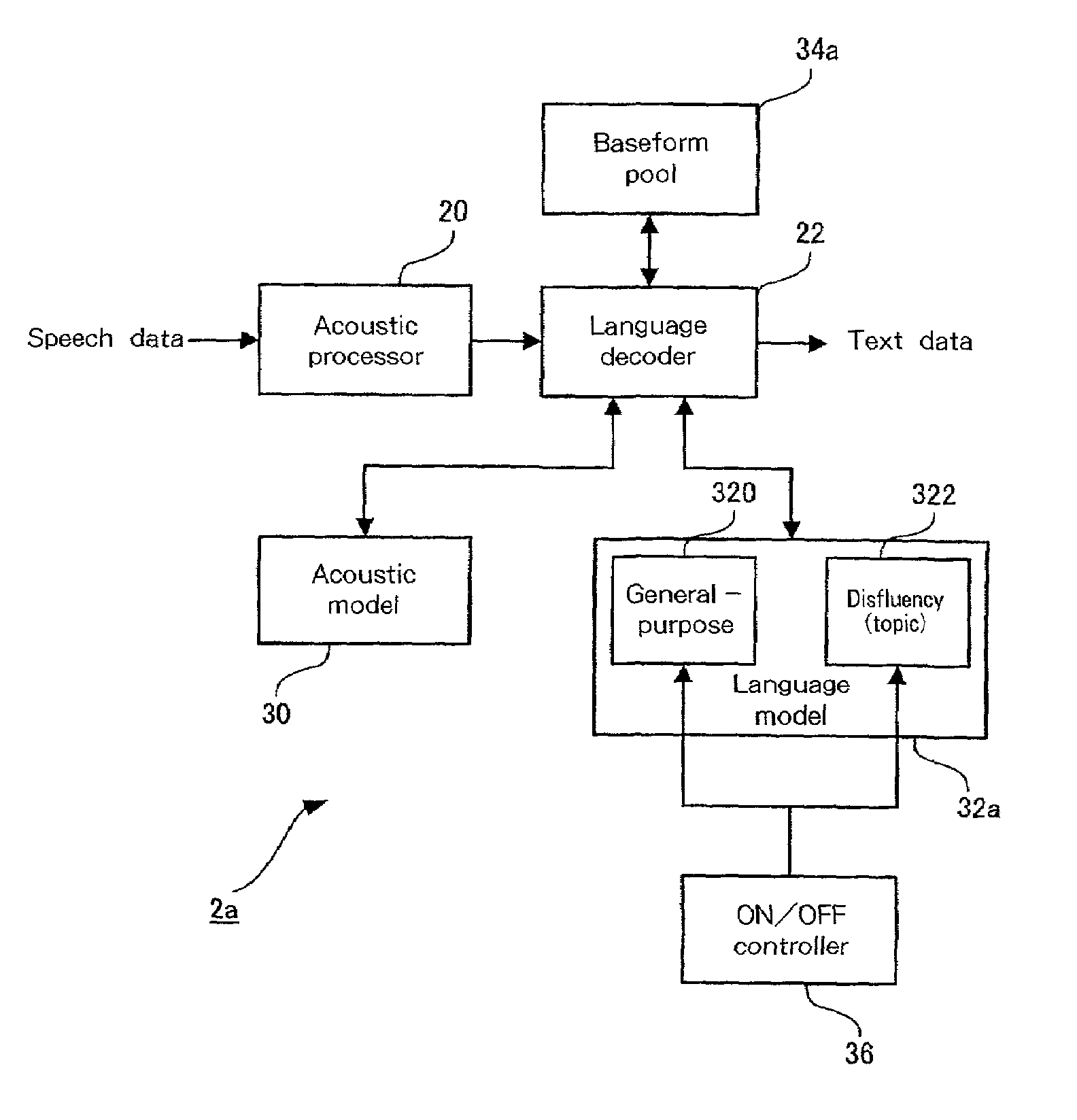

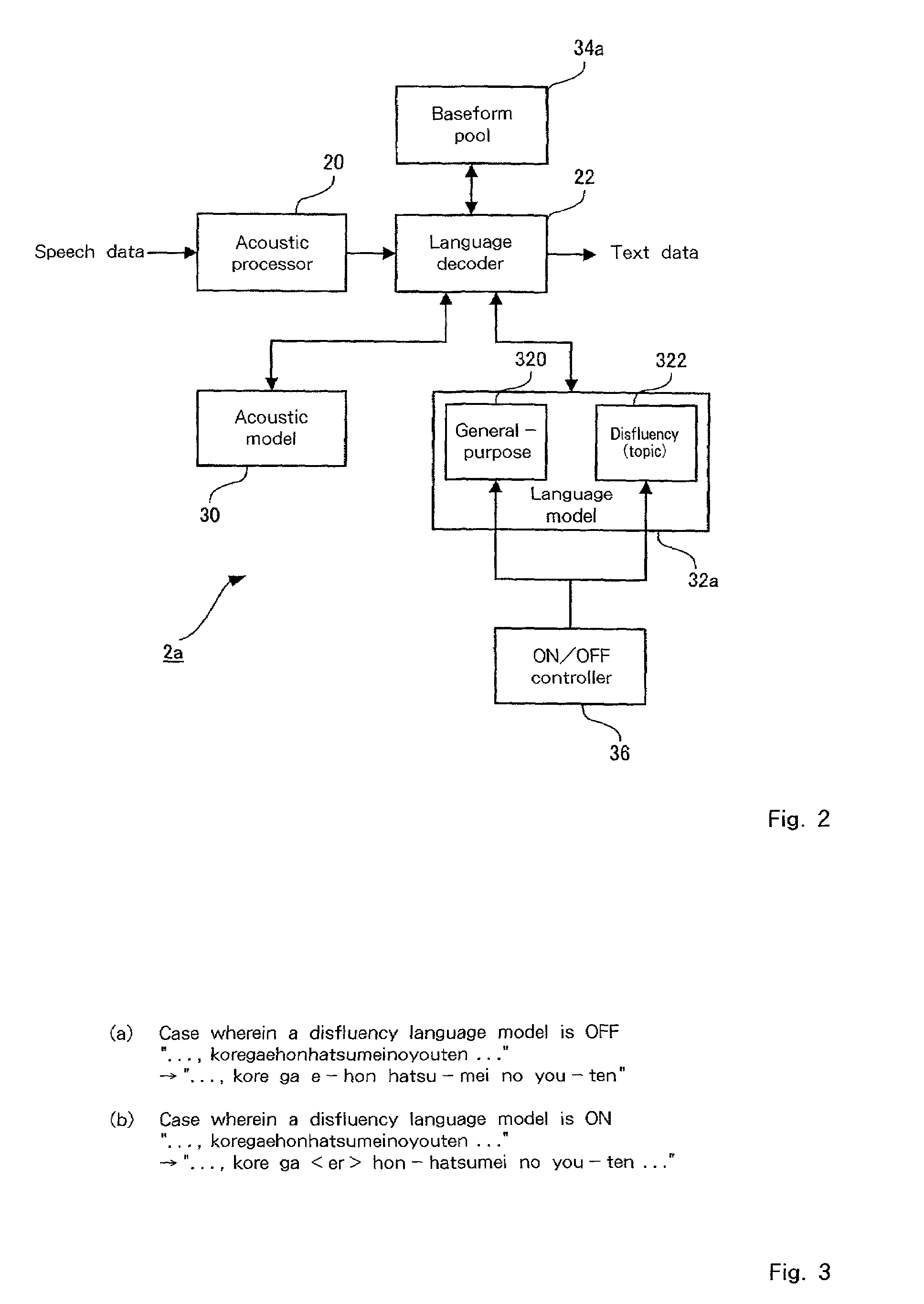

Speech recognition apparatus and method utilizing a language model prepared for expressions unique to spontaneous speech

Status: Active | Publication: US6985863B2 | Tags: Facilitate automatic removal; Easy to distinguish; Sound input/output; Speech recognition; Speech identification; Speech sound

A speech recognition apparatus can include a transformation processor configured to transform at least one phoneme sequence included in speech into at least one word sequence, and to provide the word sequence with an appearance probability indicating that the phoneme sequence originally represented the word sequence. A renewal processor can renew the appearance probability based on a renewed numerical value indicated by language models corresponding to the word sequence provided by the transformation processor. A recognition processor can select one of the word sequences for which the renewed appearance probability is the highest to indicate that the phoneme sequence originally represented the selected word sequence. The renewal processor can calculate the renewed numerical value using a first language model prepared for expressions unique to spontaneous speech, and a second language model different from the first which employs the renewed numerical value to renew the appearance probability.

Owner:NUANCE COMM INC

Unsupervised text similarity calculation method

Status: Active | Publication: CN110532557A | Tags: Improve accuracy; High precision; Character and pattern recognition; Neural architectures; Algorithm; Text matching

The invention relates to an unsupervised text similarity calculation method comprising the following steps: step 1, pre-training an embedding layer model, in which all words in a question set are pre-trained to generate word vectors that meet the model's requirements; step 2, mining the semantic information of sentences through an encoding layer network; step 3, improving the model by fusing TF-IDF: when each question sentence is input into the neural network, TF-IDF is calculated for that sentence and the calculated weights are fed into the neural network to control the final sentence vector representation, using a normalized TF-IDF calculation that is fused into the encoding layer and the representation layer. The method uses a deep neural network model (Bi-LSTM) trained without supervision on the corpus to obtain a language model; unsupervised training makes full use of the information in a large-scale corpus, improving both text matching accuracy and information retrieval precision.

Owner:BEIJING INST OF COMP TECH & APPL
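
The normalized TF-IDF weighting in step 3 can be sketched as a weighted average of word vectors. Here toy two-dimensional vectors stand in for the Bi-LSTM encoder outputs, and the smoothed IDF formula `log((n + 1) / (df + 1)) + 1` is an assumed variant; the abstract does not give its exact formula.

```python
import math
from collections import Counter


def tfidf_weights(sentence, corpus):
    """Normalised TF-IDF weight of each token in `sentence`; the weights sum to 1."""
    tf = Counter(sentence)
    n_docs = len(corpus)
    raw = {}
    for word, count in tf.items():
        df = sum(1 for doc in corpus if word in doc)        # documents containing the word
        idf = math.log((n_docs + 1) / (df + 1)) + 1         # smoothed IDF (assumed variant)
        raw[word] = (count / len(sentence)) * idf
    total = sum(raw.values())
    return {word: value / total for word, value in raw.items()}


def sentence_vector(sentence, corpus, word_vectors):
    """TF-IDF-weighted sum of word vectors (stand-ins for encoder outputs)."""
    weights = tfidf_weights(sentence, corpus)
    dim = len(next(iter(word_vectors.values())))
    vec = [0.0] * dim
    for word, weight in weights.items():
        for i, x in enumerate(word_vectors[word]):
            vec[i] += weight * x
    return vec


def cosine(a, b):
    """Cosine similarity used to compare two sentence vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0
```

Words that occur in every question (such as "how" and "to") receive the lowest weight, so the sentence vector leans toward the distinctive words that actually separate one question from another.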

Hierarchical language models

Status: Inactive | Publication: CN1535460A | Tags: Speech recognition; Special data processing applications; Voice transformation; Speech sound

The invention disclosed herein concerns a method of converting speech to text using a hierarchy of contextual models. The hierarchy of contextual models can be statistically smoothed into a language model. The method can include processing text with a plurality of contextual models. Each one of the plurality of contextual models can correspond to a node in a hierarchy of the plurality of contextual models. Also included can be identifying at least one of the contextual models relating to the text and processing subsequent user spoken utterances with the identified at least one contextual model.

Owner:NUANCE COMM INC

Language model training method and device

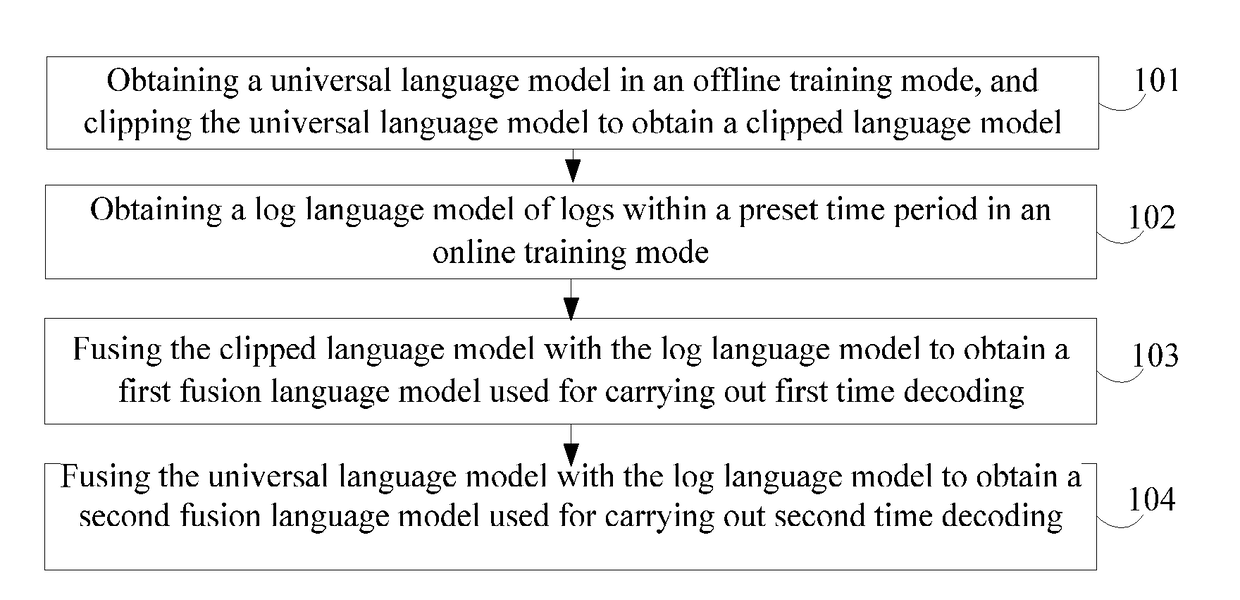

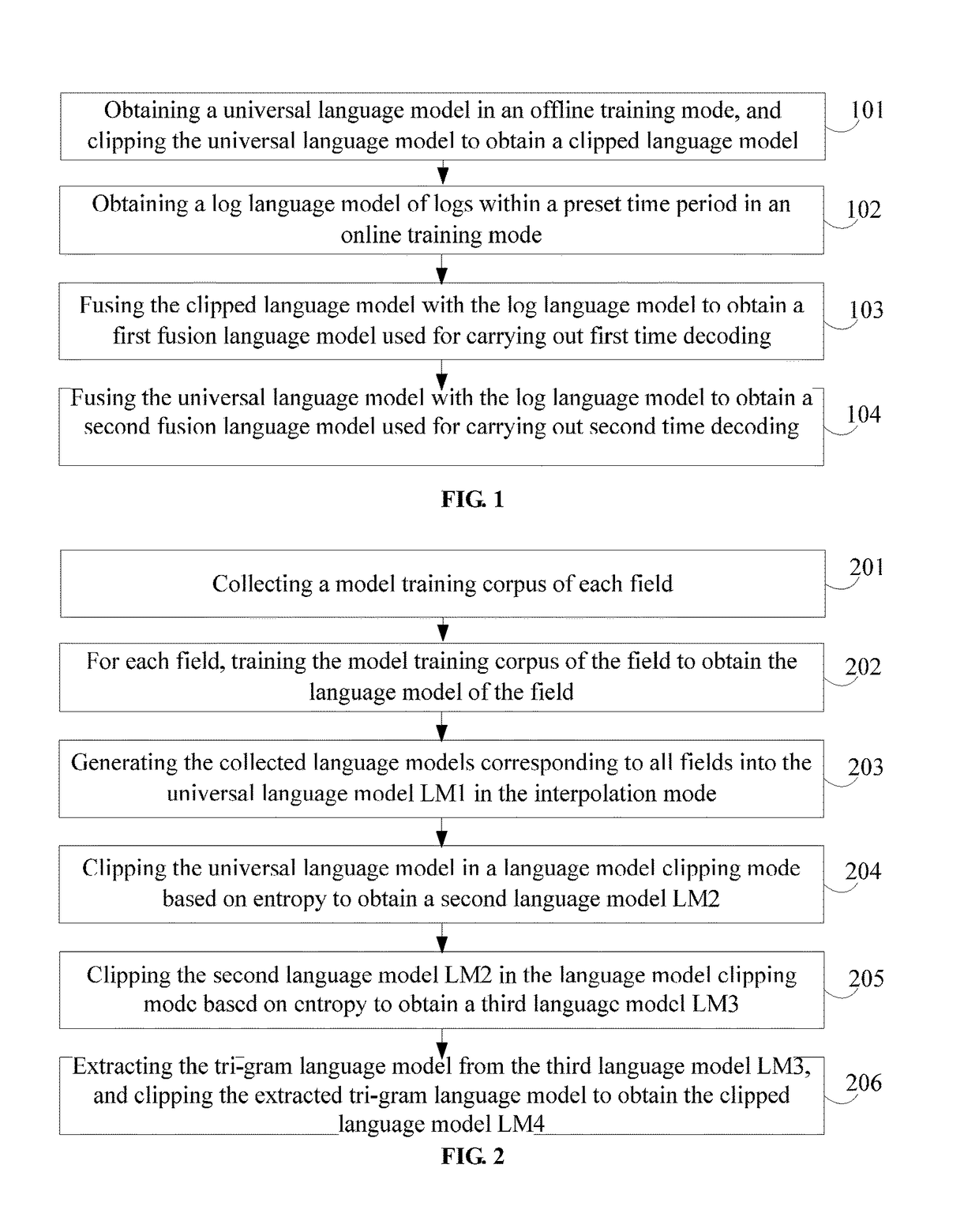

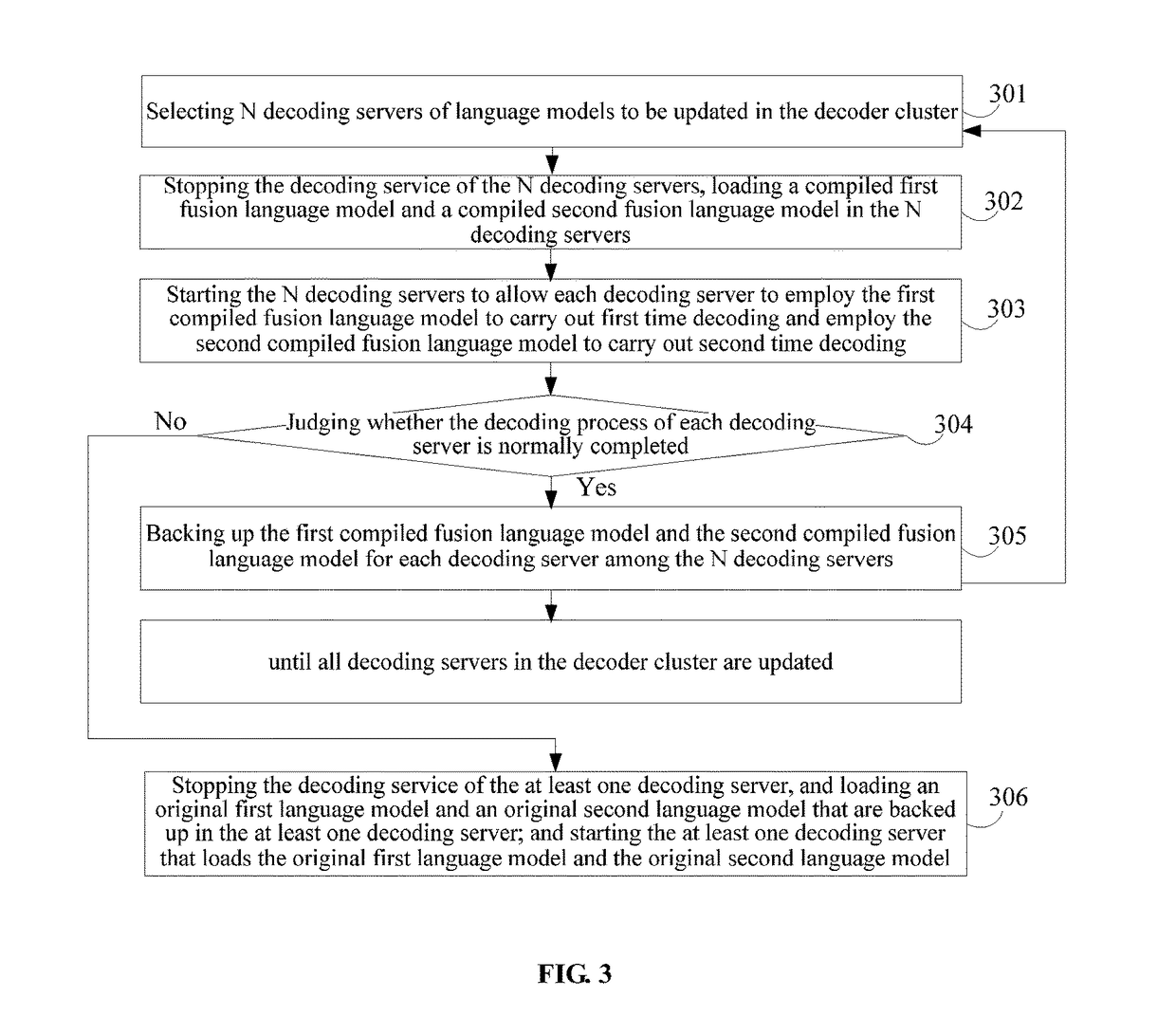

The present disclosure provides a language model training method and device, including: obtaining a universal language model in an offline training mode, and clipping the universal language model to obtain a clipped language model; obtaining a log language model of logs within a preset time period in an online training mode; fusing the clipped language model with the log language model to obtain a first fusion language model used for carrying out first time decoding; and fusing the universal language model with the log language model to obtain a second fusion language model used for carrying out second time decoding. The method is used for solving the problem that a language model obtained offline in the prior art has poor coverage on new corpora, resulting in a reduced language recognition rate.

Owner:LETV HLDG BEIJING CO LTD +1

Assigning meanings to utterances in a speech recognition system

Status: Inactive | Publication: US20070033038A1 | Tags: Simple method; Speech recognition; Special data processing applications; Speech identification; Speech sound

Assigning meanings to spoken utterances in a speech recognition system. A plurality of speech rules is generated, each of the speech rules comprising a language model and an expression associated with the language model. At one interval (e.g. upon the detection of speech in the system), a current language model is generated from each language model in the speech rules for use by a recognizer. When a sequence of words is received from the recognizer, a set of speech rules which match the sequence of words received from the recognizer is determined. Each expression associated with the language model in each of the set of speech rules is evaluated, and actions are performed in the system according to the expressions associated with each language model in the set of speech rules.

Owner:APPLE INC

© 2025 PatSnap. All rights reserved.