Distributed Compute Work Parser Circuitry using Communications Fabric

A compute work parsing circuit technology, applied in the field of parallel processing, that addresses problems that can substantially affect the performance and power consumption of compute tasks.

Examples

Example Front-End Circuitry for Control Stream

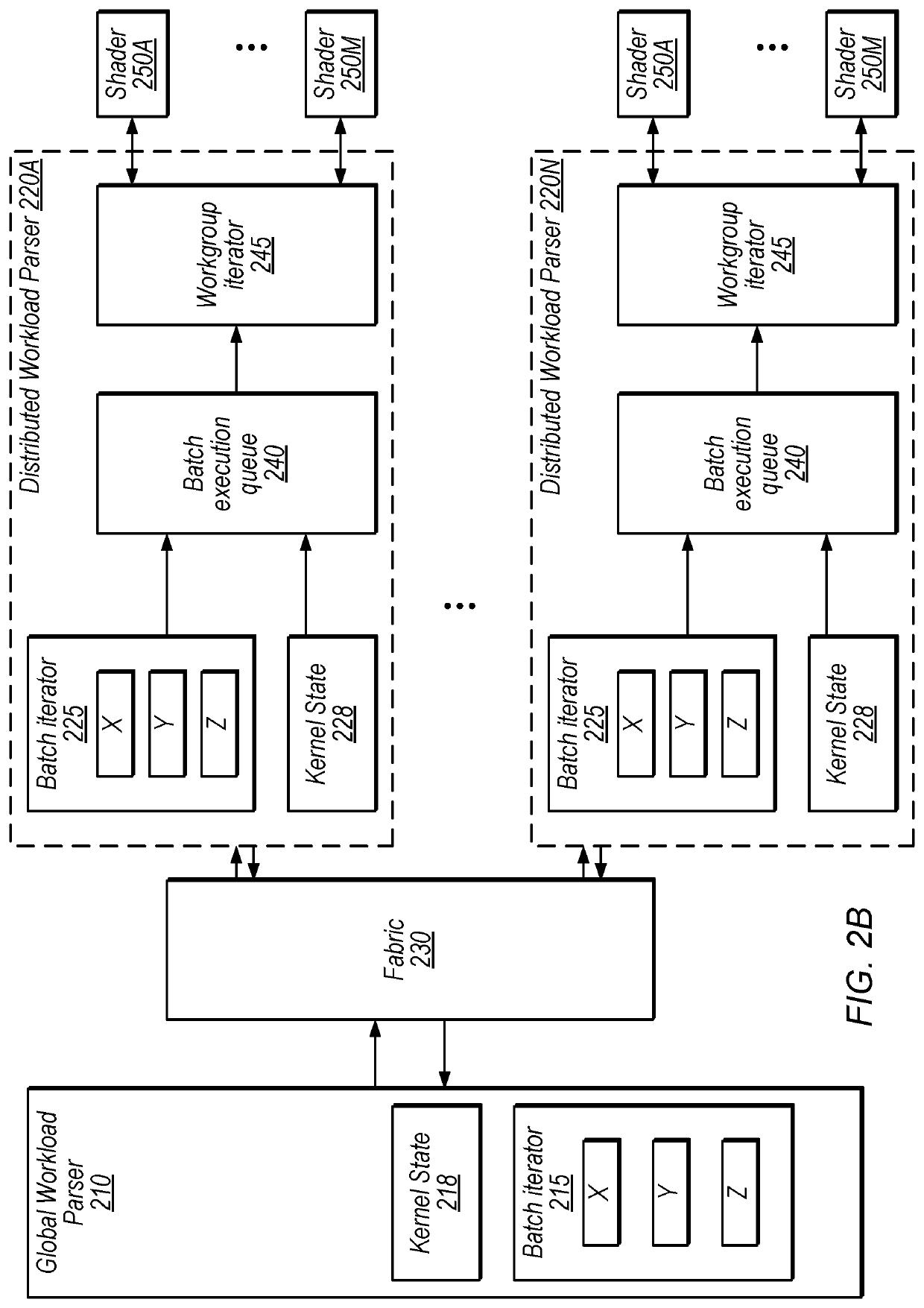

[0050] FIG. 3 is a block diagram illustrating example circuitry configured to fetch compute control stream data, according to some embodiments. In the illustrated embodiment, front-end circuitry 300 includes stream fetcher 310, control stream data buffer 320, fetch parser 330, indirect fetcher 340, execute parser 350, and execution packet queue 360. In some embodiments, decoupling fetch parsing from execution parsing may advantageously allow fetching to make substantial forward progress in the context of links and redirects, for example, relative to the point reached by actual execution.

[0051] Note that, in some embodiments, output data from circuitry 300 (e.g., in execution packet queue 360) may be accessed by global workload parser 210 for distribution.
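The decoupling described above can be illustrated with a small software model. This is only a sketch under stated assumptions, not the patented circuitry: the `MEMORY` layout, the `("kernel", name)` and `("link", address)` entry encoding, the `FrontEnd` class, and the buffer capacity are all hypothetical. It shows a fetch parser that follows links as soon as it sees them and fills a control stream data buffer, while a separate execute parser drains that buffer later and emits execution packets.

```python
from collections import deque

# Hypothetical control stream memory: address -> list of entries.
# ("kernel", name) describes work; ("link", addr) redirects the stream.
MEMORY = {
    0x100: [("kernel", "k0"), ("link", 0x200)],
    0x200: [("kernel", "k1"), ("kernel", "k2"), ("link", 0x300)],
    0x300: [("kernel", "k3")],
}


class FrontEnd:
    """Toy model of decoupled fetch parsing and execute parsing."""

    def __init__(self, memory, start, buffer_capacity=8):
        self.memory = memory
        self.buffer = deque()        # stands in for the control stream data buffer
        self.capacity = buffer_capacity
        self.packets = deque()       # stands in for the execution packet queue
        self.fetch_addr = start      # block the fetch parser is reading
        self.fetch_index = 0         # position inside that block
        self.fetched = 0             # entries seen by the fetch parser
        self.executed = 0            # entries seen by the execute parser

    def fetch_step(self):
        """Fetch one entry; follow links right away so fetching can run ahead."""
        if self.fetch_addr is None or len(self.buffer) >= self.capacity:
            return False
        block = self.memory[self.fetch_addr]
        entry = block[self.fetch_index]
        self.buffer.append(entry)
        self.fetched += 1
        kind, payload = entry
        if kind == "link":
            # Redirect fetching to the link target.
            self.fetch_addr, self.fetch_index = payload, 0
        else:
            self.fetch_index += 1
            if self.fetch_index >= len(block):
                self.fetch_addr = None  # block ended without a link: end of stream
        return True

    def execute_step(self):
        """Consume one buffered entry; kernels become execution packets."""
        if not self.buffer:
            return False
        kind, payload = self.buffer.popleft()
        self.executed += 1
        if kind == "kernel":
            self.packets.append(payload)
        return True


fe = FrontEnd(MEMORY, start=0x100)
while fe.fetch_step():       # fetch parser runs through both links first
    pass
lead = fe.fetched - fe.executed  # how far fetching is ahead of execution
while fe.execute_step():     # execute parser catches up afterwards
    pass
packets = list(fe.packets)
```

In this model the fetch side has already crossed both links before the execute side consumes a single entry, which is the kind of forward progress the decoupling is meant to allow. A real design would run the two parsers concurrently and bound the lead by the buffer size.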

[0052] In some embodiments, the compute control stream (which may also be referred to as a compute command stream) includes kernels, links (which may redirect execution and may or may not include ...

Example Device

[0114] Referring now to FIG. 11, a block diagram illustrating an example embodiment of a device 1100 is shown. In some embodiments, elements of device 1100 may be included within a system on a chip. In some embodiments, device 1100 may be included in a mobile device, which may be battery-powered. Therefore, power consumption by device 1100 may be an important design consideration. In the illustrated embodiment, device 1100 includes fabric 1110, compute complex 1120, input/output (I/O) bridge 1150, cache/memory controller 1145, graphics unit 150, and display unit 1165. In some embodiments, device 1100 may include other components (not shown) in addition to and/or in place of the illustrated components, such as video processor encoders and decoders, image processing or recognition elements, computer vision elements, etc.

[0115] Fabric 1110 may include various interconnects, buses, MUXes, controllers, etc., and may be configured to facilitate communication between various elements of devic...
