Shared-cache dynamic-threshold early-drop device supporting multiple queues

A dynamic-threshold, shared-cache technology applied in digital transmission systems, electrical components, transmission systems, etc. It addresses the problems of difficult parameter setting, difficult high-speed hardware implementation, and heavy resource consumption, and achieves the effect of avoiding floating-point operations.

Embodiment Construction

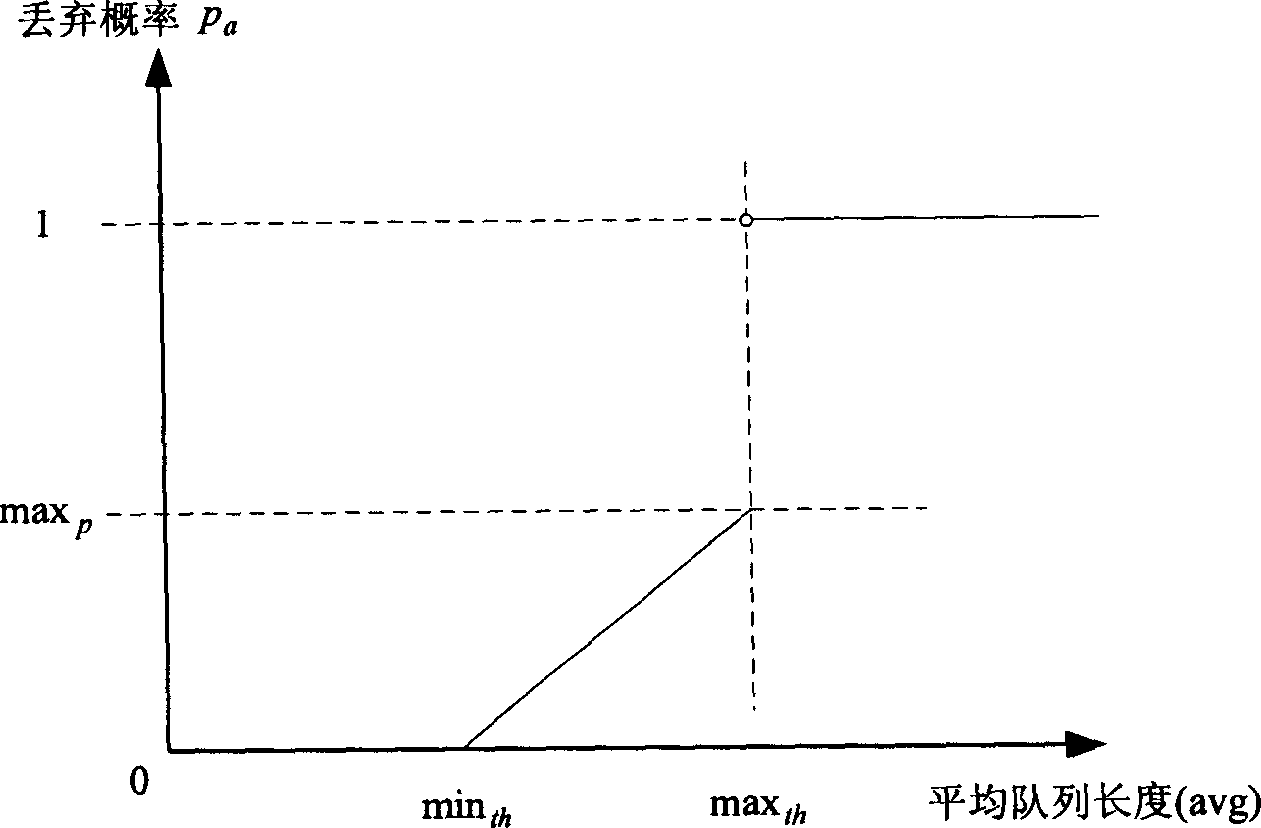

[0063] The Internet Engineering Task Force (IETF) proposed active queue management (AQM) and recommended the Random Early Detection (RED) mechanism. When a router implementing the RED algorithm detects a precursor to congestion, it randomly discards some packets in the buffer queue in advance, instead of discarding all new packets after the buffer is full. When the average queue length of the cache of an intermediate node device in the network exceeds a specified minimum threshold $min_{th}$, a congestion precursor is considered to exist, and the router then drops a packet with a certain probability $p_a$, where $p_a$ is a function of the average queue length $avg(t)$:

[0064] $p_b = p_{max} \, ( avg(t) - min_{th} )$ ...
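
The early-drop decision described above can be illustrated with a minimal sketch of the classic RED drop test as it is commonly published. This is not the patent's own formula, which is truncated in the text above and may differ. The upper threshold `max_th`, the function names, the behavior at and above `max_th`, and the sample values are all assumptions introduced for illustration.

```python
import random


def red_drop_probability(avg, min_th, max_th, p_max):
    """Base drop probability of classic RED as a function of the average queue length.

    Assumption: the linear ramp between min_th and max_th from the published
    RED algorithm; max_th does not appear in the visible part of the source.
    """
    if avg < min_th:
        # No congestion precursor: never drop.
        return 0.0
    if avg >= max_th:
        # Assumed behavior above the upper threshold: drop every arrival.
        return 1.0
    # Probability grows linearly from 0 to p_max as avg moves from min_th to max_th.
    return p_max * (avg - min_th) / (max_th - min_th)


def should_drop(avg, min_th, max_th, p_max, rng=random.random):
    """Randomly decide whether to drop an arriving packet.

    rng is a hypothetical hook returning a float in [0, 1); it makes the
    decision reproducible in tests.
    """
    return rng() < red_drop_probability(avg, min_th, max_th, p_max)


if __name__ == "__main__":
    # Illustrative values only: thresholds in packets, p_max as a fraction.
    for avg in (3, 10, 20):
        print(avg, red_drop_probability(avg, min_th=5, max_th=15, p_max=0.1))
```

The sketch uses floating-point division for readability. A hardware-oriented design such as the one this document targets would replace that division, for example with precomputed or fixed-point values.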
