Pedestrian identification method based on a local feature-aware image-text cross-modal model, and model training method

The method applies local features and a model training method in the field of pattern recognition. It addresses the problems of insufficient precision, a complex feature extraction process, and difficulty of deployment in practical application scenarios, and achieves high accuracy with a simple structure.

Examples

Embodiment 1

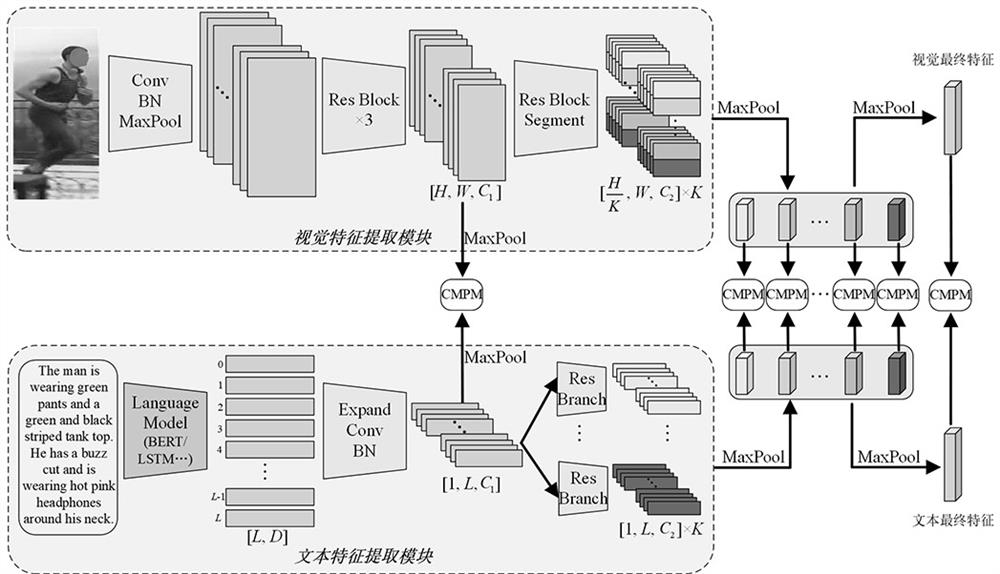

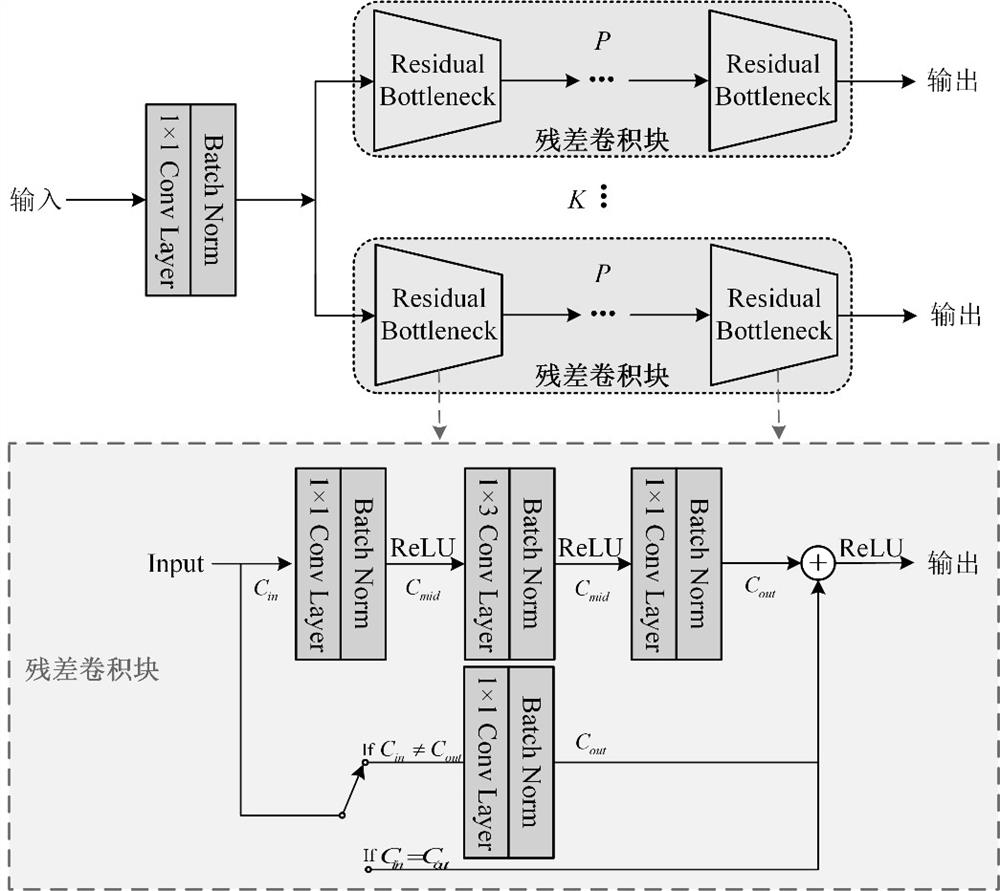

[0052] This embodiment provides a training method for a local feature-aware image-text cross-modal model. The model is built on the PyTorch deep learning framework and is used to mine the feature information of pedestrian images and text descriptions. The model includes a visual feature extraction module and a text feature extraction module. The visual feature extraction module includes a PCB structure for extracting local image regions, and the text feature extraction module includes a multi-branch convolution structure for extracting text features. Each branch of the multi-branch convolution structure is aligned with one of the local image regions.
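The local-region extraction described above can be illustrated with a minimal sketch. It assumes that the PCB structure works in the style of a part-based convolutional baseline, that is, the feature map is cut into equal horizontal stripes and each stripe is average-pooled into one local feature vector. The function name `stripe_pool`, the number of parts, and the toy feature map are hypothetical and are not taken from the patent. Plain Python is used instead of PyTorch so the sketch runs without dependencies.

```python
from typing import List

Vector = List[float]
FeatureMap = List[List[Vector]]  # indexed as [row][column][channel]


def stripe_pool(feature_map: FeatureMap, num_parts: int) -> List[Vector]:
    """Split a feature map into horizontal stripes and average-pool each one.

    Assumption: equal-height stripes and average pooling; the patent text
    does not state the exact partitioning or pooling operation.
    """
    height = len(feature_map)
    if height % num_parts != 0:
        raise ValueError("height must be divisible by num_parts")
    rows_per_part = height // num_parts
    channels = len(feature_map[0][0])
    parts: List[Vector] = []
    for p in range(num_parts):
        stripe = feature_map[p * rows_per_part:(p + 1) * rows_per_part]
        # Flatten the stripe into a list of per-location channel vectors.
        cells = [cell for row in stripe for cell in row]
        parts.append(
            [sum(cell[c] for cell in cells) / len(cells) for c in range(channels)]
        )
    return parts


# Toy 4x2 feature map with 2 channels; every cell in row r holds [r, 2r].
toy_map = [[[float(r), float(2 * r)] for _ in range(2)] for r in range(4)]
local_features = stripe_pool(toy_map, num_parts=2)
```

Under this assumption, each entry of `local_features` is the vector that one branch of the multi-branch text structure would be aligned with.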

[0053] Specifically, as shown in Figure 1, the training method of the local feature-aware image-text cross-modal model is as follows.

[0054] 1. Prepare the image-text data set

[0055] Construct an image and text data set, which includes a training s...

Embodiment 2

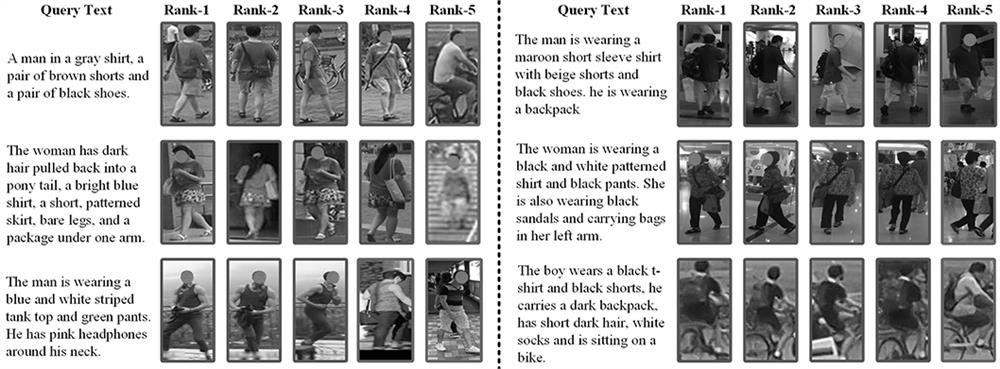

[0113] This embodiment provides a pedestrian identification method based on a local feature-aware image-text cross-modal model. As shown in Figure 3 and Figure 4, the pedestrian identification method includes:

[0114] Obtain the image-text data of a pedestrian;

[0115] Input the pedestrian's image-text data into the pre-trained local feature-aware image-text cross-modal model for feature extraction, and output the pedestrian identification result.
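The two steps above do not state how the extracted features are turned into an identification result. One plausible reading, offered only as an assumption, is that a text description is matched against candidate images by comparing each text branch feature with its aligned local image feature and ranking candidates by the summed similarity. The sketch below uses cosine similarity for this; `cosine`, `rank_gallery`, and the toy feature values are hypothetical and do not come from the patent.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two vectors; returns 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_gallery(text_parts: List[Vector],
                 gallery: Dict[str, List[Vector]]) -> List[str]:
    """Rank gallery identities by summed part-wise cosine similarity.

    Assumption: text branch i is compared only with local image feature i.
    """
    scores = {
        person_id: sum(cosine(t, v) for t, v in zip(text_parts, image_parts))
        for person_id, image_parts in gallery.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


# Toy data: two parts per sample, two channels per part.
query_text_parts = [[1.0, 0.0], [0.0, 1.0]]
gallery_image_parts = {
    "person_a": [[1.0, 0.0], [0.0, 1.0]],
    "person_b": [[0.0, 1.0], [1.0, 0.0]],
}
ranking = rank_gallery(query_text_parts, gallery_image_parts)
```

In this toy case `person_a` ranks first because both of its local features point in the same direction as the corresponding text branch features.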

[0116] The construction and training of the local feature-aware image-text cross-modal model are described in Embodiment 1 and are not repeated here.

[0117] As will be appreciated by one skilled in the art, the embodiments of the present application may be provided as a method, a system, or a computer program product. Accordingly, the present application may take the form of an entirely hardware embodiment, an entirely software embodiment, or an embodiment combining software and hardware aspects. Furt...
