Recognition-based handwriting segmentation method for electronic signatures

An electronic signature handwriting technology, applied in neural network learning methods, instruments, biological neural network models, etc. It addresses the problems of coarse image grid division, poor detection performance, and low detection accuracy, with the effects of improving handwriting segmentation accuracy and reducing the amount of training required.


Examples

Embodiment 1

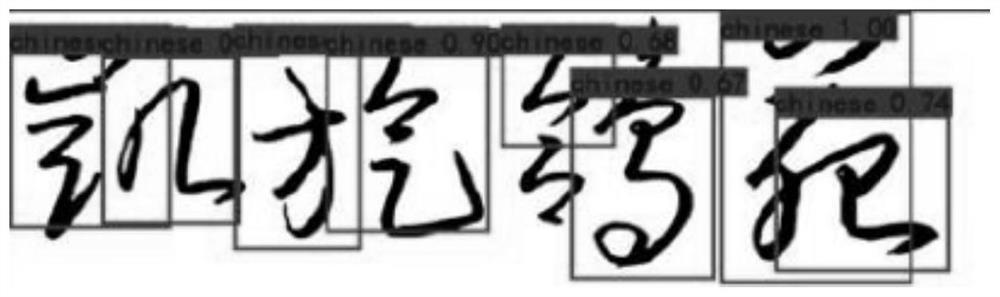

[0043] A recognition-based handwriting segmentation method for electronic signatures, comprising the following steps:

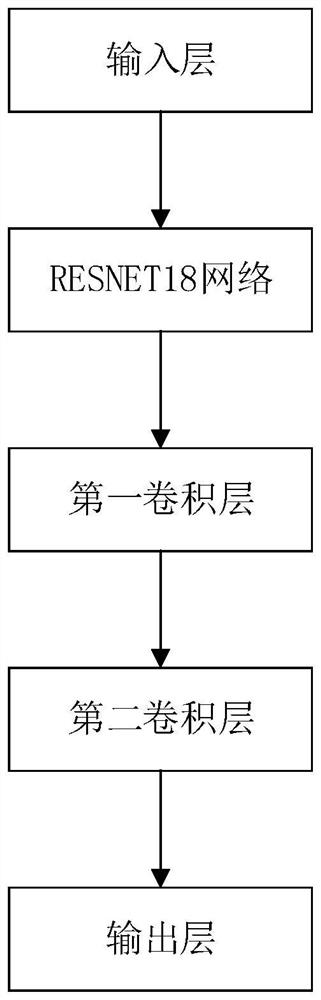

[0044] S1. Build a handwriting segmentation model, as shown in Figure 1. The model comprises an input layer; a backbone network (in this embodiment, ResNet18, which contains 17 convolutional layers, one fully connected hidden layer, and multiple residual structures); a first convolutional layer (dimension 3×3×512); a second convolutional layer (dimension 1×1×5); and an output layer.
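The sketch below traces feature-map shapes through the convolutional path of S1 to show how the 14×14 grid used later in the loss can arise. It assumes a 448×448 RGB input and standard ResNet18 strides (7×7 stride-2 stem, stride-2 max pooling, stride-2 downsampling at the start of stages 2 to 4); neither the input size nor the padding of the two head layers is stated in the source, and the layer names are hypothetical. The fully connected layer is not part of this trace.

```python
def conv_out(n, k, s=1, p=0):
    """Spatial output size of a convolution or pooling layer."""
    return (n + 2 * p - k) // s + 1


# (name, kernel, stride, padding, out_channels); None keeps the channel count.
# For ResNet stages only the first (possibly strided) conv changes the size.
LAYERS = [
    ("conv1", 7, 2, 3, 64),
    ("maxpool", 3, 2, 1, None),
    ("layer1", 3, 1, 1, 64),
    ("layer2", 3, 2, 1, 128),
    ("layer3", 3, 2, 1, 256),
    ("layer4", 3, 2, 1, 512),
    ("head_conv1", 3, 1, 1, 512),  # first convolutional layer, 3x3x512 (padding assumed)
    ("head_conv2", 1, 1, 0, 5),    # second convolutional layer, 1x1x5
]


def trace_shapes(size, channels=3):
    """Return (name, height, width, channels) after each layer for a square input."""
    shapes = []
    for name, k, s, p, c in LAYERS:
        size = conv_out(size, k, s, p)
        channels = c if c is not None else channels
        shapes.append((name, size, size, channels))
    return shapes


print(trace_shapes(448)[-1])  # ('head_conv2', 14, 14, 5)
```

Under these assumptions each of the 14×14 cells ends up with 5 output values, which is consistent with one confidence score plus four box coordinates per cell, though the source does not spell out that split.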

[0045] S2. Construct the loss function $L = L_{obj} + L_{bbox}$;

[0046] where $L_{obj}$ is the target prediction loss function and $L_{bbox}$ is the bounding-box prediction loss function.

[0047]

[0048]

[0049] In the formulas, $\lambda_{obj} = 5$ and $\lambda_{noobj} = 1$; $s^2$ denotes the length × width of the feature map extracted by the backbone network, and in this embodiment $s^2 = 14 \times 14$; [symbol] represents the label value of the input data; and ...
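The exact formulas for $L_{obj}$ and $L_{bbox}$ are not reproduced in this text, so the following is only a sketch of one plausible form, assuming YOLO-style sum-squared errors: the confidence error of each grid cell is weighted by $\lambda_{obj} = 5$ when the cell contains a target and by $\lambda_{noobj} = 1$ otherwise, and the box error is counted only for cells that contain a target. Only the two weights and the 14×14 grid come from the source; the function names and the loss form are assumptions.

```python
LAMBDA_OBJ = 5.0    # weight for cells containing a target (value from the embodiment)
LAMBDA_NOOBJ = 1.0  # weight for cells without a target (value from the embodiment)


def objectness_loss(pred_conf, label_conf):
    """Weighted sum-squared confidence error over an S x S grid (assumed form)."""
    loss = 0.0
    for pred_row, label_row in zip(pred_conf, label_conf):
        for p, y in zip(pred_row, label_row):
            weight = LAMBDA_OBJ if y == 1 else LAMBDA_NOOBJ
            loss += weight * (p - y) ** 2
    return loss


def bbox_loss(pred_boxes, label_boxes, label_conf):
    """Sum-squared error on the 4 box values, only for cells that contain a target."""
    loss = 0.0
    for i, label_row in enumerate(label_conf):
        for j, y in enumerate(label_row):
            if y == 1:
                loss += sum((p - t) ** 2
                            for p, t in zip(pred_boxes[i][j], label_boxes[i][j]))
    return loss


def total_loss(pred_conf, pred_boxes, label_conf, label_boxes):
    """L = L_obj + L_bbox."""
    return (objectness_loss(pred_conf, label_conf)
            + bbox_loss(pred_boxes, label_boxes, label_conf))


# Usage on a 14 x 14 grid with a single target cell.
S = 14
label_conf = [[0] * S for _ in range(S)]
label_conf[3][4] = 1
label_boxes = [[(0.0, 0.0, 0.0, 0.0)] * S for _ in range(S)]
label_boxes[3][4] = (0.5, 0.5, 0.2, 0.1)
pred_conf = [[0.0] * S for _ in range(S)]
pred_boxes = [[(0.0, 0.0, 0.0, 0.0)] * S for _ in range(S)]
print(total_loss(pred_conf, pred_boxes, label_conf, label_boxes))
```

Weighting target cells more heavily than background cells keeps the many empty cells of a 14×14 grid from dominating the confidence term.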

Embodiment 2

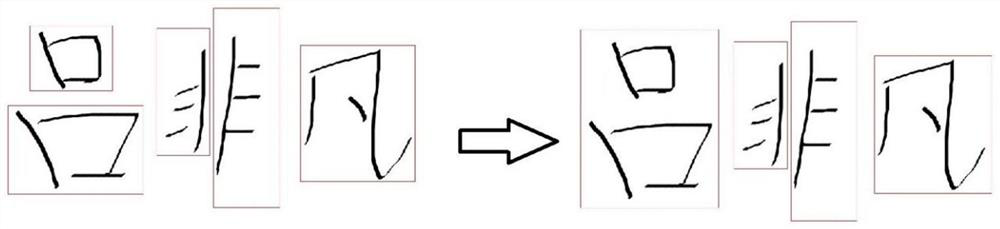

[0057] Further, the bounding boxes output by the handwriting segmentation model are merged through the following steps:

[0058] A1. Merge bounding boxes stacked in the Y-axis direction: as shown in Figure 3, if two bounding boxes do not overlap in the Y-axis direction but do overlap in the X-axis direction (specifically, the X-axis coordinate ranges of the two bounding boxes overlap while their Y-axis coordinate ranges do not), the two bounding boxes are merged.
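A minimal sketch of step A1, assuming boxes are `(x1, y1, x2, y2)` tuples and reading the merge condition as "X-axis ranges overlap, Y-axis ranges do not", i.e. the two boxes are stacked vertically. The helper names are hypothetical, and repeating the merge until no pair qualifies is an assumption; the source does not say how many passes are made.

```python
def ranges_overlap(a1, a2, b1, b2):
    """True if the open intervals (a1, a2) and (b1, b2) overlap."""
    return a1 < b2 and b1 < a2


def union(a, b):
    """Smallest box enclosing boxes a and b."""
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))


def merge_vertical(boxes):
    """Repeatedly merge pairs whose X ranges overlap but whose Y ranges do not."""
    boxes = list(boxes)
    merged = True
    while merged:
        merged = False
        for i in range(len(boxes)):
            for j in range(i + 1, len(boxes)):
                a, b = boxes[i], boxes[j]
                x_overlap = ranges_overlap(a[0], a[2], b[0], b[2])
                y_overlap = ranges_overlap(a[1], a[3], b[1], b[3])
                if x_overlap and not y_overlap:
                    boxes[i] = union(a, b)
                    del boxes[j]
                    merged = True
                    break
            if merged:
                break
    return boxes


# An upper and a lower stroke group of one character, plus a separate character.
print(merge_vertical([(0, 0, 10, 5), (2, 7, 9, 12), (20, 0, 30, 12)]))
```

Here the first two boxes share an X range and are separated vertically, so they are joined into `(0, 0, 10, 12)`; the third box lies to the right and is left alone.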

[0059] A2. Calculate the mean W and the variance S of the bounding-box widths;
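Step A2 is plain arithmetic over the box widths. The sketch below assumes `(x1, y1, x2, y2)` boxes and uses the population variance (dividing by n); the source does not say whether the population or sample variance is meant, and `width_stats` is a hypothetical name.

```python
def width_stats(boxes):
    """Return (W, S): mean and population variance of the box widths."""
    widths = [x2 - x1 for x1, _, x2, _ in boxes]
    n = len(widths)
    mean = sum(widths) / n
    variance = sum((w - mean) ** 2 for w in widths) / n  # population variance (assumed)
    return mean, variance


W, S = width_stats([(0, 0, 10, 5), (0, 0, 20, 5), (0, 0, 30, 5)])
print(W, S)
```

With widths 10, 20 and 30, W is 20 and the spacing threshold of the next step, 0.5*W, would be 10.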

[0060] A3. Traverse the bounding boxes to find adjacent bounding boxes whose spacing in the X-axis direction is less than the spacing threshold (set to 0.5*W in this embodiment); if several adjacent bounding boxes with spacing below the threshold are found, the adjacent bounding boxes with the smallest spacing are merged first; cal...
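A minimal sketch of the part of step A3 that survives in this text: boxes are ordered left to right, neighbouring pairs whose X-axis gap is below 0.5*W are candidates, and the pair with the smallest gap is merged first, repeating until no pair qualifies. Treating "adjacent" as neighbours in left-to-right order and the helper names are assumptions; the remainder of A3 is truncated in the source and is not modelled, so the variance S is not used here.

```python
def union(a, b):
    """Smallest box enclosing boxes a and b, each (x1, y1, x2, y2)."""
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))


def x_gap(a, b):
    """Horizontal spacing between two boxes (0 if their X ranges overlap)."""
    left, right = (a, b) if a[0] <= b[0] else (b, a)
    return max(0, right[0] - left[2])


def merge_close_pairs(boxes, mean_width, ratio=0.5):
    """Greedily merge left-right neighbours whose gap is below ratio * mean_width."""
    threshold = ratio * mean_width
    boxes = sorted(boxes)  # left to right by x1
    while True:
        candidates = [(x_gap(boxes[i], boxes[i + 1]), i)
                      for i in range(len(boxes) - 1)
                      if x_gap(boxes[i], boxes[i + 1]) < threshold]
        if not candidates:
            return boxes
        _, i = min(candidates)  # smallest spacing is merged first
        # The merged box keeps the smaller x1, so the list stays sorted by x1.
        boxes[i:i + 2] = [union(boxes[i], boxes[i + 1])]


print(merge_close_pairs([(0, 0, 10, 10), (13, 0, 23, 10), (24, 0, 34, 10)], 10))
```

In the usage line the gap of 1 between the second and third boxes is merged before the gap of 3, after which the remaining gap of 3 is still below the threshold of 5, so all three boxes end up as one.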
