Static gesture recognition method based on machine vision attention mechanism

A machine vision gesture recognition technique applied to in-car cockpit interaction. It addresses problems such as missed recognition, limited computing power, and increased cost; it aims for a favorable balance between accuracy and performance, improves classification accuracy, and strengthens the model's expressive ability.



Embodiment Construction

[0040] The present invention will be further described in detail below with reference to the accompanying drawings and specific embodiments.

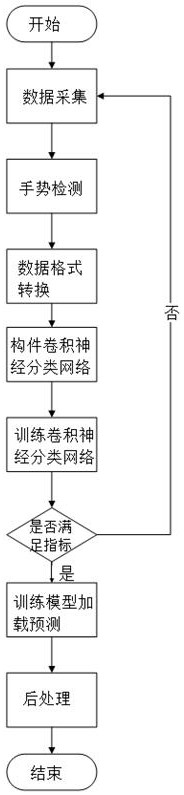

[0041] A static gesture recognition method based on a machine vision attention mechanism, whose flow chart is shown in Figure 1, includes the following steps:

[0042] (1) Data collection: RGB cameras are used to collect multi-category static gesture images;

[0043] (2) Gesture area detection: Detect the gesture area in each static gesture image, crop it to obtain the gesture image, and save it; then divide the gesture images of all categories into a training set, a validation set, and a test set;

[0044] (3) Data format conversion: Convert the gesture images obtained in step (2) from RGB format to YUV format, so that the gesture images in the training set, validation set, and test set are all in YUV format;

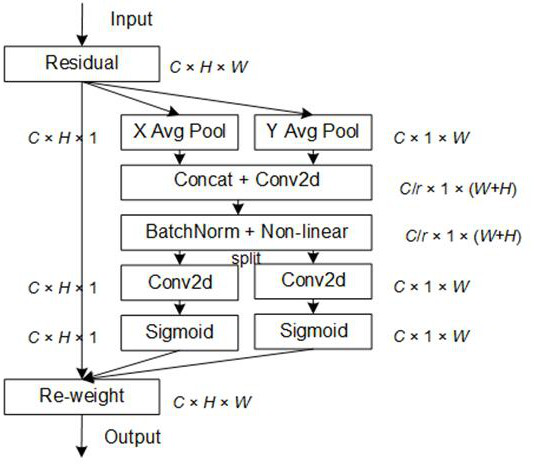

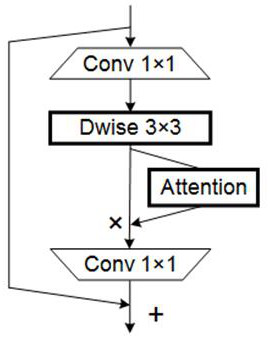

[0045] (4) Build a convolutional neural classification network: use the MobileNetV2 network as the network fra...
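
Steps (2) and (3) above can be illustrated with a minimal Python sketch. It is not the patented implementation: the text does not say which YUV variant or which split ratios are used, so the BT.601 conversion matrix, the 80/10/10 split, and the helper names `rgb_to_yuv` and `split_dataset` are all assumptions made for illustration.

```python
import numpy as np

# BT.601 analog RGB -> YUV matrix (assumption: the source does not
# specify which YUV variant the method uses).
RGB_TO_YUV = np.array([
    [0.299, 0.587, 0.114],
    [-0.14713, -0.28886, 0.436],
    [0.615, -0.51499, -0.10001],
])


def rgb_to_yuv(image):
    """Convert an H x W x 3 RGB image with values in [0, 1] to YUV."""
    image = np.asarray(image, dtype=np.float64)
    # Apply the 3 x 3 matrix to the channel axis of every pixel.
    return image @ RGB_TO_YUV.T


def split_dataset(items, train=0.8, val=0.1, seed=0):
    """Shuffle items and split them into train/validation/test lists.

    The 80/10/10 default ratios are hypothetical, not from the source.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(items))
    n_train = int(len(items) * train)
    n_val = int(len(items) * val)
    train_idx = order[:n_train]
    val_idx = order[n_train:n_train + n_val]
    test_idx = order[n_train + n_val:]
    return (
        [items[i] for i in train_idx],
        [items[i] for i in val_idx],
        [items[i] for i in test_idx],
    )


if __name__ == "__main__":
    # Ten placeholder 4 x 4 crops standing in for cropped gesture images.
    crops = [np.full((4, 4, 3), i / 10.0) for i in range(10)]
    train_set, val_set, test_set = split_dataset(crops)
    train_yuv = [rgb_to_yuv(img) for img in train_set]
    print(len(train_yuv), len(val_set), len(test_set))
```

Splitting before conversion, as in the usage lines, and converting before splitting give the same result, since the conversion is applied identically to every image; the text only requires that all three sets end up in YUV format.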
