Multi-modal emotion recognition method based on acoustic and text features

An emotion recognition technology based on acoustic and text features, applied in character and pattern recognition, neural learning methods, biological neural network models, and related fields. It addresses problems such as failing to account for mismatched data volumes and being unable to compensate for the transcribed text, with the effects of speeding up convergence, correcting ambiguity, and improving utilization.

Embodiment Construction

[0053] In order to explain the present invention more fully, the present invention will be described in detail below in conjunction with the drawings and specific embodiments.

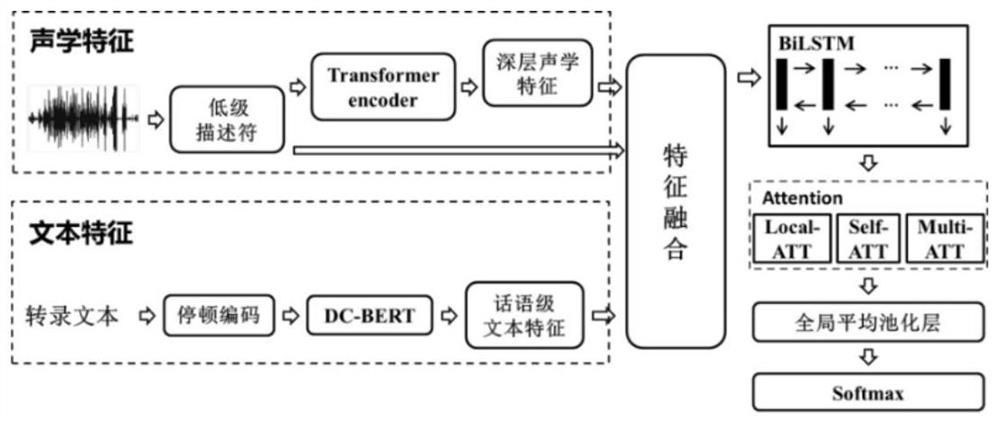

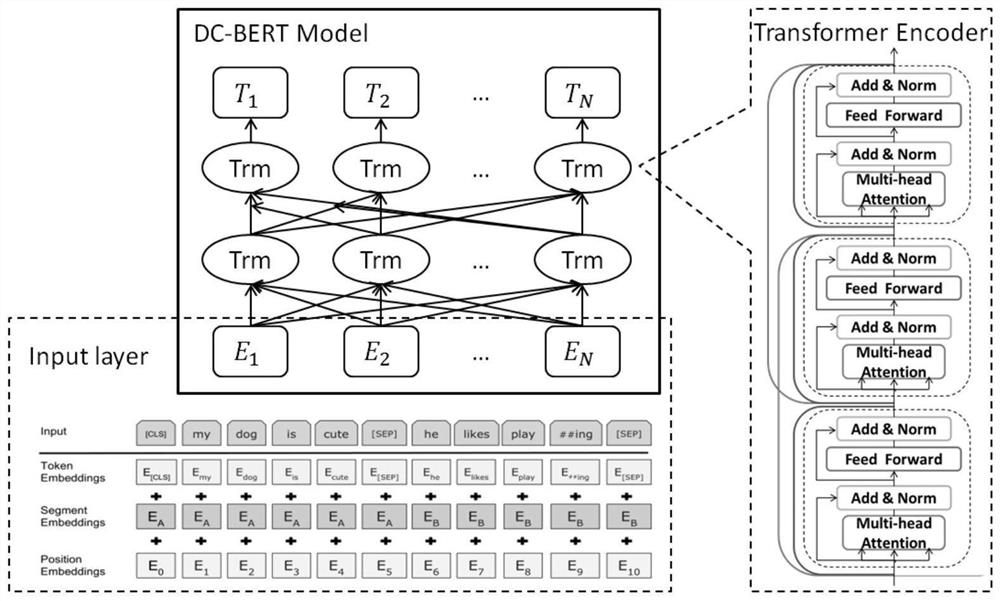

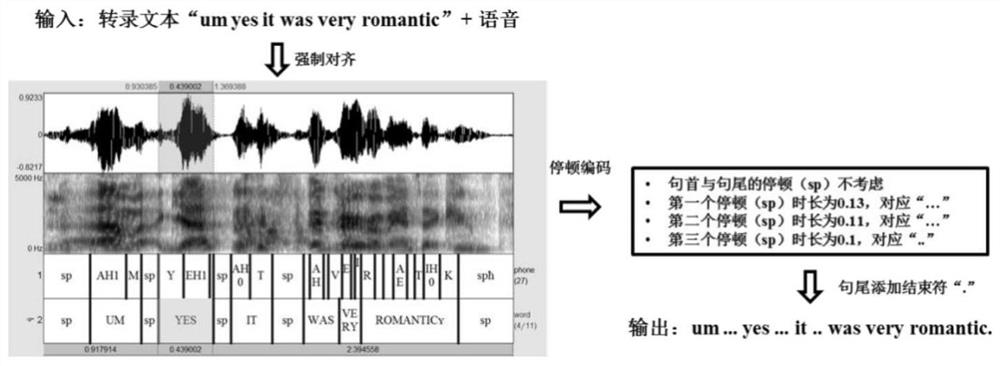

[0054] As shown in Figure 1, the multimodal emotion recognition method based on acoustic and text features of the present invention uses OpenSMILE to extract shallow emotional features from the input speech, and fuses them with the deep features that a Transformer network learns from those shallow features, generating multi-level acoustic features. The speech is force-aligned with the transcribed text of the same content to obtain pause information; the pause information is then encoded and added to the transcribed text, which is fed to the hierarchical densely connected DC-BERT model to obtain text features, and these are then fused with the acoustic features. BiLSTM-ATT, a bidirectional long short-term memory neural network based on the attention mechanism, is used as a...
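The pause-encoding step described above can be illustrated with a minimal sketch. The excerpt does not state how pauses are encoded, so everything here is an assumption made for illustration: the `AlignedWord` structure, the bucket thresholds, the `[PAUSE_*]` token names, and the `add_pause_tokens` helper are hypothetical, and the word timestamps are assumed to come from an external forced aligner.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AlignedWord:
    """One word with start/end times (seconds) from a forced aligner (assumed input)."""
    word: str
    start: float
    end: float


# Hypothetical pause buckets, checked from longest to shortest:
# (minimum silent gap in seconds, token inserted into the transcript).
PAUSE_BUCKETS = [
    (1.0, "[PAUSE_LONG]"),
    (0.5, "[PAUSE_MID]"),
    (0.2, "[PAUSE_SHORT]"),
]


def pause_token(gap: float) -> Optional[str]:
    """Map a silent gap to a pause token, or None if the gap is below every threshold."""
    for min_gap, token in PAUSE_BUCKETS:
        if gap >= min_gap:
            return token
    return None


def add_pause_tokens(words: List[AlignedWord]) -> str:
    """Rebuild the transcript, inserting a pause token between words separated by silence."""
    pieces: List[str] = []
    previous: Optional[AlignedWord] = None
    for current in words:
        if previous is not None:
            token = pause_token(current.start - previous.end)
            if token is not None:
                pieces.append(token)
        pieces.append(current.word)
        previous = current
    return " ".join(pieces)


if __name__ == "__main__":
    aligned = [
        AlignedWord("I", 0.0, 0.2),
        AlignedWord("am", 0.25, 0.4),
        AlignedWord("fine", 1.1, 1.5),
    ]
    print(add_pause_tokens(aligned))
```

In a pipeline like the one described, the resulting string would be what is tokenized and passed to the text model; any pause tokens would need to be registered in that model's vocabulary, which this sketch does not cover.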
