Image color conversion device, method and storage medium based on generative adversarial network

An image color transformation technology, applied to neural network models, graphics and image conversion, image enhancement, and related fields. It addresses the poor detection performance that results from having few defect samples, and it requires less training data, is practical, and reduces development labor and time costs.

Examples

Embodiment 1

[0070] A device for image color transformation based on a generative adversarial network includes a data collection module, a training module, and a transformation module. The data collection module collects part image data to form a training data set, and training pictures with random color noise are added to the training data set. The training module trains the network model on the training data set to obtain a trained network model. The transformation module inputs the picture to be converted into the trained network model and outputs the color-transformed picture.
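As an illustration of the data collection step, the sketch below builds a training set from part pictures plus copies with random color noise. The source does not say how the noise is generated; the per-picture, per-channel random offset used here, and the names `add_color_noise`, `build_training_set`, `max_shift`, and `copies`, are assumptions made for illustration only.

```python
import random
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each in 0..255
Image = List[List[Pixel]]      # indexed as [row][column]


def add_color_noise(image: Image, max_shift: int = 40,
                    rng: Optional[random.Random] = None) -> Image:
    """Return a copy of `image` with one random offset added to each RGB channel.

    Assumption: "random color noise" is modelled as a per-picture,
    per-channel shift; the source does not specify the noise model.
    """
    rng = rng or random.Random()
    shifts = [rng.randint(-max_shift, max_shift) for _ in range(3)]
    return [
        [tuple(min(255, max(0, value + shift))
               for value, shift in zip(pixel, shifts))
         for pixel in row]
        for row in image
    ]


def build_training_set(part_images: List[Image], copies: int = 2,
                       seed: int = 0) -> List[Image]:
    """Collect the original part pictures plus `copies` noisy variants of each."""
    rng = random.Random(seed)
    dataset = list(part_images)
    for image in part_images:
        dataset.extend(add_color_noise(image, rng=rng) for _ in range(copies))
    return dataset
```

Seeding the generator keeps the augmented set reproducible between runs, which helps when comparing trained models.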

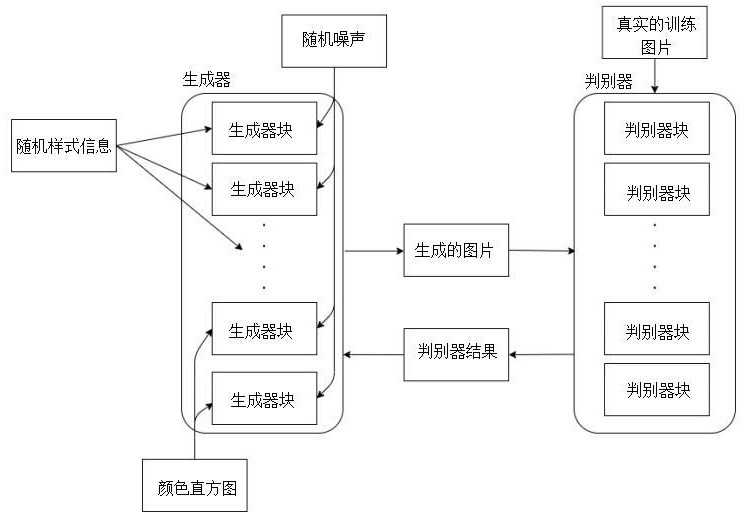

[0071] As shown in Figure 3, the network model includes a generator and a discriminator. The generator produces generated pictures that conform to the distribution of the training data; the generated pictures and real pictures are each input to the discriminator for training, and the discriminator is used to score the generated pictures, ...
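The passage above is cut off before it explains how the discriminator's scores are used. As a sketch under that caveat, the code below turns raw scores into the standard non-saturating GAN losses. This is the common textbook formulation, not something the source confirms, and the function names are hypothetical.

```python
import math
from typing import List


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def discriminator_loss(real_scores: List[float], fake_scores: List[float]) -> float:
    """Low when real pictures score high and generated pictures score low.

    Uses -log(sigmoid(s)) = softplus(-s) for real pictures and
    -log(1 - sigmoid(s)) = softplus(s) for generated pictures.
    """
    real_term = sum(softplus(-s) for s in real_scores) / len(real_scores)
    fake_term = sum(softplus(s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores: List[float]) -> float:
    """Low when the discriminator scores generated pictures highly."""
    return sum(softplus(-s) for s in fake_scores) / len(fake_scores)
```

In this formulation the two networks pull the same scores in opposite directions: the discriminator is trained to lower `discriminator_loss`, while the generator is trained to lower `generator_loss`.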

Embodiment 2

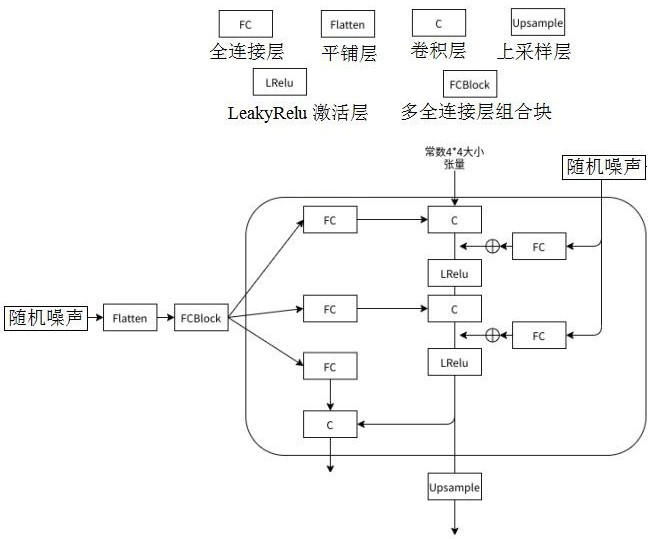

[0074] This embodiment is optimized on the basis of Embodiment 1. As shown in Figure 1, each generator block of the front-end generation block and the back-end generation block includes several feature generation layers and coloring layers arranged in sequence from front to back. Each feature generation layer includes a convolutional layer and a LeakyRelu activation function layer connected in sequence from front to back. The convolutional layer fuses the input with externally extracted style information, and the coloring layer fuses the input with externally extracted color information. The output of the feature generation layer of the previous generator block is upsampled and used as the input of the feature generation layer of the next generator block. The output of the coloring layer of the last generator block of the back-end generation block is added to the output of the feature generation layer and output after the upsampling layer to generate...
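The data flow between generator blocks can be sketched in plain Python as follows. This is a shape-level illustration only. It assumes 1x1 convolutions, 2x nearest-neighbour upsampling, and a LeakyRelu slope of 0.2, none of which the source specifies. It also omits the fusion of style and color information and the final combination step, because the source does not describe those mechanisms and the passage is truncated. All function names are hypothetical.

```python
from typing import List, Tuple

FeatureMap = List[List[List[float]]]   # indexed as [channel][row][column]
Weights = List[List[float]]            # indexed as [out_channel][in_channel]


def leaky_relu(x: float, slope: float = 0.2) -> float:
    return x if x >= 0 else slope * x


def conv1x1(fmap: FeatureMap, weights: Weights) -> FeatureMap:
    """Mix channels independently at every pixel (a 1x1 convolution)."""
    height, width = len(fmap[0]), len(fmap[0][0])
    return [
        [[sum(w * fmap[c][i][j] for c, w in enumerate(row_w))
          for j in range(width)]
         for i in range(height)]
        for row_w in weights
    ]


def feature_layer(fmap: FeatureMap, weights: Weights) -> FeatureMap:
    """Convolution followed by LeakyRelu (style fusion omitted in this sketch)."""
    return [[[leaky_relu(v) for v in row] for row in ch]
            for ch in conv1x1(fmap, weights)]


def upsample2x(fmap: FeatureMap) -> FeatureMap:
    """Nearest-neighbour upsampling: every pixel becomes a 2x2 patch."""
    out = []
    for ch in fmap:
        rows = []
        for row in ch:
            wide = [v for v in row for _ in range(2)]
            rows += [wide, list(wide)]
        out.append(rows)
    return out


def run_generator(fmap: FeatureMap,
                  blocks: List[Tuple[Weights, Weights]]) -> List[FeatureMap]:
    """Chain generator blocks given as (feature_weights, color_weights) pairs.

    Returns each block's coloring-layer output. The features of one block
    are upsampled before they enter the next block.
    """
    color_outputs = []
    for index, (feature_w, color_w) in enumerate(blocks):
        fmap = feature_layer(fmap, feature_w)
        color_outputs.append(conv1x1(fmap, color_w))  # stand-in coloring layer
        if index < len(blocks) - 1:
            fmap = upsample2x(fmap)
    return color_outputs
```

With two blocks and a 2x2 input, the first coloring output is 2x2 and the second is 4x4, which shows how resolution doubles from block to block.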

Embodiment 3

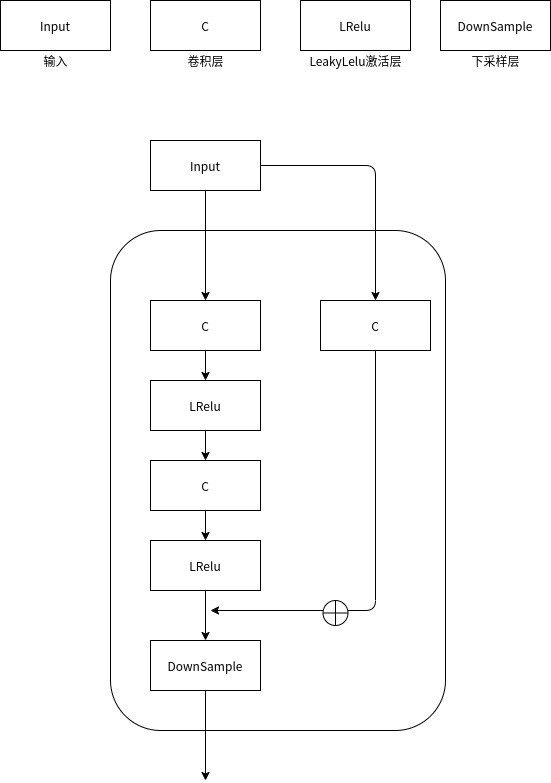

[0079] This embodiment is optimized on the basis of Embodiment 1 or 2. As shown in Figure 2, the discriminator includes discriminator blocks arranged in sequence from front to back, and each discriminator block includes several residual blocks arranged in sequence from front to back. Each residual block includes a residual convolution and a convolution block. The residual convolution extracts residual information from the input; the output of the convolution block is added to the output of the residual convolution, and the sum is input to the downsampling layer to obtain the output of the residual block.

[0080] Further, the convolution block consists of two pairs of a convolutional layer and a LeakyRelu activation layer, connected in sequence.
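A single residual block of the discriminator can be sketched as below. The sketch assumes the convolution block is two convolution + LeakyRelu pairs applied in sequence. It also assumes 1x1 convolutions, a LeakyRelu slope of 0.2, and 2x2 average pooling as the downsampling layer; the source names none of these details, and the function names are hypothetical.

```python
from typing import List

FeatureMap = List[List[List[float]]]   # indexed as [channel][row][column]
Weights = List[List[float]]            # indexed as [out_channel][in_channel]


def conv1x1(fmap: FeatureMap, weights: Weights) -> FeatureMap:
    """Mix channels independently at every pixel (a 1x1 convolution)."""
    height, width = len(fmap[0]), len(fmap[0][0])
    return [
        [[sum(w * fmap[c][i][j] for c, w in enumerate(row_w))
          for j in range(width)]
         for i in range(height)]
        for row_w in weights
    ]


def activate(fmap: FeatureMap, slope: float = 0.2) -> FeatureMap:
    """Element-wise LeakyRelu."""
    return [[[v if v >= 0 else slope * v for v in row] for row in ch]
            for ch in fmap]


def conv_block(fmap: FeatureMap, w1: Weights, w2: Weights) -> FeatureMap:
    """Two convolution + LeakyRelu pairs applied in sequence."""
    return activate(conv1x1(activate(conv1x1(fmap, w1)), w2))


def downsample2x(fmap: FeatureMap) -> FeatureMap:
    """2x2 average pooling (height and width are assumed to be even)."""
    return [
        [[(ch[i][j] + ch[i][j + 1] + ch[i + 1][j] + ch[i + 1][j + 1]) / 4.0
          for j in range(0, len(ch[0]) - 1, 2)]
         for i in range(0, len(ch) - 1, 2)]
        for ch in fmap
    ]


def residual_block(fmap: FeatureMap, residual_w: Weights,
                   w1: Weights, w2: Weights) -> FeatureMap:
    """Add the convolution-block output to the residual convolution, then downsample."""
    shortcut = conv1x1(fmap, residual_w)
    main = conv_block(fmap, w1, w2)
    summed = [
        [[a + b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(ch_a, ch_b)]
        for ch_a, ch_b in zip(main, shortcut)
    ]
    return downsample2x(summed)
```

The residual convolution must produce the same number of channels as the convolution block so that the two outputs can be added element by element.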

[0081] Other parts of this embodiment are the same as those of Embodiment 1 or 2 above, so details are not repeated here.
