Knowledge distillation method and device fusing channel and relation feature learning, and equipment

A knowledge distillation method, applied in the field of feature learning, that fuses channel feature learning and relational feature learning. It addresses the problems that existing approaches ignore relational feature knowledge, do not consider channel-related knowledge in convolutional layers, and therefore cannot effectively improve student network performance. The stated effect is improved performance of the student network.

Embodiment Construction

[0024] In order to make the objects, technical solutions, and advantages of the present disclosure clearer, the technical solutions of the present disclosure are described below with reference to the drawings of the embodiments of the present disclosure. Obviously, the described embodiments are only some of the embodiments of the present disclosure, not all of them. All other embodiments obtained on the basis of the embodiments described herein without creative labor fall within the scope of the present disclosure.

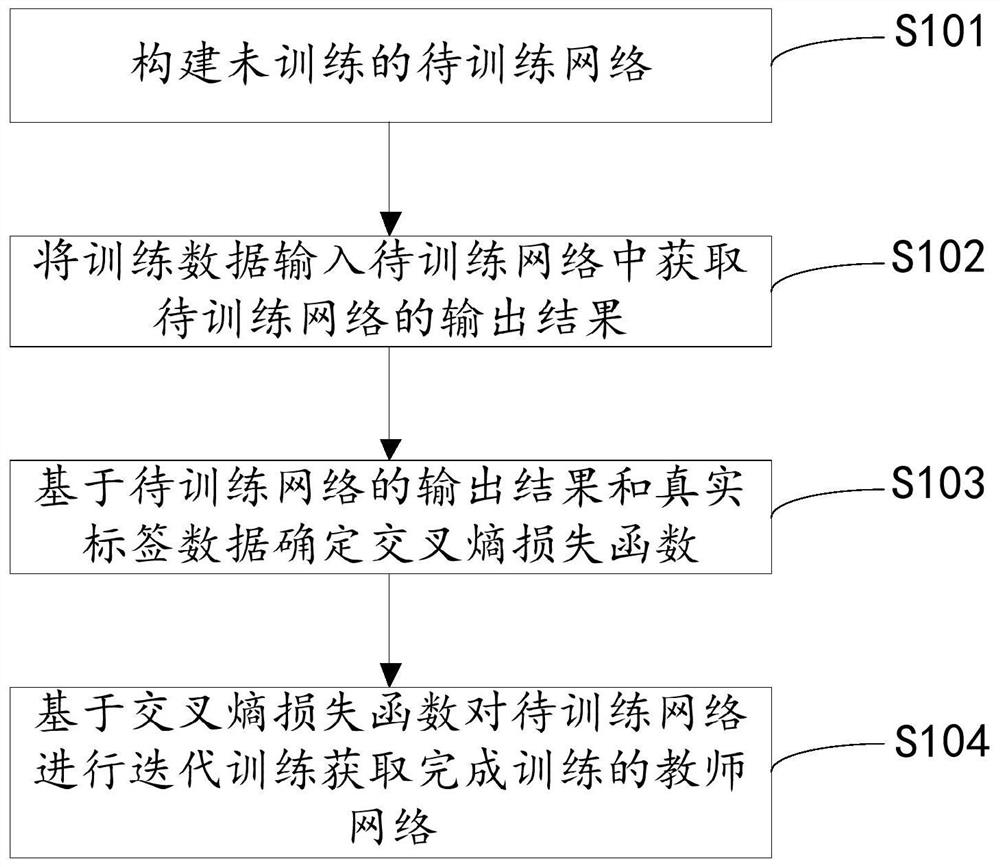

[0025] The purpose of this application is to provide a knowledge distillation method, device, and equipment fusing channel and relation feature learning. The method includes constructing an untrained student network and a pre-trained teacher network; inputting training data into the student network and the teacher network to obtain the output of the student network and the output of the teacher network, where the training data also includes the correspo...
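The step of obtaining both network outputs and comparing them can be illustrated with a minimal sketch. The text available here is truncated before any loss term is defined, so the sketch uses the generic temperature-softened soft-target loss from the knowledge distillation literature as a stand-in; it is not the loss of this application, and it does not model the channel or relation feature terms. The names `log_softmax`, `soft_target_loss`, `teacher_out`, `student_out`, and the temperature value are assumptions made for illustration.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Log of the softmax of `logits` after dividing by `temperature`."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    log_norm = peak + math.log(sum(math.exp(z - peak) for z in scaled))
    return [z - log_norm for z in scaled]


def soft_target_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    Assumption: this is the generic soft-target loss, used here only
    because the source does not specify its own loss terms.
    """
    log_p = log_softmax(teacher_logits, temperature)  # teacher
    log_q = log_softmax(student_logits, temperature)  # student
    kl = sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))
    return kl * temperature ** 2


# Hypothetical outputs of the two networks for one training sample.
teacher_out = [2.0, 0.5, -1.0]   # pre-trained teacher network
student_out = [1.0, 0.8, -0.2]   # untrained student network

loss = soft_target_loss(student_out, teacher_out)
```

In a sketch like this the loss is zero when the student reproduces the teacher's output exactly and grows as the two softened distributions diverge, which is the signal a student network would be trained to reduce.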
