Multi-modal sentiment classification method based on heterogeneous fusion network

A technology relating to fusion networks and emotion classification, applied in biological neural network models, neural learning methods, text database clustering/classification, etc. It addresses problems such as low classification accuracy and achieves the effect of improving accuracy.

Examples

Embodiment 1

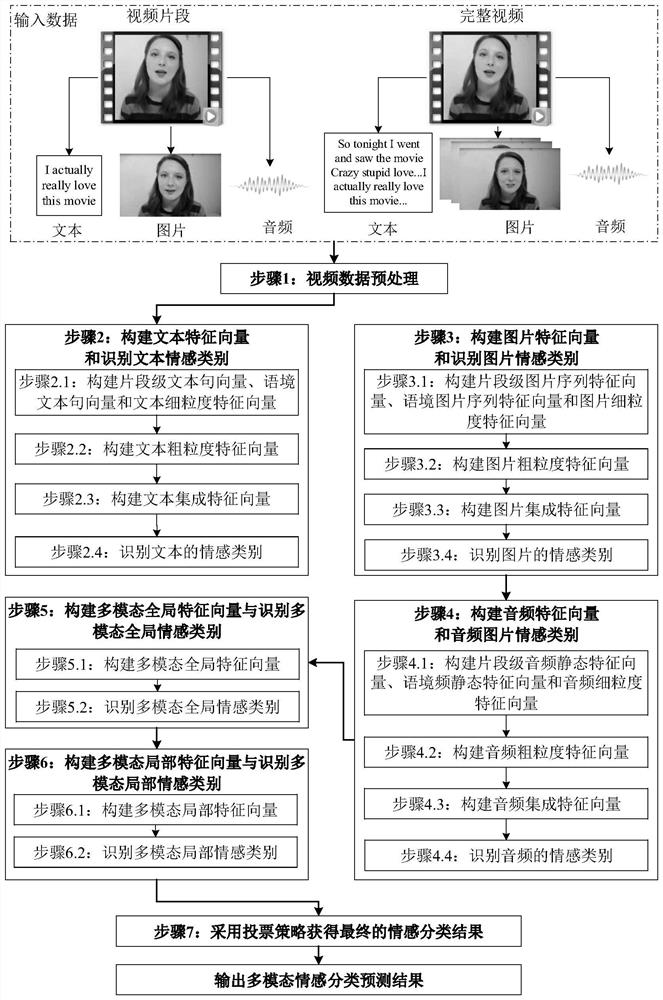

[0098] This embodiment describes the process of applying the heterogeneous fusion network-based multi-modal emotion classification method of the present invention, as shown in Figure 1. The input data comes from the video emotion classification dataset CMU-MOSI. Each sentiment label in the dataset is an element of {-3, -2, -1, 0, 1, 2, 3}, giving 7 classes in total, of which -3, -2 and -1 are negative and 0, 1, 2 and 3 are non-negative. The input data includes complete videos and video clips, from all of which data in three modalities (text, image and audio) are extracted.
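The label scheme above can be sketched as a small mapping, assuming integer labels exactly as stated in the embodiment. The helper names (`to_binary_polarity`, `to_class_index`) are hypothetical and only illustrate the 7-class and 2-class groupings described in the text; they are not taken from the patent.

```python
from typing import Iterable, List

# Sentiment labels as described in this embodiment: integers in {-3, ..., 3}.
VALID_LABELS = frozenset(range(-3, 4))


def to_binary_polarity(label: int) -> str:
    """Hypothetical helper: map a 7-class label to the two polarity groups in the text."""
    if label not in VALID_LABELS:
        raise ValueError(f"label {label} outside {{-3, ..., 3}}")
    # -3, -2, -1 are negative; 0, 1, 2, 3 are non-negative.
    return "negative" if label < 0 else "non-negative"


def to_class_index(label: int) -> int:
    """Hypothetical helper: shift {-3, ..., 3} to {0, ..., 6} for a 7-way classifier."""
    if label not in VALID_LABELS:
        raise ValueError(f"label {label} outside {{-3, ..., 3}}")
    return label + 3


def to_binary_batch(labels: Iterable[int]) -> List[str]:
    """Apply the polarity mapping to a sequence of labels."""
    return [to_binary_polarity(lab) for lab in labels]


if __name__ == "__main__":
    print(to_binary_batch([-3, -1, 0, 3]))
    print([to_class_index(lab) for lab in (-3, 0, 3)])
```

Shifting labels to non-negative indices is one common way to feed a 7-class target into a softmax classifier; the binary mapping follows the negative / non-negative split stated above.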

[0099] First, a deep-learning-based heterogeneous fusion network model is proposed. The model achieves data fusion in different forms, with different strategies, and from different angles: two forms of fusion between data of different modalities, two fusion strategies (feature-layer fusion and decision-layer fusion), and multi-modal global feature vectors cons...
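The difference between the two fusion strategies named above can be illustrated with a minimal sketch. This is not the patent's network: it swaps in a single linear layer plus sigmoid per classifier, and all function names, weights and feature values are hypothetical. Feature-layer fusion concatenates the text, image and audio feature vectors and classifies once; decision-layer fusion classifies each modality separately and averages the per-modality probabilities.

```python
import math
from typing import Dict, List, Optional, Sequence


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def linear_score(features: Sequence[float], weights: Sequence[float], bias: float = 0.0) -> float:
    """Dot product plus bias; stands in for a learned classifier layer (assumption)."""
    if len(features) != len(weights):
        raise ValueError("feature/weight length mismatch")
    return sum(f * w for f, w in zip(features, weights)) + bias


def feature_level_fusion(modal_feats: Dict[str, List[float]], order: Sequence[str],
                         weights: Sequence[float], bias: float = 0.0) -> float:
    """Concatenate modality features in a fixed order, then classify once."""
    fused = [x for m in order for x in modal_feats[m]]
    return sigmoid(linear_score(fused, weights, bias))


def decision_level_fusion(modal_feats: Dict[str, List[float]],
                          modal_weights: Dict[str, List[float]],
                          modal_bias: Optional[Dict[str, float]] = None) -> float:
    """Classify each modality separately, then average the per-modality probabilities."""
    probs = []
    for m, feats in modal_feats.items():
        b = 0.0 if modal_bias is None else modal_bias.get(m, 0.0)
        probs.append(sigmoid(linear_score(feats, modal_weights[m], b)))
    return sum(probs) / len(probs)


if __name__ == "__main__":
    # Hypothetical 2-dimensional features per modality.
    feats = {"text": [1.0, 0.0], "image": [0.0, 1.0], "audio": [0.5, 0.5]}
    order = ["text", "image", "audio"]
    p_feat = feature_level_fusion(feats, order, [2.0, 0.0, 0.0, 0.0, 0.0, 0.0])
    p_dec = decision_level_fusion(feats, {"text": [2.0, 0.0], "image": [0.0, 0.0], "audio": [0.0, 0.0]})
    print(round(p_feat, 4), round(p_dec, 4))
```

With the same text weights, feature-layer fusion lets the text signal dominate the single decision, while decision-layer averaging dilutes it with the two uninformative modality classifiers; a real model would learn these weights rather than fix them by hand.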
