Facial expression analysis method based on capsule network

A facial expression analysis technology, applied in the fields of neural network learning methods, biological neural network models, and the acquisition and recognition of facial features. It addresses problems such as the increased workload of manual labeling, the difficulty of emotion analysis on facial images, and noise interference in facial expressions. It improves classification performance, achieves high expression recognition accuracy, and is robust to noise.


Detailed Description of the Embodiments

[0046] The present invention is described in further detail below with reference to test examples and specific embodiments. However, the scope of the subject matter of the present invention should not be understood as limited to the following embodiments; all technologies realized based on the content of the present invention fall within the scope of the present invention.

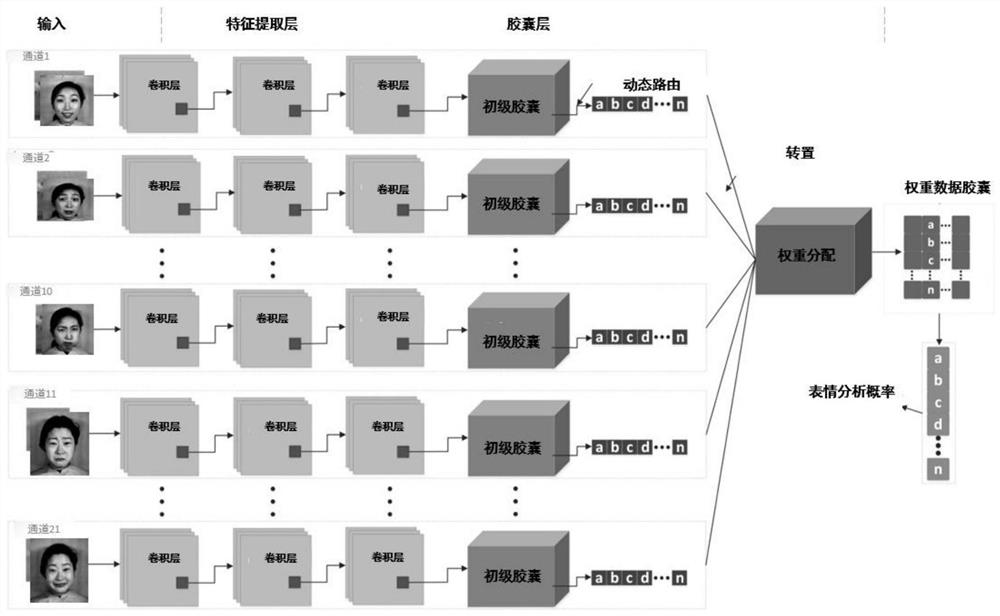

[0047] The implementation steps of the capsule-network-based method for emotion analysis of facial images provided by the present invention are described below; the neural network diagram is shown in Figure 1.

[0048] Figure 1 is a flowchart of the network model of the capsule-network-based facial image emotion analysis network of the present invention. In one example of the present invention, the model is first trained on the augmented data set, and all inputs have a size of 32×32×3. The input is passed through three convolutional layers to extract features, and the ...
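The excerpt breaks off after the convolutional feature extractor, so the capsule layers themselves are not described here. As a minimal sketch, assuming the network follows the standard capsule formulation of Sabour et al. (2017), which this excerpt does not confirm, the squash nonlinearity (which keeps a capsule vector's direction and maps its length into [0, 1)) and a length-based readout over class capsules can be written in plain Python. The function names `squash`, `length`, and `predict_expression`, and the sample capsule values, are hypothetical and for illustration only.

```python
import math
from typing import List, Sequence


def squash(s: Sequence[float], eps: float = 1e-9) -> List[float]:
    """Standard capsule squash: v = (|s|^2 / (1 + |s|^2)) * (s / |s|).

    Keeps the direction of s and maps its length into [0, 1).
    eps avoids division by zero for an all-zero input vector.
    """
    sq_norm = sum(x * x for x in s)
    norm = math.sqrt(sq_norm)
    scale = (sq_norm / (1.0 + sq_norm)) / (norm + eps)
    return [scale * x for x in s]


def length(v: Sequence[float]) -> float:
    """Euclidean length of a capsule output vector."""
    return math.sqrt(sum(x * x for x in v))


def predict_expression(class_capsules: Sequence[Sequence[float]]) -> int:
    """Hypothetical readout: index of the longest squashed class capsule.

    In the standard formulation, the length of each class capsule is read
    as the probability that the corresponding class (here, an expression)
    is present. This readout is an assumption, not taken from the patent.
    """
    lengths = [length(squash(c)) for c in class_capsules]
    return max(range(len(lengths)), key=lengths.__getitem__)


if __name__ == "__main__":
    # Three hypothetical 2-D class capsules; the second is the longest.
    caps = [[0.1, 0.2], [3.0, 4.0], [1.0, 0.0]]
    print(predict_expression(caps))  # 1
```

Because squash is monotonic in the vector norm, taking the argmax over squashed lengths gives the same class as the argmax over raw lengths; the squash step is kept only so the sketch mirrors how class-capsule outputs are usually produced.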

