Visual question answering method using an original feature injection network based on composite attention

A technique combining original features and attention, applied in the field of visual question answering, that addresses problems such as forgetting the edge information of the original image and ignoring the autocorrelation information of image regions.


Embodiment Construction

[0057] The accompanying drawings are for illustrative purposes only and should not be construed as limiting the patent.

[0058] The present invention will be further elaborated below in conjunction with the accompanying drawings and embodiments.

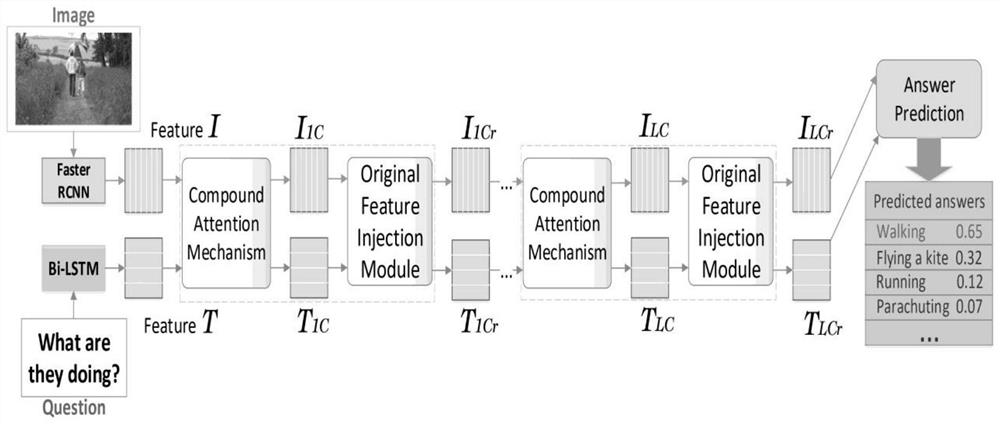

[0059] Figure 1 is a schematic diagram of the architecture of the original feature injection network based on composite attention. As shown in Figure 1, the overall visual question answering framework consists mainly of two parts: a composite attention mechanism and an original feature injection module.

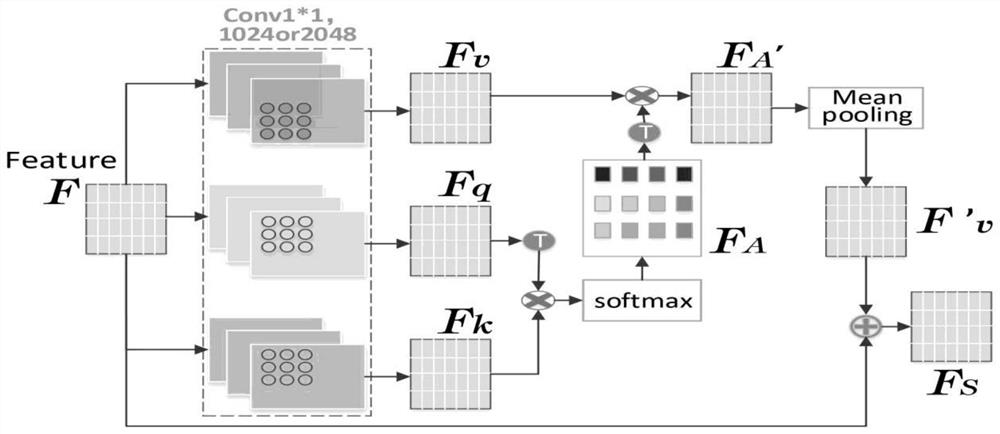

[0060] Figure 2 is a schematic diagram of the sensory feature enhancement module. As shown in Figure 2, given an input feature $F \in \mathbb{R}^{d \times K}$, three 1×1 convolution kernels generate $F_q$, $F_k$, and $F_v$, respectively.

[0061] $F_q = W_q F, \quad F_k = W_k F, \quad F_v = W_v F \qquad (1)$

[0063] where $W_q$, $W_k$, and $W_v$ are the weight matrices of the 1×1 convolution kernels, and $H = 2048$.
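The projection in equation (1) can be sketched in a few lines, since a 1×1 convolution over K region features amounts to the same matrix product applied to every column of F. This is a minimal illustration under stated assumptions, not the patent's implementation: the weight shape H×d is assumed (the source does not state it), the toy sizes replace the real H = 2048, and the helper names `matmul` and `project_qkv` are hypothetical.

```python
import random

def matmul(W, F):
    """Multiply an (H x d) matrix by a (d x K) matrix, both given as nested lists."""
    columns = list(zip(*F))  # the K columns of F, each of length d
    return [[sum(w * f for w, f in zip(row, col)) for col in columns] for row in W]

def project_qkv(F, W_q, W_k, W_v):
    """Equation (1): F_q = W_q F, F_k = W_k F, F_v = W_v F."""
    return matmul(W_q, F), matmul(W_k, F), matmul(W_v, F)

# Toy sizes for illustration only; the source gives H = 2048 and leaves d, K open.
random.seed(0)
d, K, H = 3, 4, 2

def random_matrix(rows, cols):
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

F = random_matrix(d, K)            # input feature, one column per image region
W_q = random_matrix(H, d)          # assumed shape H x d
W_k = random_matrix(H, d)
W_v = random_matrix(H, d)

F_q, F_k, F_v = project_qkv(F, W_q, W_k, W_v)
print(len(F_q), len(F_q[0]))       # rows and columns of F_q
```

Each output has one column per input region, so the three projections keep the K regions aligned, which is what allows $F_q$ and $F_k$ to be compared region by region in the next step.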

[0064] The attention $F_A$ of $F$ is computed from $F_q$ and $F_k$.

[0065]...
