Real-time depth completion method based on pseudo depth map guidance

A real-time depth completion method guided by a pseudo depth map, applied in the field of depth map technology. It addresses problems of existing approaches, such as the need for additional annotated data for pre-trained models, increased computing resources, and longer running time per single-frame depth map, and achieves high robustness, improved accuracy, and well-structured depth boundaries.


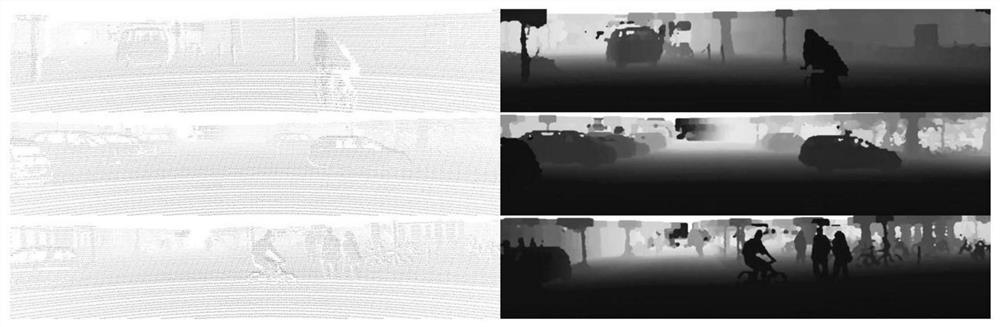


Embodiment Construction

[0086] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

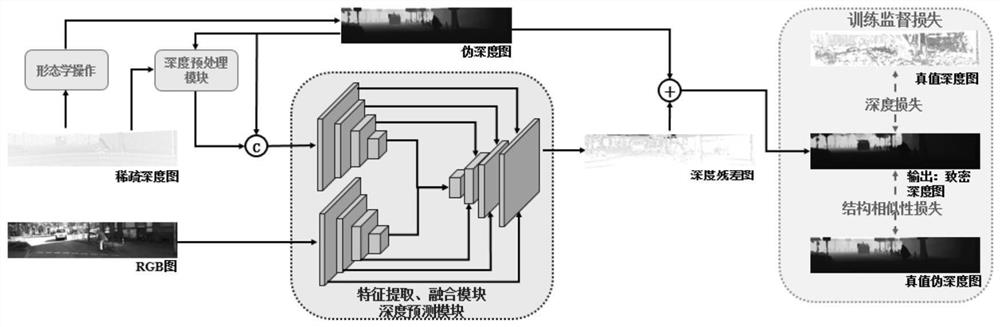

[0087] As shown in the flow chart of Figure 1, an embodiment implemented according to the complete method of the present invention, and its implementation process, are as follows:

[0088] Taking the KITTI Depth Completion data set as the known data set and the completion of a sparse depth map as an example, the idea and specific implementation steps of depth completion guided by a pseudo depth map are described.

[0089] Both the sparse depth maps and the ground-truth depth maps in the embodiment come from the KITTI Depth Completion data set.
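For illustration, the sketch below shows how raw KITTI depth values are commonly converted to metres. KITTI Depth Completion stores depth maps as 16-bit PNG images in which the stored value is the depth in metres multiplied by 256 and a value of 0 marks a pixel without a measurement. The function names `decode_kitti_depth` and `valid_ratio` are hypothetical and are not taken from the patent; the raw values are assumed to be already read into nested lists so the example stays dependency-free.

```python
from typing import List, Optional

KITTI_DEPTH_SCALE = 256.0  # stored value = depth in metres * 256


def decode_kitti_depth(raw: List[List[int]]) -> List[List[Optional[float]]]:
    """Convert raw 16-bit values to metres; 0 means 'no measurement' (None)."""
    return [
        [value / KITTI_DEPTH_SCALE if value > 0 else None for value in row]
        for row in raw
    ]


def valid_ratio(depth: List[List[Optional[float]]]) -> float:
    """Fraction of pixels that carry a depth measurement."""
    total = sum(len(row) for row in depth)
    valid = sum(1 for row in depth for d in row if d is not None)
    return valid / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical 2x2 patch of raw values (illustrative only).
    raw_patch = [[0, 256], [512, 0]]
    depth_patch = decode_kitti_depth(raw_patch)
    print(depth_patch)
    print(valid_ratio(depth_patch))
```

The same decoding applies to both the sparse input maps and the ground-truth maps; they differ only in how many pixels carry a valid value.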

[0090] Step 1: The KITTI Depth Completion data set is divided according to its known split: the training set contains 138 sequences, and the validation set contains 1000 images extracted from 13 sequences. There is no intersection between the training set and the validation set...
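The no-intersection property of the split in Step 1 can be checked at the sequence level. The following is a minimal sketch, assuming each split is represented as a mapping from sequence name to a list of frame identifiers; the sequence names and the helper names `overlapping_sequences` and `frame_count` are hypothetical placeholders, not part of the patent or of any KITTI tooling.

```python
from typing import Dict, List, Set

Split = Dict[str, List[str]]  # sequence name -> frame identifiers


def overlapping_sequences(train: Split, val: Split) -> Set[str]:
    """Return the sequence names that appear in both splits."""
    return set(train) & set(val)


def frame_count(split: Split) -> int:
    """Total number of frames across all sequences of a split."""
    return sum(len(frames) for frames in split.values())


if __name__ == "__main__":
    # Hypothetical toy splits; the real split has 138 training sequences
    # and 1000 validation images drawn from 13 sequences.
    train_split = {"seq_a": ["0000", "0001"], "seq_b": ["0000"]}
    val_split = {"seq_c": ["0005"]}

    overlap = overlapping_sequences(train_split, val_split)
    assert not overlap, f"splits share sequences: {sorted(overlap)}"
    print(frame_count(train_split), frame_count(val_split))
```

Checking at the sequence level is stricter than checking individual frames, since neighbouring frames of one driving sequence are highly similar.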
