Adjustable hardware-aware pruning and mapping framework based on ReRAM neural network accelerator

A neural network pruning and mapping framework, applied in the field of artificial intelligence within computer science. It addresses the inefficient mapping of models onto hardware, which limits how fully the performance advantages of ReRAM neural network accelerators can be realized.


Embodiment Construction

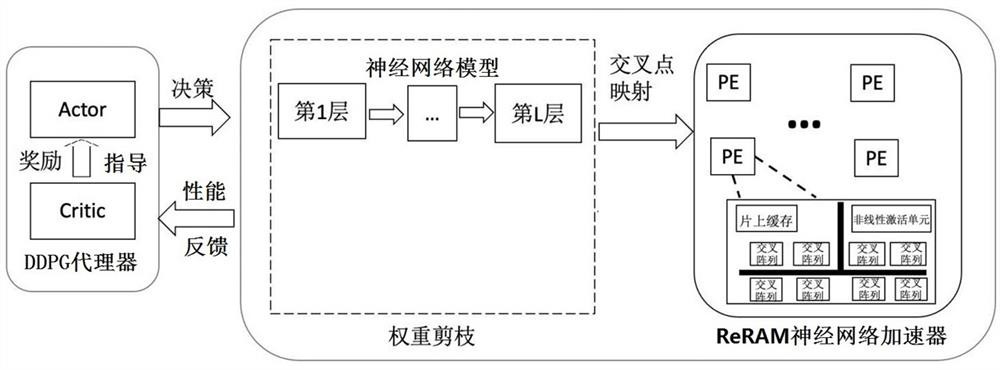

[0021] Figure 1 is the overall structural diagram of the hardware-aware pruning and mapping framework of the present invention. As shown in the figure, the framework includes a DDPG agent and a ReRAM neural network accelerator. The ReRAM neural network accelerator includes multiple processing units (Processing Elements, PEs). Each processing unit consists of crossbar arrays built from multiple ReRAM cells, an on-chip cache, a nonlinear activation processing unit, an analog-to-digital converter, and other peripheral circuits (only the crossbar array, on-chip cache, and nonlinear activation processing unit are shown in the figure). The DDPG agent consists of an Actor, the behavior decision module, and a Critic, the evaluation module. The whole pruning and mapping framework has two levels. In the first level, the Actor of the DDPG agent makes a pruning decision on the neural network model layer by layer, from the first layer to the last, according to the hardware feed...
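The first level described above, in which a decision module walks the model layer by layer and chooses how much to prune based on hardware information, can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and is not taken from the patent: the 128x128 crossbar size, the choice of pruning whole output columns, the use of crossbar count as the hardware signal, and the `placeholder_actor` function, which is a fixed rule standing in for a trained DDPG Actor. The names `Layer`, `crossbars_needed`, and `prune_layer_by_layer` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    rows: int  # rows of the unrolled weight matrix (inputs)
    cols: int  # columns of the unrolled weight matrix (outputs)


def crossbars_needed(rows, cols, xbar_size=128):
    """Count the xbar_size x xbar_size crossbars needed to hold a rows x cols matrix.

    Assumption: one weight per ReRAM cell and simple tiling, with no bit-slicing.
    """
    return math.ceil(rows / xbar_size) * math.ceil(cols / xbar_size)


def placeholder_actor(state):
    """Stand-in for the DDPG Actor (NOT a trained policy).

    Takes a (layer_index, crossbar_count) state and returns a pruning ratio.
    The rule below is arbitrary: prune more when a layer occupies more crossbars.
    """
    _layer_index, xbars = state
    return min(0.8, 0.1 * xbars)


def prune_layer_by_layer(layers, actor, xbar_size=128):
    """Visit layers first to last and record one pruning decision per layer."""
    decisions = []
    for i, layer in enumerate(layers):
        before = crossbars_needed(layer.rows, layer.cols, xbar_size)
        ratio = actor((i, before))
        # Assumption: structured pruning that removes whole output columns.
        kept_cols = max(1, round(layer.cols * (1.0 - ratio)))
        after = crossbars_needed(layer.rows, kept_cols, xbar_size)
        decisions.append((layer.name, ratio, before, after))
    return decisions


if __name__ == "__main__":
    model = [Layer("conv1", 27, 64), Layer("fc", 512, 1000)]
    for name, ratio, before, after in prune_layer_by_layer(model, placeholder_actor):
        print(f"{name}: ratio={ratio:.2f}, crossbars {before} -> {after}")
```

In a real DDPG setup the Actor would be a neural network trained with feedback from the Critic, and the state would carry whatever hardware feedback the accelerator reports. The sketch only shows the control flow of a per-layer decision loop.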
