A template matching tracking method and system based on deep feature fusion

This technology combines template matching with deep features and is applied in the field of target tracking. It addresses problems of existing trackers such as insufficient robustness, lack of real-time performance, and susceptibility to drift, and it improves robustness while suppressing jitter and drift.


Embodiment Construction

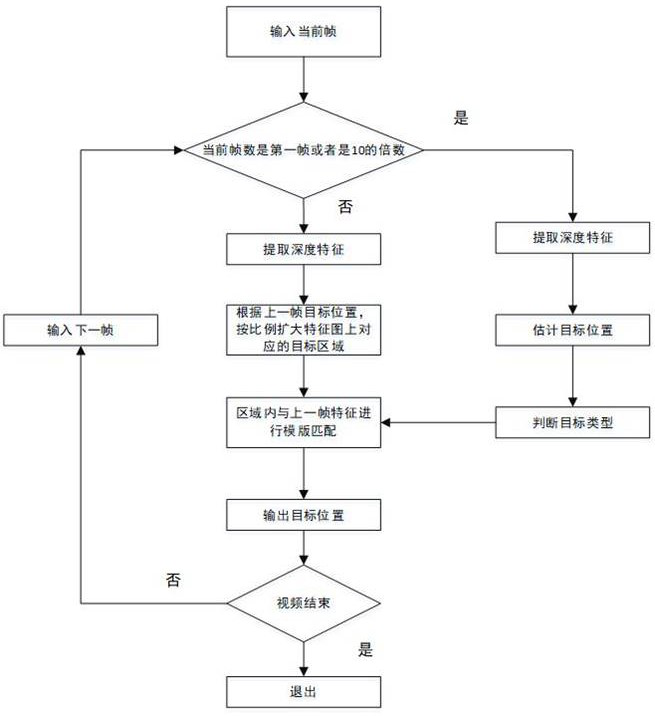

[0042] The present invention achieves target tracking through a template matching tracking method based on deep feature fusion and a system that implements the method. The scheme is described in further detail below through embodiments, in conjunction with the accompanying drawings.

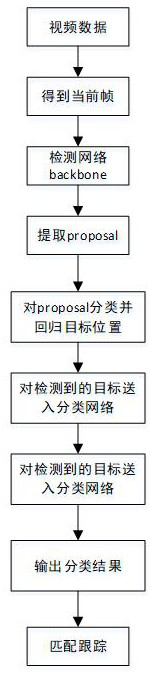

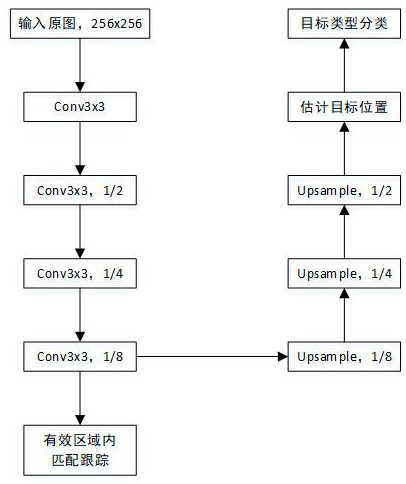

[0043] This application proposes a template matching tracking method based on deep feature fusion and a system for implementing the method. Figure 1 shows the flowchart of the method, which is divided into the following steps:

[0044] Step 1: Obtain video data and input the first frame image of the video into the deep convolutional network. This step also preprocesses the acquired video data; specifically, the image to be input into the deep convolutional network is resized to the acceptable size of the pro...
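The resizing described in Step 1 can be illustrated with a minimal, dependency-free sketch. It assumes a grayscale frame stored as nested lists and uses nearest-neighbour sampling. The function names `resize_nearest` and `first_frame_for_network` and the target size are hypothetical and are not taken from the patent text, which does not state the interpolation method or the network's input size.

```python
from typing import Iterable, List, Sequence

Frame = List[List[int]]  # grayscale image: rows of pixel intensities


def resize_nearest(frame: Sequence[Sequence[int]], out_h: int, out_w: int) -> Frame:
    """Resize a 2-D frame to (out_h, out_w) with nearest-neighbour sampling.

    Assumption: nearest-neighbour is used only for simplicity; the source
    does not say which interpolation the method uses.
    """
    in_h = len(frame)
    in_w = len(frame[0]) if in_h else 0
    if in_h == 0 or in_w == 0:
        raise ValueError("frame must be non-empty")
    if out_h <= 0 or out_w <= 0:
        raise ValueError("target size must be positive")

    resized: Frame = []
    for y in range(out_h):
        # Map each output row back to the source row it samples from.
        src_row = frame[min(in_h - 1, y * in_h // out_h)]
        resized.append(
            [src_row[min(in_w - 1, x * in_w // out_w)] for x in range(out_w)]
        )
    return resized


def first_frame_for_network(
    video: Iterable[Sequence[Sequence[int]]], net_h: int, net_w: int
) -> Frame:
    """Take the first frame of a video and resize it to the network input size.

    net_h and net_w are hypothetical parameters standing in for whatever
    input size the deep convolutional network accepts.
    """
    first = next(iter(video))
    return resize_nearest(first, net_h, net_w)
```

For example, `first_frame_for_network(frames, 224, 224)` would prepare the first frame for a network that expects 224 × 224 inputs; that size is an illustrative assumption. In practice an image library routine with bilinear or bicubic interpolation would usually replace the hand-written loop.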
