Image saliency target detection method and system based on multi-depth feature fusion

A feature fusion and target detection technology, applied in instruments, biological neural network models, computing, etc. It addresses problems such as small targets to be detected and unclear target contours, which degrade the accuracy of detection results and of subsequent processing operations. It achieves high precision and a speed-up that meet both accuracy and real-time requirements.



Examples

Embodiment 1

[0039] The salient target detection method of the present invention can be applied to fields such as medical image segmentation, intelligent photography, image retrieval, virtual backgrounds, and intelligent unmanned systems.

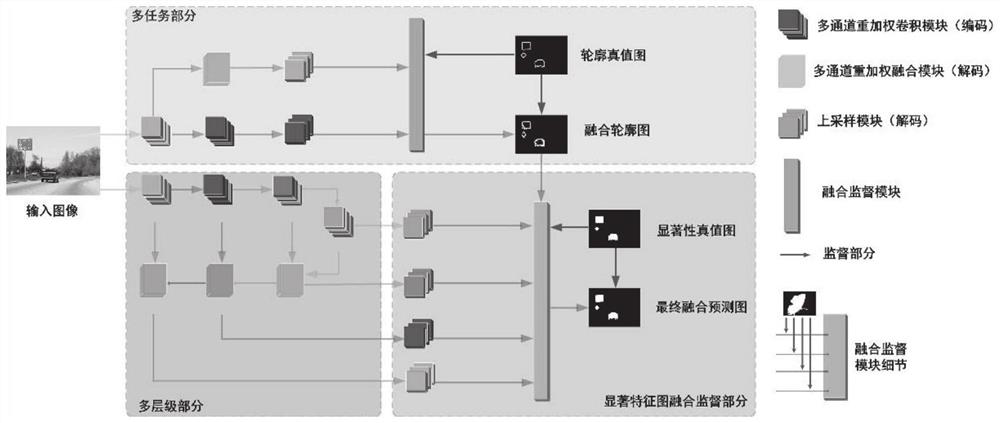

[0040] In this embodiment, the multi-depth feature fusion neural network refers to a saliency detection neural network that integrates multi-level, multi-task, and multi-channel deep features.
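Paragraph [0040] does not say how the multi-level and multi-channel features are integrated. A common choice in encoder-decoder saliency networks is to concatenate feature maps of equal spatial size along the channel axis and mix them with a 1×1 convolution, and the minimal sketch below assumes that scheme. The helper names `concat_channels` and `conv1x1`, the nested-list (channels × rows × columns) layout, and the fixed weights are illustrative assumptions, not the patent's implementation. In a trained network the 1×1 weights would be learned, and the multi-task aspect is not modelled here.

```python
from typing import List

# Assumed layout: a feature map is nested lists, channels x rows x columns (C x H x W).
FeatureMap = List[List[List[float]]]


def concat_channels(*maps: FeatureMap) -> FeatureMap:
    """Stack feature maps that share the same H x W along the channel axis."""
    sizes = {(len(m[0]), len(m[0][0])) for m in maps}
    if len(sizes) != 1:
        raise ValueError("all feature maps must have the same spatial size")
    stacked: FeatureMap = []
    for m in maps:
        stacked.extend(m)  # append this map's channels after the previous ones
    return stacked


def conv1x1(fmap: FeatureMap, weights: List[float], bias: float = 0.0) -> List[List[float]]:
    """Hypothetical 1x1 convolution with one output channel:
    out[y][x] = bias + sum over c of weights[c] * fmap[c][y][x]."""
    if len(weights) != len(fmap):
        raise ValueError("expected exactly one weight per input channel")
    h, w = len(fmap[0]), len(fmap[0][0])
    return [
        [bias + sum(wc * ch[y][x] for wc, ch in zip(weights, fmap)) for x in range(w)]
        for y in range(h)
    ]


if __name__ == "__main__":
    shallow = [[[1.0, 0.0], [0.0, 1.0]]]                         # 1 channel, 2x2
    deep = [[[0.5, 0.5], [0.5, 0.5]], [[0.0, 2.0], [2.0, 0.0]]]  # 2 channels, 2x2
    fused = conv1x1(concat_channels(shallow, deep), weights=[1.0, 2.0, 0.5])
    print(fused)  # [[2.0, 2.0], [2.0, 2.0]]
```

Because a 1×1 convolution only mixes channels at each pixel, it leaves the spatial resolution unchanged, which is why the inputs must first be brought to a common size.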

[0041] In this embodiment, an autonomous driving scenario is taken as an example to describe the method of the present invention in detail:

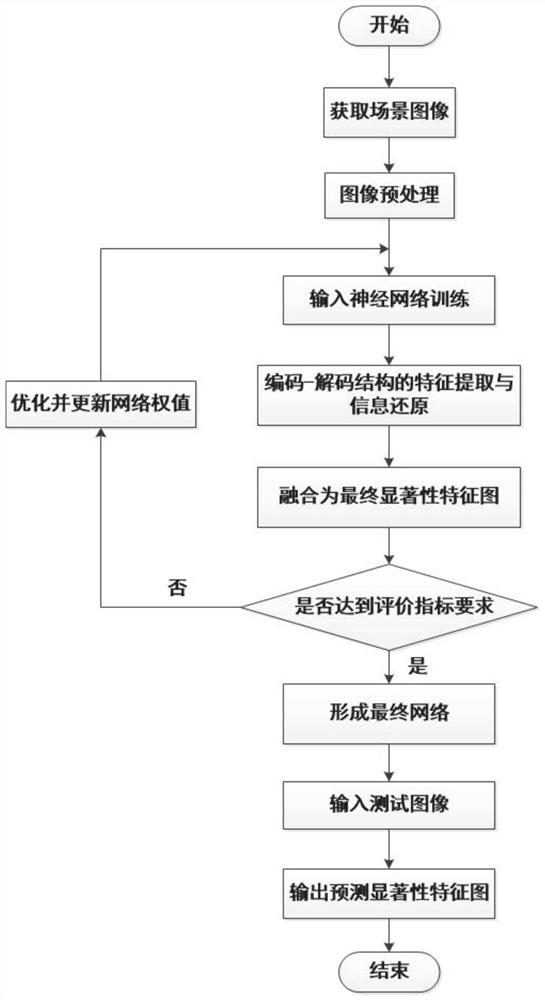

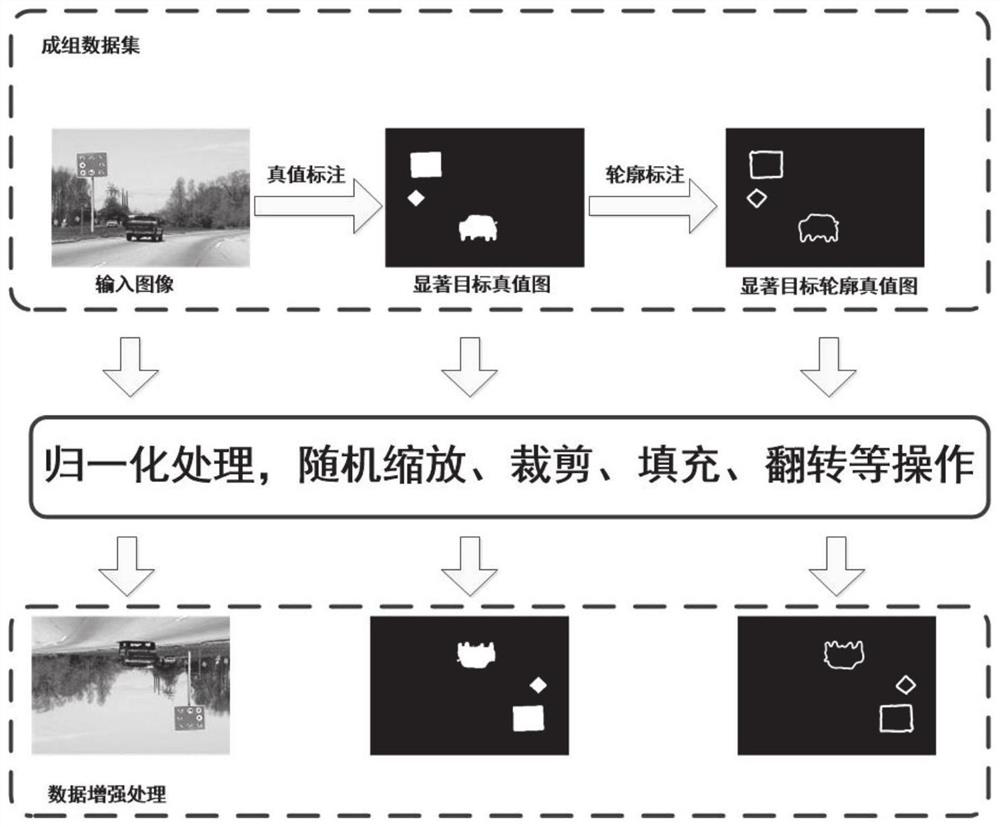

[0042] Referring to Figure 1, an image saliency target detection method based on multi-depth feature fusion includes:

[0043] Obtain the image information to be detected in the set scene;

[0044] Input the image information into the trained multi-depth feature fusion neural network model;

[0045] The multi-depth feature fusion neural network model uses convolution for feature extraction in the encoding stage, and combines the upsampling method of convo...
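Paragraph [0045] is truncated, but [0087] states that the encoder extracts features with convolution and that the decoder combines convolution with bilinear-interpolation up-sampling to restore the input resolution. The single-channel sketch below illustrates one plausible reading: a stride-2 convolution halves the resolution while encoding, and a decoder step doubles it again by bilinear interpolation followed by a convolution. The stride-2 downsampling, the align-corners interpolation convention, and the helper names `conv2d`, `bilinear_resize` and `decoder_step` are assumptions for illustration. The patent may, for example, downsample by pooling or use learned transposed convolutions alongside interpolation.

```python
from typing import List

Grid = List[List[float]]  # one single-channel feature map, rows x columns


def conv2d(img: Grid, kernel: Grid, stride: int = 1) -> Grid:
    """Single-channel 2-D convolution (cross-correlation, as CNN layers compute it)
    with zero 'same' padding for an odd-sized kernel and an optional stride."""
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    h, w = len(img), len(img[0])

    def px(y: int, x: int) -> float:
        # Pixels outside the image are treated as zero (zero padding).
        return img[y][x] if 0 <= y < h and 0 <= x < w else 0.0

    return [
        [
            sum(kernel[i][j] * px(y + i - ph, x + j - pw)
                for i in range(kh) for j in range(kw))
            for x in range(0, w, stride)
        ]
        for y in range(0, h, stride)
    ]


def bilinear_resize(img: Grid, out_h: int, out_w: int) -> Grid:
    """Bilinear interpolation; corner pixels of input and output are aligned."""
    h, w = len(img), len(img[0])
    out: Grid = []
    for oy in range(out_h):
        sy = oy * (h - 1) / (out_h - 1) if out_h > 1 else 0.0  # source row coordinate
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        dy = sy - y0
        row = []
        for ox in range(out_w):
            sx = ox * (w - 1) / (out_w - 1) if out_w > 1 else 0.0  # source column coordinate
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            dx = sx - x0
            top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx
            bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


def decoder_step(feat: Grid, kernel: Grid) -> Grid:
    """Hypothetical decoder stage: 2x bilinear up-sampling, then a convolution."""
    return conv2d(bilinear_resize(feat, 2 * len(feat), 2 * len(feat[0])), kernel)


if __name__ == "__main__":
    image = [[float(4 * r + c) for c in range(4)] for r in range(4)]  # toy 4x4 input
    identity = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
    encoded = conv2d(image, identity, stride=2)  # encoding: 4x4 -> 2x2
    print(encoded)                               # [[0.0, 2.0], [8.0, 10.0]]
    restored = decoder_step(encoded, identity)   # decoding: 2x2 -> 4x4
    print(len(restored), len(restored[0]))       # 4 4
```

Interpolating first and convolving afterwards lets the convolution smooth or sharpen the interpolated map; with the identity kernel used here the decoder output is simply the bilinear estimate.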

Embodiment 2

[0084] In one or more embodiments, a multi-depth feature fusion image salient target detection system is disclosed, including:

[0085] A device for acquiring the image information to be detected in the set scene;

[0086] A device for inputting the image information into the trained multi-depth feature fusion neural network model;

[0087] A device in which the multi-depth feature fusion neural network model uses convolution for feature extraction in the encoding stage and, in the decoding stage, combines convolution with bilinear-interpolation up-sampling to restore the information of the input image and output a feature map carrying saliency information;

[0088] A device for learning feature maps of different levels with a multi-level network and merging the feature maps of different levels;

[0089] A device for outputting the final salient target detection result.
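The devices in [0088] and [0089] merge feature maps from different levels and output the final detection result, but the source does not give the merge operator or the output rule. The minimal sketch below assumes each level emits a single-channel saliency logit map at a power-of-two fraction of the output size; the maps are brought to full size by nearest-neighbour up-sampling (a simplification, not the bilinear method named in [0087]), combined by a fixed weighted sum, passed through a sigmoid, and thresholded at 0.5. The helpers `upsample_nearest`, `fuse_levels`, `sigmoid` and `saliency_mask`, the weights, and the threshold are all hypothetical.

```python
import math
from typing import List, Sequence

Grid = List[List[float]]


def upsample_nearest(grid: Grid, factor: int) -> Grid:
    """Integer-factor nearest-neighbour up-sampling: each value becomes a factor x factor block."""
    return [[v for v in row for _ in range(factor)] for row in grid for _ in range(factor)]


def fuse_levels(side_outputs: Sequence[Grid], weights: Sequence[float], size: int) -> Grid:
    """Bring each level's square logit map to size x size and take a weighted sum (assumed rule)."""
    if len(side_outputs) != len(weights):
        raise ValueError("need one weight per level")
    fused = [[0.0] * size for _ in range(size)]
    for grid, wgt in zip(side_outputs, weights):
        factor = size // len(grid)
        if factor * len(grid) != size:
            raise ValueError("each level's size must divide the output size")
        up = upsample_nearest(grid, factor)
        for y in range(size):
            for x in range(size):
                fused[y][x] += wgt * up[y][x]
    return fused


def sigmoid(v: float) -> float:
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    z = math.exp(v)
    return z / (1.0 + z)


def saliency_mask(logits: Grid, threshold: float = 0.5) -> List[List[int]]:
    """Per-pixel saliency probability via sigmoid, then a 0/1 mask (1 = salient)."""
    return [[1 if sigmoid(v) >= threshold else 0 for v in row] for row in logits]


if __name__ == "__main__":
    fine = [[2.0, -2.0, -2.0, -2.0],
            [2.0, 2.0, -2.0, -2.0],
            [-2.0, -2.0, -2.0, -2.0],
            [-2.0, -2.0, -2.0, -2.0]]        # shallow level, 4x4
    coarse = [[1.0, -1.0], [-1.0, -1.0]]    # deep level, 2x2
    logits = fuse_levels([fine, coarse], weights=[0.5, 0.5], size=4)
    for row in saliency_mask(logits):
        print(row)  # [1, 0, 0, 0] / [1, 1, 0, 0] / [0, 0, 0, 0] / [0, 0, 0, 0]
```

In this toy case the coarse level alone would mark the whole top-left quadrant as salient, while the fine level sharpens the boundary, which illustrates why merging levels can help with unclear target contours.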

[0090] It should be noted that the specific working mode of the above-mentioned device is implemented by the method disclosed in Embodime...

Embodiment 3

[0092] In one or more embodiments, a terminal device is disclosed, including a server. The server includes a memory, a processor, and a computer program stored in the memory and executable on the processor; when executing the program, the processor implements the multi-depth feature fusion image saliency target detection method of Embodiment 1. For brevity, the details are not repeated here.

© 2025 PatSnap. All rights reserved.