Chinese text abstract generation method based on sequence-to-sequence model

A Chinese text summary generation method based on a sequence-to-sequence model. It addresses problems such as loss of important information, long training time, and semantic incoherence, with the effects of enhancing encoding ability, improving accuracy, and avoiding an oversized vocabulary.

Embodiment Construction

[0049] The present invention is further described below in conjunction with a specific embodiment.

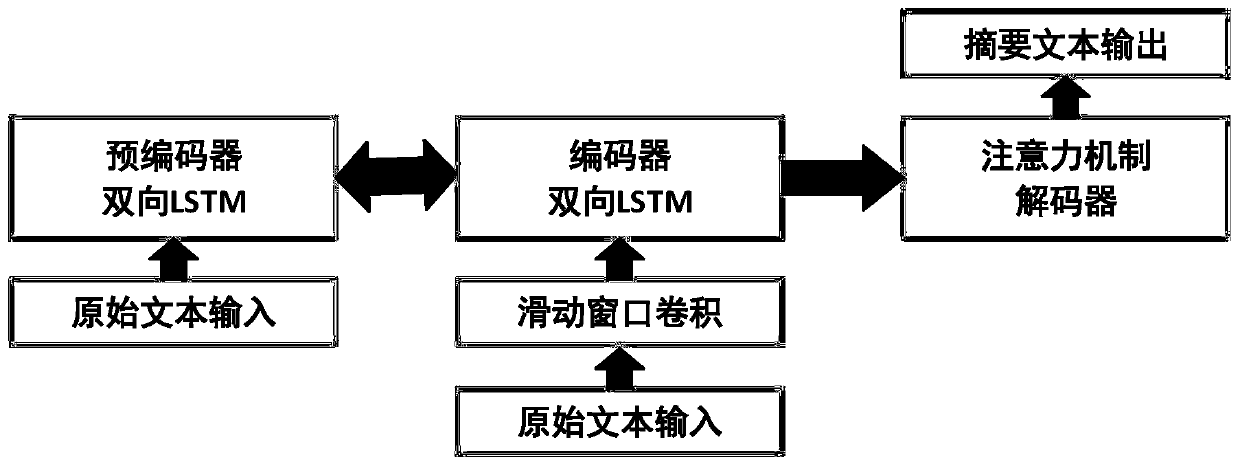

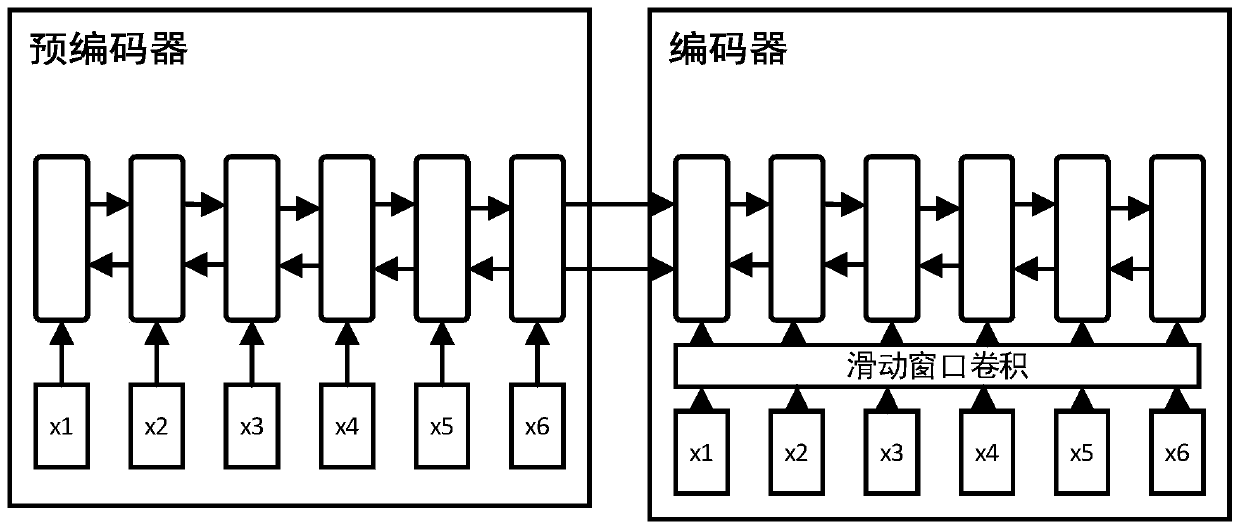

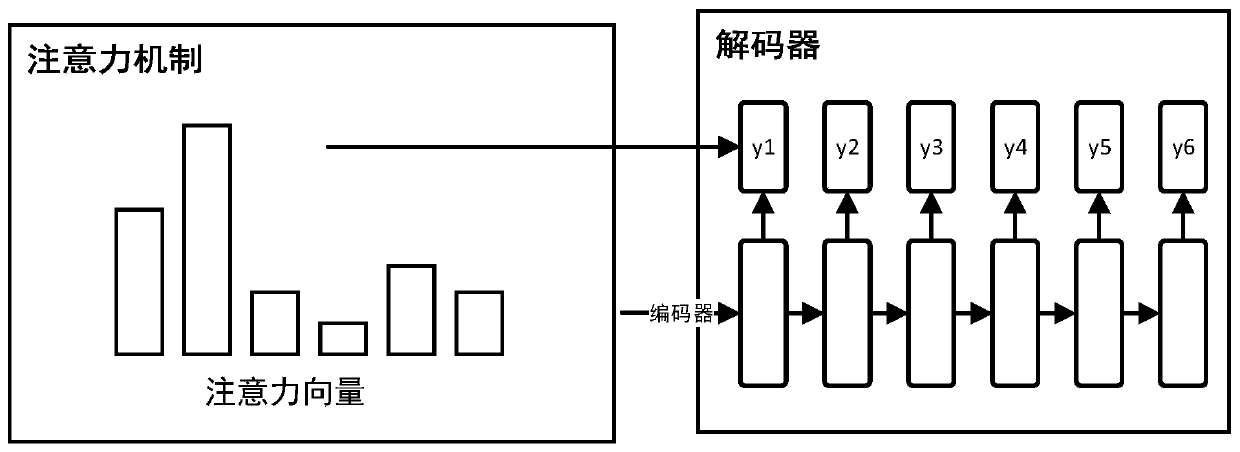

[0050] The method for generating a Chinese text abstract based on the sequence-to-sequence model provided by this embodiment includes the following steps:

[0051] 1) After the original text and the abstract text are separated in the large-scale Chinese microblog data, both are segmented character by character, except that English words and numbers are kept whole. Each is then padded to a fixed length: 150 for the original text and 30 for the summary text. The two are paired one-to-one as training samples. A vocabulary is constructed from the resulting data. The dimension of the vocabulary's embedding vectors is determined first (256 in this method); the vectors are then randomly initialized from a Gaussian distribution and set to be trainable. The summary text is then represented, according to the vocabulary, as one-hot vecto...
