Real-time multi-modal language analysis system and method based on mobile edge intelligence

A language analysis and multi-modal technology, applied in the field of real-time multi-modal language analysis systems, that addresses problems such as difficulty of implementation, high energy consumption, and the difficulty of performing multi-modal language analysis in real time.

Embodiment Construction

[0085] The present invention is further described below in conjunction with specific embodiments and the accompanying drawings:

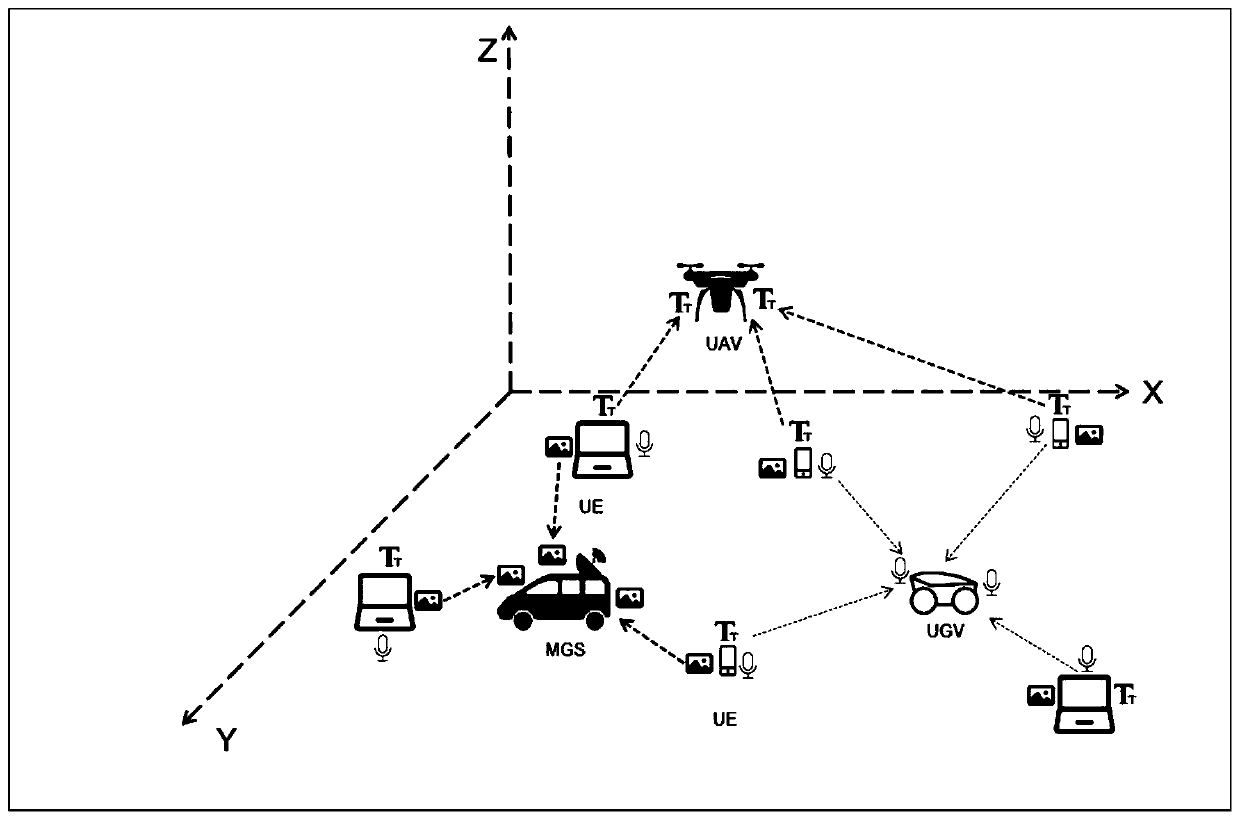

[0086] As shown in Figure 1, the multimodal language analysis system of the present invention comprises three kinds of MEI servers: UAV, UGV and MGS. Each mobile terminal, such as a mobile phone or notebook, carries various single-modal or multimodal tasks. According to our method, each of these tasks is offloaded to a UAV, UGV or MGS for execution. The computing resources of the three decrease in turn, while their flexibility of movement increases in turn, which enables real-time and efficient multi-modal language analysis.

[0087] This solution divides the user's language data into three modalities (text, voice and image) and assigns each computing task to an appropriate MEI server for execution, according to the difficulty of the computation and analysis and the amount of computing resources required.
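The assignment idea in [0087] can be illustrated with a minimal sketch. This excerpt does not state the actual assignment rule, so the greedy best-fit rule below is an assumption chosen only for illustration, as are the names `Task`, `Server`, `assign_tasks`, the task names, and all numeric values. The capacities are placeholders whose ordering simply follows the statement in [0086] that computing resources decrease from UAV to UGV to MGS. The modality is carried as a label only; the hypothetical `demand` field stands in for the combined difficulty and resource requirement.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    modality: str   # "text", "voice" or "image" (label only in this sketch)
    demand: float   # hypothetical compute units the task requires


@dataclass
class Server:
    name: str
    capacity: float  # hypothetical compute units still available


def assign_tasks(tasks, servers):
    """Assumed greedy best-fit rule, not the patent's method.

    Tasks are handled from most to least demanding, and each one goes to the
    server with the least remaining capacity that can still host it.
    """
    assignment = {}
    for task in sorted(tasks, key=lambda t: t.demand, reverse=True):
        candidates = [s for s in servers if s.capacity >= task.demand]
        if not candidates:
            assignment[task.name] = None  # no server can host this task now
            continue
        best = min(candidates, key=lambda s: s.capacity)
        best.capacity -= task.demand      # reserve the resources
        assignment[task.name] = best.name
    return assignment


# Placeholder values, not taken from the patent.
servers = [Server("UAV", 10.0), Server("UGV", 6.0), Server("MGS", 3.0)]
tasks = [
    Task("caption", "image", 5.0),
    Task("transcribe", "voice", 3.0),
    Task("sentiment", "text", 1.0),
]
result = assign_tasks(tasks, servers)
print(result)
```

Best-fit keeps the largest server free for later, heavier tasks. A real scheduler for this setting would presumably also weigh latency, energy, and server mobility, none of which this sketch models.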

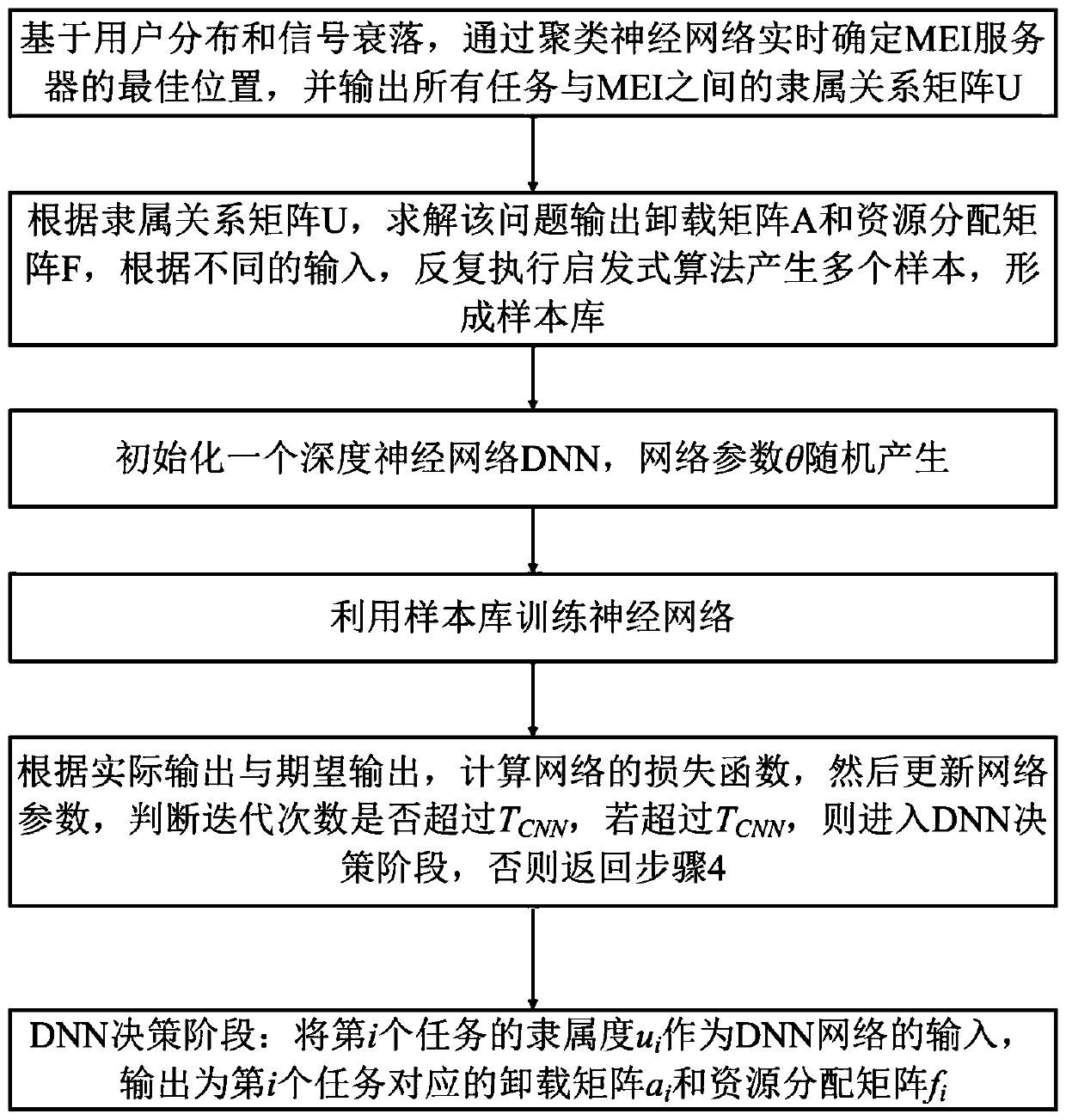

[0088] The present invention also provides an onli...
